Mac Schwager

Occlusion-Aware MPC for Guaranteed Safe Robot Navigation with Unseen Dynamic Obstacles

Nov 16, 2022

Abstract:For safe navigation in dynamic uncertain environments, robotic systems rely on the perception and prediction of other agents. Particularly, in occluded areas where cameras and LiDAR give no data, the robot must be able to reason about potential movements of invisible dynamic agents. This work presents a provably safe motion planning scheme for real-time navigation in an a priori unmapped environment, where occluded dynamic agents are present. Safety guarantees are provided based on reachability analysis. Forward reachable sets associated with potential occluded agents, such as pedestrians, are computed and incorporated into planning. An iterative optimization-based planner is presented that alternates between two optimizations: nonlinear Model Predictive Control (NMPC) and collision avoidance. Recursive feasibility of the MPC is guaranteed by introducing a terminal stopping constraint. The effectiveness of the proposed algorithm is demonstrated through simulation studies and hardware experiments with a TurtleBot robot. A video of experimental results is available at https://youtu.be/OUnkB5Feyuk.
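
As a rough illustration of the reachability idea in this abstract, the sketch below over-approximates the forward reachable set of a possible occluded pedestrian as a disc whose radius grows with time at an assumed maximum speed, then checks a planned robot trajectory against it. The disc model, the function names, and all parameters are assumptions made for illustration; they are not taken from the paper.

```python
import math

def reachable_radius(v_max, t, r0=0.0):
    """Radius of a disc that over-approximates where an agent starting
    within r0 of a point can be after t seconds at speed <= v_max."""
    return r0 + v_max * t

def trajectory_is_safe(traj, occl_point, v_max, dt, robot_radius=0.0):
    """traj: list of (x, y) robot positions at times 0, dt, 2*dt, ...
    occl_point: (x, y) where an unseen agent could be at time 0 (assumed).
    Returns False as soon as a waypoint touches the growing disc."""
    for k, (x, y) in enumerate(traj):
        dist = math.hypot(x - occl_point[0], y - occl_point[1])
        if dist <= reachable_radius(v_max, k * dt) + robot_radius:
            return False
    return True

# Hypothetical scenario: robot drives along the x-axis at 1 m/s,
# sampled every 0.5 s, past an occluded point at (2, 3).
plan = [(0.5 * k, 0.0) for k in range(5)]
short_plan_ok = trajectory_is_safe(plan[:4], (2.0, 3.0), v_max=1.5, dt=0.5)
full_plan_ok = trajectory_is_safe(plan, (2.0, 3.0), v_max=1.5, dt=0.5)
```

In this toy setup the first four waypoints stay outside the disc, while the fifth is reached at the same moment the disc does, so the full plan is rejected.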

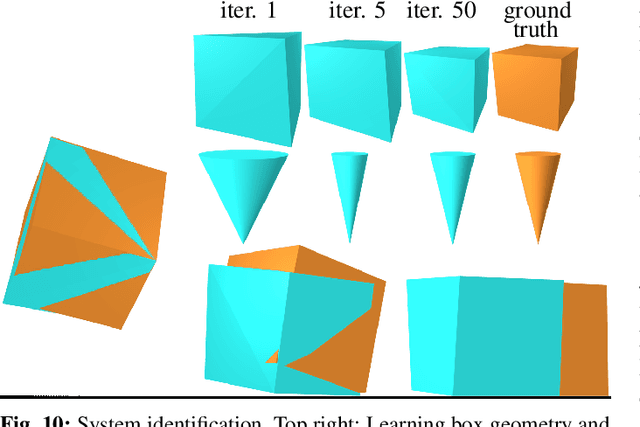

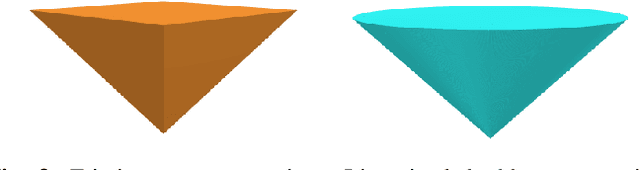

Differentiable Physics Simulation of Dynamics-Augmented Neural Objects

Oct 20, 2022

Abstract:We present a differentiable pipeline for simulating the motion of objects that represent their geometry as a continuous density field parameterized as a deep network. This includes Neural Radiance Fields (NeRFs), and other related models. From the density field, we estimate the dynamical properties of the object, including its mass, center of mass, and inertia matrix. We then introduce a differentiable contact model based on the density field for computing normal and friction forces resulting from collisions. This allows a robot to autonomously build object models that are visually and dynamically accurate from still images and videos of objects in motion. The resulting Dynamics-Augmented Neural Objects (DANOs) are simulated with an existing differentiable simulation engine, Dojo, interacting with other standard simulation objects, such as spheres, planes, and robots specified as URDFs. A robot can use this simulation to optimize grasps and manipulation trajectories of neural objects, or to improve the neural object models through gradient-based real-to-simulation transfer. We demonstrate the pipeline to learn the coefficient of friction of a bar of soap from a real video of the soap sliding on a table. We also learn the coefficient of friction and mass of a Stanford bunny through interactions with a Panda robot arm from synthetic data, and we optimize trajectories in simulation for the Panda arm to push the bunny to a goal location.
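
To make the step from a density field to dynamical properties concrete, here is a minimal sketch that computes mass, center of mass, and the inertia matrix about the center of mass from density samples on a regular grid. It assumes the samples are already mass densities and that every cell has the same volume; how a learned NeRF density is converted to mass density is not covered here, and the function name is hypothetical.

```python
def mass_properties(points, densities, cell_volume):
    """points: list of (x, y, z) sample positions.
    densities: assumed mass density at each sample.
    cell_volume: volume represented by each sample (assumed uniform).
    Returns (mass, center_of_mass, 3x3 inertia matrix about the COM)."""
    masses = [rho * cell_volume for rho in densities]
    m = sum(masses)
    com = tuple(
        sum(mi * p[a] for mi, p in zip(masses, points)) / m for a in range(3)
    )
    inertia = [[0.0] * 3 for _ in range(3)]
    for mi, p in zip(masses, points):
        r = [p[a] - com[a] for a in range(3)]
        r2 = sum(c * c for c in r)
        for a in range(3):
            for b in range(3):
                # I_ab = sum_i m_i * (|r_i|^2 * delta_ab - r_a * r_b)
                inertia[a][b] += mi * ((r2 if a == b else 0.0) - r[a] * r[b])
    return m, com, inertia

# Two unit samples on the x-axis, symmetric about the origin.
m, com, inertia = mass_properties(
    [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)], [1.0, 1.0], cell_volume=1.0
)
```

For the two-sample example, the mass is 2, the center of mass is the origin, and only rotations about the y- and z-axes see any inertia.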

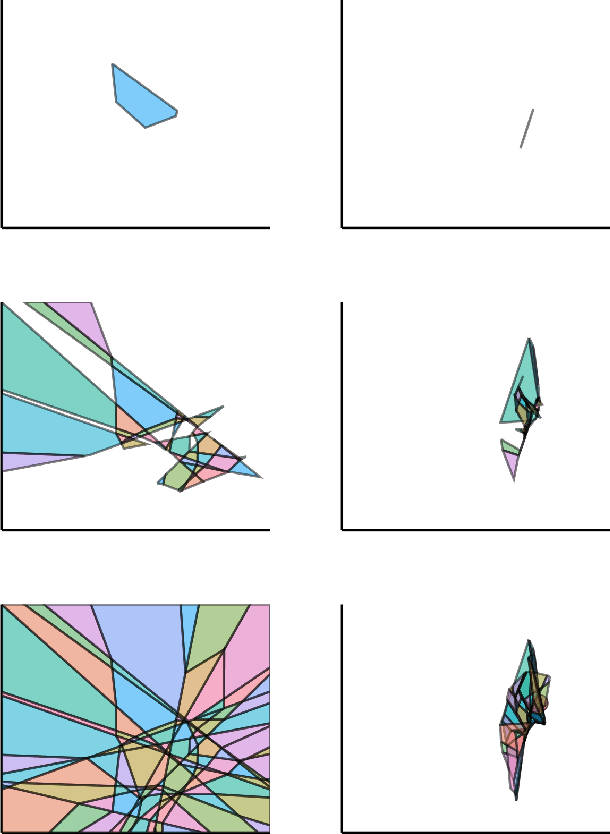

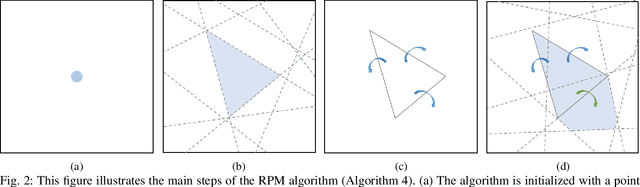

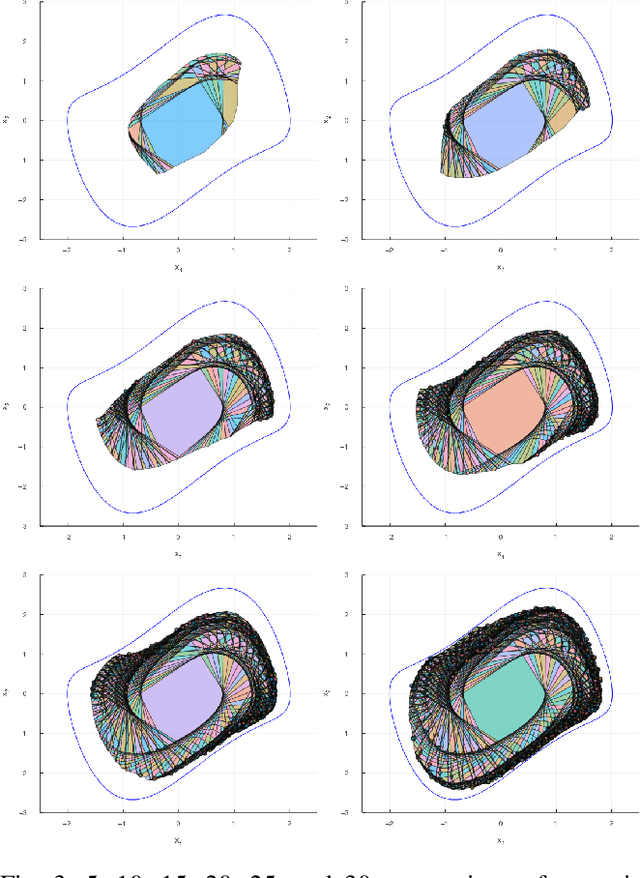

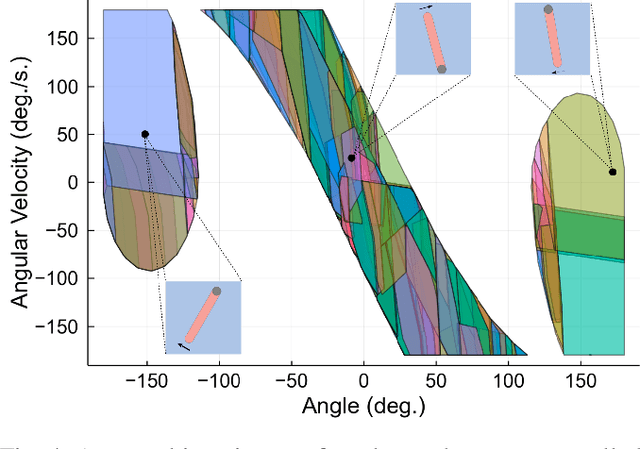

Reachable Polyhedral Marching (RPM): An Exact Analysis Tool for Deep-Learned Control Systems

Oct 15, 2022

Abstract:We present a tool for computing exact forward and backward reachable sets of deep neural networks with rectified linear unit (ReLU) activation. We then develop algorithms using this tool to compute invariant sets and regions of attraction (ROAs) for control systems with neural networks in the feedback loop. Our algorithm is unique in that it builds the reachable sets by incrementally enumerating polyhedral regions in the input space, rather than iterating layer-by-layer through the network as in other methods. When performing safety verification, if an unsafe region is found, our algorithm can return this result without completing the full reachability computation, thus giving an anytime property that accelerates safety verification. Furthermore, we introduce a method to accelerate the computation of ROAs in the case that deep learned components are homeomorphisms, which we find is surprisingly common in practice. We demonstrate our tool in several test cases. We compute a ROA for a learned van der Pol oscillator model. We find a control invariant set for a learned torque-controlled pendulum model. We also verify specific safety properties for multiple deep networks related to the ACAS Xu aircraft collision advisory system. Finally, we apply our algorithm to find ROAs for an image-based aircraft runway taxi problem. Algorithm source code: https://github.com/StanfordMSL/Neural-Network-Reach .
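
The polyhedral regions mentioned in this abstract exist because a ReLU network is piecewise affine: within the set of inputs that share one activation pattern, the network reduces to a single affine map. The sketch below shows this for a one-hidden-layer network. It is not the RPM algorithm, and the helper names and weights are made up for illustration.

```python
def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu_net(x, W1, b1, W2, b2):
    """y = W2 * relu(W1 x + b1) + b2."""
    h = [max(0.0, z + b) for z, b in zip(matvec(W1, x), b1)]
    return [y + b for y, b in zip(matvec(W2, h), b2)]

def local_affine_map(x, W1, b1, W2, b2):
    """Return (C, d) such that the network equals C x' + d for every
    input x' with the same hidden-unit activation pattern as x."""
    pre = [z + b for z, b in zip(matvec(W1, x), b1)]
    active = [1.0 if p > 0 else 0.0 for p in pre]
    n_hidden, n_in = len(active), len(x)
    # C = W2 diag(active) W1,  d = W2 diag(active) b1 + b2
    C = [[sum(W2[i][k] * active[k] * W1[k][j] for k in range(n_hidden))
          for j in range(n_in)] for i in range(len(W2))]
    d = [sum(W2[i][k] * active[k] * b1[k] for k in range(n_hidden)) + b2[i]
         for i in range(len(W2))]
    return C, d

# Hypothetical weights: 2 inputs, 3 hidden units, 1 output.
W1, b1 = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]], [0.0, 0.0, 1.0]
W2, b2 = [[1.0, 2.0, 3.0]], [0.5]
x = [1.0, 2.0]
C, d = local_affine_map(x, W1, b1, W2, b2)
y_affine = [v + di for v, di in zip(matvec(C, x), d)]
y_net = relu_net(x, W1, b1, W2, b2)
```

Evaluating the affine map and the network at the same point gives the same output, and a point with a different activation pattern yields a different pair `(C, d)`.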

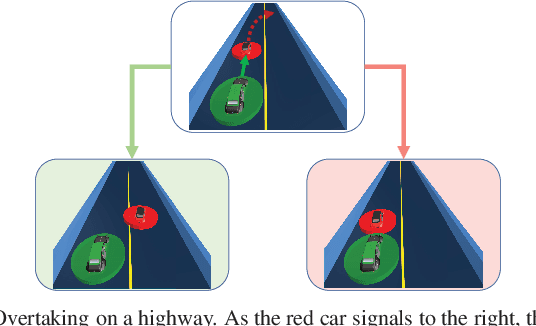

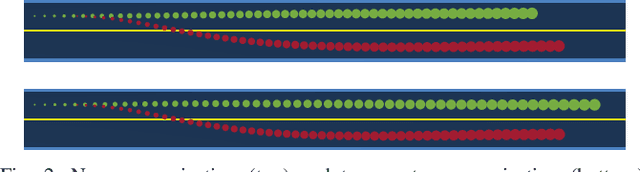

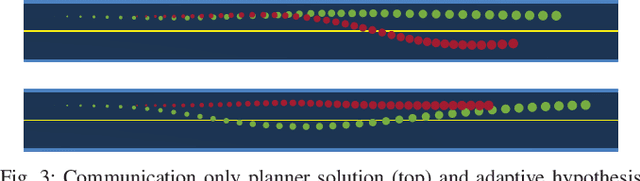

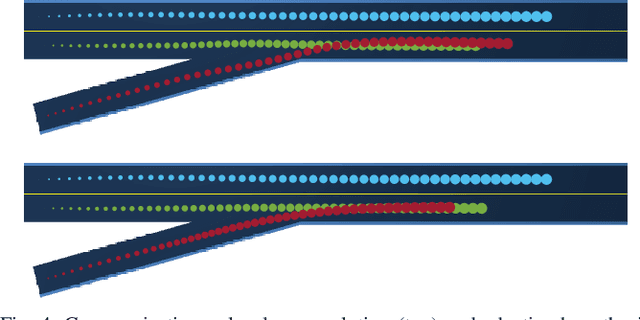

Intention Communication and Hypothesis Likelihood in Game-Theoretic Motion Planning

Sep 26, 2022

Abstract:Game-theoretic motion planners are a potent solution for controlling systems of multiple highly interactive robots. Most existing game-theoretic planners unrealistically assume a priori objective function knowledge is available to all agents. To address this, we propose a fault-tolerant receding horizon game-theoretic motion planner that leverages inter-agent communication with intention hypothesis likelihood. Specifically, robots communicate their objective function incorporating their intentions. A discrete Bayesian filter is designed to infer the objectives in real-time based on the discrepancy between observed trajectories and the ones from communicated intentions. In simulation, we consider three safety-critical autonomous driving scenarios of overtaking, lane-merging and intersection crossing, to demonstrate our planner's ability to capitalize on alternative intention hypotheses to generate safe trajectories in the presence of faulty transmissions in the communication network.
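
A discrete Bayesian filter over intention hypotheses can be sketched in a few lines. In the version below, each hypothesis predicts a position for the other agent, and the belief is reweighted by a Gaussian likelihood of the discrepancy between the observed and predicted positions. The Gaussian likelihood, the `sigma` parameter, and the hypothesis names are assumptions for illustration, not the paper's exact filter.

```python
import math

def bayes_update(prior, observed, predicted, sigma=0.5):
    """prior: dict mapping hypothesis name -> probability.
    observed: (x, y) position actually seen.
    predicted: dict mapping hypothesis name -> (x, y) predicted position.
    Returns the normalized posterior over hypotheses."""
    unnorm = {}
    for h, p in prior.items():
        dx = observed[0] - predicted[h][0]
        dy = observed[1] - predicted[h][1]
        # Assumed Gaussian likelihood of the prediction error.
        unnorm[h] = p * math.exp(-(dx * dx + dy * dy) / (2.0 * sigma ** 2))
    z = sum(unnorm.values())
    if z == 0.0:
        return dict(prior)  # all likelihoods underflowed; keep the prior
    return {h: v / z for h, v in unnorm.items()}

# The communicated intention predicts (0, 0), but the agent is seen at (1, 0),
# which matches an alternative hypothesis.
belief = bayes_update(
    {"communicated": 0.5, "alternative": 0.5},
    observed=(1.0, 0.0),
    predicted={"communicated": (0.0, 0.0), "alternative": (1.0, 0.0)},
)
```

After one update the belief shifts toward the alternative hypothesis, which is the kind of signal a planner could use when a transmitted intention appears faulty.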

NeRF-Loc: Transformer-Based Object Localization Within Neural Radiance Fields

Sep 24, 2022

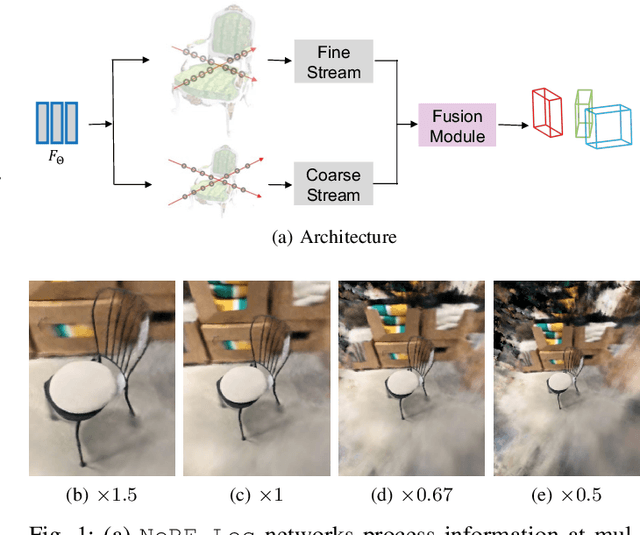

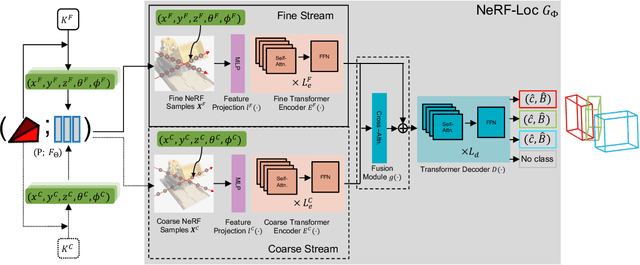

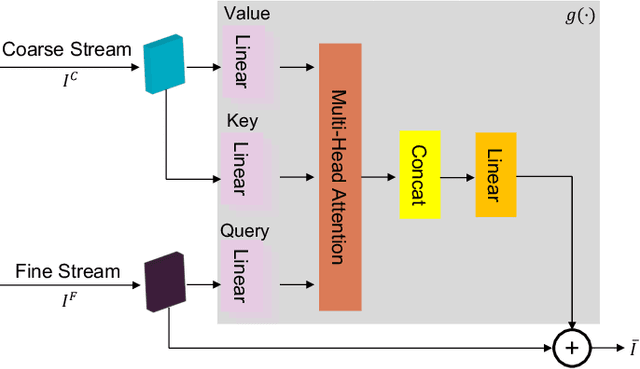

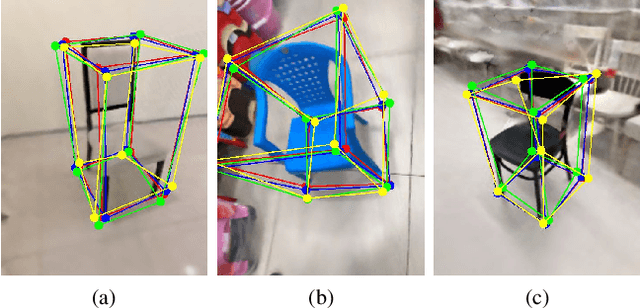

Abstract:Neural Radiance Fields (NeRFs) have been successfully used for scene representation. Recent works have also developed robotic navigation and manipulation systems using NeRF-based environment representations. As object localization is the foundation for many robotic applications, to further unleash the potential of NeRFs in robotic systems, we study object localization within a NeRF scene. We propose a transformer-based framework NeRF-Loc to extract 3D bounding boxes of objects in NeRF scenes. NeRF-Loc takes a pre-trained NeRF model and camera view as input, and produces labeled 3D bounding boxes of objects as output. Concretely, we design a pair of paralleled transformer encoder branches, namely the coarse stream and the fine stream, to encode both the context and details of target objects. The encoded features are then fused together with attention layers to alleviate ambiguities for accurate object localization. We have compared our method with the conventional transformer-based method and our method achieves better performance. In addition, we also present the first NeRF samples-based object localization benchmark NeRFLocBench.

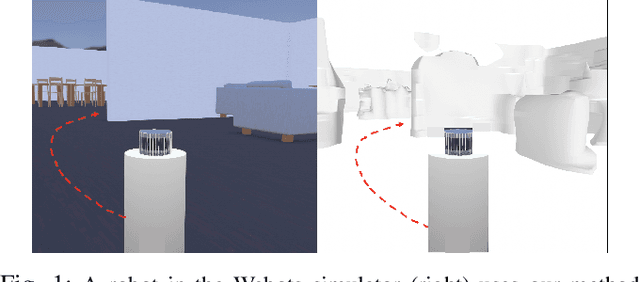

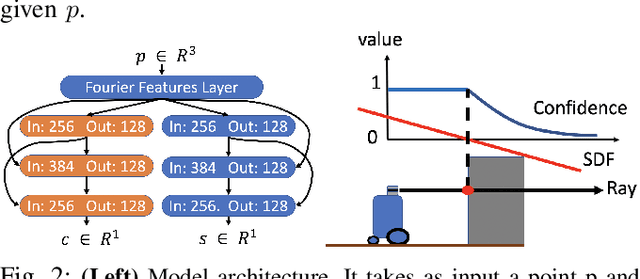

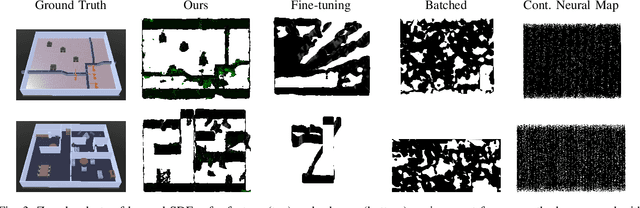

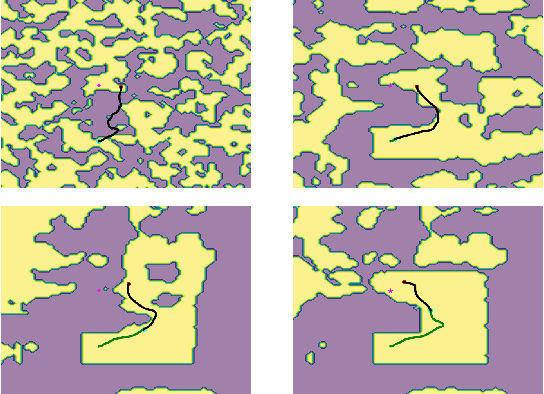

Learning Deep SDF Maps Online for Robot Navigation and Exploration

Aug 02, 2022

Abstract:We propose an algorithm to (i) learn online a deep signed distance function (SDF) with a LiDAR-equipped robot to represent the 3D environment geometry, and (ii) plan collision-free trajectories given this deep learned map. Our algorithm takes a stream of incoming LiDAR scans and continually optimizes a neural network to represent the SDF of the environment around its current vicinity. When the SDF network quality saturates, we cache a copy of the network, along with a learned confidence metric, and initialize a new SDF network to continue mapping new regions of the environment. We then concatenate all the cached local SDFs through a confidence-weighted scheme to give a global SDF for planning. For planning, we make use of a sequential convex model predictive control (MPC) algorithm. The MPC planner optimizes a dynamically feasible trajectory for the robot while enforcing no collisions with obstacles mapped in the global SDF. We show that our online mapping algorithm produces higher-quality maps than existing methods for online SDF training. In the WeBots simulator, we further showcase the combined mapper and planner running online -- navigating autonomously and without collisions in an unknown environment.

Game-Theoretic Planning for Autonomous Driving among Risk-Aware Human Drivers

May 01, 2022

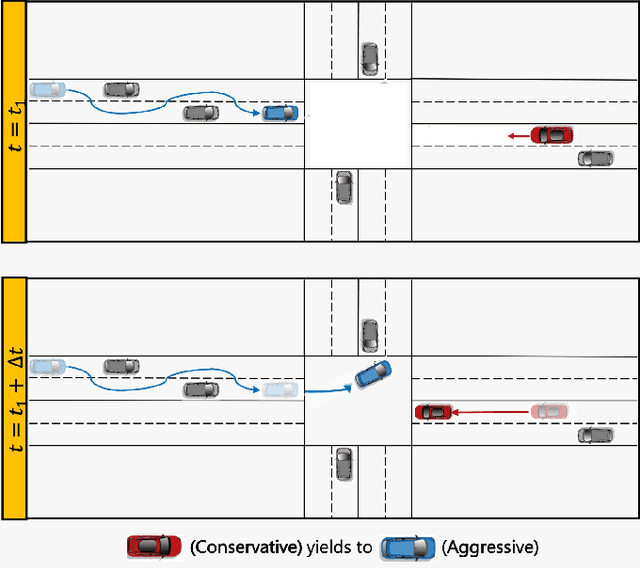

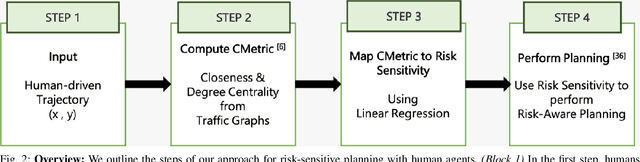

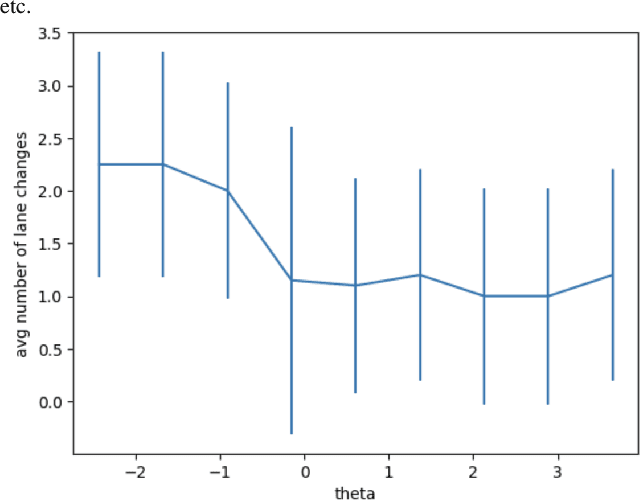

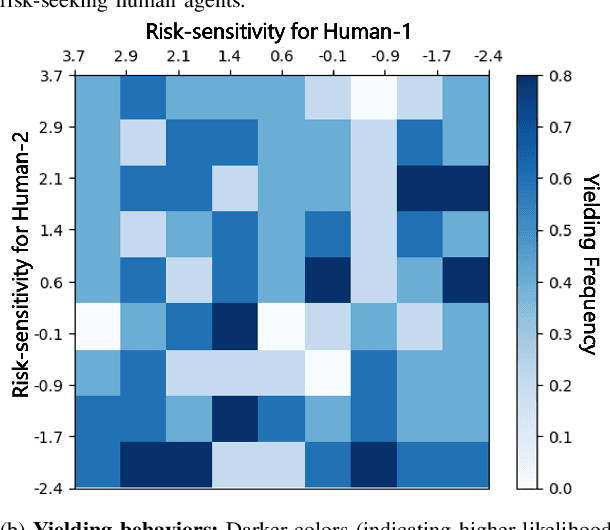

Abstract:We present a novel approach for risk-aware planning with human agents in multi-agent traffic scenarios. Our approach takes into account the wide range of human driver behaviors on the road, from aggressive maneuvers like speeding and overtaking, to conservative traits like driving slowly and conforming to the right-most lane. In our approach, we learn a mapping from a data-driven human driver behavior model called the CMetric to a driver's entropic risk preference. We then use the derived risk preference within a game-theoretic risk-sensitive planner to model risk-aware interactions among human drivers and an autonomous vehicle in various traffic scenarios. We demonstrate our method in a merging scenario, where our results show that the final trajectories obtained from the risk-aware planner generate desirable emergent behaviors. Particularly, our planner recognizes aggressive human drivers and yields to them while maintaining a greater distance from them. In a user study, participants were able to distinguish between aggressive and conservative simulated drivers based on trajectories generated from our risk-sensitive planner. We also observe that aggressive human driving results in more frequent lane-changing in the planner. Finally, we compare the performance of our modified risk-aware planner with existing methods and show that modeling human driver behavior leads to safer navigation.
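
For readers unfamiliar with entropic risk, the standard definition for a random cost $X$ with risk parameter $\theta$ is $R_\theta(X) = \frac{1}{\theta}\log \mathbb{E}[e^{\theta X}]$, which tends to the expected cost as $\theta \to 0$. The sketch below evaluates it for a discrete cost distribution. The function name and the example numbers are illustrative only, and how the paper maps CMetric to $\theta$ is not reproduced here.

```python
import math

def entropic_risk(costs, probs, theta):
    """Entropic risk (1/theta) * log E[exp(theta * cost)] of a discrete
    cost distribution; theta == 0 returns the plain expectation."""
    if theta == 0.0:
        return sum(p * c for p, c in zip(probs, costs))
    # Shift by the largest exponent (log-sum-exp) for numerical stability.
    shift = max(theta * c for c in costs)
    s = sum(p * math.exp(theta * c - shift) for p, c in zip(probs, costs))
    return (shift + math.log(s)) / theta

costs, probs = [0.0, 10.0], [0.5, 0.5]
neutral = entropic_risk(costs, probs, 0.0)    # expected cost
averse = entropic_risk(costs, probs, 1.0)     # weights the bad outcome more
seeking = entropic_risk(costs, probs, -1.0)   # weights the good outcome more
```

With costs, a positive `theta` pushes the value above the mean (risk-averse) and a negative `theta` pushes it below (risk-seeking), which is how a single scalar can encode a conservative or aggressive driver.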

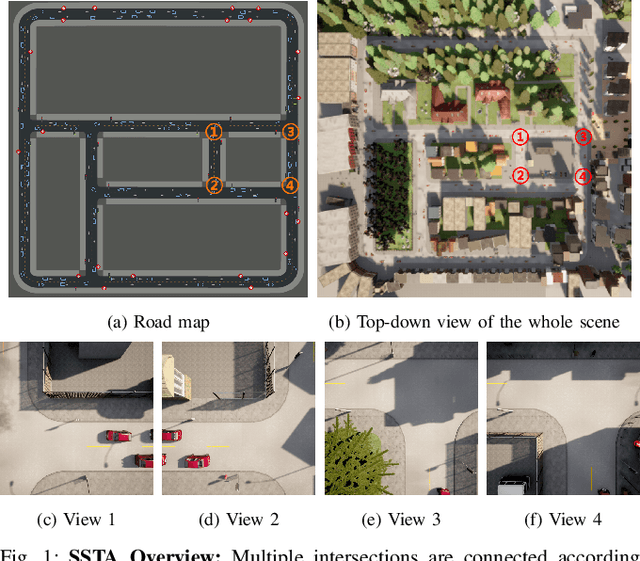

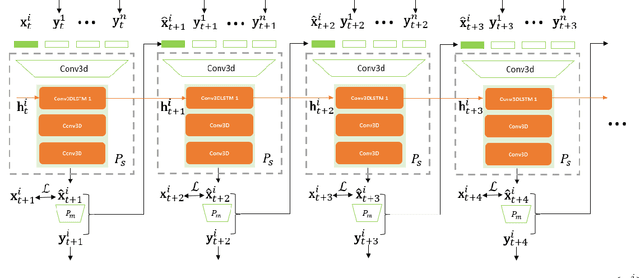

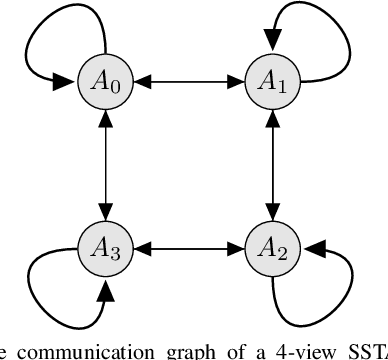

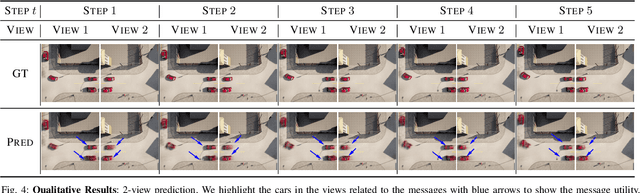

Self-Supervised Traffic Advisors: Distributed, Multi-view Traffic Prediction for Smart Cities

Apr 13, 2022

Abstract:Connected and Autonomous Vehicles (CAVs) are becoming more widely deployed, but it is unclear how to best deploy smart infrastructure to maximize their capabilities. One key challenge is to ensure CAVs can reliably perceive other agents, especially occluded ones. A further challenge is the desire for smart infrastructure to be autonomous and readily scalable to wide-area deployments, similar to modern traffic lights. The present work proposes the Self-Supervised Traffic Advisor (SSTA), an infrastructure edge device concept that leverages self-supervised video prediction in concert with a communication and co-training framework to enable autonomously predicting traffic throughout a smart city. An SSTA is a statically-mounted camera that overlooks an intersection or area of complex traffic flow that predicts traffic flow as future video frames and learns to communicate with neighboring SSTAs to enable predicting traffic before it appears in the Field of View (FOV). The proposed framework aims at three goals: (1) inter-device communication to enable high-quality predictions, (2) scalability to an arbitrary number of devices, and (3) lifelong online learning to ensure adaptability to changing circumstances. Finally, an SSTA can broadcast its future predicted video frames directly as information for CAVs to run their own post-processing for the purpose of control.

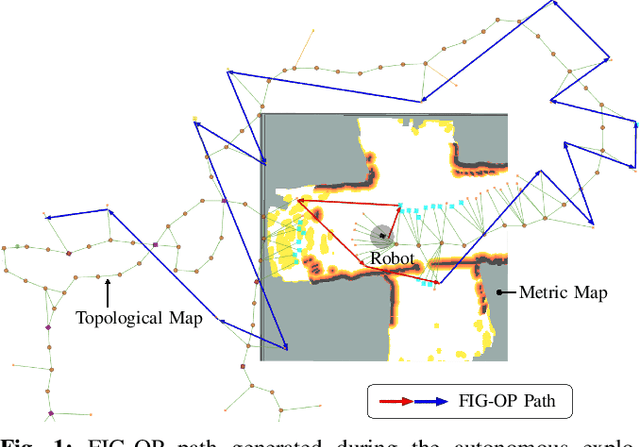

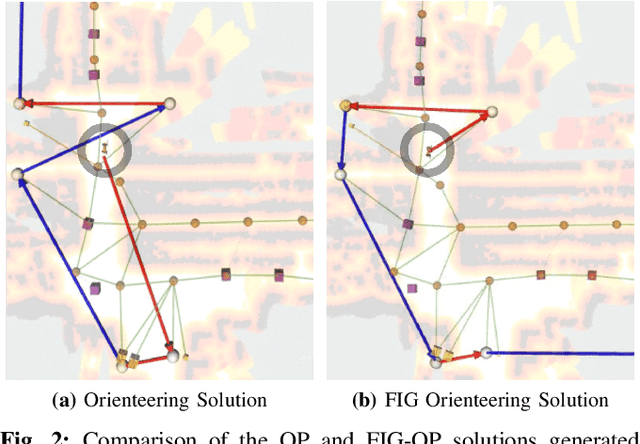

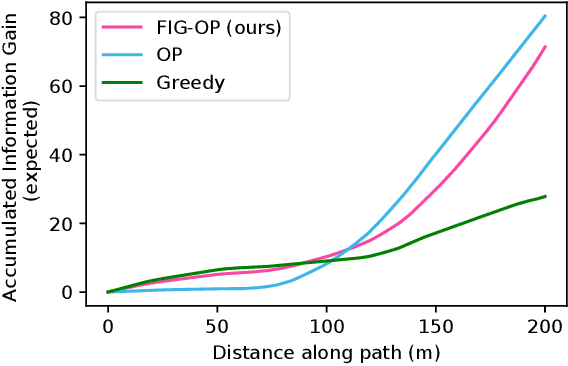

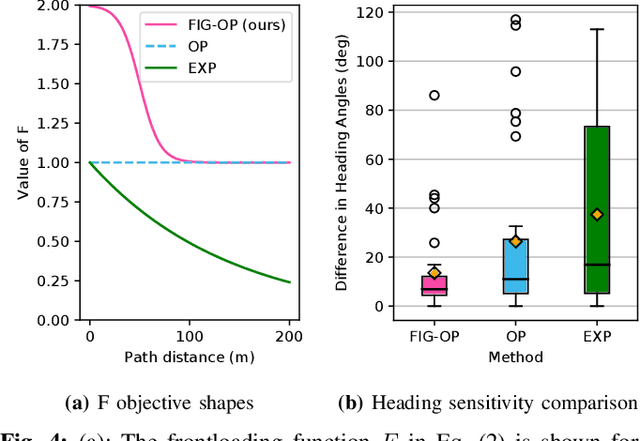

FIG-OP: Exploring Large-Scale Unknown Environments on a Fixed Time Budget

Mar 12, 2022

Abstract:We present a method for autonomous exploration of large-scale unknown environments under mission time constraints. We start by proposing the Frontloaded Information Gain Orienteering Problem (FIG-OP) -- a generalization of the traditional orienteering problem where the assumption of a reliable environmental model no longer holds. The FIG-OP addresses model uncertainty by frontloading expected information gain through the addition of a greedy incentive, effectively expediting the moment in which new area is uncovered. In order to reason across multi-kilometre environments, we solve FIG-OP over an information-efficient world representation, constructed through the aggregation of information from a topological and metric map. Our method was extensively tested and field-hardened across various complex environments, ranging from subway systems to mines. In comparative simulations, we observe that the FIG-OP solution exhibits improved coverage efficiency over solutions generated by greedy and traditional orienteering-based approaches (i.e. severe and minimal model uncertainty assumptions, respectively).

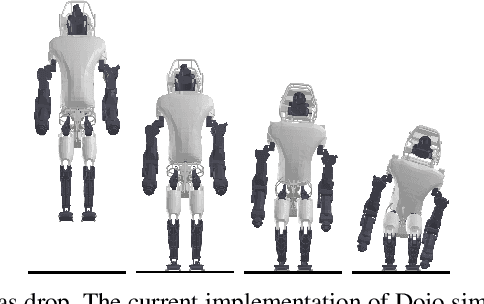

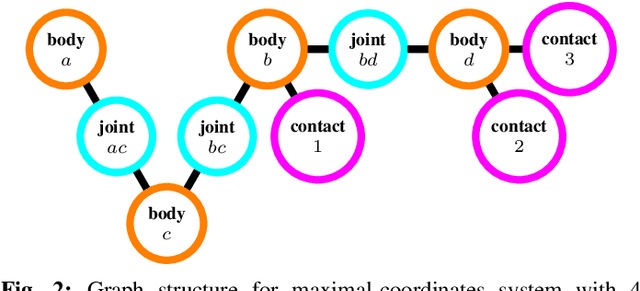

Dojo: A Differentiable Simulator for Robotics

Mar 03, 2022

Abstract:We present a differentiable rigid-body-dynamics simulator for robotics that prioritizes physical accuracy and differentiability: Dojo. The simulator utilizes an expressive maximal-coordinates representation, achieves stable simulation at low sample rates, and conserves energy and momentum by employing a variational integrator. A nonlinear complementarity problem, with nonlinear friction cones, models hard contact and is reliably solved using a custom primal-dual interior-point method. The implicit-function theorem enables efficient differentiation of an intermediate relaxed problem and computes smooth gradients from the contact model. We demonstrate the usefulness of the simulator and its gradients through a number of examples including: simulation, trajectory optimization, reinforcement learning, and system identification.
