Preston Culbertson

SCOPED: Score-Curvature Out-of-distribution Proximity Evaluator for Diffusion

Oct 01, 2025

Abstract:Out-of-distribution (OOD) detection is essential for reliable deployment of machine learning systems in vision, robotics, reinforcement learning, and beyond. We introduce Score-Curvature Out-of-distribution Proximity Evaluator for Diffusion (SCOPED), a fast and general-purpose OOD detection method for diffusion models that reduces the number of forward passes on the trained model by an order of magnitude compared to prior methods, outperforming most diffusion-based baselines and closely approaching the accuracy of the strongest ones. SCOPED is computed from a single diffusion model trained once on a diverse dataset, and combines the Jacobian trace and squared norm of the model's score function into a single test statistic. Rather than thresholding on a fixed value, we estimate the in-distribution density of SCOPED scores using kernel density estimation, enabling a flexible, unsupervised test that, in the simplest case, only requires a single forward pass and one Jacobian-vector product (JVP), made efficient by Hutchinson's trace estimator. On four vision benchmarks, SCOPED achieves competitive or state-of-the-art precision-recall scores despite its low computational cost. The same method generalizes to robotic control tasks with shared state and action spaces, identifying distribution shifts across reward functions and training regimes. These results position SCOPED as a practical foundation for fast and reliable OOD detection in real-world domains, including perceptual artifacts in vision, outlier detection in autoregressive models, exploration in reinforcement learning, and dataset curation for unsupervised training.
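The ingredients named in the SCOPED abstract (a Hutchinson estimate of the score Jacobian's trace, the squared score norm, and a kernel density estimate over the resulting statistic) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration rather than the authors' implementation: the score is the analytic score of a standard Gaussian instead of a trained diffusion model, the JVP is approximated by central finite differences instead of automatic differentiation, the unweighted sum in `toy_statistic` is a placeholder for however the paper combines the two terms, and the function names and bandwidth are hypothetical.

```python
import numpy as np

def hutchinson_trace(score_fn, x, num_probes=1, eps=1e-4, rng=None):
    """Estimate tr(d score / d x) at x with Rademacher probe vectors."""
    rng = np.random.default_rng(0) if rng is None else rng
    estimates = []
    for _ in range(num_probes):
        v = rng.choice([-1.0, 1.0], size=x.shape)
        # Assumption: finite-difference stand-in for an autodiff JVP.
        jvp = (score_fn(x + eps * v) - score_fn(x - eps * v)) / (2.0 * eps)
        estimates.append(float(v @ jvp))
    return float(np.mean(estimates))

def toy_statistic(score_fn, x, **kwargs):
    """Placeholder combination: Jacobian trace plus squared score norm."""
    s = score_fn(x)
    return hutchinson_trace(score_fn, x, **kwargs) + float(s @ s)

def kde_log_density(train_stats, query, bandwidth):
    """Log of a 1-D Gaussian kernel density estimate, evaluated at `query`."""
    log_k = -0.5 * ((query - train_stats) / bandwidth) ** 2
    m = log_k.max()  # subtract the max before exponentiating to avoid underflow
    log_mean = m + np.log(np.mean(np.exp(log_k - m)))
    return float(log_mean - np.log(bandwidth * np.sqrt(2.0 * np.pi)))

# Toy usage: the score of a standard Gaussian is -x (stand-in for a trained model).
gaussian_score = lambda x: -x
rng = np.random.default_rng(1)
in_dist = rng.normal(size=(200, 4))
train_stats = np.array([toy_statistic(gaussian_score, x) for x in in_dist])

far_point = np.full(4, 6.0)  # a sample far from the training data
log_p_in = kde_log_density(train_stats, toy_statistic(gaussian_score, in_dist[0]), 0.5)
log_p_far = kde_log_density(train_stats, toy_statistic(gaussian_score, far_point), 0.5)
print(log_p_in, log_p_far)
```

A sample would be flagged as OOD when its statistic falls in a low-density region of the in-distribution estimate; how that cutoff is chosen is left open here.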

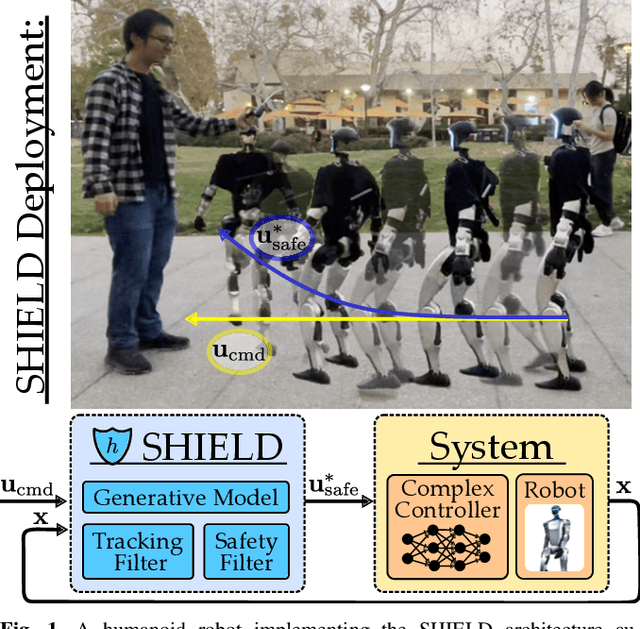

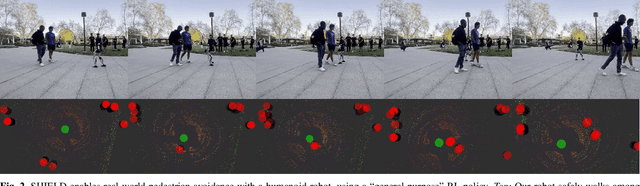

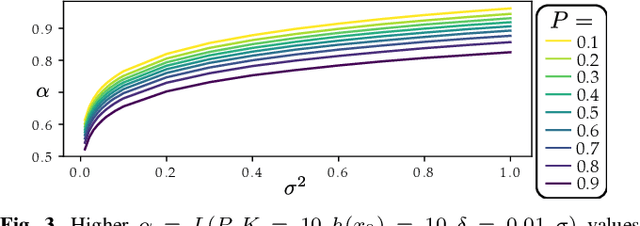

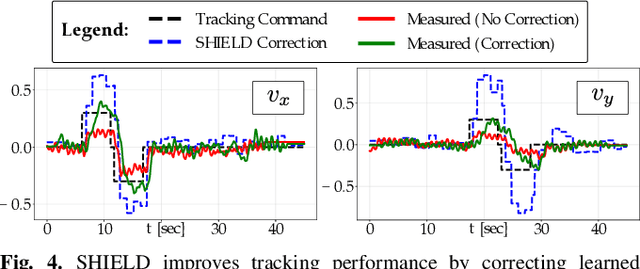

SHIELD: Safety on Humanoids via CBFs In Expectation on Learned Dynamics

May 16, 2025

Abstract:Robot learning has produced remarkably effective "black-box" controllers for complex tasks such as dynamic locomotion on humanoids. Yet ensuring dynamic safety, i.e., constraint satisfaction, remains challenging for such policies. Reinforcement learning (RL) embeds constraints heuristically through reward engineering, and adding or modifying constraints requires retraining. Model-based approaches, like control barrier functions (CBFs), enable runtime constraint specification with formal guarantees but require accurate dynamics models. This paper presents SHIELD, a layered safety framework that bridges this gap by: (1) training a generative, stochastic dynamics residual model using real-world data from hardware rollouts of the nominal controller, capturing system behavior and uncertainties; and (2) adding a safety layer on top of the nominal (learned locomotion) controller that leverages this model via a stochastic discrete-time CBF formulation enforcing safety constraints in probability. The result is a minimally-invasive safety layer that can be added to the existing autonomy stack to give probabilistic guarantees of safety that balance risk and performance. In hardware experiments on a Unitree G1 humanoid, SHIELD enables safe navigation (obstacle avoidance) through varied indoor and outdoor environments using a nominal (unknown) RL controller and onboard perception.

Get a Grip: Multi-Finger Grasp Evaluation at Scale Enables Robust Sim-to-Real Transfer

Oct 31, 2024

Abstract:This work explores conditions under which multi-finger grasping algorithms can attain robust sim-to-real transfer. While numerous large datasets facilitate learning generative models for multi-finger grasping at scale, reliable real-world dexterous grasping remains challenging, with most methods degrading when deployed on hardware. An alternate strategy is to use discriminative grasp evaluation models for grasp selection and refinement, conditioned on real-world sensor measurements. This paradigm has produced state-of-the-art results for vision-based parallel-jaw grasping, but remains unproven in the multi-finger setting. In this work, we find that existing datasets and methods have been insufficient for training discriminative models for multi-finger grasping. To train grasp evaluators at scale, datasets must provide on the order of millions of grasps, including both positive and negative examples, with corresponding visual data resembling measurements at inference time. To that end, we release a new, open-source dataset of 3.5M grasps on 4.3K objects annotated with RGB images, point clouds, and trained NeRFs. Leveraging this dataset, we train vision-based grasp evaluators that outperform both analytic and generative modeling-based baselines on extensive simulated and real-world trials across a diverse range of objects. We show via numerous ablations that the key factor for performance is indeed the evaluator, and that its quality degrades as the dataset shrinks, demonstrating the importance of our new dataset. Project website at: https://sites.google.com/view/get-a-grip-dataset.

DROP: Dexterous Reorientation via Online Planning

Sep 22, 2024

Abstract:Achieving human-like dexterity is a longstanding challenge in robotics, in part due to the complexity of planning and control for contact-rich systems. In reinforcement learning (RL), one popular approach has been to use massively-parallelized, domain-randomized simulations to learn a policy offline over a vast array of contact conditions, allowing robust sim-to-real transfer. Inspired by recent advances in real-time parallel simulation, this work considers instead the viability of online planning methods for contact-rich manipulation by studying the well-known in-hand cube reorientation task. We propose a simple architecture that employs a sampling-based predictive controller and vision-based pose estimator to search for contact-rich control actions online. We conduct thorough experiments to assess the real-world performance of our method, architectural design choices, and key factors for robustness, demonstrating that our simple sampling-based approach achieves performance comparable to prior RL-based works. Supplemental material: https://caltech-amber.github.io/drop.
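For readers unfamiliar with sampling-based predictive control, the general idea can be sketched as follows: perturb a nominal action sequence with noise, roll each candidate out through a model, and keep the lowest-cost plan. This is a generic illustration, not the DROP controller. The double-integrator dynamics, the quadratic cost, the function names, and all parameter values are hypothetical stand-ins for the contact-rich simulation the abstract refers to.

```python
import numpy as np

def step(x, u, dt=0.1):
    """Toy double integrator: state is [position, velocity], action is acceleration."""
    return np.array([x[0] + dt * x[1], x[1] + dt * u])

def cost(x, u):
    """Toy running cost: drive the position to zero with a small effort penalty."""
    return float(x[0] ** 2 + 0.01 * u ** 2)

def predictive_sampling(x0, nominal, step_fn, cost_fn,
                        num_samples=64, noise_std=0.5, rng=None):
    """Return the lowest-cost action sequence among noisy copies of `nominal`."""
    rng = np.random.default_rng(0) if rng is None else rng
    noise = rng.normal(0.0, noise_std, size=(num_samples,) + nominal.shape)
    noise[0] = 0.0  # keep the unperturbed plan so the result is never worse than it
    candidates = nominal + noise
    costs = np.empty(num_samples)
    for i, plan in enumerate(candidates):
        x, total = np.array(x0, dtype=float), 0.0
        for u in plan:
            x = step_fn(x, u)
            total += cost_fn(x, u)
        costs[i] = total
    best = int(np.argmin(costs))
    return candidates[best], float(costs[best])

# Toy usage: start one unit from the origin with a zero nominal plan.
plan, plan_cost = predictive_sampling(np.array([1.0, 0.0]), np.zeros(10), step, cost)
print(plan_cost)
```

In a receding-horizon loop, only the first action of the returned plan would be applied before re-planning from the next state estimate, with the remaining actions reused as the next nominal plan.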

Toward An Analytic Theory of Intrinsic Robustness for Dexterous Grasping

Mar 12, 2024

Abstract:Conventional approaches to grasp planning require perfect knowledge of an object's pose and geometry. Uncertainties in these quantities induce uncertainties in the quality of planned grasps, which can lead to failure. Classically, grasp robustness refers to the ability to resist external disturbances after grasping an object. In contrast, this work studies robustness to intrinsic sources of uncertainty like object pose or geometry affecting grasp planning before execution. To do so, we develop a novel analytic theory of grasping that reasons about this intrinsic robustness by characterizing the effect of friction cone uncertainty on a grasp's force closure status. As a result, we show the Ferrari-Canny metric -- which measures the size of external disturbances a grasp can reject -- bounds the friction cone uncertainty a grasp can tolerate, and thus also measures intrinsic robustness. In tandem, we show that the recently proposed min-weight metric lower bounds the Ferrari-Canny metric, justifying it as a computationally-efficient, uncertainty-aware alternative. We validate this theory on hardware experiments versus a competitive baseline and demonstrate superior performance. Finally, we use our theory to develop an analytic notion of probabilistic force closure, which we show in simulation generates grasps that can incorporate uncertainty distributions over an object's geometry.

PONG: Probabilistic Object Normals for Grasping via Analytic Bounds on Force Closure Probability

Sep 29, 2023

Abstract:Classical approaches to grasp planning are deterministic, requiring perfect knowledge of an object's pose and geometry. In response, data-driven approaches have emerged that plan grasps entirely from sensory data. While these data-driven methods have excelled in generating parallel-jaw and power grasps, their application to precision grasps (those using the fingertips of a dexterous hand, e.g., for tool use) remains limited. Precision grasping poses a unique challenge due to its sensitivity to object geometry, which allows small uncertainties in the object's shape and pose to cause an otherwise robust grasp to fail. In response to these challenges, we introduce Probabilistic Object Normals for Grasping (PONG), a novel, analytic approach for calculating a conservative estimate of force closure probability in the case when contact locations are known but surface normals are uncertain. We then present a practical application where we use PONG as a grasp metric for generating robust grasps both in simulation and real-world hardware experiments. Our results demonstrate that maximizing PONG efficiently produces robust grasps, even for challenging object geometries, and that it can serve as a well-calibrated, uncertainty-aware metric of grasp quality.

Input-to-State Stability in Probability

Apr 28, 2023

Abstract:Input-to-State Stability (ISS) is fundamental in mathematically quantifying how stability degrades in the presence of bounded disturbances. If a system is ISS, its trajectories will remain bounded, and will converge to a neighborhood of an equilibrium of the undisturbed system. This graceful degradation of stability in the presence of disturbances describes a variety of real-world control implementations. Despite its utility, this property requires the disturbance to be bounded and provides invariance and stability guarantees only with respect to this worst-case bound. In this work, we introduce the concept of "ISS in probability (ISSp)" which generalizes ISS to discrete-time systems subject to unbounded stochastic disturbances. Using tools from martingale theory, we provide Lyapunov conditions for a system to be exponentially ISSp, and connect ISSp to stochastic stability conditions found in literature. We exemplify the utility of this method through its application to a bipedal robot confronted with step heights sampled from a truncated Gaussian distribution.

FRoGGeR: Fast Robust Grasp Generation via the Min-Weight Metric

Feb 27, 2023

Abstract:Many approaches to grasp synthesis optimize analytic quality metrics that measure grasp robustness based on finger placements and local surface geometry. However, generating feasible dexterous grasps by optimizing these metrics is slow, often taking minutes. To address this issue, this paper presents FRoGGeR: a method that quickly generates robust precision grasps using the min-weight metric, a novel, almost-everywhere differentiable approximation of the classical epsilon grasp metric. The min-weight metric is simple and interpretable, provides a reasonable measure of grasp robustness, and admits numerically efficient gradients for smooth optimization. We leverage these properties to rapidly synthesize collision-free robust grasps - typically in less than a second. FRoGGeR can refine the candidate grasps generated by other methods (heuristic, data-driven, etc.) and is compatible with many object representations (SDFs, meshes, etc.). We study FRoGGeR's performance on over 40 objects drawn from the YCB dataset, outperforming a competitive baseline in computation time, feasibility rate of grasp synthesis, and picking success in simulation. We conclude that FRoGGeR is fast: it has a median synthesis time of 0.834s over hundreds of experiments.

CATNIPS: Collision Avoidance Through Neural Implicit Probabilistic Scenes

Feb 24, 2023

Abstract:We formalize a novel interpretation of Neural Radiance Fields (NeRFs) as giving rise to a Poisson Point Process (PPP). This PPP interpretation allows for rigorous quantification of uncertainty in NeRFs, in particular, for computing collision probabilities for a robot navigating through a NeRF environment model. The PPP is a generalization of a probabilistic occupancy grid to the continuous volume and is fundamental to the volumetric ray-tracing model underlying radiance fields. Building upon this PPP model, we present a chance-constrained trajectory optimization method for safe robot navigation in NeRFs. Our method relies on a voxel representation called the Probabilistic Unsafe Robot Region (PURR) that spatially fuses the chance constraint with the NeRF model to facilitate fast trajectory optimization. We then combine a graph-based search with a spline-based trajectory optimization to yield robot trajectories through the NeRF that are guaranteed to satisfy a user-specific collision probability. We validate our chance constrained planning method through simulations, showing superior performance compared with two other methods for trajectory planning in NeRF environment models.
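A standard property of Poisson point processes helps explain why this interpretation yields collision probabilities: the number of points in a region is Poisson-distributed with mean equal to the integral of the intensity over that region, so the probability of at least one point is one minus the exponential of the negative integral. The sketch below applies that identity on a voxel grid. Treating a per-voxel density array as the PPP intensity, the Riemann-sum integration, and the function and parameter names are assumptions made for illustration, not the CATNIPS implementation or its PURR construction.

```python
import numpy as np

def collision_probability(intensity, voxel_volume, robot_mask):
    """P(at least one PPP point inside the voxels covered by the robot).

    intensity    : array of per-voxel PPP intensity (assumed given)
    voxel_volume : volume of one voxel, in the same length units
    robot_mask   : boolean array, True where the robot body overlaps a voxel
    """
    # Riemann-sum approximation of the integral of the intensity over the body.
    expected_points = float(np.sum(intensity[robot_mask]) * voxel_volume)
    # 1 - exp(-Lambda), computed with expm1 for accuracy when Lambda is small.
    return float(-np.expm1(-expected_points))

# Toy usage: uniform intensity, robot covering four voxels of a 4x4x4 grid.
intensity = np.full((4, 4, 4), 2.0)
robot_mask = np.zeros((4, 4, 4), dtype=bool)
robot_mask[0, 0, :] = True
print(collision_probability(intensity, 0.125, robot_mask))
```

A chance constraint of the kind the abstract describes would then require this probability to stay below a chosen bound along the trajectory.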

Vision-Only Robot Navigation in a Neural Radiance World

Oct 01, 2021

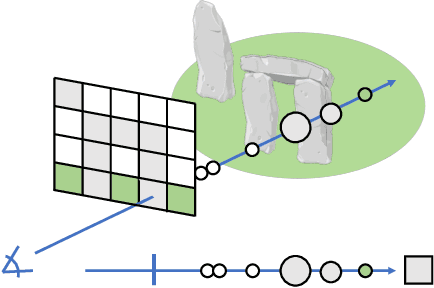

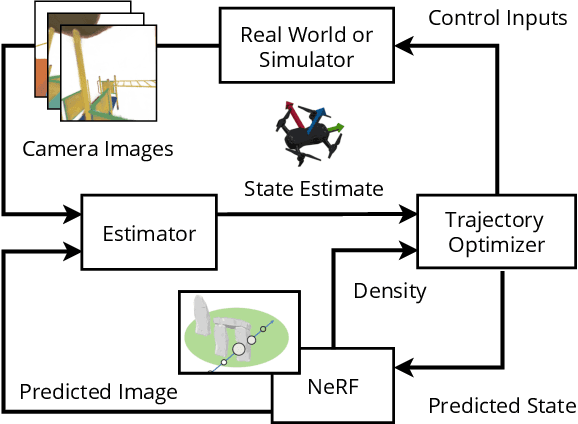

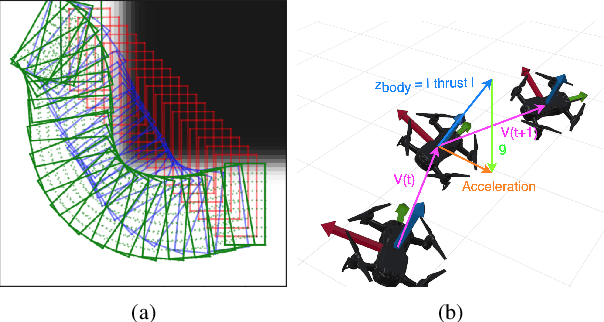

Abstract:Neural Radiance Fields (NeRFs) have recently emerged as a powerful paradigm for the representation of natural, complex 3D scenes. NeRFs represent continuous volumetric density and RGB values in a neural network, and generate photo-realistic images from unseen camera viewpoints through ray tracing. We propose an algorithm for navigating a robot through a 3D environment represented as a NeRF using only an on-board RGB camera for localization. We assume the NeRF for the scene has been pre-trained offline, and the robot's objective is to navigate through unoccupied space in the NeRF to reach a goal pose. We introduce a trajectory optimization algorithm that avoids collisions with high-density regions in the NeRF based on a discrete time version of differential flatness that is amenable to constraining the robot's full pose and control inputs. We also introduce an optimization based filtering method to estimate 6DoF pose and velocities for the robot in the NeRF given only an onboard RGB camera. We combine the trajectory planner with the pose filter in an online replanning loop to give a vision-based robot navigation pipeline. We present simulation results with a quadrotor robot navigating through a jungle gym environment, the inside of a church, and Stonehenge using only an RGB camera. We also demonstrate an omnidirectional ground robot navigating through the church, requiring it to reorient to fit through the narrow gap. Videos of this work can be found at https://mikh3x4.github.io/nerf-navigation/ .
