Mykel Kochenderfer

Expert Evaluation and the Limits of Human Feedback in Mental Health AI Safety Testing

Jan 26, 2026

Abstract:Learning from human feedback (LHF) assumes that expert judgments, appropriately aggregated, yield valid ground truth for training and evaluating AI systems. We tested this assumption in mental health, where high safety stakes make expert consensus essential. Three certified psychiatrists independently evaluated LLM-generated responses using a calibrated rubric. Despite similar training and shared instructions, inter-rater reliability was consistently poor (ICC $0.087$--$0.295$), falling below thresholds considered acceptable for consequential assessment. Disagreement was highest on the most safety-critical items: suicide and self-harm responses produced greater divergence than any other category, and this divergence was systematic rather than random. One factor yielded negative reliability (Krippendorff's $\alpha = -0.203$), indicating structured disagreement worse than chance. Qualitative interviews revealed that disagreement reflects coherent but incompatible individual clinical frameworks (safety-first, engagement-centered, and culturally-informed orientations) rather than measurement error. By demonstrating that experts rely on holistic risk heuristics rather than granular factor discrimination, these findings suggest that aggregated labels function as arithmetic compromises that effectively erase grounded professional philosophies. Our results characterize expert disagreement in safety-critical AI as a sociotechnical phenomenon where professional experience introduces sophisticated layers of principled divergence. We discuss implications for reward modeling, safety classification, and evaluation benchmarks, recommending that practitioners shift from consensus-based aggregation to alignment methods that preserve and learn from expert disagreement.
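
To make the reliability figures above more concrete, here is a minimal sketch of one common intraclass correlation variant, the two-way random-effects, single-rater, absolute-agreement form often written ICC(2,1). The abstract does not say which ICC form the authors used, so the choice of variant, the function name `icc2_1`, and the toy ratings are all assumptions for illustration.

```python
def icc2_1(ratings):
    """ICC(2,1) for a table where each row is an item and each column a rater.

    Assumed variant: two-way random effects, single rater, absolute agreement.
    """
    n = len(ratings)       # number of rated items
    k = len(ratings[0])    # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares: items, raters, and residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_err = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


# Hypothetical ratings: three items scored by three raters.
agreeing = [[1, 1, 1], [3, 3, 3], [5, 5, 5]]      # raters agree on every item
conflicting = [[1, 5, 3], [3, 1, 5], [5, 3, 1]]   # raters rank items in opposing orders
```

With the toy data, `icc2_1(agreeing)` evaluates to 1, while `icc2_1(conflicting)` is negative. A negative value of this kind arises when raters disagree in a structured way rather than at random, which is the pattern the abstract reports for one factor.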

Who Evaluates AI's Social Impacts? Mapping Coverage and Gaps in First and Third Party Evaluations

Nov 06, 2025

Abstract:Foundation models are increasingly central to high-stakes AI systems, and governance frameworks now depend on evaluations to assess their risks and capabilities. Although general capability evaluations are widespread, social impact assessments covering bias, fairness, privacy, environmental costs, and labor practices remain uneven across the AI ecosystem. To characterize this landscape, we conduct the first comprehensive analysis of both first-party and third-party social impact evaluation reporting across a wide range of model developers. Our study examines 186 first-party release reports and 183 post-release evaluation sources, and complements this quantitative analysis with interviews of model developers. We find a clear division of evaluation labor: first-party reporting is sparse, often superficial, and has declined over time in key areas such as environmental impact and bias, while third-party evaluators including academic researchers, nonprofits, and independent organizations provide broader and more rigorous coverage of bias, harmful content, and performance disparities. However, this complementarity has limits. Only model developers can authoritatively report on data provenance, content moderation labor, financial costs, and training infrastructure, yet interviews reveal that these disclosures are often deprioritized unless tied to product adoption or regulatory compliance. Our findings indicate that current evaluation practices leave major gaps in assessing AI's societal impacts, highlighting the urgent need for policies that promote developer transparency, strengthen independent evaluation ecosystems, and create shared infrastructure to aggregate and compare third-party evaluations in a consistent and accessible way.

Scaling Recurrent Neural Networks to a Billion Parameters with Zero-Order Optimization

May 23, 2025

Abstract:During inference, Recurrent Neural Networks (RNNs) have constant cost in both FLOPs and GPU memory as context length increases, because they compress all prior tokens into a fixed-size memory. In contrast, transformers scale linearly in FLOPs and, at best, linearly in memory during generation, since they must attend to all previous tokens explicitly. Despite this inference-time advantage, training large RNNs on long contexts remains impractical because standard optimization methods depend on Backpropagation Through Time (BPTT). BPTT requires retention of all intermediate activations during the forward pass, causing memory usage to scale linearly with both context length and model size. In this paper, we show that Zero-Order Optimization (ZOO) methods such as Random-vector Gradient Estimation (RGE) can successfully replace BPTT to train RNNs with convergence rates that match those of BPTT or exceed them by up to 19-fold, while using orders of magnitude less memory and cost, as the model remains in inference mode throughout training. We further demonstrate that Central-Difference RGE (CD-RGE) corresponds to optimizing a smoothed surrogate loss, inherently regularizing training and improving generalization. Our method matches or outperforms BPTT across three settings: (1) overfitting, (2) transduction, and (3) language modeling. Across all tasks, with sufficient perturbations, our models generalize as well as or better than those trained with BPTT, often in fewer steps. Despite the need for more forward passes per step, we can surpass BPTT wall-clock time per step using recent advancements such as FlashRNN and distributed inference.
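
The idea of estimating gradients from forward passes alone can be sketched as follows. This is a minimal illustration of a standard central-difference random-vector estimator, not the authors' implementation: the abstract does not give the exact estimator, so the Gaussian perturbations, the averaging over `num_perturbations`, the step sizes, and the toy quadratic loss standing in for an RNN are all assumptions.

```python
import random


def cd_rge_gradient(loss_fn, params, num_perturbations=8, eps=1e-3, rng=None):
    """Estimate the gradient of loss_fn at params using only loss evaluations."""
    rng = rng or random.Random(0)
    grad = [0.0] * len(params)
    for _ in range(num_perturbations):
        # Sample a random direction and evaluate the loss on both sides of params.
        v = [rng.gauss(0.0, 1.0) for _ in params]
        plus = [p + eps * vi for p, vi in zip(params, v)]
        minus = [p - eps * vi for p, vi in zip(params, v)]
        # Central difference approximates the directional derivative along v.
        slope = (loss_fn(plus) - loss_fn(minus)) / (2.0 * eps)
        for i, vi in enumerate(v):
            grad[i] += slope * vi / num_perturbations
    return grad


def quadratic_loss(params):
    """Toy stand-in for a model loss, minimized at params == [1, 1, 1]."""
    return sum((p - 1.0) ** 2 for p in params)


def train(steps=200, lr=0.05):
    """Plain gradient descent driven by the zero-order estimate."""
    rng = random.Random(0)
    params = [0.0, 0.0, 0.0]
    for _ in range(steps):
        grad = cd_rge_gradient(quadratic_loss, params, rng=rng)
        params = [p - lr * g for p, g in zip(params, grad)]
    return params
```

Each step needs two loss evaluations per perturbation and stores no intermediate activations, which is the memory trade-off the abstract describes: more forward passes in exchange for staying in inference mode.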

Conditional Deep Generative Models for Belief State Planning

May 16, 2025

Abstract:Partially observable Markov decision processes (POMDPs) are used to model a wide range of applications, including robotics, autonomous vehicles, and subsurface problems. However, accurately representing the belief is difficult for POMDPs with high-dimensional states. In this paper, we propose a novel approach that uses conditional deep generative models (cDGMs) to represent the belief. Unlike traditional belief representations, cDGMs are well-suited for high-dimensional states and large numbers of observations, and they can generate an arbitrary number of samples from the posterior belief. We train the cDGMs on data produced by random rollout trajectories and show their effectiveness in solving a mineral exploration POMDP with a large and continuous state space. The cDGMs outperform particle filter baselines both in task-agnostic measures of belief accuracy and in planning performance.

An Addendum to NeBula: Towards Extending TEAM CoSTAR's Solution to Larger Scale Environments

Apr 18, 2025

Abstract:This paper presents an appendix to the original NeBula autonomy solution developed by TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), a participant in the DARPA Subterranean Challenge. Specifically, this paper presents extensions to NeBula's hardware, software, and algorithmic components that focus on increasing the range and scale of the exploration environment. From the algorithmic perspective, we discuss the following extensions to the original NeBula framework: (i) large-scale geometric and semantic environment mapping; (ii) an adaptive positioning system; (iii) probabilistic traversability analysis and local planning; (iv) large-scale POMDP-based global motion planning and exploration behavior; (v) large-scale networking and decentralized reasoning; (vi) communication-aware mission planning; and (vii) multi-modal ground-aerial exploration solutions. We demonstrate the application and deployment of the presented systems and solutions in various large-scale underground environments, including limestone mine exploration scenarios as well as deployment in the DARPA Subterranean Challenge.

Markov Decision Processes for Satellite Maneuver Planning and Collision Avoidance

Jan 05, 2025

Abstract:This paper presents a decentralized, online planning approach for scalable maneuver planning for large constellations. While decentralized, rule-based strategies have facilitated efficient scaling, optimal decision-making algorithms for satellite maneuvers remain underexplored. As commercial satellite constellations grow, there are benefits of online maneuver planning, such as using real-time trajectory predictions to improve state knowledge, thereby reducing maneuver frequency and conserving fuel. We address this gap in the research by treating the satellite maneuver planning problem as a Markov decision process (MDP). This approach enables the generation of optimal maneuver policies online with low computational cost. This formulation is applied to the low Earth orbit collision avoidance problem, considering the problem of an active spacecraft deciding to maneuver to avoid a non-maneuverable object. We test the policies we generate in a simulated low Earth orbit environment, and compare the results to traditional rule-based collision avoidance techniques.

Trajectory Optimization for Adaptive Informative Path Planning with Multimodal Sensing

Apr 29, 2024

Abstract:We consider the problem of an autonomous agent equipped with multiple sensors, each with different sensing precision and energy costs. The agent's goal is to explore the environment and gather information subject to its resource constraints in unknown, partially observable environments. The challenge lies in reasoning about the effects of sensing and movement while respecting the agent's resource and dynamic constraints. We formulate the problem as a trajectory optimization problem and solve it using a projection-based trajectory optimization approach where the objective is to reduce the variance of the Gaussian process world belief. Our approach outperforms previous approaches in long-horizon trajectories by achieving an overall variance reduction of up to 85% and reducing the root-mean-square error in the environment belief by 50%. This approach was developed in support of rover path planning for the NASA VIPER Mission.

Rank2Tell: A Multimodal Driving Dataset for Joint Importance Ranking and Reasoning

Sep 12, 2023

Abstract:The widespread adoption of commercial autonomous vehicles (AVs) and advanced driver assistance systems (ADAS) may largely depend on their acceptance by society, for which their perceived trustworthiness and interpretability to riders are crucial. In general, this task is challenging because modern autonomous systems software relies heavily on black-box artificial intelligence models. Towards this goal, this paper introduces a novel dataset, Rank2Tell, a multi-modal ego-centric dataset for Ranking the importance level and Telling the reason for the importance. Using both closed- and open-ended visual question answering, the dataset provides dense annotations of semantic, spatial, temporal, and relational attributes of important objects in complex traffic scenarios. The dense annotations and unique attributes of the dataset make it a valuable resource for researchers working on visual scene understanding and related fields. Further, we introduce a model for joint importance-level ranking and natural language caption generation to benchmark our dataset and demonstrate performance with quantitative evaluations.

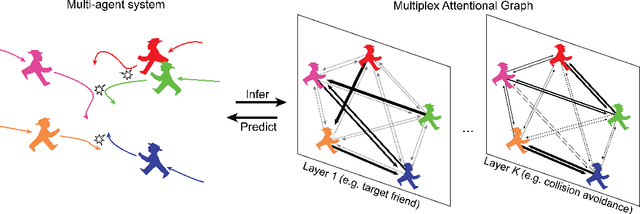

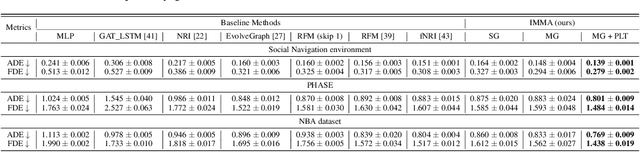

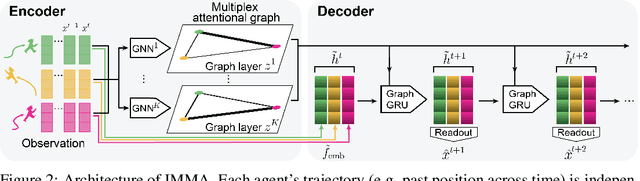

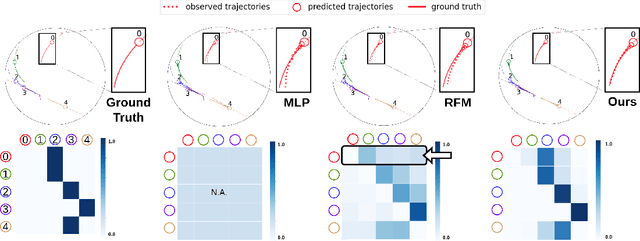

Interaction Modeling with Multiplex Attention

Aug 23, 2022

Abstract:Modeling multi-agent systems requires understanding how agents interact. Such systems are often difficult to model because they can involve a variety of types of interactions that layer together to drive rich social behavioral dynamics. Here we introduce a method for accurately modeling multi-agent systems. We present Interaction Modeling with Multiplex Attention (IMMA), a forward prediction model that uses a multiplex latent graph to represent multiple independent types of interactions and attention to account for relations of different strengths. We also introduce Progressive Layer Training, a training strategy for this architecture. We show that our approach outperforms state-of-the-art models in trajectory forecasting and relation inference, spanning three multi-agent scenarios: social navigation, cooperative task achievement, and team sports. We further demonstrate that our approach can improve zero-shot generalization and allows us to probe how different interactions impact agent behavior.

Disentangling Epistemic and Aleatoric Uncertainty in Reinforcement Learning

Jun 03, 2022

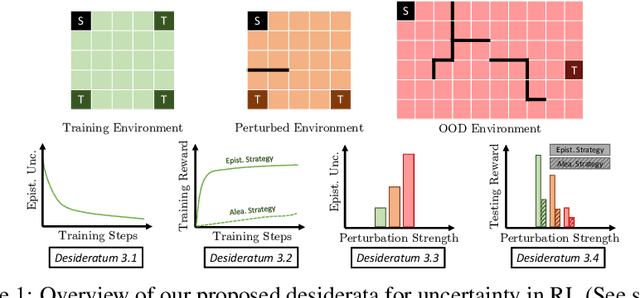

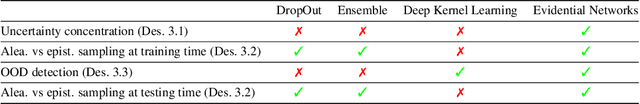

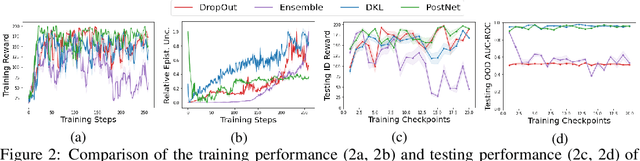

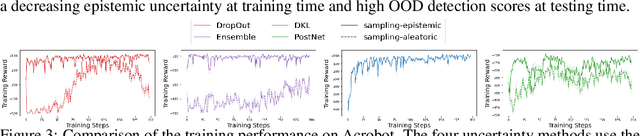

Abstract:Characterizing aleatoric and epistemic uncertainty on the predicted rewards can help in building reliable reinforcement learning (RL) systems. Aleatoric uncertainty results from the irreducible environment stochasticity leading to inherently risky states and actions. Epistemic uncertainty results from the limited information accumulated during learning to make informed decisions. Characterizing aleatoric and epistemic uncertainty can be used to speed up learning in a training environment, improve generalization to similar testing environments, and flag unfamiliar behavior in anomalous testing environments. In this work, we introduce a framework for disentangling aleatoric and epistemic uncertainty in RL. (1) We first define four desiderata that capture the desired behavior for aleatoric and epistemic uncertainty estimation in RL at both training and testing time. (2) We then present four RL models inspired by supervised learning (i.e. Monte Carlo dropout, ensemble, deep kernel learning models, and evidential networks) to instantiate aleatoric and epistemic uncertainty. Finally, (3) we propose a practical evaluation method to evaluate uncertainty estimation in model-free RL based on detection of out-of-distribution environments and generalization to perturbed environments. We present theoretical and experimental evidence to validate that carefully equipping model-free RL agents with supervised learning uncertainty methods can fulfill our desiderata.
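
For the ensemble case mentioned above, one widely used way to separate the two kinds of uncertainty follows the law of total variance: the average of the members' predicted variances is read as aleatoric, and the variance of the members' predicted means as epistemic. The sketch below illustrates that decomposition only. Whether the paper uses exactly this form is an assumption, and the function name and example numbers are hypothetical.

```python
from statistics import fmean, pvariance


def decompose_uncertainty(member_means, member_variances):
    """Split predictive variance from an ensemble of reward predictors.

    Each member predicts a mean and a variance for the same state-action pair.
    Returns (aleatoric, epistemic) under the law-of-total-variance split.
    """
    # Noise every member agrees is present in the environment.
    aleatoric = fmean(member_variances)
    # Disagreement between members about the expected reward.
    epistemic = pvariance(member_means)
    return aleatoric, epistemic


# Hypothetical familiar state: members agree on the mean, environment is noisy.
familiar = decompose_uncertainty([1.0, 1.0, 1.0], [0.5, 0.5, 0.5])

# Hypothetical unfamiliar state: members disagree, predicted noise is low.
unfamiliar = decompose_uncertainty([0.0, 2.0], [0.1, 0.1])
```

In this toy setup the familiar state has zero epistemic uncertainty and the unfamiliar one has high epistemic uncertainty, which is the kind of signal the abstract proposes using to flag out-of-distribution environments.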
