DiT: Self-supervised Pre-training for Document Image Transformer

Apr 12, 2022 · Junlong Li, Yiheng Xu, Tengchao Lv, Lei Cui, Cha Zhang, Furu Wei

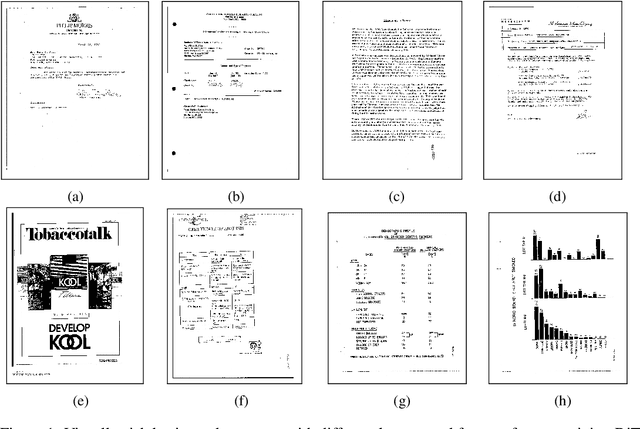

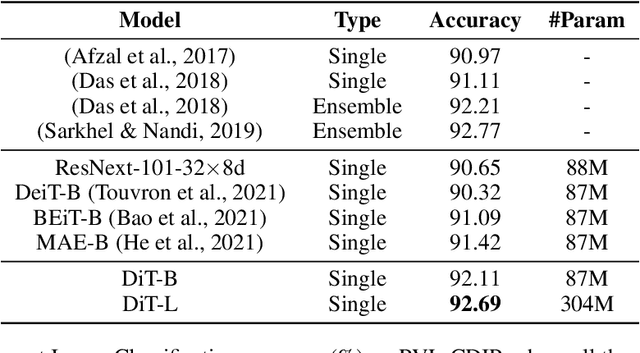

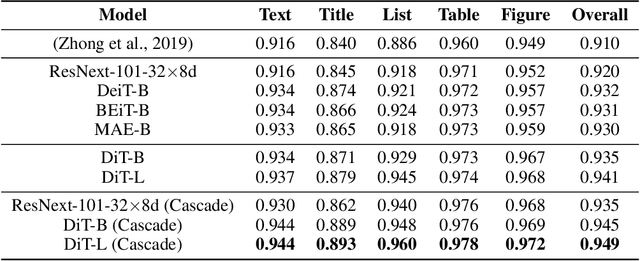

Image Transformers have recently achieved significant progress in natural image understanding, using either supervised (ViT, DeiT, etc.) or self-supervised (BEiT, MAE, etc.) pre-training techniques. In this paper, we propose DiT, a self-supervised pre-trained Document Image Transformer model that uses large-scale unlabeled text images for Document AI tasks. Self-supervision is essential here because no supervised counterparts exist, owing to the lack of human-labeled document images. We leverage DiT as the backbone network in a variety of vision-based Document AI tasks, including document image classification, document layout analysis, table detection, and text detection for OCR. Experimental results show that the self-supervised pre-trained DiT model achieves new state-of-the-art results on these downstream tasks, e.g. document image classification (91.11 → 92.69), document layout analysis (91.0 → 94.9), table detection (94.23 → 96.55) and text detection for OCR (93.07 → 94.29). The code and pre-trained models are publicly available at https://aka.ms/msdit.

Speech Pre-training with Acoustic Piece

Apr 07, 2022 · Shuo Ren, Shujie Liu, Yu Wu, Long Zhou, Furu Wei

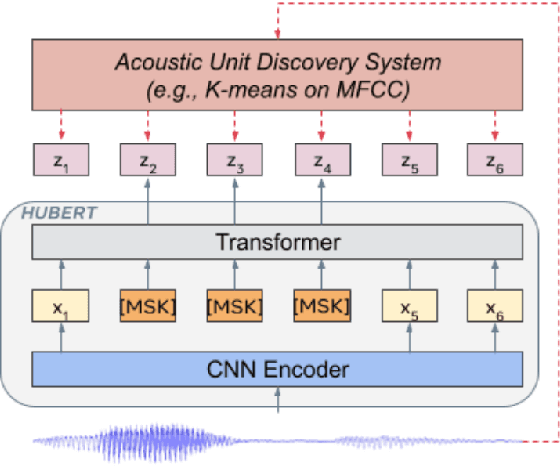

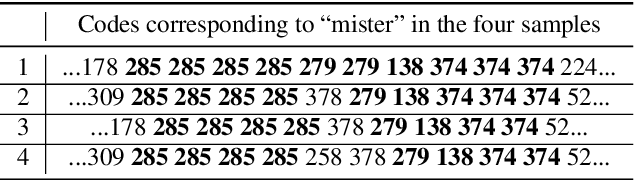

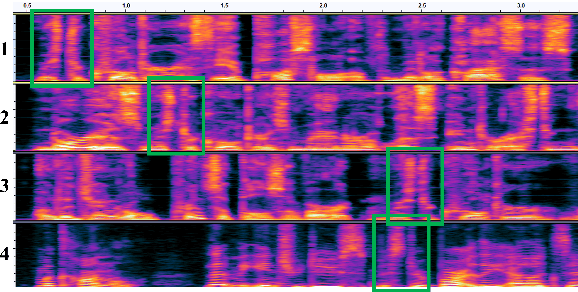

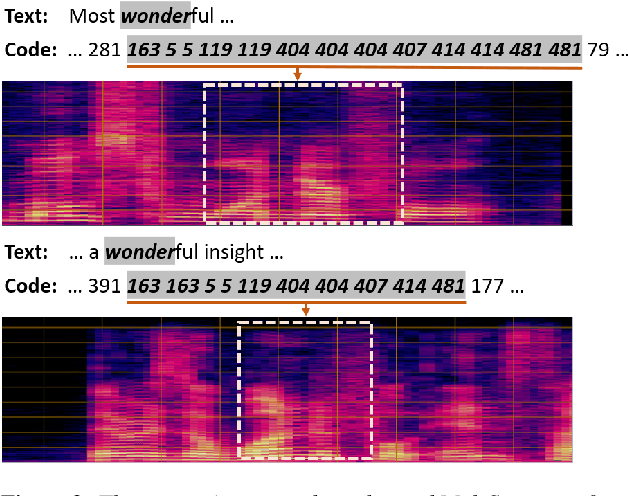

Previous speech pre-training methods, such as wav2vec 2.0 and HuBERT, pre-train a Transformer encoder to learn deep representations from audio data, with objectives that predict either elements of a latent vector-quantized space or pre-generated labels (known as target codes) obtained by offline clustering. However, those training signals (quantized elements or codes) are independent across tokens and do not consider the relations among them. According to our observation and analysis, the target codes share obvious patterns that align with phonemized text data. Based on that, we propose to leverage those patterns to better pre-train the model by considering the relations among the codes. The patterns we extract, called "acoustic pieces", come from the sentence piece result of HuBERT codes. With the acoustic pieces as the training signal, we can implicitly bridge the input audio and natural language, which benefits audio-to-text tasks such as automatic speech recognition (ASR). Simple but effective, our method "HuBERT-AP" significantly outperforms strong baselines on the LibriSpeech ASR task.
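
The idea of grouping frequently co-occurring codes into larger units can be illustrated with a small sketch. It assumes a BPE-style merge of adjacent code pairs as a stand-in for the sentence piece step; the abstract does not say which segmentation algorithm is used, and the names `learn_merges` and `merge_pair` as well as the integer code IDs are hypothetical.

```python
from collections import Counter
from typing import List, Tuple

Symbol = Tuple[int, ...]  # a piece is a tuple of one or more cluster code IDs


def merge_pair(seq: List[Symbol], left: Symbol, right: Symbol) -> List[Symbol]:
    """Replace every adjacent (left, right) occurrence with one merged piece."""
    out: List[Symbol] = []
    i = 0
    while i < len(seq):
        if i + 1 < len(seq) and seq[i] == left and seq[i + 1] == right:
            out.append(left + right)
            i += 2
        else:
            out.append(seq[i])
            i += 1
    return out


def learn_merges(sequences: List[List[int]], num_merges: int):
    """Greedily merge the most frequent adjacent pair, up to num_merges times.

    Assumption: this BPE-like procedure only approximates whatever
    segmentation the sentence piece step actually performs.
    """
    corpus = [[(code,) for code in seq] for seq in sequences]
    merges: List[Tuple[Symbol, Symbol]] = []
    for _ in range(num_merges):
        pairs = Counter()
        for seq in corpus:
            pairs.update(zip(seq, seq[1:]))
        if not pairs:
            break
        (left, right), count = pairs.most_common(1)[0]
        if count < 2:  # nothing repeats, so no pattern is worth merging
            break
        merges.append((left, right))
        corpus = [merge_pair(seq, left, right) for seq in corpus]
    return merges, corpus


# Toy code sequences; real inputs would be frame-level cluster IDs.
merges, pieces = learn_merges([[5, 9, 2, 5, 9], [5, 9, 7]], num_merges=1)
```

In this toy input the pair (5, 9) recurs across both sequences, so it becomes a single piece; a model trained to predict such pieces sees a target that spans several frames rather than one isolated code.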

Lossless Speedup of Autoregressive Translation with Generalized Aggressive Decoding

Apr 02, 2022 · Heming Xia, Tao Ge, Furu Wei, Zhifang Sui

In this paper, we propose Generalized Aggressive Decoding (GAD), a novel decoding paradigm that speeds up autoregressive translation without quality loss through the collaboration of autoregressive and non-autoregressive translation (NAT) with the Transformer. At each decoding iteration, GAD aggressively decodes a number of tokens in parallel as a draft with NAT and then verifies them in an autoregressive manner; only the tokens that pass verification are kept as decoded tokens. GAD achieves the same performance as autoregressive translation but much more efficiently, because both NAT drafting and autoregressive verification are fast due to parallel computing. We conduct experiments on the WMT14 English-German translation task and confirm that vanilla GAD yields exactly the same results as greedy decoding with around a 3x speedup. Its variant (GAD++), which uses an advanced verification strategy, not only outperforms greedy decoding and even achieves translation quality comparable to beam search, but also further improves decoding speed, giving around a 5x speedup over autoregressive translation. Our models and code are available at https://github.com/hemingkx/Generalized-Aggressive-Decoding.
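
The draft-then-verify loop described above can be sketched with toy stand-ins for the two models. Everything here is an assumption made for illustration: `next_token` plays the role of the autoregressive model's greedy choice, `draft` plays the role of the NAT drafter, and the verification loop runs sequentially, whereas a real Transformer would score all drafted positions in one parallel forward pass.

```python
from typing import Callable, List, Tuple

EOS = 0  # hypothetical end-of-sequence token ID


def next_token(prefix: List[int]) -> int:
    """Toy autoregressive model: a deterministic greedy next token."""
    if len(prefix) >= 12:
        return EOS
    prev = prefix[-1] if prefix else 0
    return (prev * 3 + len(prefix)) % 7 + 1


def draft(prefix: List[int], block_size: int) -> List[int]:
    """Toy drafter: mostly agrees with next_token, wrong at every 4th position."""
    cur = list(prefix)
    for _ in range(block_size):
        tok = next_token(cur)
        if len(cur) % 4 == 3:
            tok = tok % 7 + 1  # deliberately different from the greedy token
        cur.append(tok)
    return cur[len(prefix):]


def greedy_decode(step: Callable[[List[int]], int], max_len: int) -> List[int]:
    out: List[int] = []
    while len(out) < max_len:
        out.append(step(out))
        if out[-1] == EOS:
            break
    return out


def draft_and_verify(step, drafter, block_size: int, max_len: int) -> Tuple[List[int], int]:
    """Keep drafted tokens while they match the verifier; fix the first mismatch."""
    assert block_size >= 1
    out: List[int] = []
    iterations = 0
    while len(out) < max_len and (not out or out[-1] != EOS):
        iterations += 1
        for tok in drafter(out, block_size):
            expected = step(out)
            out.append(expected)  # the verifier's token is always the one kept
            if expected != tok or expected == EOS or len(out) >= max_len:
                break
    return out, iterations


reference = greedy_decode(next_token, max_len=32)
decoded, iterations = draft_and_verify(next_token, draft, block_size=4, max_len=32)
```

Because every kept token is the verifier's own greedy choice, `decoded` matches `reference` regardless of how good the drafter is; drafter quality only changes how many iterations are needed.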

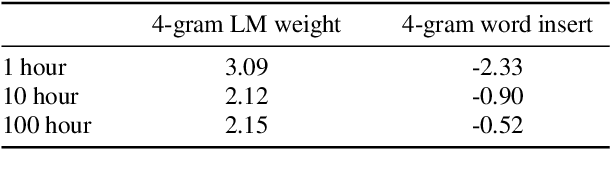

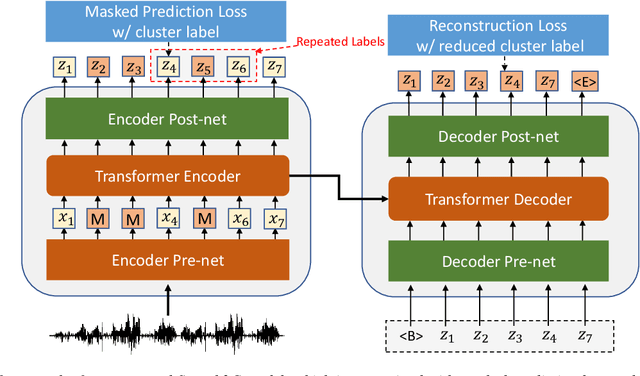

Pre-Training Transformer Decoder for End-to-End ASR Model with Unpaired Speech Data

Mar 31, 2022 · Junyi Ao, Ziqiang Zhang, Long Zhou, Shujie Liu, Haizhou Li, Tom Ko, Lirong Dai, Jinyu Li, Yao Qian, Furu Wei

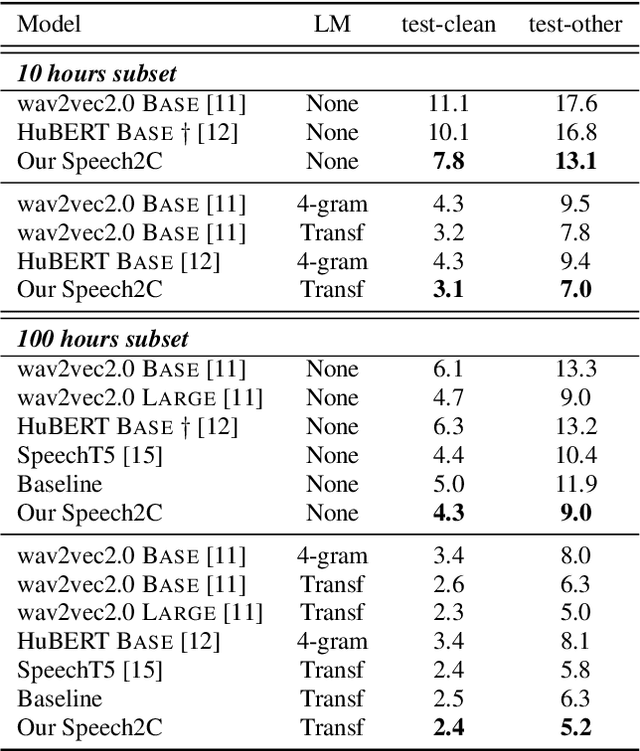

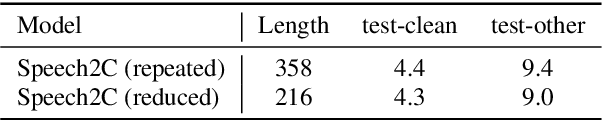

This paper studies Speech2C, a novel pre-training technique that uses unpaired speech data for encoder-decoder based automatic speech recognition (ASR). Within a multi-task learning framework, we introduce two pre-training tasks for the encoder-decoder network using acoustic units, i.e., pseudo codes, derived from an offline clustering model. One predicts the pseudo codes via masked language modeling on the encoder output, as in HuBERT, while the other lets the decoder learn to reconstruct the pseudo codes autoregressively instead of generating text transcripts. In this way, the decoder learns to reconstruct the original speech information with codes before learning to generate correct text. Comprehensive experiments on the LibriSpeech corpus show that the proposed Speech2C achieves a 19.2% relative reduction in word error rate (WER) over the method without decoder pre-training, and also significantly outperforms the state-of-the-art wav2vec 2.0 and HuBERT on the 10h and 100h fine-tuning subsets.

CLIP Models are Few-shot Learners: Empirical Studies on VQA and Visual Entailment

Mar 14, 2022 · Haoyu Song, Li Dong, Wei-Nan Zhang, Ting Liu, Furu Wei

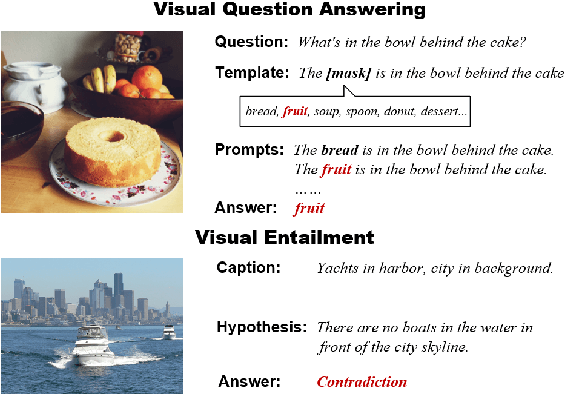

CLIP has shown a remarkable zero-shot capability on a wide range of vision tasks. Previously, CLIP was regarded only as a powerful visual encoder. However, after being pre-trained with language supervision from a large number of image-caption pairs, CLIP itself should also have acquired some few-shot abilities for vision-language tasks. In this work, we empirically show that CLIP can be a strong vision-language few-shot learner by leveraging the power of language. We first evaluate CLIP's zero-shot performance on a typical visual question answering task and demonstrate a zero-shot cross-modality transfer capability of CLIP on the visual entailment task. Then we propose a parameter-efficient fine-tuning strategy to boost the few-shot performance on the VQA task. We achieve competitive zero/few-shot results on the visual question answering and visual entailment tasks without introducing any additional pre-training procedure.

DeepNet: Scaling Transformers to 1,000 Layers

Mar 01, 2022 · Hongyu Wang, Shuming Ma, Li Dong, Shaohan Huang, Dongdong Zhang, Furu Wei

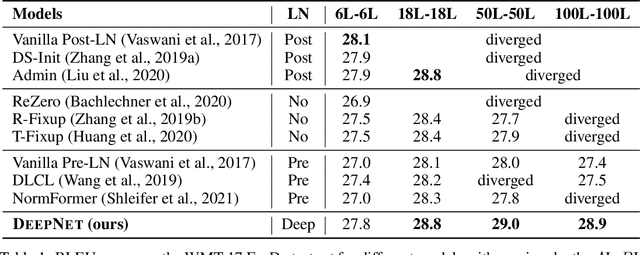

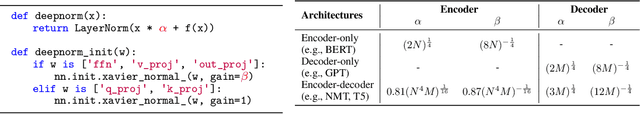

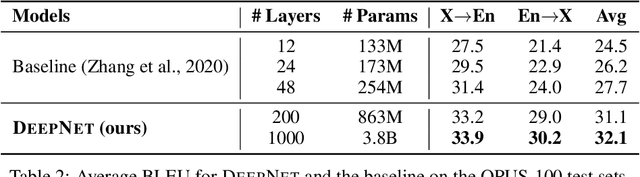

In this paper, we propose a simple yet effective method to stabilize extremely deep Transformers. Specifically, we introduce a new normalization function (DeepNorm) that modifies the residual connection in the Transformer, accompanied by a theoretically derived initialization. In-depth theoretical analysis shows that model updates can be bounded in a stable way. The proposed method combines the best of two worlds, i.e., the good performance of Post-LN and the stable training of Pre-LN, making DeepNorm a preferred alternative. We successfully scale Transformers up to 1,000 layers (i.e., 2,500 attention and feed-forward network sublayers) without difficulty, which is one order of magnitude deeper than previous deep Transformers. Remarkably, on a multilingual benchmark with 7,482 translation directions, our 200-layer model with 3.2B parameters significantly outperforms the 48-layer state-of-the-art model with 12B parameters by 5 BLEU points, which indicates a promising scaling direction.
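
The abstract does not spell out the DeepNorm formula. The sketch below assumes the form given in the DeepNet paper, where the residual branch is up-weighted before a Post-LN style normalization, `LayerNorm(alpha * x + sublayer(x))`, and it assumes the encoder-only constants `alpha = (2N)^(1/4)` and `beta = (8N)^(-1/4)` for an `N`-layer model. The function names are hypothetical, and the sublayer is a toy stand-in rather than real attention or a feed-forward network.

```python
import math
from typing import Callable, List, Tuple


def layer_norm(x: List[float], eps: float = 1e-5) -> List[float]:
    """Plain layer normalization without learned gain or bias."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def deepnorm_constants(num_layers: int) -> Tuple[float, float]:
    """Assumed encoder-only setting: alpha scales the residual, beta the init."""
    alpha = (2 * num_layers) ** 0.25
    beta = (8 * num_layers) ** -0.25
    return alpha, beta


def deepnorm_residual(
    x: List[float],
    sublayer: Callable[[List[float]], List[float]],
    alpha: float,
) -> List[float]:
    """Assumed DeepNorm update: LayerNorm(alpha * x + sublayer(x))."""
    fx = sublayer(x)
    return layer_norm([alpha * a + b for a, b in zip(x, fx)])


alpha, beta = deepnorm_constants(num_layers=200)
# Toy sublayer whose output is scaled by beta, mimicking a down-scaled init.
y = deepnorm_residual([0.5, -1.0, 2.0, 0.1], lambda v: [beta * t for t in v], alpha)
```

Under these assumptions, deeper models get a larger `alpha` and a smaller `beta`, so each sublayer contributes a smaller share of the normalized output, which is one way to read the abstract's claim that model updates stay bounded.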

Controllable Natural Language Generation with Contrastive Prefixes

Feb 27, 2022 · Jing Qian, Li Dong, Yelong Shen, Furu Wei, Weizhu Chen

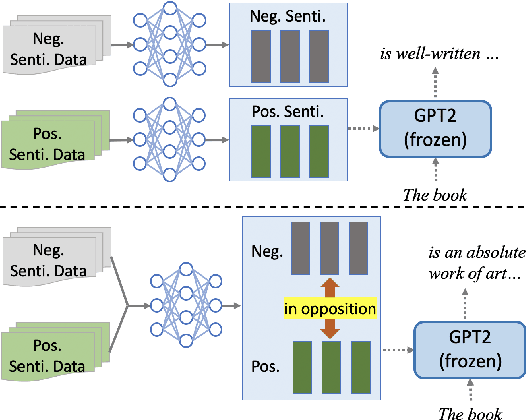

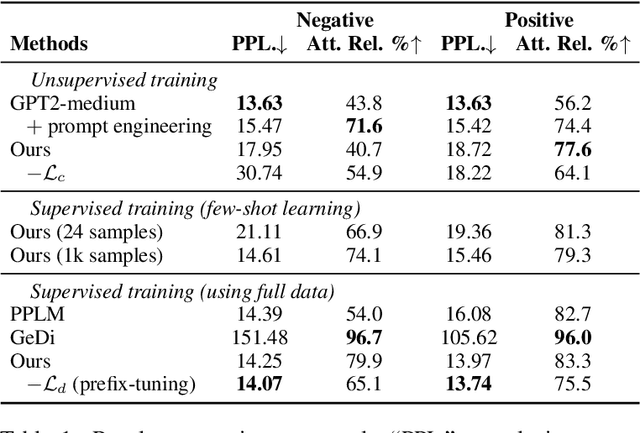

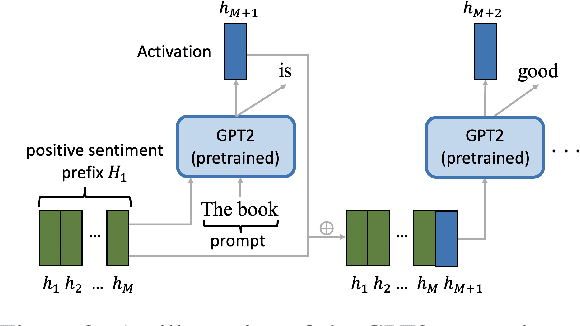

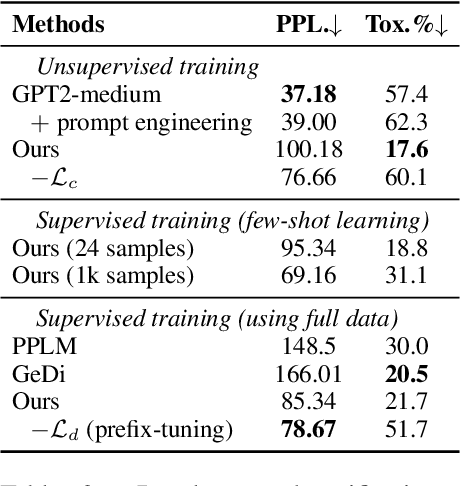

To guide the generation of large pretrained language models (LMs), previous work has focused on directly fine-tuning the language model or utilizing an attribute discriminator. In this work, we propose a novel lightweight framework for controllable GPT2 generation, which utilizes a set of small attribute-specific vectors, called prefixes, to steer natural language generation. Unlike prefix-tuning, where each prefix is trained independently, we take the relationship among prefixes into consideration and train multiple prefixes simultaneously. We propose a novel supervised method and an unsupervised method to train the prefixes for single-aspect control, and combining the two methods achieves multi-aspect control. Experimental results on both single-aspect and multi-aspect control show that our methods can guide generation towards the desired attributes while keeping high linguistic quality.

Zero-shot Cross-lingual Transfer of Prompt-based Tuning with a Unified Multilingual Prompt

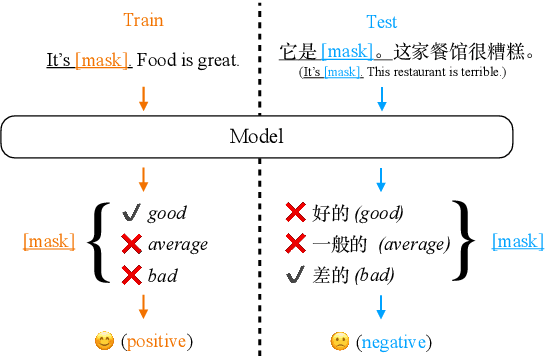

Feb 23, 2022 · Lianzhe Huang, Shuming Ma, Dongdong Zhang, Furu Wei, Houfeng Wang

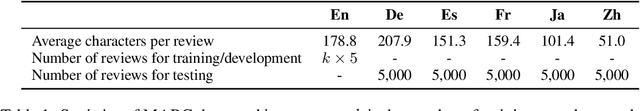

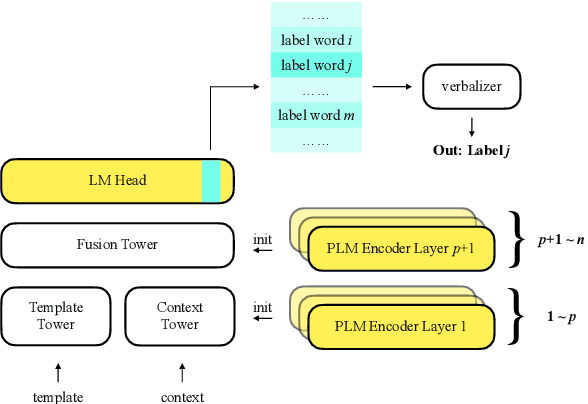

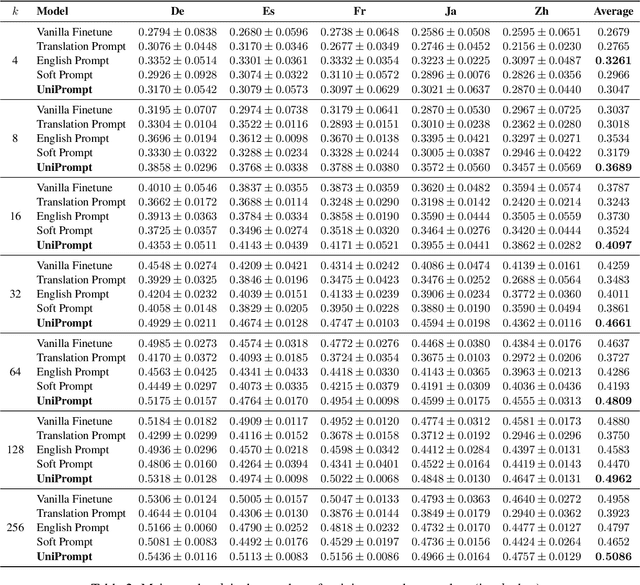

Prompt-based tuning has been proven effective for pretrained language models (PLMs). While most existing work focuses on monolingual prompts, we study multilingual prompts for multilingual PLMs, especially in the zero-shot cross-lingual setting. To alleviate the effort of designing different prompts for multiple languages, we propose a novel model, called UniPrompt, that uses a unified prompt for all languages. Unlike discrete prompts and soft prompts, the unified prompt is model-based and language-agnostic. Specifically, the unified prompt is initialized by a multilingual PLM to produce a language-independent representation, which is then fused with the text input. During inference, the prompts can be pre-computed so that no extra computation cost is needed. To complement the unified prompt, we propose a new initialization method for the target label word to further improve the model's transferability across languages. Extensive experiments show that our proposed methods can significantly outperform strong baselines across different languages. We will release data and code to facilitate future research.

A Survey of Knowledge-Intensive NLP with Pre-Trained Language Models

Feb 17, 2022 · Da Yin, Li Dong, Hao Cheng, Xiaodong Liu, Kai-Wei Chang, Furu Wei, Jianfeng Gao

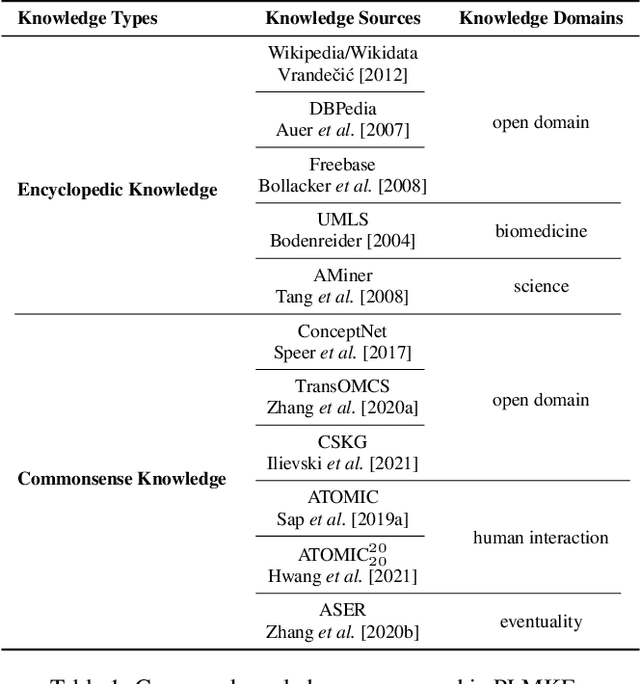

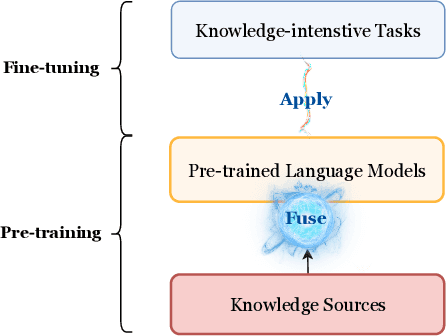

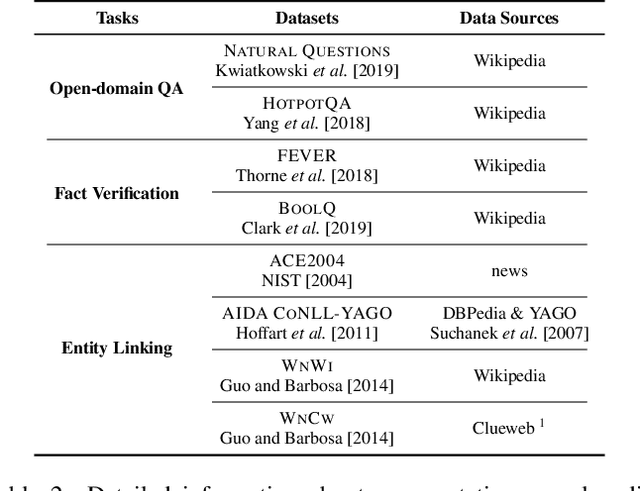

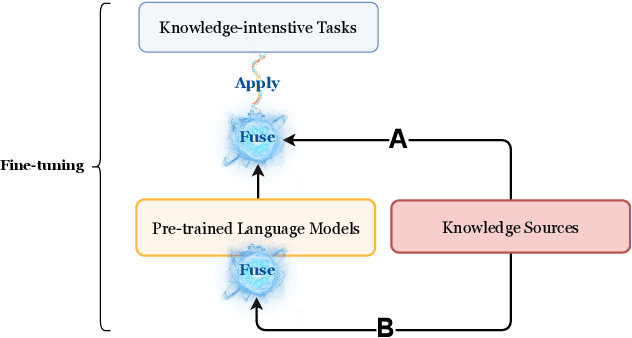

As pre-trained language models increase model capacity, there is a growing need for more knowledgeable natural language processing (NLP) models with advanced functionalities, including providing and making flexible use of encyclopedic and commonsense knowledge. Pre-trained language models alone, however, lack the capacity to handle such knowledge-intensive NLP tasks. To address this challenge, a large number of pre-trained language models augmented with external knowledge sources have been proposed and are developing rapidly. In this paper, we aim to summarize the current progress of pre-trained language model-based knowledge-enhanced models (PLMKEs) by dissecting their three vital elements: knowledge sources, knowledge-intensive NLP tasks, and knowledge fusion methods. Finally, we present the challenges of PLMKEs based on the discussion of these three elements and attempt to provide NLP practitioners with potential directions for further research.
