Nan Yang

Impact of Pointing Error on Coverage Performance of 3D Indoor Terahertz Communication Systems

Jan 29, 2026

Abstract: In this paper, we develop a tractable analytical framework for a three-dimensional (3D) indoor terahertz (THz) communication system to theoretically assess the impact of the pointing error on its coverage performance. Specifically, we model the locations of access points (APs) using a Poisson point process, human blockages as random cylinder processes, and wall blockages through a Boolean straight line process. A pointing error refers to beamforming gain and direction mismatch between the transmitter and receiver. We characterize it based on the inaccuracy of location estimate. We then analyze the impact of this pointing error on the received signal power and derive a tractable expression for the coverage probability, incorporating the multi-cluster fluctuating two-ray distribution to accurately model small-scale fading in THz communications. Aided by simulation results, we corroborate our analysis and demonstrate that the pointing error has a pronounced impact on the coverage probability. Specifically, we find that merely increasing the antenna array size is insufficient to improve the coverage probability and mitigate the detrimental impact of the pointing error, highlighting the necessity of advanced estimation techniques in THz communication systems.

Two Pathways to Truthfulness: On the Intrinsic Encoding of LLM Hallucinations

Jan 12, 2026

Abstract: Despite their impressive capabilities, large language models (LLMs) frequently generate hallucinations. Previous work shows that their internal states encode rich signals of truthfulness, yet the origins and mechanisms of these signals remain unclear. In this paper, we demonstrate that truthfulness cues arise from two distinct information pathways: (1) a Question-Anchored pathway that depends on question-answer information flow, and (2) an Answer-Anchored pathway that derives self-contained evidence from the generated answer itself. First, we validate and disentangle these pathways through attention knockout and token patching. Afterwards, we uncover notable and intriguing properties of these two mechanisms. Further experiments reveal that (1) the two mechanisms are closely associated with LLM knowledge boundaries; and (2) internal representations are aware of their distinctions. Finally, building on these insightful findings, two applications are proposed to enhance hallucination detection performance. Overall, our work provides new insight into how LLMs internally encode truthfulness, offering directions for more reliable and self-aware generative systems.

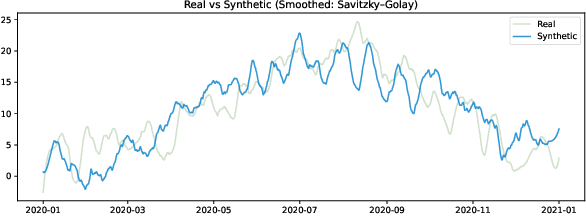

Optimal Look-back Horizon for Time Series Forecasting in Federated Learning

Nov 18, 2025

Abstract: Selecting an appropriate look-back horizon remains a fundamental challenge in time series forecasting (TSF), particularly in federated learning scenarios where data is decentralized, heterogeneous, and often non-independent. While recent work has explored horizon selection by preserving forecasting-relevant information in an intrinsic space, these approaches are primarily restricted to centralized and independently distributed settings. This paper presents a principled framework for adaptive horizon selection in federated time series forecasting through an intrinsic space formulation. We introduce a synthetic data generator (SDG) that captures essential temporal structures in client data, including autoregressive dependencies, seasonality, and trend, while incorporating client-specific heterogeneity. Building on this model, we define a transformation that maps time series windows into an intrinsic representation space with well-defined geometric and statistical properties. We then derive a decomposition of the forecasting loss into a Bayesian term, which reflects irreducible uncertainty, and an approximation term, which accounts for finite-sample effects and limited model capacity. Our analysis shows that while increasing the look-back horizon improves the identifiability of deterministic patterns, it also increases approximation error due to higher model complexity and reduced sample efficiency. We prove that the total forecasting loss is minimized at the smallest horizon where the irreducible loss starts to saturate, while the approximation loss continues to rise. This work provides a rigorous theoretical foundation for adaptive horizon selection for time series forecasting in federated learning.

Synthetic Forgetting without Access: A Few-shot Zero-glance Framework for Machine Unlearning

Nov 17, 2025

Abstract: Machine unlearning aims to eliminate the influence of specific data from trained models to ensure privacy compliance. However, most existing methods assume full access to the original training dataset, which is often impractical. We address a more realistic yet challenging setting: few-shot zero-glance, where only a small subset of the retained data is available and the forget set is entirely inaccessible. We introduce GFOES, a novel framework comprising a Generative Feedback Network (GFN) and a two-phase fine-tuning procedure. GFN synthesises Optimal Erasure Samples (OES), which induce high loss on target classes, enabling the model to forget class-specific knowledge without access to the original forget data, while preserving performance on retained classes. The two-phase fine-tuning procedure enables aggressive forgetting in the first phase, followed by utility restoration in the second. Experiments on three image classification datasets demonstrate that GFOES achieves effective forgetting at both logit and representation levels, while maintaining strong performance using only 5% of the original data. Our framework offers a practical and scalable solution for privacy-preserving machine learning under data-constrained conditions.

Scaling Law Analysis in Federated Learning: How to Select the Optimal Model Size?

Nov 15, 2025

Abstract: The recent success of large language models (LLMs) has sparked a growing interest in training large-scale models. As the model size continues to scale, concerns are growing about the depletion of high-quality, well-curated training data. This has led practitioners to explore training approaches like Federated Learning (FL), which can leverage the abundant data on edge devices while maintaining privacy. However, the decentralization of training datasets in FL introduces challenges to scaling large models, a topic that remains under-explored. This paper fills this gap and provides qualitative insights on generalizing the previous model scaling experience to federated learning scenarios. Specifically, we derive a PAC-Bayes (Probably Approximately Correct Bayesian) upper bound for the generalization error of models trained with stochastic algorithms in federated settings and quantify the impact of distributed training data on the optimal model size by finding the analytic solution of model size that minimizes this bound. Our theoretical results demonstrate that the optimal model size has a negative power law relationship with the number of clients if the total training compute is unchanged. Besides, we also find that switching to FL with the same training compute will inevitably reduce the upper bound of generalization performance that the model can achieve through training, and that estimating the optimal model size in federated scenarios should depend on the average training compute across clients. Furthermore, we also empirically validate the correctness of our results with extensive training runs on different models, network settings, and datasets.

GuardFed: A Trustworthy Federated Learning Framework Against Dual-Facet Attacks

Nov 12, 2025

Abstract: Federated learning (FL) enables privacy-preserving collaborative model training but remains vulnerable to adversarial behaviors that compromise model utility or fairness across sensitive groups. While extensive studies have examined attacks targeting either objective, strategies that simultaneously degrade both utility and fairness remain largely unexplored. To bridge this gap, we introduce the Dual-Facet Attack (DFA), a novel threat model that concurrently undermines predictive accuracy and group fairness. Two variants, Synchronous DFA (S-DFA) and Split DFA (Sp-DFA), are further proposed to capture distinct real-world collusion scenarios. Experimental results show that existing robust FL defenses, including hybrid aggregation schemes, fail to resist DFAs effectively. To counter these threats, we propose GuardFed, a self-adaptive defense framework that maintains a fairness-aware reference model using a small amount of clean server data augmented with synthetic samples. In each training round, GuardFed computes a dual-perspective trust score for every client by jointly evaluating its utility deviation and fairness degradation, thereby enabling selective aggregation of trustworthy updates. Extensive experiments on real-world datasets demonstrate that GuardFed consistently preserves both accuracy and fairness under diverse non-IID and adversarial conditions, achieving state-of-the-art performance compared with existing robust FL methods.

Knowledge-Augmented Question Error Correction for Chinese Question Answer System with QuestionRAG

Nov 05, 2025

Abstract: Input errors in question-answering (QA) systems often lead to incorrect responses. Large language models (LLMs) struggle with this task, frequently failing to interpret user intent (misinterpretation) or unnecessarily altering the original question's structure (over-correction). We propose QuestionRAG, a framework that tackles these problems. To address misinterpretation, it enriches the input with external knowledge (e.g., search results, related entities). To prevent over-correction, it uses reinforcement learning (RL) to align the model's objective with precise correction, not just paraphrasing. Our results demonstrate that knowledge augmentation is critical for understanding faulty questions. Furthermore, RL-based alignment proves significantly more effective than traditional supervised fine-tuning (SFT), boosting the model's ability to follow instructions and generalize. By integrating these two strategies, QuestionRAG unlocks the full potential of LLMs for the question correction task.

PSTTS: A Plug-and-Play Token Selector for Efficient Event-based Spatio-temporal Representation Learning

Sep 26, 2025

Abstract: Mainstream event-based spatio-temporal representation learning methods typically process event streams by converting them into sequences of event frames, achieving remarkable performance. However, they neglect the high spatial sparsity and inter-frame motion redundancy inherent in event frame sequences, leading to significant computational overhead. Existing token sparsification methods for RGB videos rely on unreliable intermediate token representations and neglect the influence of event noise, making them ineffective for direct application to event data. In this paper, we propose Progressive Spatio-Temporal Token Selection (PSTTS), a Plug-and-Play module for event data without introducing any additional parameters. PSTTS exploits the spatio-temporal distribution characteristics embedded in raw event data to effectively identify and discard spatio-temporal redundant tokens, achieving an optimal trade-off between accuracy and efficiency. Specifically, PSTTS consists of two stages, Spatial Token Purification and Temporal Token Selection. Spatial Token Purification discards noise and non-event regions by assessing the spatio-temporal consistency of events within each event frame to prevent interference with subsequent temporal redundancy evaluation. Temporal Token Selection evaluates the motion pattern similarity between adjacent event frames, precisely identifying and removing redundant temporal information. We apply PSTTS to four representative backbones (UniformerV2, VideoSwin, EVMamba, and ExACT) on the HARDVS, DailyDVS-200, and SeACT datasets. Experimental results demonstrate that PSTTS achieves significant efficiency improvements. Specifically, PSTTS reduces FLOPs by 29-43.6% and increases FPS by 21.6-41.3% on the DailyDVS-200 dataset, while maintaining task accuracy. Our code will be available.

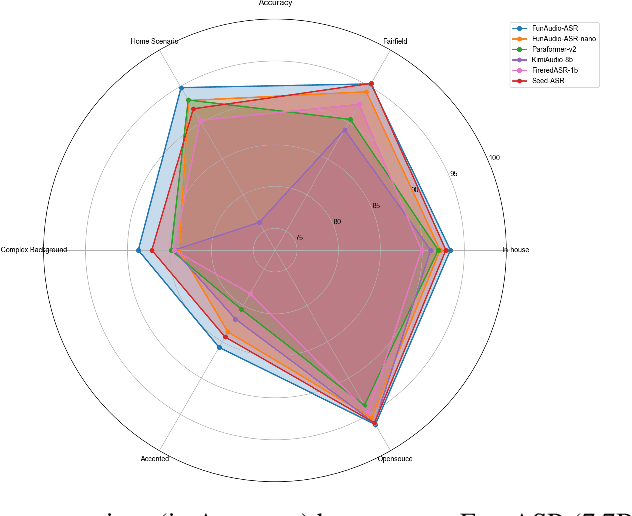

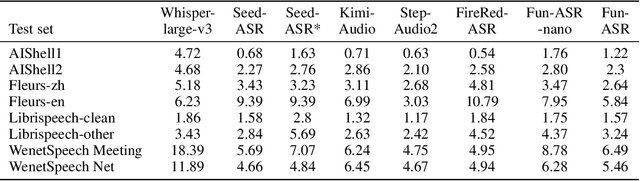

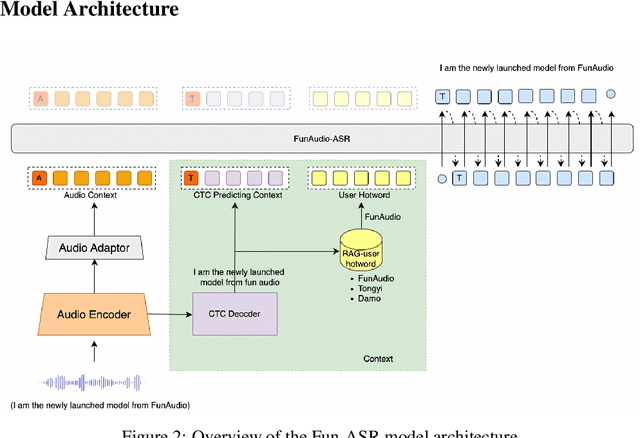

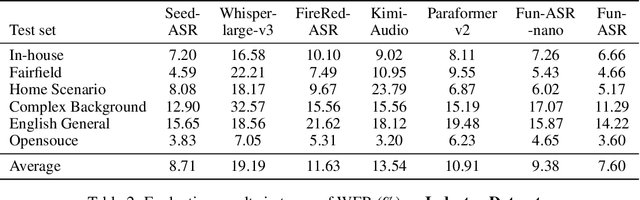

FunAudio-ASR Technical Report

Sep 15, 2025

Abstract: In recent years, automatic speech recognition (ASR) has witnessed transformative advancements driven by three complementary paradigms: data scaling, model size scaling, and deep integration with large language models (LLMs). However, LLMs are prone to hallucination, which can significantly degrade user experience in real-world ASR applications. In this paper, we present FunAudio-ASR, a large-scale, LLM-based ASR system that synergistically combines massive data, large model capacity, LLM integration, and reinforcement learning to achieve state-of-the-art performance across diverse and complex speech recognition scenarios. Moreover, FunAudio-ASR is specifically optimized for practical deployment, with enhancements in streaming capability, noise robustness, code-switching, hotword customization, and other real-world application requirements. Experimental results show that while most LLM-based ASR systems achieve strong performance on open-source benchmarks, they often underperform on real industry evaluation sets. Thanks to production-oriented optimizations, FunAudio-ASR achieves SOTA performance on real application datasets, demonstrating its effectiveness and robustness in practical settings.

Focus Through Motion: RGB-Event Collaborative Token Sparsification for Efficient Object Detection

Sep 04, 2025

Abstract: Existing RGB-Event detection methods process the low-information regions of both modalities (background in images and non-event regions in event data) uniformly during feature extraction and fusion, resulting in high computational costs and suboptimal performance. To mitigate the computational redundancy during feature extraction, researchers have proposed separate token sparsification methods for the image and event modalities. However, these methods employ a fixed number or threshold for token selection, hindering the retention of informative tokens for samples with varying complexity. To achieve a better balance between accuracy and efficiency, we propose FocusMamba, which performs adaptive collaborative sparsification of multimodal features and efficiently integrates complementary information. Specifically, an Event-Guided Multimodal Sparsification (EGMS) strategy is designed to identify and adaptively discard low-information regions within each modality by leveraging scene content changes perceived by the event camera. Based on the sparsification results, a Cross-Modality Focus Fusion (CMFF) module is proposed to effectively capture and integrate complementary features from both modalities. Experiments on the DSEC-Det and PKU-DAVIS-SOD datasets demonstrate that the proposed method achieves superior performance in both accuracy and efficiency compared to existing methods. The code will be available at https://github.com/Zizzzzzzz/FocusMamba.
