Lei Cui

LongReasonArena: A Long Reasoning Benchmark for Large Language Models

Aug 26, 2025

Abstract:Existing long-context benchmarks for Large Language Models (LLMs) focus on evaluating comprehension of long inputs, while overlooking the evaluation of long reasoning abilities. To address this gap, we introduce LongReasonArena, a benchmark specifically designed to assess the long reasoning capabilities of LLMs. Our tasks require models to solve problems by executing multi-step algorithms that reflect key aspects of long reasoning, such as retrieval and backtracking. By controlling the inputs, the required reasoning length can be arbitrarily scaled, reaching up to 1 million tokens of reasoning for the most challenging tasks. Extensive evaluation results demonstrate that LongReasonArena presents a significant challenge for both open-source and proprietary LLMs. For instance, Deepseek-R1 achieves only 7.5% accuracy on our task. Further analysis also reveals that the accuracy exhibits a linear decline with respect to the logarithm of the expected number of reasoning steps. Our code and data are available at https://github.com/LongReasonArena/LongReasonArena.
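
The log-linear trend described in this abstract can be written compactly. This is a sketch of the functional form only: the symbols $a$, $b$, and $N$ are notation introduced here for illustration, not taken from the paper, and no fitted values are implied.

```latex
\mathrm{Acc}(N) \approx a - b \log N, \qquad b > 0
```

Here $N$ stands for the expected number of reasoning steps, so each multiplicative increase in $N$ costs a roughly constant amount of accuracy.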

Geometric-Mean Policy Optimization

Jul 28, 2025

Abstract:Recent advancements, such as Group Relative Policy Optimization (GRPO), have enhanced the reasoning capabilities of large language models by optimizing the arithmetic mean of token-level rewards. However, GRPO suffers from unstable policy updates when processing tokens with outlier importance-weighted rewards, which manifests as extreme importance sampling ratios during training, i.e., the ratio between the sampling probabilities assigned to a token by the current and old policies. In this work, we propose Geometric-Mean Policy Optimization (GMPO), a stabilized variant of GRPO. Instead of optimizing the arithmetic mean, GMPO maximizes the geometric mean of token-level rewards, which is inherently less sensitive to outliers and maintains a more stable range of importance sampling ratio. In addition, we provide comprehensive theoretical and experimental analysis to justify the design and stability benefits of GMPO. Beyond improved stability, GMPO-7B outperforms GRPO by an average of 4.1% on multiple mathematical benchmarks and 1.4% on a multimodal reasoning benchmark, including AIME24, AMC, MATH500, OlympiadBench, Minerva, and Geometry3K. Code is available at https://github.com/callsys/GMPO.
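
The claim that a geometric mean is less sensitive to outliers than an arithmetic mean can be checked with a few lines of Python. This is a minimal sketch of that statistical property only, not the GMPO objective: the `ratios` list is made-up illustrative data, and the function names are hypothetical.

```python
import math

def arithmetic_mean(values):
    """Plain average of the values."""
    return sum(values) / len(values)

def geometric_mean(values):
    """n-th root of the product, computed in log space (values must be positive)."""
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Hypothetical per-token ratios: three ordinary tokens and one outlier.
ratios = [1.0, 1.0, 1.0, 16.0]

am = arithmetic_mean(ratios)  # pulled strongly toward the outlier
gm = geometric_mean(ratios)   # damped, since the outlier enters through its logarithm

print(f"arithmetic mean: {am:.2f}")
print(f"geometric mean:  {gm:.2f}")
```

With a single outlier of 16 among values of 1, the arithmetic mean moves much further from 1 than the geometric mean does, which is the intuition behind the stability argument in the abstract.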

WGSR-Bench: Wargame-based Game-theoretic Strategic Reasoning Benchmark for Large Language Models

Jun 12, 2025

Abstract:Recent breakthroughs in Large Language Models (LLMs) have led to a qualitative leap in artificial intelligence's performance on reasoning tasks, particularly demonstrating remarkable capabilities in mathematical, symbolic, and commonsense reasoning. However, as a critical component of advanced human cognition, strategic reasoning, i.e., the ability to assess multi-agent behaviors in dynamic environments, formulate action plans, and adapt strategies, has yet to be systematically evaluated or modeled. To address this gap, this paper introduces WGSR-Bench, the first strategy reasoning benchmark for LLMs using wargame as its evaluation environment. Wargame, a quintessential high-complexity strategic scenario, integrates environmental uncertainty, adversarial dynamics, and non-unique strategic choices, making it an effective testbed for assessing LLMs' capabilities in multi-agent decision-making, intent inference, and counterfactual reasoning. WGSR-Bench designs test samples around three core tasks, i.e., Environmental situation awareness, Opponent risk modeling and Policy generation, which serve as the core S-POE architecture, to systematically assess the main abilities of strategic reasoning. Finally, an LLM-based wargame agent is designed to integrate these parts for a comprehensive strategy reasoning assessment. With WGSR-Bench, we hope to assess the strengths and limitations of state-of-the-art LLMs in game-theoretic strategic reasoning and to advance research in large model-driven strategic intelligence.

Think Only When You Need with Large Hybrid-Reasoning Models

May 21, 2025

Abstract:Recent Large Reasoning Models (LRMs) have shown substantially improved reasoning capabilities over traditional Large Language Models (LLMs) by incorporating extended thinking processes prior to producing final responses. However, excessively lengthy thinking introduces substantial overhead in terms of token consumption and latency, which is particularly unnecessary for simple queries. In this work, we introduce Large Hybrid-Reasoning Models (LHRMs), the first kind of model capable of adaptively determining whether to perform thinking based on the contextual information of user queries. To achieve this, we propose a two-stage training pipeline comprising Hybrid Fine-Tuning (HFT) as a cold start, followed by online reinforcement learning with the proposed Hybrid Group Policy Optimization (HGPO) to implicitly learn to select the appropriate thinking mode. Furthermore, we introduce a metric called Hybrid Accuracy to quantitatively assess the model's capability for hybrid thinking. Extensive experimental results show that LHRMs can adaptively perform hybrid thinking on queries of varying difficulty and type. They outperform existing LRMs and LLMs in reasoning and general capabilities while significantly improving efficiency. Together, our work advocates for a reconsideration of the appropriate use of extended thinking processes and provides a solid starting point for building hybrid thinking systems.

Model as a Game: On Numerical and Spatial Consistency for Generative Games

Mar 27, 2025

Abstract:Recent advances in generative models have significantly impacted game generation. However, despite producing high-quality graphics and adequately receiving player input, existing models often fail to maintain fundamental game properties such as numerical and spatial consistency. Numerical consistency ensures gameplay mechanics correctly reflect score changes and other quantitative elements, while spatial consistency prevents jarring scene transitions, providing seamless player experiences. In this paper, we revisit the paradigm of generative games to explore what truly constitutes a Model as a Game (MaaG) with a well-developed mechanism. We begin with an empirical study on "Traveler", a 2D game created by an LLM featuring minimalist rules yet challenging generative models in maintaining consistency. Based on the DiT architecture, we design two specialized modules: (1) a numerical module that integrates a LogicNet to determine event triggers, with calculations processed externally as conditions for image generation; and (2) a spatial module that maintains a map of explored areas, retrieving location-specific information during generation and linking new observations to ensure continuity. Experiments across three games demonstrate that our integrated modules significantly enhance performance on consistency metrics compared to baselines, while incurring minimal time overhead during inference.

PEACE: Empowering Geologic Map Holistic Understanding with MLLMs

Jan 10, 2025

Abstract:Geologic maps, as fundamental diagrams in geological science, provide critical insights into the structure and composition of Earth's subsurface and surface. These maps are indispensable in various fields, including disaster detection, resource exploration, and civil engineering. Despite their significance, current Multimodal Large Language Models (MLLMs) often fall short in geologic map understanding. This gap is primarily due to the challenging nature of cartographic generalization, which involves handling high-resolution maps, managing multiple associated components, and requiring domain-specific knowledge. To quantify this gap, we construct GeoMap-Bench, the first-ever benchmark for evaluating MLLMs in geologic map understanding, which assesses the full-scale abilities in extracting, referring, grounding, reasoning, and analyzing. To bridge this gap, we introduce GeoMap-Agent, the inaugural agent designed for geologic map understanding, which features three modules: Hierarchical Information Extraction (HIE), Domain Knowledge Injection (DKI), and Prompt-enhanced Question Answering (PEQA). Inspired by the interdisciplinary collaboration among human scientists, an AI expert group acts as consultants, utilizing a diverse tool pool to comprehensively analyze questions. Through comprehensive experiments, GeoMap-Agent achieves an overall score of 0.811 on GeoMap-Bench, significantly outperforming GPT-4o's score of 0.369. Our work, emPowering gEologic mAp holistiC undErstanding (PEACE) with MLLMs, paves the way for advanced AI applications in geology, enhancing the efficiency and accuracy of geological investigations.

MMLU-CF: A Contamination-free Multi-task Language Understanding Benchmark

Dec 19, 2024

Abstract:Multiple-choice question (MCQ) datasets like Massive Multitask Language Understanding (MMLU) are widely used to evaluate the commonsense, understanding, and problem-solving abilities of large language models (LLMs). However, the open-source nature of these benchmarks and the broad sources of training data for LLMs have inevitably led to benchmark contamination, resulting in unreliable evaluation results. To alleviate this issue, we propose a contamination-free and more challenging MCQ benchmark called MMLU-CF. This benchmark reassesses LLMs' understanding of world knowledge by averting both unintentional and malicious data leakage. To avoid unintentional data leakage, we source data from a broader domain and design three decontamination rules. To prevent malicious data leakage, we divide the benchmark into validation and test sets with similar difficulty and subject distributions. The test set remains closed-source to ensure reliable results, while the validation set is publicly available to promote transparency and facilitate independent verification. Our evaluation of mainstream LLMs reveals that the powerful GPT-4o achieves merely a 5-shot score of 73.4% and a 0-shot score of 71.9% on the test set, which indicates the effectiveness of our approach in creating a more rigorous and contamination-free evaluation standard. The GitHub repository is available at https://github.com/microsoft/MMLU-CF and the dataset is available at https://huggingface.co/datasets/microsoft/MMLU-CF.

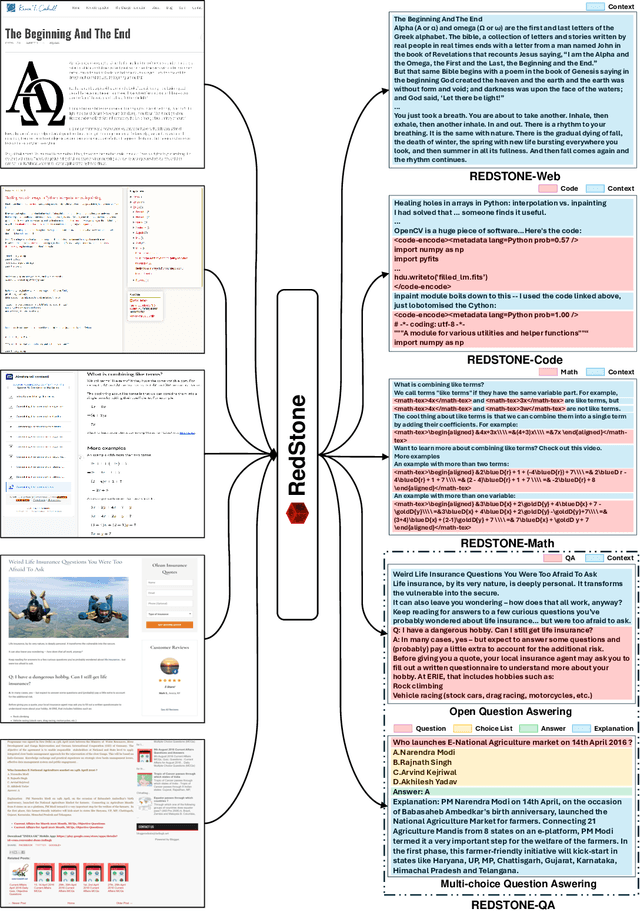

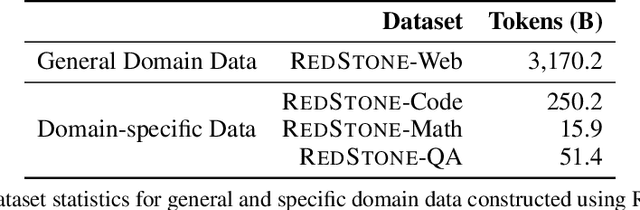

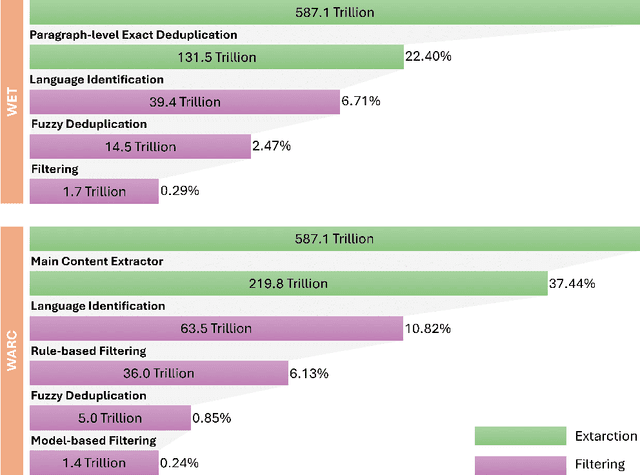

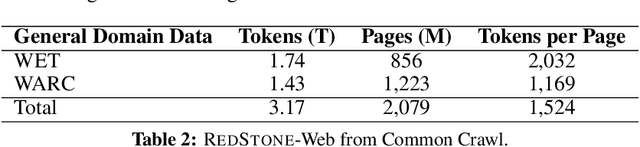

RedStone: Curating General, Code, Math, and QA Data for Large Language Models

Dec 04, 2024

Abstract:Pre-training Large Language Models (LLMs) on high-quality, meticulously curated datasets is widely recognized as critical for enhancing their performance and generalization capabilities. This study explores the untapped potential of Common Crawl as a comprehensive and flexible resource for pre-training LLMs, addressing both general-purpose language understanding and specialized domain knowledge. We introduce RedStone, an innovative and scalable pipeline engineered to extract and process data from Common Crawl, facilitating the creation of extensive and varied pre-training datasets. Unlike traditional datasets, which often require expensive curation and domain-specific expertise, RedStone leverages the breadth of Common Crawl to deliver datasets tailored to a wide array of domains. In this work, we exemplify its capability by constructing pre-training datasets across multiple fields, including general language understanding, code, mathematics, and question-answering tasks. The flexibility of RedStone allows for easy adaptation to other specialized domains, significantly lowering the barrier to creating valuable domain-specific datasets. Our findings demonstrate that Common Crawl, when harnessed through effective pipelines like RedStone, can serve as a rich, renewable source of pre-training data, unlocking new avenues for domain adaptation and knowledge discovery in LLMs. This work also underscores the importance of innovative data acquisition strategies and highlights the role of web-scale data as a powerful resource in the continued evolution of LLMs. RedStone code and data samples will be publicly available at https://aka.ms/redstone.

CTISum: A New Benchmark Dataset For Cyber Threat Intelligence Summarization

Aug 13, 2024

Abstract:The Cyber Threat Intelligence (CTI) summarization task requires the system to generate concise and accurate highlights from raw intelligence data, which plays an important role in providing decision-makers with crucial information to quickly detect and respond to cyber threats in the cybersecurity domain. However, efficient techniques for summarizing CTI reports, including facts, analytical insights, attack processes, etc., have largely been unexplored, primarily due to the lack of available datasets. To this end, we present CTISum, a new benchmark for the CTI summarization task. Considering the importance of the attack process, a novel fine-grained subtask of attack process summarization is proposed to enable defenders to assess risk, identify security gaps, vulnerabilities, and so on. Specifically, we first design a multi-stage annotation pipeline to gather and annotate the CTI data, and then benchmark CTISum with a collection of extractive and abstractive summarization methods. Experimental results show that current state-of-the-art models exhibit limitations when applied to CTISum, underscoring the fact that automatically producing concise summaries of CTI reports remains an open research challenge.

Inference Attacks in Machine Learning as a Service: A Taxonomy, Review, and Promising Directions

Jun 04, 2024

Abstract:The rise of machine learning has also raised concerns about data privacy. Among privacy threats, inference attacks can cause privacy breaches in various MLaaS scenarios and model training/prediction phases. Specifically, inference attacks can perform privacy inference on undisclosed target training sets based on outputs of the target model, including but not limited to statistics, membership, semantics, data representation, etc. For instance, an attacker may infer whether the target data has the characteristics of AIDS. In addition, the rapid development of the machine learning community in recent years, especially the surge of model types and application scenarios, has further stimulated research on inference attacks. Thus, studying inference attacks and analyzing them in depth is urgent and significant. However, there is still a gap in the systematic discussion of inference attacks from taxonomy, global perspective, attack, and defense perspectives. This survey provides an in-depth and comprehensive review of inference attacks and corresponding countermeasures in ML-as-a-service based on taxonomy and the latest research. Without compromising researchers' intuition, we first propose the 3MP taxonomy based on the community research status, trying to normalize the confusing naming system of inference attacks. Also, we analyze the pros and cons of each type of inference attack, their workflow, countermeasures, and how they interact with other attacks. In the end, we point out several promising directions for researchers from a more comprehensive and novel perspective.
