Prototype-supervised Adversarial Network for Targeted Attack of Deep Hashing

May 17, 2021 · Xunguang Wang, Zheng Zhang, Baoyuan Wu, Fumin Shen, Guangming Lu

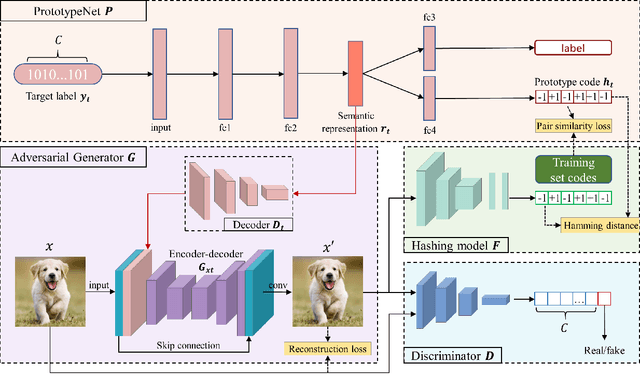

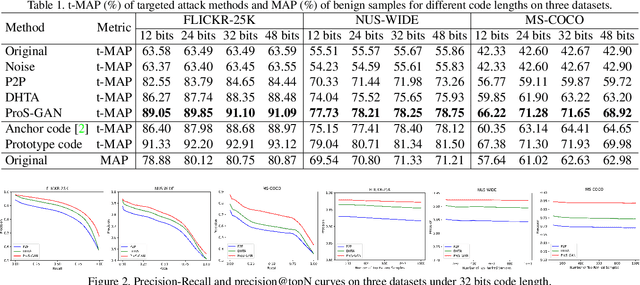

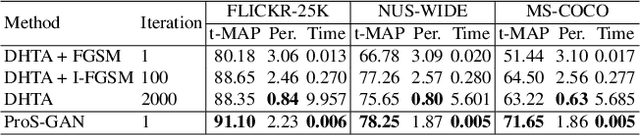

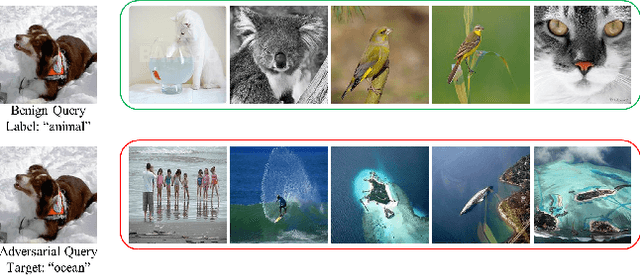

Owing to its powerful representation learning and highly efficient computation, deep hashing has made significant progress in large-scale image retrieval. However, deep hashing networks are vulnerable to adversarial examples, a practical security problem that has seldom been studied in the hashing-based retrieval field. In this paper, we propose a novel prototype-supervised adversarial network (ProS-GAN), which formulates a flexible generative architecture for efficient and effective targeted hashing attack. To the best of our knowledge, this is the first generation-based method to attack deep hashing networks. Our proposed framework consists of three parts: a PrototypeNet, a generator, and a discriminator. Specifically, the designed PrototypeNet embeds the target label into the semantic representation and learns the prototype code as the category-level representative of the target label. The semantic representation and the original image are then jointly fed into the generator for a flexible targeted attack. In particular, the prototype code supervises the generator to construct the targeted adversarial example by minimizing the Hamming distance between the hash code of the adversarial example and the prototype code. Furthermore, the generator is trained against the discriminator to simultaneously encourage the adversarial examples to be visually realistic and the semantic representation to be informative. Extensive experiments verify that the proposed framework can efficiently produce adversarial examples with better targeted attack performance and transferability than state-of-the-art targeted attack methods for deep hashing. The related code is available at https://github.com/xunguangwang/ProS-GAN.
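
The Hamming-distance supervision mentioned in the abstract above can be illustrated with a minimal sketch. It assumes K-bit hash codes with entries in {-1, +1}, for which the Hamming distance equals (K - inner product) / 2. The function name and the sample codes are hypothetical and are not taken from the ProS-GAN repository.

```python
def hamming_distance(code_a, code_b):
    """Hamming distance between two equal-length codes with entries in {-1, +1}."""
    if len(code_a) != len(code_b):
        raise ValueError("codes must have the same length")
    inner = sum(a * b for a, b in zip(code_a, code_b))
    # Agreeing bits contribute +1 and disagreeing bits -1, so inner = K - 2 * distance.
    return (len(code_a) - inner) // 2


# Hypothetical 8-bit codes, for illustration only.
prototype_code = [1, -1, 1, 1, -1, -1, 1, -1]
adversarial_code = [1, -1, -1, 1, -1, 1, 1, -1]
distance = hamming_distance(adversarial_code, prototype_code)
```

A targeted attack in this setting would try to drive `distance` toward zero; in practice a differentiable surrogate of the inner product is typically optimized, since the sign function has no useful gradient.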

Breaking Shortcut: Exploring Fully Convolutional Cycle-Consistency for Video Correspondence Learning

May 12, 2021 · Yansong Tang, Zhenyu Jiang, Zhenda Xie, Yue Cao, Zheng Zhang, Philip H. S. Torr, Han Hu

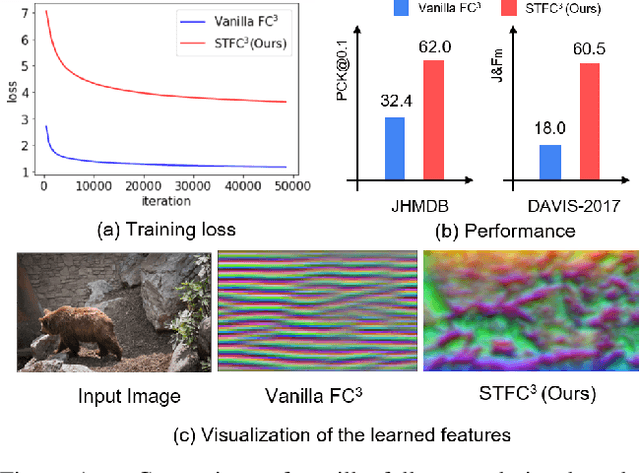

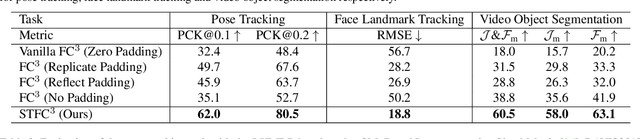

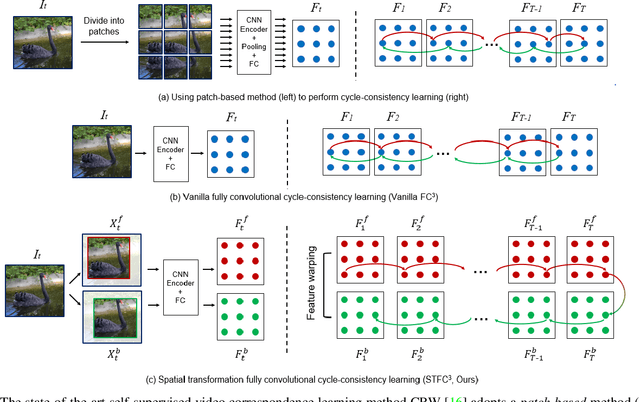

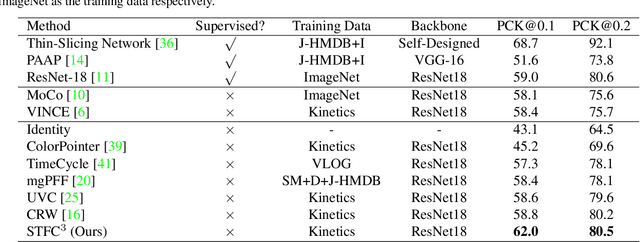

Previous cycle-consistency correspondence learning methods usually leverage image patches for training. In this paper, we present a fully convolutional method, which is simpler and more consistent with the inference process. Because directly applying fully convolutional training results in model collapse, we study the underlying reason for this collapse and find that the absolute positions of pixels provide a shortcut for easily achieving cycle-consistency, which hinders the learning of meaningful visual representations. To break this absolute position shortcut, we propose to apply different crops to forward and backward frames, and to adopt feature warping to establish correspondence between two crops of the same frame. The former technique forces corresponding pixels on the forward and backward tracks to have different absolute positions, and the latter effectively blocks the shortcuts between the forward and backward tracks. On three label propagation benchmarks, for pose tracking, face landmark tracking, and video object segmentation, our method substantially improves on the vanilla fully convolutional cycle-consistency method and achieves very competitive performance compared with state-of-the-art self-supervised approaches.

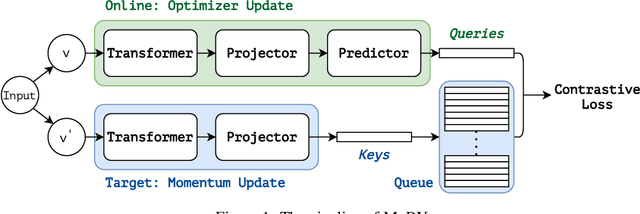

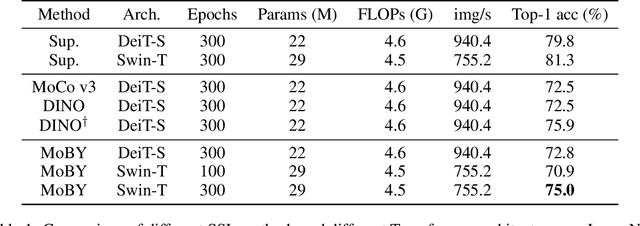

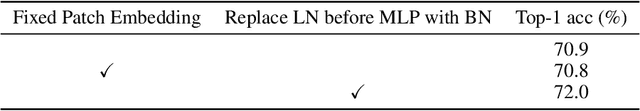

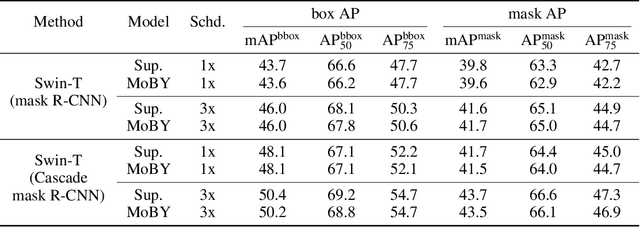

Self-Supervised Learning with Swin Transformers

May 11, 2021 · Zhenda Xie, Yutong Lin, Zhuliang Yao, Zheng Zhang, Qi Dai, Yue Cao, Han Hu

We are witnessing a modeling shift from CNNs to Transformers in computer vision. In this work, we present a self-supervised learning approach called MoBY, with Vision Transformers as its backbone architecture. The approach contains essentially no new inventions: it combines MoCo v2 and BYOL and is tuned to achieve reasonably high accuracy on ImageNet-1K linear evaluation, namely 72.8% and 75.0% top-1 accuracy using DeiT-S and Swin-T, respectively, with 300-epoch training. The performance is slightly better than that of the recent MoCo v3 and DINO, which adopt DeiT as the backbone, but with much lighter tricks. More importantly, the general-purpose Swin Transformer backbone enables us to also evaluate the learnt representations on downstream tasks such as object detection and semantic segmentation, in contrast to a few recent approaches built on ViT/DeiT, which report only linear evaluation results on ImageNet-1K because ViT/DeiT have not been tamed for these dense prediction tasks. We hope our results can facilitate more comprehensive evaluation of self-supervised learning methods designed for Transformer architectures. Our code and models are available at https://github.com/SwinTransformer/Transformer-SSL, which will be continually enriched.
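
Both MoCo v2 and BYOL, which the abstract above says MoBY combines, keep a slowly updated target (key) encoder as an exponential moving average of the online encoder. The sketch below shows only that update on plain lists of floats. The function name, the flat parameter lists, and the momentum value are assumptions for illustration and are not taken from the Transformer-SSL repository.

```python
def momentum_update(online_params, target_params, momentum=0.99):
    """Move the target parameters toward the online ones by an exponential moving average."""
    if not 0.0 <= momentum <= 1.0:
        raise ValueError("momentum must lie in [0, 1]")
    if len(online_params) != len(target_params):
        raise ValueError("parameter lists must have the same length")
    return [
        momentum * target + (1.0 - momentum) * online
        for online, target in zip(online_params, target_params)
    ]


# Hypothetical two-parameter "encoders", for illustration only.
online = [1.0, 2.0]
target = [0.0, 0.0]
target = momentum_update(online, target, momentum=0.9)
```

With a momentum close to 1, the target encoder changes slowly, which keeps the training targets stable while the online encoder is updated by gradient descent.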

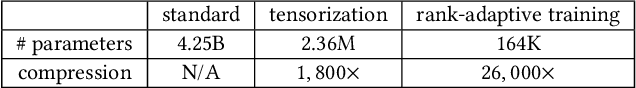

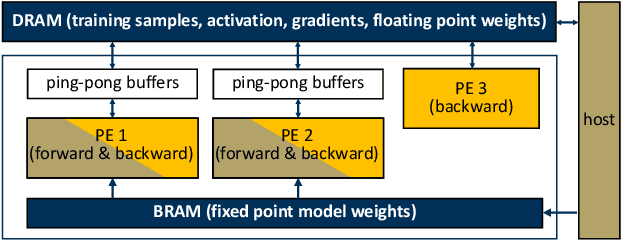

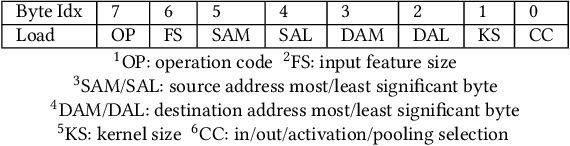

3U-EdgeAI: Ultra-Low Memory Training, Ultra-Low Bitwidth Quantization, and Ultra-Low Latency Acceleration

May 11, 2021 · Yao Chen, Cole Hawkins, Kaiqi Zhang, Zheng Zhang, Cong Hao

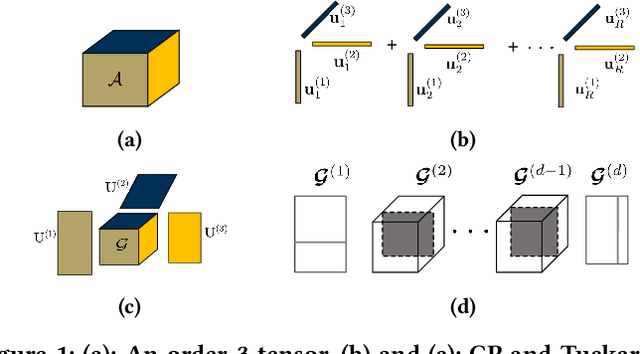

Deep neural network (DNN) based AI applications on the edge require both low-cost computing platforms and high-quality services. However, the limited memory, computing resources, and power budget of edge devices constrain the effectiveness of DNN algorithms, and developing edge-oriented AI algorithms and implementations (e.g., accelerators) is challenging. In this paper, we summarize our recent efforts toward efficient on-device AI development from three aspects, covering both training and inference. First, we present on-device training with ultra-low memory usage: we propose a novel rank-adaptive tensorized neural network model, which offers orders-of-magnitude memory reduction during training. Second, we introduce an ultra-low bitwidth quantization method for DNN model compression, achieving state-of-the-art accuracy under the same compression ratio. Third, we introduce an ultra-low latency DNN accelerator design that follows the software/hardware co-design methodology. This paper emphasizes the importance and efficacy of training, quantization, and accelerator design, and calls for more research breakthroughs in AI on the edge.
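
To make the idea of low-bitwidth quantization concrete, here is a generic uniform symmetric quantizer. It is not the method proposed in the paper, whose details the abstract does not give; the function names, the 2-bit setting, and the sample weights are all assumptions for illustration.

```python
def quantize_symmetric(weights, bits=2):
    """Uniform symmetric quantization of floats to signed integer levels."""
    if bits < 2:
        raise ValueError("this sketch needs at least 2 bits")
    qmax = 2 ** (bits - 1) - 1  # largest magnitude level, e.g. 1 for 2 bits
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest level, then clip to [-qmax, qmax].
    levels = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return levels, scale


def dequantize(levels, scale):
    """Map integer levels back to approximate float weights."""
    return [q * scale for q in levels]


# Hypothetical weights, for illustration only.
weights = [0.6, -1.0, 0.2, 0.9]
levels, scale = quantize_symmetric(weights, bits=2)
restored = dequantize(levels, scale)
```

At 2 bits this scheme keeps only the levels -1, 0, and +1 times a shared scale, which shows why accuracy at such bitwidths depends heavily on how the quantizer is designed and trained.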

Group-Free 3D Object Detection via Transformers

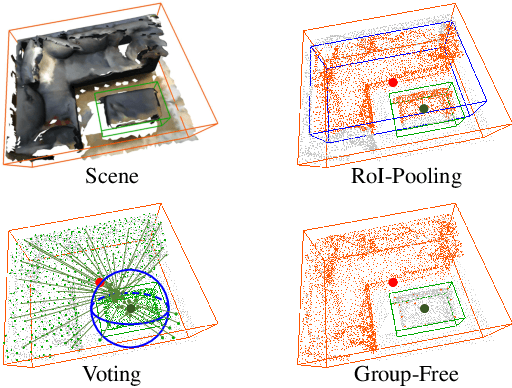

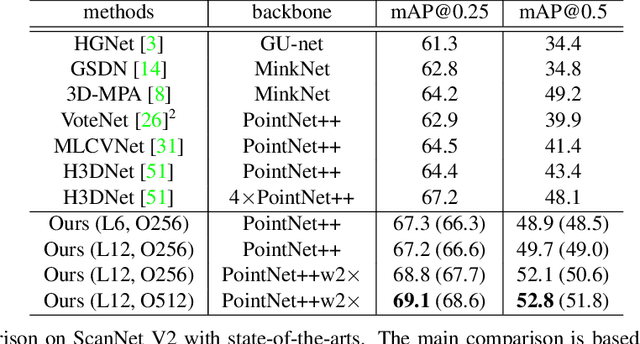

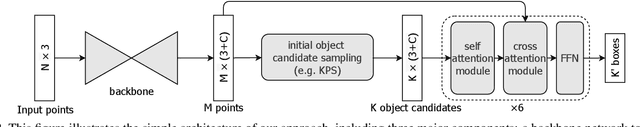

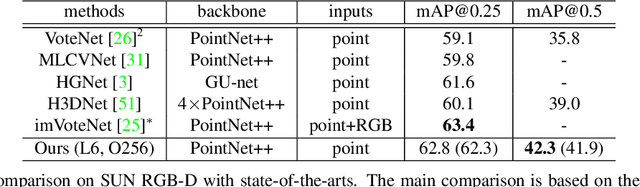

Apr 23, 2021 · Ze Liu, Zheng Zhang, Yue Cao, Han Hu, Xin Tong

Recently, directly detecting 3D objects from 3D point clouds has received increasing attention. To extract object representations from an irregular point cloud, existing methods usually use a point grouping step to assign points to an object candidate, so that a PointNet-like network can derive object features from the grouped points. However, the inaccurate point assignments caused by the hand-crafted grouping scheme degrade the performance of 3D object detection. In this paper, we present a simple yet effective method for directly detecting 3D objects from the 3D point cloud. Instead of grouping local points for each object candidate, our method computes the feature of an object from all the points in the point cloud with the help of the attention mechanism in Transformers (Vaswani et al., 2017), where the contribution of each point is automatically learned during network training. With an improved attention stacking scheme, our method fuses object features from different stages and generates more accurate object detection results. With few bells and whistles, the proposed method achieves state-of-the-art 3D object detection performance on two widely used benchmarks, ScanNet V2 and SUN RGB-D. The code and models are publicly available at https://github.com/zeliu98/Group-Free-3D.
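
The idea of computing one object feature from all points, with learned per-point contributions, can be sketched as single-head scaled dot-product attention. This sketch assumes keys and values are the raw point features and omits the learned projections, multiple heads, and stacked stages a real model would use; the names and sample data are hypothetical and not taken from the Group-Free-3D repository.

```python
import math


def attend(query, point_features):
    """Pool all point features into one object feature using softmax attention weights."""
    dim = len(query)
    scores = [
        sum(q * k for q, k in zip(query, feat)) / math.sqrt(dim)
        for feat in point_features
    ]
    peak = max(scores)  # subtract the maximum for numerical stability
    exp_scores = [math.exp(s - peak) for s in scores]
    total = sum(exp_scores)
    weights = [e / total for e in exp_scores]
    pooled = [
        sum(w * feat[i] for w, feat in zip(weights, point_features))
        for i in range(dim)
    ]
    return pooled, weights


# Hypothetical object query and three 2-D point features.
object_query = [1.0, 0.0]
points = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
object_feature, point_weights = attend(object_query, points)
```

Every point receives a nonzero weight, so no hard assignment of points to candidates is needed; points that match the query simply contribute more.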

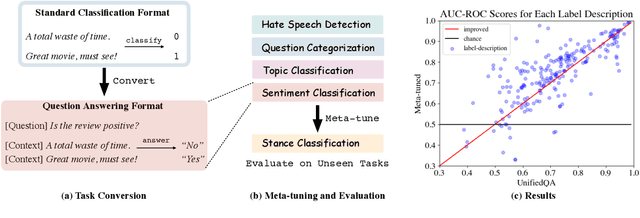

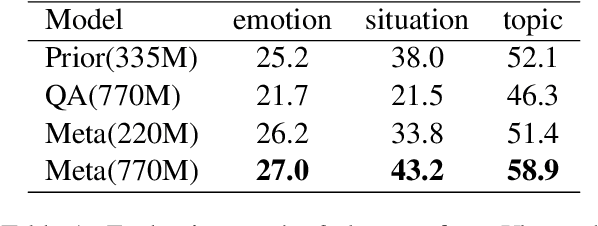

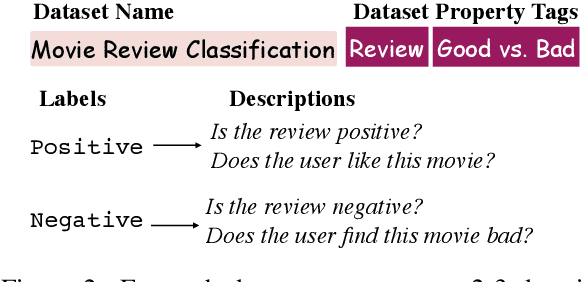

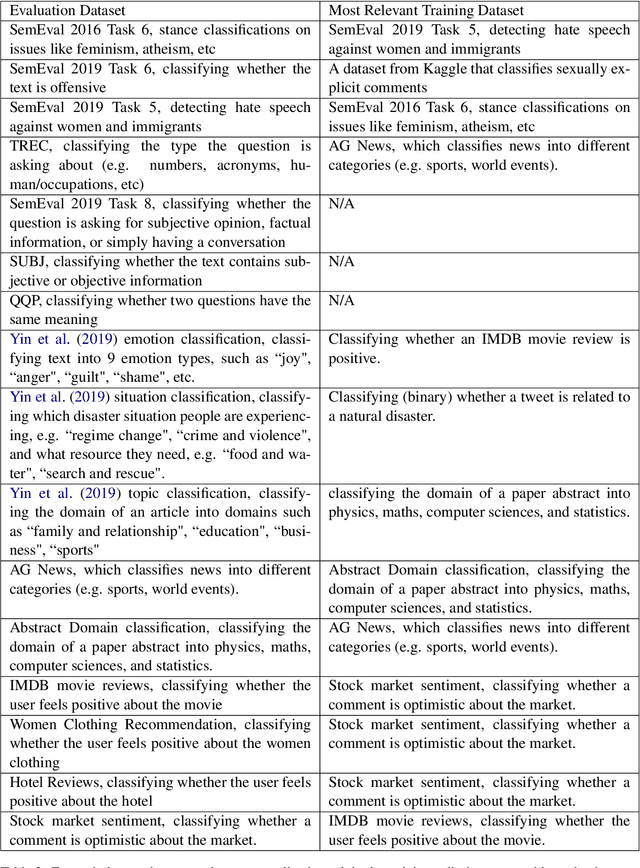

Meta-tuning Language Models to Answer Prompts Better

Apr 17, 2021 · Ruiqi Zhong, Kristy Lee, Zheng Zhang, Dan Klein

Large pretrained language models like GPT-3 have acquired a surprising ability to perform zero-shot classification (ZSC). For example, to classify review sentiments, we can "prompt" the language model with the review and the question "Is the review positive?" as the context, and ask it to predict whether the next word is "Yes" or "No". However, these models are not specialized for answering these prompts. To address this weakness, we propose meta-tuning, which trains the model to specialize in answering prompts but still generalize to unseen tasks. To create the training data, we aggregated 43 existing datasets, annotated 441 label descriptions in total, and unified them into the above question answering (QA) format. After meta-tuning, our model outperforms a same-sized QA model for most labels on unseen tasks, and we forecast that the performance would improve for even larger models. Therefore, measuring ZSC performance on non-specialized language models might underestimate their true capability, and community-wide efforts on aggregating datasets and unifying their formats can help build models that understand prompts better.
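
The prompting scheme described above can be sketched as follows. No language model is called here: the next-word scores for "Yes" and "No" are hypothetical placeholders, and the function names and the way the text and question are joined are assumptions for illustration.

```python
import math


def build_prompt(text, question):
    """Join the input text and a yes/no question into one context string."""
    return f"{text} {question}"


def probability_of_yes(yes_score, no_score):
    """Softmax over the two candidate next words, returning P('Yes')."""
    return 1.0 / (1.0 + math.exp(no_score - yes_score))


prompt = build_prompt("A wonderful, moving film.", "Is the review positive?")
# Hypothetical next-word scores; a real language model would compute them from `prompt`.
p_yes = probability_of_yes(yes_score=2.0, no_score=0.0)
label = "Yes" if p_yes >= 0.5 else "No"
```

Restricting the comparison to the two answer words turns any label description phrased as a question into a binary classifier, which is what allows many datasets to be unified into one format.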

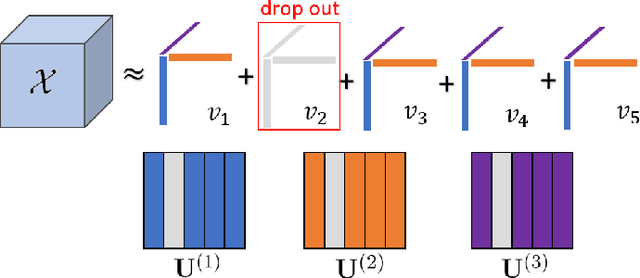

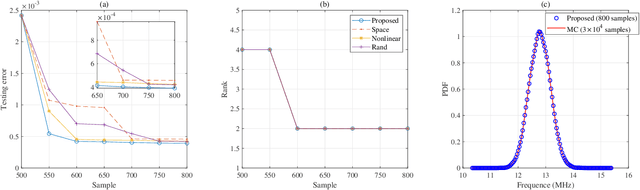

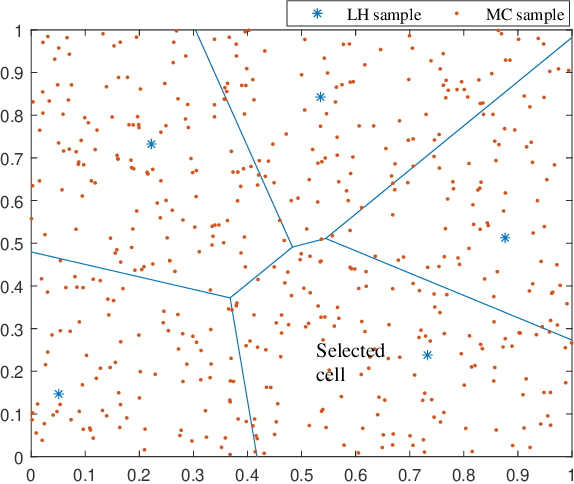

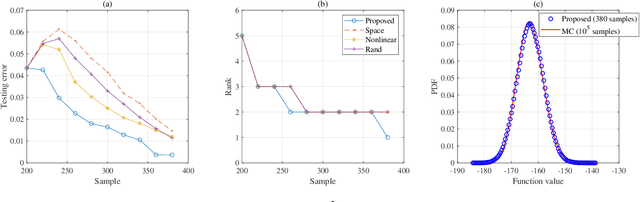

High-Dimensional Uncertainty Quantification via Rank- and Sample-Adaptive Tensor Regression

Mar 31, 2021 · Zichang He, Zheng Zhang

Fabrication process variations can significantly influence the performance and yield of nano-scale electronic and photonic circuits. Stochastic spectral methods have achieved great success in quantifying the impact of process variations, but they suffer from the curse of dimensionality. Recently, low-rank tensor methods have been developed to mitigate this issue, but two fundamental challenges remain open: how to automatically determine the tensor rank and how to adaptively pick informative simulation samples. This paper proposes a novel tensor regression method to address these two challenges. We use an $\ell_{q}/\ell_{2}$ group-sparsity regularization to determine the tensor rank. The resulting optimization problem can be solved efficiently via an alternating minimization solver. We also propose a two-stage adaptive sampling method to reduce the simulation cost. Our method balances exploration and exploitation via the estimated Voronoi cell volume and a nonlinearity measurement, respectively. The proposed model is verified on synthetic and realistic circuit benchmarks, on which our method accurately captures the uncertainty caused by 19 to 100 random variables with only 100 to 600 simulation samples.
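
One common way group sparsity can reveal a tensor rank is sketched below. It assumes a CP-style model in which group r collects column r of every factor matrix, takes the $\ell_2$ norm within each group, and sums the q-th powers across groups. The exact regularizer in the paper may differ; the function names, the value of q, the tolerance, and the sample factors are all assumptions.

```python
import math


def group_norms(factor_columns):
    """factor_columns[k][r] is column r of factor matrix k; returns one l2 norm per group r."""
    rank = len(factor_columns[0])
    norms = []
    for r in range(rank):
        squared = sum(x * x for factor in factor_columns for x in factor[r])
        norms.append(math.sqrt(squared))
    return norms


def group_sparsity_penalty(factor_columns, q=0.5):
    """Sum of q-th powers of the group norms (an assumed form of the regularizer)."""
    return sum(v ** q for v in group_norms(factor_columns))


def estimated_rank(factor_columns, tol=1e-8):
    """Count the groups that were not shrunk to (numerically) zero."""
    return sum(1 for v in group_norms(factor_columns) if v > tol)


# Hypothetical factors with two candidate rank-one terms; the second is all zeros.
factors = [
    [[3.0, 4.0], [0.0, 0.0]],
    [[12.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
]
norms = group_norms(factors)
rank = estimated_rank(factors)
```

Because the penalty acts on whole groups, minimizing it tends to zero out entire rank-one terms at once, and the number of surviving groups serves as the rank estimate.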

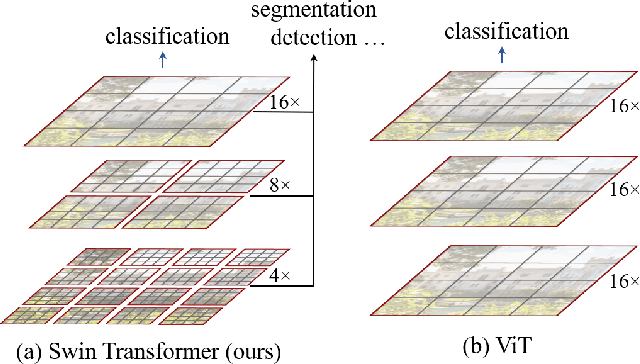

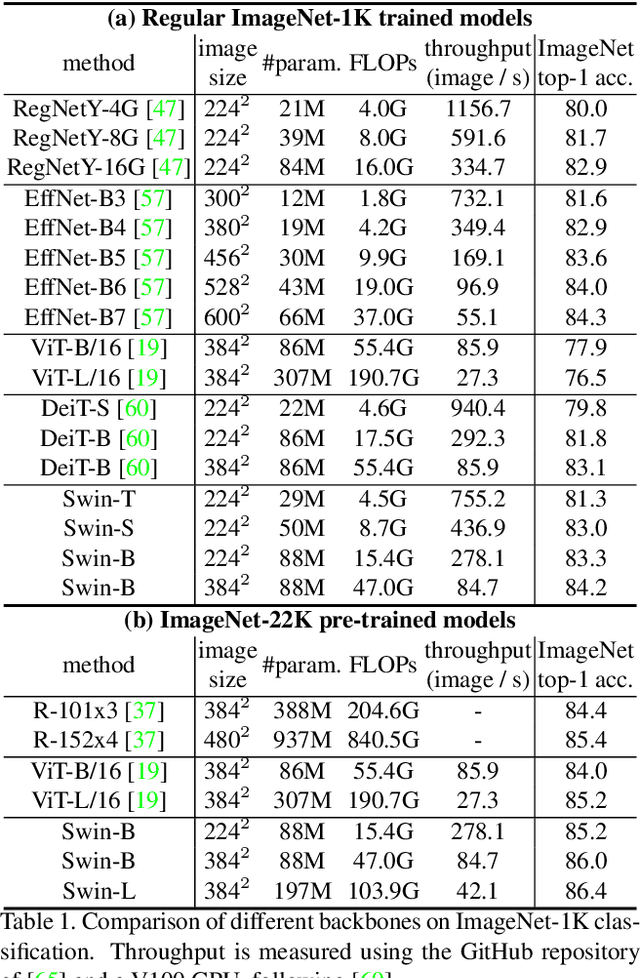

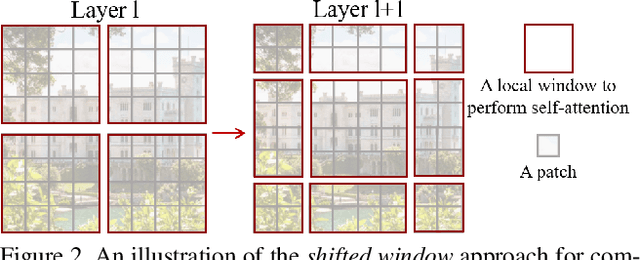

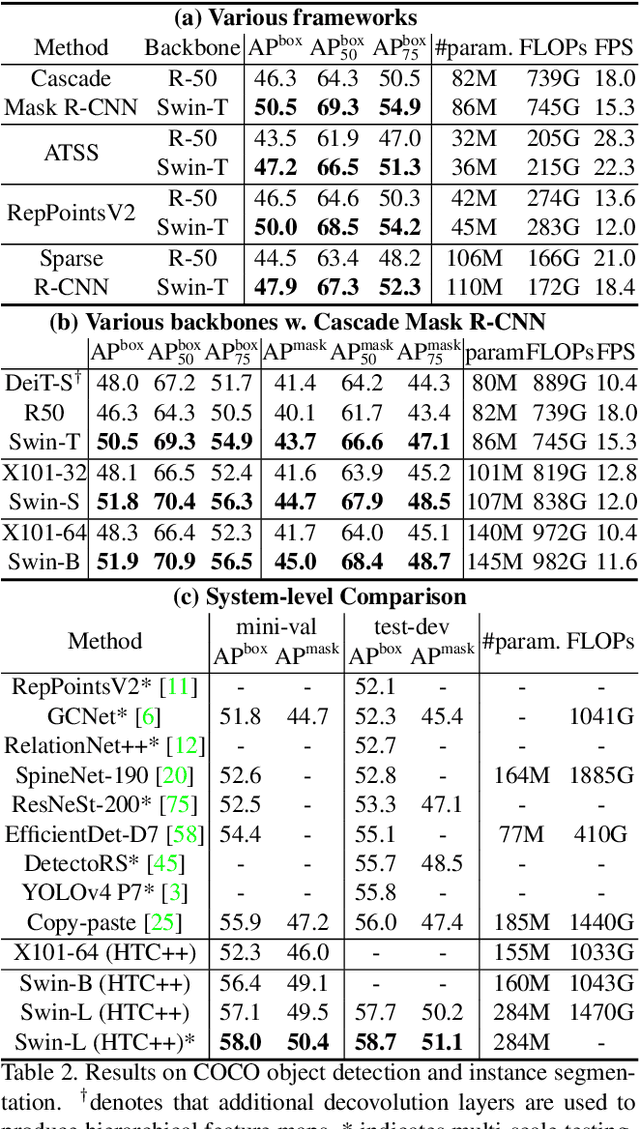

Swin Transformer: Hierarchical Vision Transformer using Shifted Windows

Mar 25, 2021 · Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo

This paper presents a new vision Transformer, called Swin Transformer, that capably serves as a general-purpose backbone for computer vision. Challenges in adapting Transformer from language to vision arise from differences between the two domains, such as large variations in the scale of visual entities and the high resolution of pixels in images compared to words in text. To address these differences, we propose a hierarchical Transformer whose representation is computed with shifted windows. The shifted windowing scheme brings greater efficiency by limiting self-attention computation to non-overlapping local windows while also allowing for cross-window connection. This hierarchical architecture has the flexibility to model at various scales and has linear computational complexity with respect to image size. These qualities of Swin Transformer make it compatible with a broad range of vision tasks, including image classification (86.4 top-1 accuracy on ImageNet-1K) and dense prediction tasks such as object detection (58.7 box AP and 51.1 mask AP on COCO test-dev) and semantic segmentation (53.5 mIoU on ADE20K val). Its performance surpasses the previous state-of-the-art by a large margin of +2.7 box AP and +2.6 mask AP on COCO, and +3.2 mIoU on ADE20K, demonstrating the potential of Transformer-based models as vision backbones. The code and models will be made publicly available at https://github.com/microsoft/Swin-Transformer.
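
The windowing scheme described above can be sketched on a small single-channel grid. The sketch assumes a cyclic shift toward the top-left by half the window size and counts query-key pairs as a rough proxy for attention cost; the function names and the 4x4 example are hypothetical and are not taken from the Swin-Transformer repository.

```python
def window_partition(grid, window):
    """Split an H x W grid into non-overlapping window x window blocks, row-major."""
    height, width = len(grid), len(grid[0])
    if height % window or width % window:
        raise ValueError("grid size must be divisible by the window size")
    return [
        [row[left:left + window] for row in grid[top:top + window]]
        for top in range(0, height, window)
        for left in range(0, width, window)
    ]


def cyclic_shift(grid, shift):
    """Roll the grid toward the top-left by `shift` cells, wrapping around the edges."""
    height, width = len(grid), len(grid[0])
    return [
        [grid[(i + shift) % height][(j + shift) % width] for j in range(width)]
        for i in range(height)
    ]


def attention_pairs(height, width, window=None):
    """Number of query-key pairs: global if window is None, else within local windows."""
    tokens = height * width
    per_token = tokens if window is None else window * window
    return tokens * per_token


grid = [[4 * i + j for j in range(4)] for i in range(4)]  # values 0..15
regular = window_partition(grid, 2)
shifted = window_partition(cyclic_shift(grid, 1), 2)  # shift = window // 2
```

With a fixed window size, `attention_pairs` grows linearly in the number of tokens, whereas global attention grows quadratically; the shifted partition mixes cells that sat in different windows of the regular partition, which provides the cross-window connection.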
