An Empirical Study of Training End-to-End Vision-and-Language Transformers

Nov 03, 2021 · Zi-Yi Dou, Yichong Xu, Zhe Gan, Jianfeng Wang, Shuohang Wang, Lijuan Wang, Chenguang Zhu, Nanyun Peng, Zicheng Liu, Michael Zeng

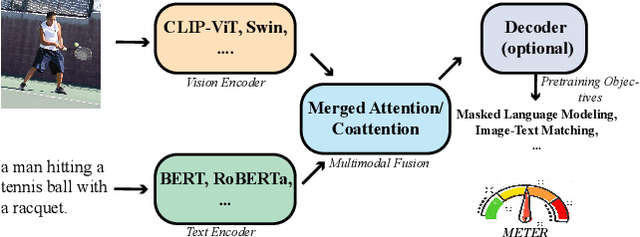

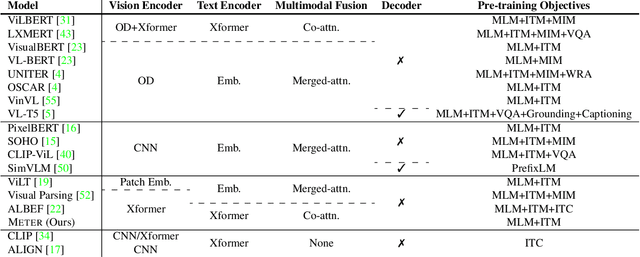

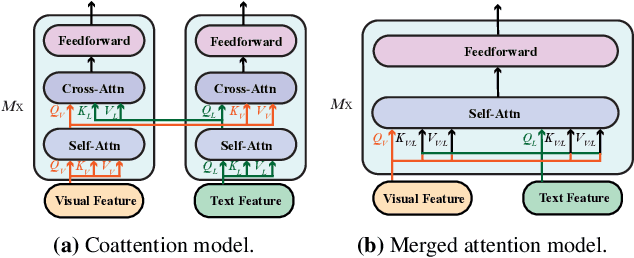

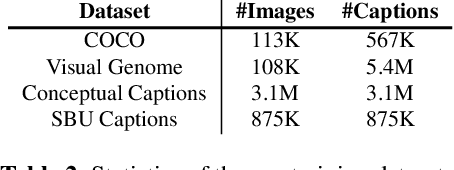

Vision-and-language (VL) pre-training has proven to be highly effective on various VL downstream tasks. While recent work has shown that fully transformer-based VL models can be more efficient than previous region-feature-based methods, their performance on downstream tasks is often degraded significantly. In this paper, we present METER (Multimodal End-to-end TransformER), through which we systematically investigate how to design and pre-train a fully transformer-based VL model in an end-to-end manner. Specifically, we dissect the model designs along multiple dimensions: vision encoders (e.g., CLIP-ViT, Swin transformer), text encoders (e.g., RoBERTa, DeBERTa), multimodal fusion (e.g., merged attention vs. co-attention), architecture design (e.g., encoder-only vs. encoder-decoder), and pre-training objectives (e.g., masked image modeling). We conduct comprehensive experiments on a wide range of VL tasks, and provide insights on how to train a performant VL transformer while maintaining fast inference speed. Notably, METER achieves an accuracy of 77.64% on the VQAv2 test-std set using only 4M images for pre-training, surpassing the state-of-the-art region-feature-based VinVL model by +1.04%, and outperforming the previous best fully transformer-based ALBEF model by +1.6%.

An Empirical Study of GPT-3 for Few-Shot Knowledge-Based VQA

Sep 10, 2021 · Zhengyuan Yang, Zhe Gan, Jianfeng Wang, Xiaowei Hu, Yumao Lu, Zicheng Liu, Lijuan Wang

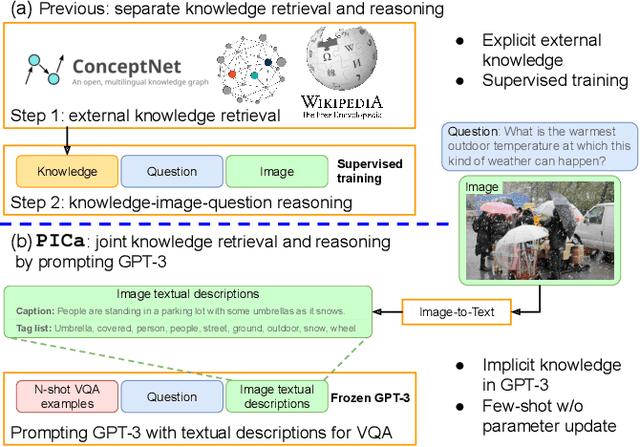

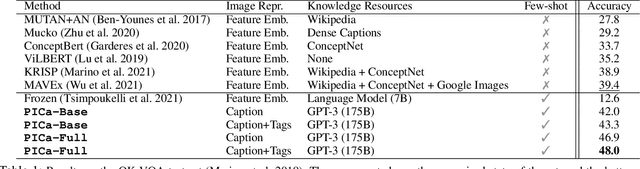

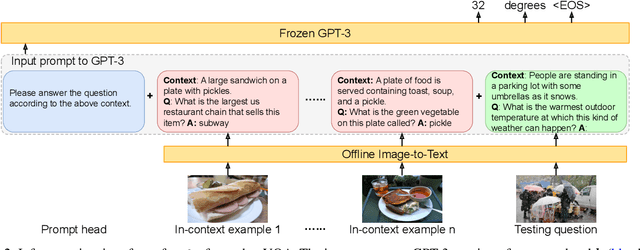

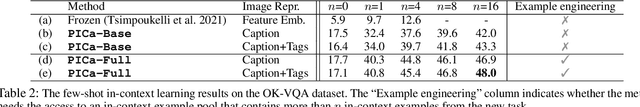

Knowledge-based visual question answering (VQA) involves answering questions that require external knowledge not present in the image. Existing methods first retrieve knowledge from external resources, then reason over the selected knowledge, the input image, and the question for answer prediction. However, this two-step approach could lead to mismatches that potentially limit the VQA performance. For example, the retrieved knowledge might be noisy and irrelevant to the question, and the re-embedded knowledge features during reasoning might deviate from their original meanings in the knowledge base (KB). To address this challenge, we propose PICa, a simple yet effective method that Prompts GPT-3 via the use of Image Captions, for knowledge-based VQA. Inspired by GPT-3's power in knowledge retrieval and question answering, instead of using structured KBs as in previous work, we treat GPT-3 as an implicit and unstructured KB that can jointly acquire and process relevant knowledge. Specifically, we first convert the image into captions (or tags) that GPT-3 can understand, then adapt GPT-3 to solve the VQA task in a few-shot manner by just providing a few in-context VQA examples. We further boost performance by carefully investigating: (i) what text formats best describe the image content, and (ii) how in-context examples can be better selected and used. PICa unlocks the first use of GPT-3 for multimodal tasks. By using only 16 examples, PICa surpasses the supervised state of the art by an absolute +8.6 points on the OK-VQA dataset. We also benchmark PICa on VQAv2, where PICa also shows a decent few-shot performance.
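The caption-then-prompt idea in this abstract can be sketched as a small prompt builder. This is only an illustration under stated assumptions: the function name `build_prompt`, the header sentence, and the `Context:` / `Q:` / `A:` layout are hypothetical choices, not the template confirmed by the abstract, and the call to the language model itself is left out.

```python
from typing import List, Tuple

# Each in-context example is (caption, question, answer).
Example = Tuple[str, str, str]


def build_prompt(examples: List[Example], caption: str, question: str,
                 header: str = "Answer the question using the context.") -> str:
    """Assemble a few-shot VQA prompt from image captions (hypothetical layout)."""
    lines = [header, ""]
    for ex_caption, ex_question, ex_answer in examples:
        # One solved example per block: caption stands in for the image.
        lines += [f"Context: {ex_caption}", f"Q: {ex_question}", f"A: {ex_answer}", ""]
    # The test item ends with an open "A:" for the language model to complete.
    lines += [f"Context: {caption}", f"Q: {question}", "A:"]
    return "\n".join(lines)


shots = [
    ("A man riding a wave on a surfboard.", "What sport is this?", "surfing"),
    ("A red double-decker bus on a city street.", "Which city is known for these?", "london"),
]
prompt = build_prompt(shots, "A bowl of ramen with chopsticks.", "Which country is this dish from?")
print(prompt)
```

The text that the model generates after the final `A:` would be taken as the predicted answer; how the in-context examples are chosen is a separate design question that the abstract says the authors investigate.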

Simpler, Faster, Stronger: Breaking The log-K Curse On Contrastive Learners With FlatNCE

Jul 02, 2021 · Junya Chen, Zhe Gan, Xuan Li, Qing Guo, Liqun Chen, Shuyang Gao, Tagyoung Chung, Yi Xu, Belinda Zeng, Wenlian Lu, Fan Li, Lawrence Carin, Chenyang Tao

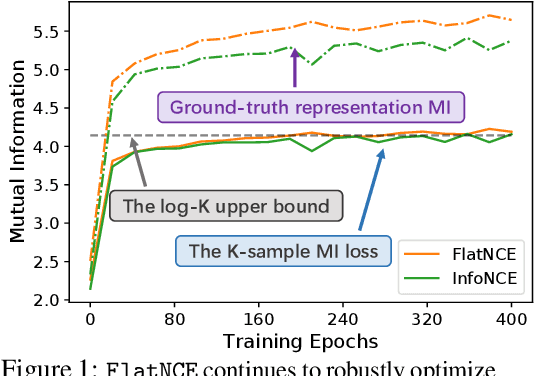

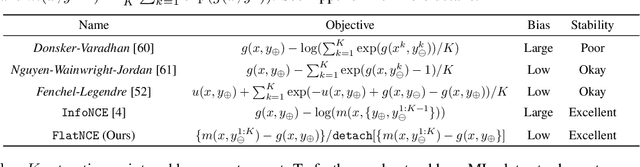

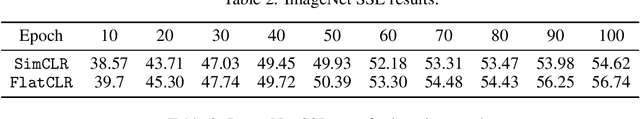

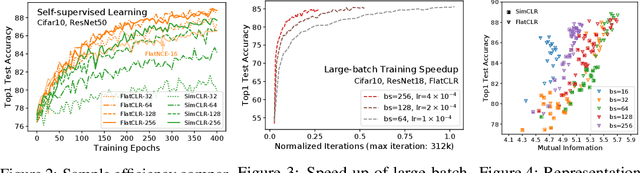

InfoNCE-based contrastive representation learners, such as SimCLR, have been tremendously successful in recent years. However, these contrastive schemes are notoriously resource demanding, as their effectiveness breaks down with small-batch training (i.e., the log-K curse, where K is the batch size). In this work, we reveal mathematically why contrastive learners fail in the small-batch-size regime, and present a novel, simple, non-trivial contrastive objective named FlatNCE, which fixes this issue. Unlike InfoNCE, our FlatNCE no longer explicitly appeals to a discriminative classification goal for contrastive learning. Theoretically, we show FlatNCE is the mathematical dual formulation of InfoNCE, thus bridging the classical literature on energy modeling; and empirically, we demonstrate that, with minimal modification of code, FlatNCE enables an immediate performance boost independent of the subject-matter engineering efforts. The significance of this work is furthered by the powerful generalization of contrastive learning techniques, and the introduction of new tools to monitor and diagnose contrastive training. We substantiate our claims with empirical evidence on CIFAR10, ImageNet, and other datasets, where FlatNCE consistently outperforms InfoNCE.
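One way to see the batch-size dependence mentioned above is to compute the standard InfoNCE mutual-information estimate by hand: each per-anchor term is a log-softmax value and therefore at most zero, so the estimate can never exceed log K. The sketch below assumes the usual form of the estimator with positives on the diagonal of a K-by-K score matrix; it illustrates the ceiling only and is not the FlatNCE objective, which the abstract does not spell out.

```python
import math
from typing import List


def logsumexp(xs: List[float]) -> float:
    """Numerically stable log(sum(exp(x))) for a list of floats."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def infonce_estimate(scores: List[List[float]]) -> float:
    """Standard InfoNCE estimate; scores[i][j] scores anchor i against candidate j.

    The positive pair for anchor i is assumed to sit on the diagonal, scores[i][i].
    """
    k = len(scores)
    # Each term is log-softmax of the positive, hence <= 0.
    per_anchor = [scores[i][i] - logsumexp(scores[i]) for i in range(k)]
    return sum(per_anchor) / k + math.log(k)


def near_perfect_scores(k: int, gap: float = 50.0) -> List[List[float]]:
    """A critic that separates positives from negatives by a large margin."""
    return [[gap if i == j else 0.0 for j in range(k)] for i in range(k)]


for k in (4, 64, 1024):
    est = infonce_estimate(near_perfect_scores(k))
    print(k, round(est, 4), round(math.log(k), 4))
```

Even with a near-perfect critic, the printed estimate only reaches log K, so a small batch caps how much information the objective can certify.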

Chasing Sparsity in Vision Transformers: An End-to-End Exploration

Jun 09, 2021 · Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Vision transformers (ViTs) have recently received explosive popularity, but their enormous model sizes and training costs remain daunting. Conventional post-training pruning often incurs higher training budgets. In contrast, this paper aims to trim down both the training memory overhead and the inference complexity, without sacrificing the achievable accuracy. We launch and report the first-of-its-kind comprehensive exploration of a unified approach to integrating sparsity in ViTs "from end to end". Specifically, instead of training full ViTs, we dynamically extract and train sparse subnetworks, while sticking to a fixed small parameter budget. Our approach jointly optimizes model parameters and explores connectivity throughout training, ending up with one sparse network as the final output. The approach is seamlessly extended from unstructured to structured sparsity, the latter by guiding the prune-and-grow of self-attention heads inside ViTs. For additional efficiency gains, we further co-explore data and architecture sparsity by plugging in a novel learnable token selector that adaptively determines the currently most vital patches. Extensive results on ImageNet with diverse ViT backbones validate the effectiveness of our proposals, which obtain significantly reduced computational cost and almost unimpaired generalization. Perhaps most surprisingly, we find that the proposed sparse (co-)training can even improve the ViT accuracy rather than compromising it, making sparsity a tantalizing "free lunch". For example, our sparsified DeiT-Small at (5%, 50%) sparsity for (data, architecture) improves top-1 accuracy by 0.28% while enjoying 49.32% FLOPs and 4.40% running time savings. Our code is available at https://github.com/VITA-Group/SViTE.
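The fixed-budget "prune-and-grow" idea can be sketched as a single connectivity update over a flat list of weights. The criteria used here (prune the smallest-magnitude active weights, grow the inactive weights with the largest gradient magnitude) are assumptions borrowed from common dynamic sparse training practice; the abstract does not state which criteria the authors use, and `prune_and_grow` is a hypothetical name.

```python
from typing import List


def prune_and_grow(weights: List[float], grads: List[float],
                   mask: List[bool], n_swap: int) -> List[bool]:
    """One connectivity update that keeps the number of active weights fixed."""
    active = [i for i, m in enumerate(mask) if m]
    inactive = [i for i, m in enumerate(mask) if not m]
    n_swap = min(n_swap, len(active), len(inactive))

    # Prune: deactivate the active weights with the smallest magnitude (assumed criterion).
    to_prune = sorted(active, key=lambda i: abs(weights[i]))[:n_swap]
    # Grow: activate previously inactive weights with the largest gradient magnitude
    # (assumed criterion). Just-pruned weights are not candidates for regrowth.
    to_grow = sorted(inactive, key=lambda i: abs(grads[i]), reverse=True)[:n_swap]

    new_mask = list(mask)
    for i in to_prune:
        new_mask[i] = False
    for i in to_grow:
        new_mask[i] = True
    return new_mask


weights = [0.9, -0.05, 0.4, 0.0, 0.0, 0.0]
grads = [0.1, 0.2, 0.1, 0.7, -0.9, 0.05]
mask = [True, True, True, False, False, False]

new_mask = prune_and_grow(weights, grads, mask, n_swap=1)
print(new_mask, sum(new_mask) == sum(mask))
```

Because every pruned connection is matched by one grown connection, the parameter budget stays constant while the connectivity is explored during training.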

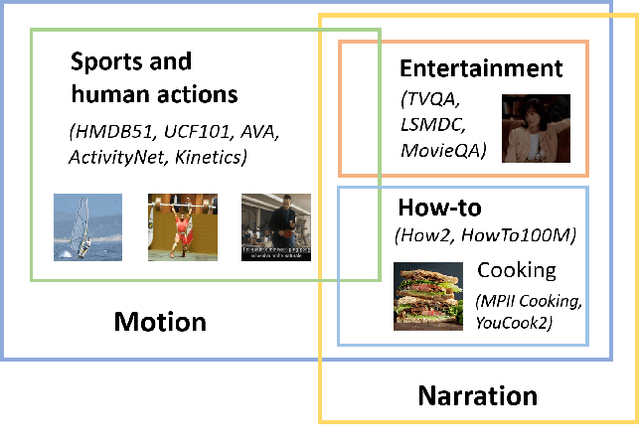

VALUE: A Multi-Task Benchmark for Video-and-Language Understanding Evaluation

Jun 08, 2021 · Linjie Li, Jie Lei, Zhe Gan, Licheng Yu, Yen-Chun Chen, Rohit Pillai, Yu Cheng, Luowei Zhou, Xin Eric Wang, William Yang Wang, Tamara Lee Berg, Mohit Bansal, Jingjing Liu, Lijuan Wang, Zicheng Liu

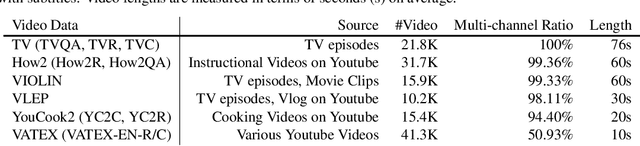

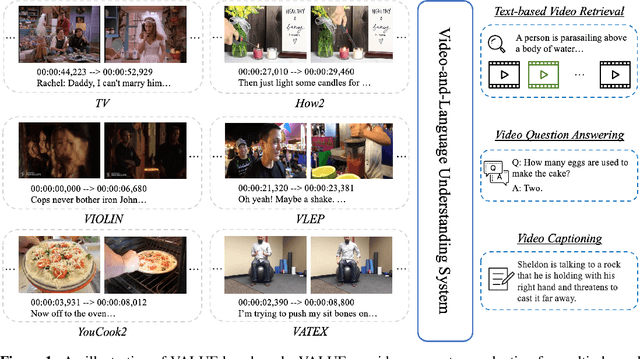

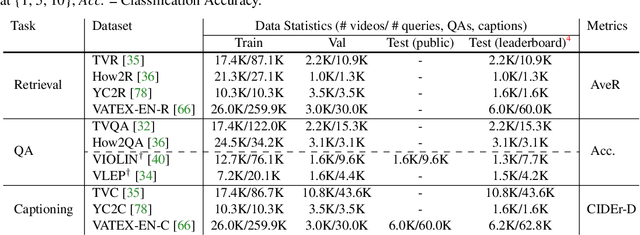

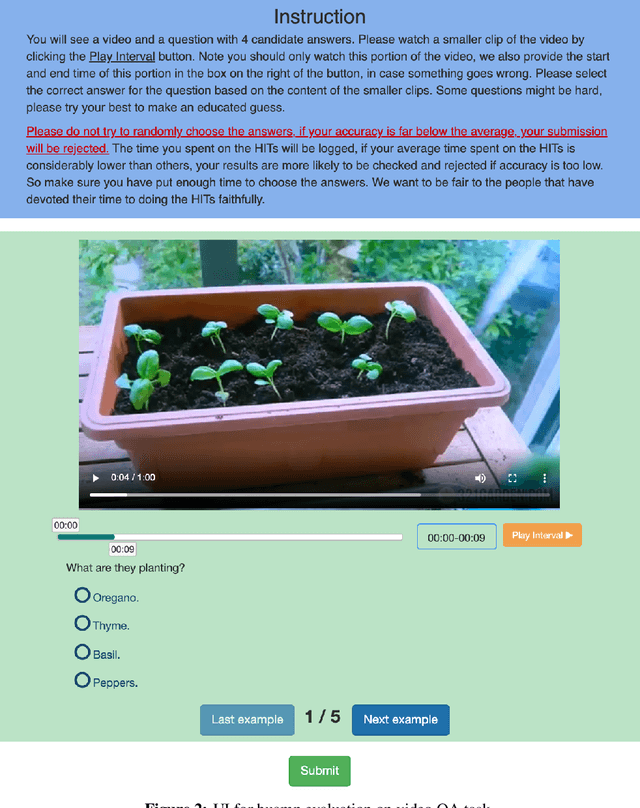

Most existing video-and-language (VidL) research focuses on a single dataset, or multiple datasets of a single task. In reality, a truly useful VidL system is expected to be easily generalizable to diverse tasks, domains, and datasets. To facilitate the evaluation of such systems, we introduce the Video-And-Language Understanding Evaluation (VALUE) benchmark, an assemblage of 11 VidL datasets over 3 popular tasks: (i) text-to-video retrieval; (ii) video question answering; and (iii) video captioning. The VALUE benchmark aims to cover a broad range of video genres, video lengths, data volumes, and task difficulty levels. Rather than focusing on single-channel videos with visual information only, VALUE promotes models that leverage information from both video frames and their associated subtitles, as well as models that share knowledge across multiple tasks. We evaluate various baseline methods with and without large-scale VidL pre-training, and systematically investigate the impact of video input channels, fusion methods, and different video representations. We also study the transferability between tasks, and conduct multi-task learning under different settings. The significant gap between our best model and human performance calls for future study of advanced VidL models. VALUE is available at https://value-leaderboard.github.io/.

Chasing Sparsity in Vision Transformers: An End-to-End Exploration

Jun 08, 2021 · Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Vision transformers (ViTs) have recently received explosive popularity, but their enormous model sizes and training costs remain daunting. Conventional post-training pruning often incurs higher training budgets. In contrast, this paper aims to trim down both the training memory overhead and the inference complexity, without sacrificing the achievable accuracy. We launch and report the first-of-its-kind comprehensive exploration of a unified approach to integrating sparsity in ViTs "from end to end". Specifically, instead of training full ViTs, we dynamically extract and train sparse subnetworks, while sticking to a fixed small parameter budget. Our approach jointly optimizes model parameters and explores connectivity throughout training, ending up with one sparse network as the final output. The approach is seamlessly extended from unstructured to structured sparsity, the latter by guiding the prune-and-grow of self-attention heads inside ViTs. For additional efficiency gains, we further co-explore data and architecture sparsity by plugging in a novel learnable token selector that adaptively determines the currently most vital patches. Extensive results validate the effectiveness of our proposals on ImageNet with diverse ViT backbones. For instance, at 40% structured sparsity, our sparsified DeiT-Base can achieve a 0.42% accuracy gain, with 33.13% FLOPs and 24.70% running time savings, compared to its dense counterpart. Perhaps most surprisingly, we find that the proposed sparse (co-)training can even improve the ViT accuracy rather than compromising it, making sparsity a tantalizing "free lunch". For example, our sparsified DeiT-Small at (5%, 50%) sparsity for (data, architecture) improves top-1 accuracy by 0.28% while enjoying 49.32% FLOPs and 4.40% running time savings.

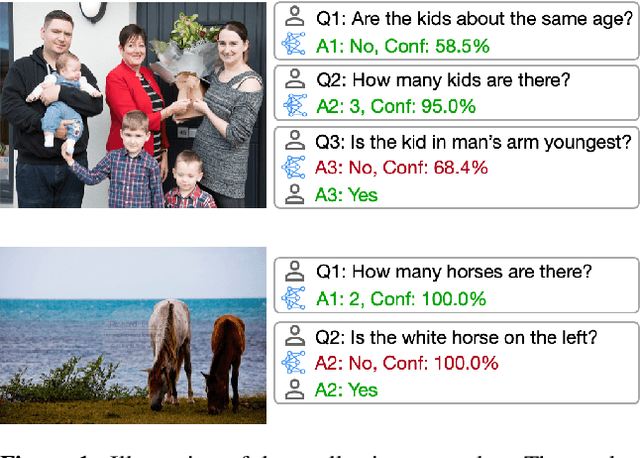

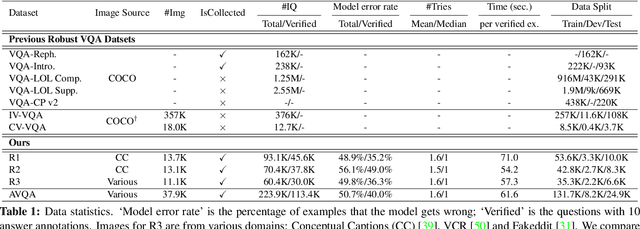

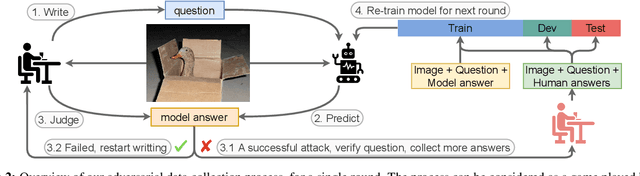

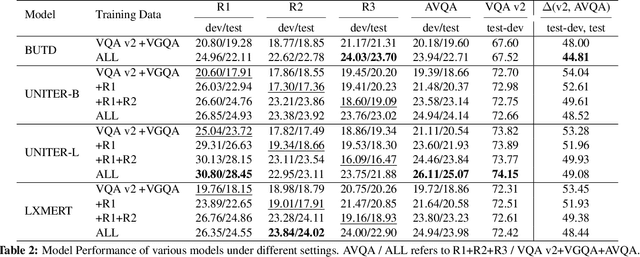

Adversarial VQA: A New Benchmark for Evaluating the Robustness of VQA Models

Jun 01, 2021 · Linjie Li, Jie Lei, Zhe Gan, Jingjing Liu

With large-scale pre-training, the past two years have witnessed a significant performance boost on the Visual Question Answering (VQA) task. Though rapid progress has been made, it remains unclear whether these state-of-the-art (SOTA) VQA models are robust when encountering test examples in the wild. To study this, we introduce Adversarial VQA, a new large-scale VQA benchmark, collected iteratively via an adversarial human-and-model-in-the-loop procedure. Through this new benchmark, we present several interesting findings. (i) Surprisingly, during dataset collection, we find that non-expert annotators can successfully attack SOTA VQA models with relative ease. (ii) We test a variety of SOTA VQA models on our new dataset to highlight their fragility, and find that both large-scale pre-trained models and adversarial training methods can only achieve far lower performance than what they can achieve on the standard VQA v2 dataset. (iii) When considered as data augmentation, our dataset can be used to improve the performance on other robust VQA benchmarks. (iv) We present a detailed analysis of the dataset, providing valuable insights on the challenges it brings to the community. We hope Adversarial VQA can serve as a valuable benchmark that will be used by future work to test the robustness of its developed VQA models. Our dataset is publicly available at https://adversarialvqa.github.io/.

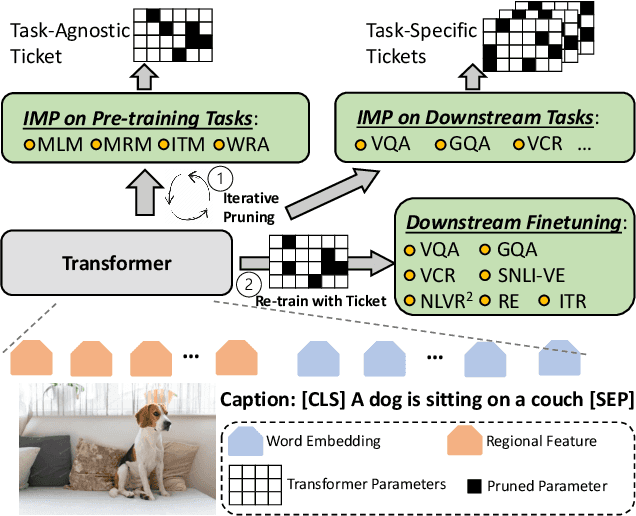

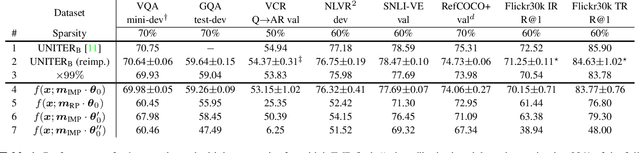

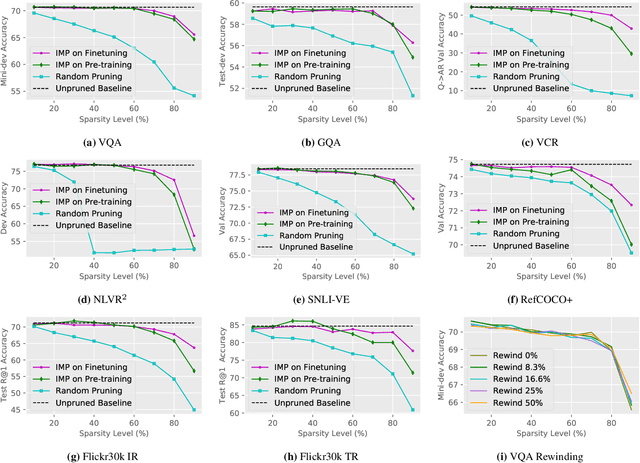

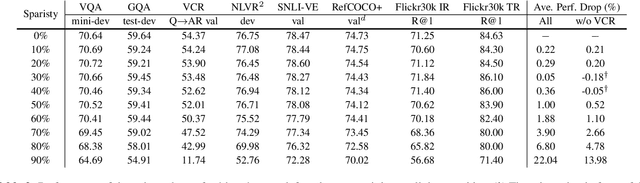

Playing Lottery Tickets with Vision and Language

Apr 23, 2021 · Zhe Gan, Yen-Chun Chen, Linjie Li, Tianlong Chen, Yu Cheng, Shuohang Wang, Jingjing Liu

Large-scale transformer-based pre-training has recently revolutionized vision-and-language (V+L) research. Models such as LXMERT, ViLBERT and UNITER have significantly lifted the state of the art over a wide range of V+L tasks. However, the large number of parameters in such models hinders their application in practice. In parallel, work on the lottery ticket hypothesis has shown that deep neural networks contain small matching subnetworks that can achieve on par or even better performance than the dense networks when trained in isolation. In this work, we perform the first empirical study to assess whether such trainable subnetworks also exist in pre-trained V+L models. We use UNITER, one of the best-performing V+L models, as the testbed, and consolidate 7 representative V+L tasks for experiments, including visual question answering, visual commonsense reasoning, visual entailment, referring expression comprehension, image-text retrieval, GQA, and NLVR². Through comprehensive analysis, we summarize our main findings as follows. (i) It is difficult to find subnetworks (i.e., the tickets) that strictly match the performance of the full UNITER model. However, it is encouraging to confirm that we can find "relaxed" winning tickets at 50%-70% sparsity that maintain 99% of the full accuracy. (ii) Subnetworks found by task-specific pruning transfer reasonably well to the other tasks, while those found on the pre-training tasks at 60%/70% sparsity transfer universally, matching 98%/96% of the full accuracy on average over all the tasks. (iii) Adversarial training can be further used to enhance the performance of the found lottery tickets.
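To make the sparsity levels above concrete, the sketch below builds a binary mask that removes a given fraction of weights. It assumes magnitude-based pruning, which is the usual criterion in lottery-ticket work but is not stated in this abstract; `magnitude_mask` is a hypothetical helper, and the full procedure of repeated pruning and retraining is not shown.

```python
from typing import List


def magnitude_mask(weights: List[float], sparsity: float) -> List[bool]:
    """Return a keep-mask that drops the `sparsity` fraction of smallest-magnitude weights."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(round(sparsity * len(weights)))
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [i not in pruned for i in range(len(weights))]


weights = [0.5, -0.1, 0.05, -0.9, 0.3, 0.02, -0.7, 0.15, 0.01, 0.2]
ticket = magnitude_mask(weights, sparsity=0.6)

# The candidate subnetwork keeps only the masked-in weights.
subnetwork = [w if keep else 0.0 for w, keep in zip(weights, ticket)]
print(sum(ticket), subnetwork)
```

At 60% sparsity only 4 of the 10 weights survive; whether such a subnetwork counts as a winning ticket depends on how close it gets to the dense model's accuracy after training.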

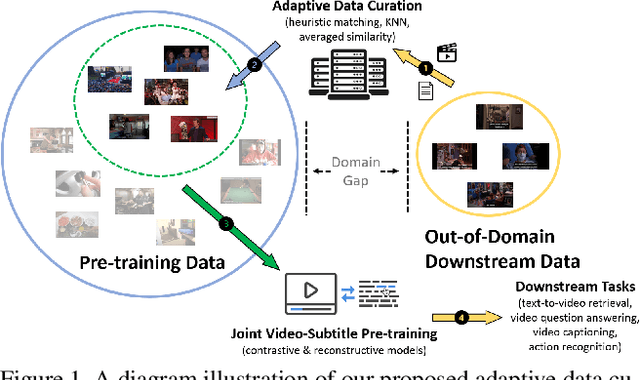

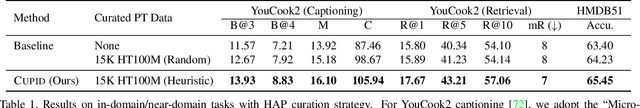

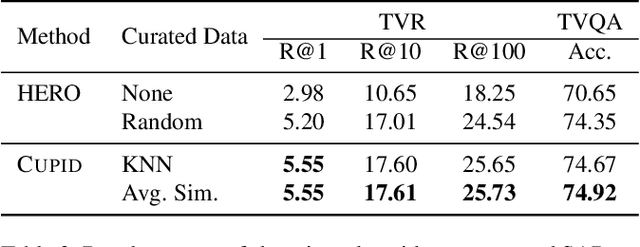

CUPID: Adaptive Curation of Pre-training Data for Video-and-Language Representation Learning

Apr 13, 2021 · Luowei Zhou, Jingjing Liu, Yu Cheng, Zhe Gan, Lei Zhang

This work concerns video-language pre-training and representation learning. In this now ubiquitous training scheme, a model first performs pre-training on paired videos and text (e.g., video clips and accompanying subtitles) from a large uncurated source corpus, before transferring to specific downstream tasks. This two-stage training process inevitably raises questions about the generalization ability of the pre-trained model, which are particularly pronounced when a salient domain gap exists between source and target data (e.g., instructional cooking videos vs. movies). In this paper, we first bring to light the sensitivity of pre-training objectives (contrastive vs. reconstructive) to domain discrepancy. Then, we propose a simple yet effective framework, CUPID, to bridge this domain gap by filtering and adapting source data to the target data, followed by domain-focused pre-training. Comprehensive experiments demonstrate that pre-training on a considerably small subset of domain-focused data can effectively close the source-target domain gap and achieve significant performance gain, compared to random sampling or even exploiting the full pre-training dataset. CUPID yields new state-of-the-art performance across multiple video-language and video tasks, including text-to-video retrieval [72, 37], video question answering [36], and video captioning [72], with consistent performance lift over different pre-training methods.
