Cheng Cheng

The Llama 4 Herd: Architecture, Training, Evaluation, and Deployment Notes

Jan 15, 2026

Abstract: This document consolidates publicly reported technical details about Meta's Llama 4 model family. It summarizes (i) released variants (Scout and Maverick) and the broader herd context, including the previewed Behemoth teacher model, (ii) architectural characteristics beyond a high-level MoE description, covering routed/shared-expert structure, early-fusion multimodality, and long-context design elements reported for Scout (iRoPE and length generalization strategies), (iii) training disclosures spanning pre-training, mid-training for long-context extension, and post-training methodology (lightweight SFT, online RL, and lightweight DPO) as described in release materials, (iv) developer-reported benchmark results for both base and instruction-tuned checkpoints, and (v) practical deployment constraints observed across major serving environments, including provider-specific context limits and quantization packaging. The manuscript also summarizes licensing obligations relevant to redistribution and derivative naming, and reviews publicly described safeguards and evaluation practices. The goal is to provide a compact technical reference for researchers and practitioners who need precise, source-backed facts about Llama 4.

Conjugate Relation Modeling for Few-Shot Knowledge Graph Completion

Oct 26, 2025

Abstract: Few-shot Knowledge Graph Completion (FKGC) infers missing triples from limited support samples, tackling long-tail distribution challenges. Existing methods, however, struggle to capture complex relational patterns and mitigate data sparsity. To address these challenges, we propose a novel FKGC framework for conjugate relation modeling (CR-FKGC). Specifically, it employs a neighborhood aggregation encoder to integrate higher-order neighbor information, a conjugate relation learner combining an implicit conditional diffusion relation module with a stable relation module to capture stable semantics and uncertainty offsets, and a manifold conjugate decoder for efficient evaluation and inference of missing triples in manifold space. Experiments on three benchmarks demonstrate that our method achieves superior performance over state-of-the-art methods.

LoRA-Gen: Specializing Large Language Model via Online LoRA Generation

Jun 13, 2025

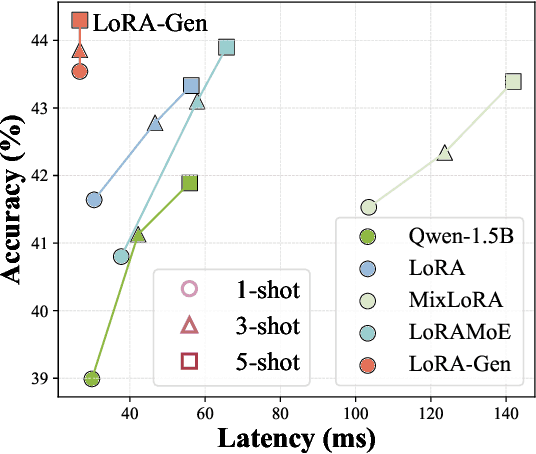

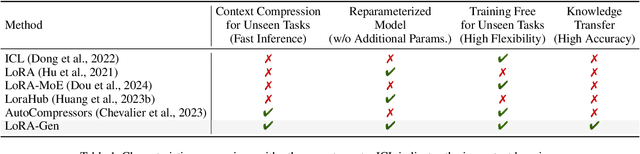

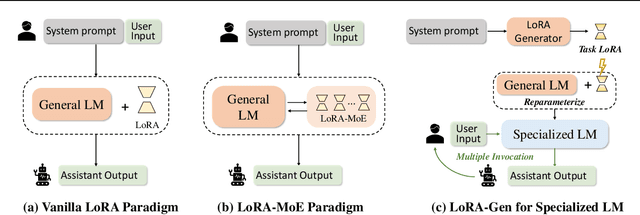

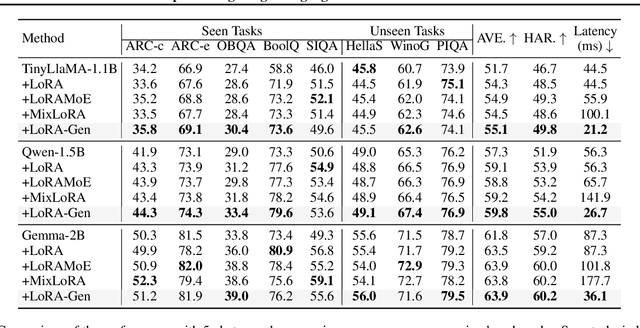

Abstract: Recent advances have highlighted the benefits of scaling language models to enhance performance across a wide range of NLP tasks. However, these approaches still face limitations in effectiveness and efficiency when applied to domain-specific tasks, particularly for small edge-side models. We propose the LoRA-Gen framework, which utilizes a large cloud-side model to generate LoRA parameters for edge-side models based on task descriptions. By employing the reparameterization technique, we merge the LoRA parameters into the edge-side model to achieve flexible specialization. Our method facilitates knowledge transfer between models while significantly improving the inference efficiency of the specialized model by reducing the input context length. Without specialized training, LoRA-Gen outperforms conventional LoRA fine-tuning, achieving competitive accuracy and a 2.1x speedup with TinyLLaMA-1.1B in reasoning tasks. In addition, our method delivers a compression ratio of 10.1x with Gemma-2B on intelligent agent tasks.

CogMath: Assessing LLMs' Authentic Mathematical Ability from a Human Cognitive Perspective

Jun 04, 2025

Abstract: Although large language models (LLMs) show promise in solving complex mathematical tasks, existing evaluation paradigms rely solely on a coarse measure of overall answer accuracy, which is insufficient for assessing their authentic capabilities. In this paper, we propose CogMath, which comprehensively assesses LLMs' mathematical abilities through the lens of human cognition. Specifically, inspired by psychological theories, CogMath formalizes the human reasoning process into 3 stages: problem comprehension, problem solving, and solution summarization. Within these stages, we investigate perspectives such as numerical calculation, knowledge, and counterfactuals, and design a total of 9 fine-grained evaluation dimensions. In each dimension, we develop an "Inquiry-Judge-Reference" multi-agent system to generate inquiries that assess LLMs' mastery from this dimension. An LLM is considered to truly master a problem only when excelling in all inquiries from the 9 dimensions. By applying CogMath on three benchmarks, we reveal that the mathematical capabilities of 7 mainstream LLMs are overestimated by 30%-40%. Moreover, we locate their strengths and weaknesses across specific stages/dimensions, offering in-depth insights to further enhance their reasoning abilities.

Towards Foundation Model on Temporal Knowledge Graph Reasoning

Jun 04, 2025

Abstract: Temporal Knowledge Graphs (TKGs) store temporal facts with quadruple formats (s, p, o, t). Existing Temporal Knowledge Graph Embedding (TKGE) models perform link prediction tasks in transductive or semi-inductive settings, which means the entities, relations, and temporal information in the test graph are fully or partially observed during training. Such reliance on seen elements during inference limits the models' ability to transfer to new domains and generalize to real-world scenarios. A central limitation is the difficulty in learning representations for entities, relations, and timestamps that are transferable and not tied to dataset-specific vocabularies. To overcome these limitations, we introduce the first fully-inductive approach to temporal knowledge graph link prediction. Our model employs sinusoidal positional encodings to capture fine-grained temporal patterns and generates adaptive entity and relation representations using message passing conditioned on both local and global temporal contexts. Our model design is agnostic to temporal granularity and time span, effectively addressing temporal discrepancies across TKGs and facilitating time-aware structural information transfer. As a pretrained, scalable, and transferable model, POSTRA demonstrates strong zero-shot performance on unseen temporal knowledge graphs, effectively generalizing to novel entities, relations, and timestamps. Extensive theoretical analysis and empirical results show that a single pretrained model can improve zero-shot performance on various inductive temporal reasoning scenarios, marking a significant step toward a foundation model for temporal KGs.

Skywork Open Reasoner 1 Technical Report

May 29, 2025

Abstract: The success of DeepSeek-R1 underscores the significant role of reinforcement learning (RL) in enhancing the reasoning capabilities of large language models (LLMs). In this work, we present Skywork-OR1, an effective and scalable RL implementation for long Chain-of-Thought (CoT) models. Building on the DeepSeek-R1-Distill model series, our RL approach achieves notable performance gains, increasing average accuracy across AIME24, AIME25, and LiveCodeBench from 57.8% to 72.8% (+15.0%) for the 32B model and from 43.6% to 57.5% (+13.9%) for the 7B model. Our Skywork-OR1-32B model surpasses both DeepSeek-R1 and Qwen3-32B on the AIME24 and AIME25 benchmarks, while achieving comparable results on LiveCodeBench. The Skywork-OR1-7B and Skywork-OR1-Math-7B models demonstrate competitive reasoning capabilities among models of similar size. We perform comprehensive ablation studies on the core components of our training pipeline to validate their effectiveness. Additionally, we thoroughly investigate the phenomenon of entropy collapse, identify key factors affecting entropy dynamics, and demonstrate that mitigating premature entropy collapse is critical for improved test performance. To support community research, we fully open-source our model weights, training code, and training datasets.

TensorAR: Refinement is All You Need in Autoregressive Image Generation

May 22, 2025

Abstract: Autoregressive (AR) image generators offer a language-model-friendly approach to image generation by predicting discrete image tokens in a causal sequence. However, unlike diffusion models, AR models lack a mechanism to refine previous predictions, limiting their generation quality. In this paper, we introduce TensorAR, a new AR paradigm that reformulates image generation from next-token prediction to next-tensor prediction. By generating overlapping windows of image patches (tensors) in a sliding fashion, TensorAR enables iterative refinement of previously generated content. To prevent information leakage during training, we propose a discrete tensor noising scheme, which perturbs input tokens via codebook-indexed noise. TensorAR is implemented as a plug-and-play module compatible with existing AR models. Extensive experiments on LlamaGEN, Open-MAGVIT2, and RAR demonstrate that TensorAR significantly improves the generation performance of autoregressive models.

AD-AGENT: A Multi-agent Framework for End-to-end Anomaly Detection

May 19, 2025

Abstract: Anomaly detection (AD) is essential in areas such as fraud detection, network monitoring, and scientific research. However, the diversity of data modalities and the increasing number of specialized AD libraries pose challenges for non-expert users who lack in-depth library-specific knowledge and advanced programming skills. To tackle this, we present AD-AGENT, an LLM-driven multi-agent framework that turns natural-language instructions into fully executable AD pipelines. AD-AGENT coordinates specialized agents for intent parsing, data preparation, library and model selection, documentation mining, and iterative code generation and debugging. Using a shared short-term workspace and a long-term cache, the agents integrate popular AD libraries like PyOD, PyGOD, and TSLib into a unified workflow. Experiments demonstrate that AD-AGENT produces reliable scripts and recommends competitive models across libraries. The system is open-sourced to support further research and practical applications in AD.

Iterative Polynomial Approximation Algorithms for Inverse Graph Filters

Apr 19, 2025

Abstract: Chebyshev interpolation polynomials exhibit the exponential approximation property to analytic functions on a cube. Based on the Chebyshev interpolation polynomial approximation, we propose iterative polynomial approximation algorithms to implement the inverse filter with a polynomial graph filter of commutative graph shifts in a distributed manner. The proposed algorithms exhibit exponential convergence properties, and they can be implemented on distributed networks in which agents are equipped with a data processing subsystem for limited data storage and computation power, and with a one-hop communication subsystem for direct data exchange only with their adjacent agents. Our simulations show that the proposed polynomial approximation algorithms may converge faster than the Chebyshev polynomial approximation algorithm and the conventional gradient descent algorithm do.

Automatic Spectral Calibration of Hyperspectral Images: Method, Dataset and Benchmark

Dec 19, 2024

Abstract: A hyperspectral image (HSI) densely samples the world in both the spatial and frequency domains and is therefore more distinctive than an RGB image. Usually, an HSI needs to be calibrated to minimize the impact of various illumination conditions. The traditional way to calibrate an HSI utilizes a physical reference, which involves manual operations, occlusions, and/or limits on camera mobility. These limitations inspire this paper to automatically calibrate HSIs using a learning-based method. Towards this goal, a large-scale HSI calibration dataset is created, which has 765 high-quality HSI pairs covering diversified natural scenes and illuminations. The dataset is further expanded to 7650 pairs by combining with 10 different physically measured illuminations. A spectral illumination transformer (SIT) together with an illumination attention module is proposed. Extensive benchmarks demonstrate the SoTA performance of the proposed SIT. The benchmarks also indicate that low-light conditions are more challenging than normal conditions. The dataset and codes are available online: https://github.com/duranze/Automatic-spectral-calibration-of-HSI
