Zhongyu Li

Creating a Dynamic Quadrupedal Robotic Goalkeeper with Reinforcement Learning

Oct 10, 2022

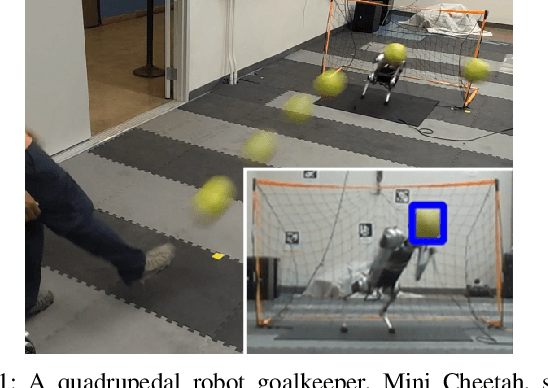

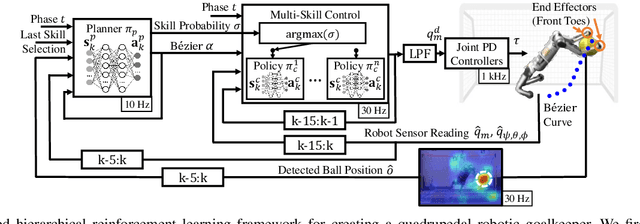

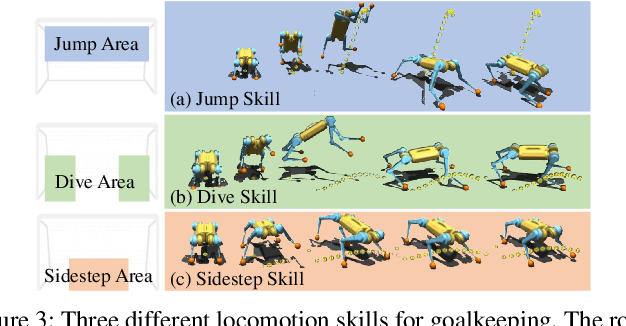

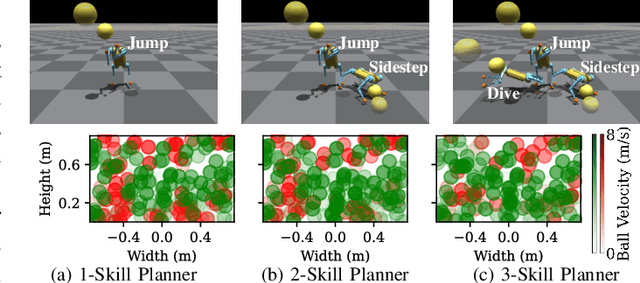

Abstract: We present a reinforcement learning (RL) framework that enables quadrupedal robots to perform soccer goalkeeping tasks in the real world. Soccer goalkeeping using quadrupeds is a challenging problem that combines highly dynamic locomotion with precise and fast non-prehensile object (ball) manipulation. The robot needs to react to and intercept a potentially flying ball using dynamic locomotion maneuvers in a very short amount of time, usually less than one second. In this paper, we propose to address this problem using a hierarchical model-free RL framework. The first component of the framework contains multiple control policies for distinct locomotion skills, which can be used to cover different regions of the goal. Each control policy enables the robot to track random parametric end-effector trajectories while performing one specific locomotion skill, such as jump, dive, and sidestep. These skills are then utilized by the second part of the framework, a high-level planner that determines a desired skill and end-effector trajectory in order to intercept a ball flying to different regions of the goal. We deploy the proposed framework on a Mini Cheetah quadrupedal robot and demonstrate the effectiveness of our framework for various agile interceptions of a fast-moving ball in the real world.

GenLoco: Generalized Locomotion Controllers for Quadrupedal Robots

Sep 12, 2022

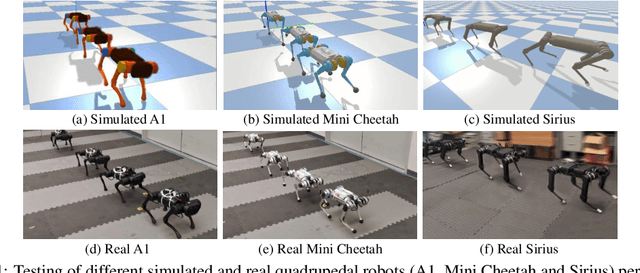

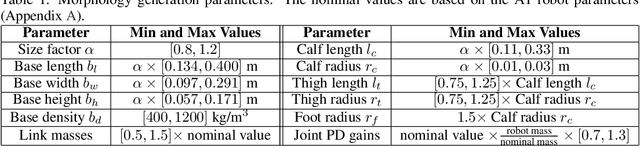

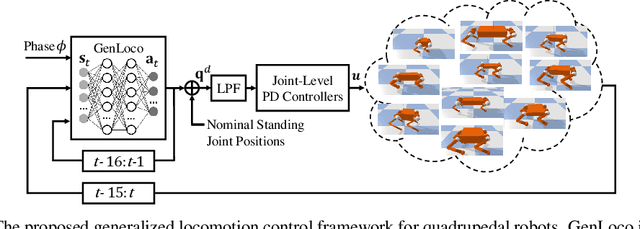

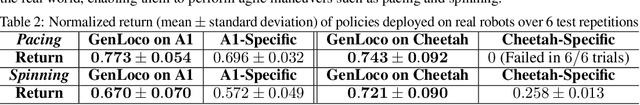

Abstract: Recent years have seen a surge in commercially available and affordable quadrupedal robots, with many of these platforms being actively used in research and industry. As the availability of legged robots grows, so does the need for controllers that enable these robots to perform useful skills. However, most learning-based frameworks for controller development focus on training robot-specific controllers, a process that needs to be repeated for every new robot. In this work, we introduce a framework for training generalized locomotion (GenLoco) controllers for quadrupedal robots. Our framework synthesizes general-purpose locomotion controllers that can be deployed on a large variety of quadrupedal robots with similar morphologies. We present a simple but effective morphology randomization method that procedurally generates a diverse set of simulated robots for training. We show that by training a controller on this large set of simulated robots, our models acquire more general control strategies that can be directly transferred to novel simulated and real-world robots with diverse morphologies, which were not observed during training.
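
The morphology randomization idea in the abstract above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Morphology` fields, the nominal values, the scale ranges, and the cubic mass scaling are hypothetical and are not taken from the paper, which the abstract does not detail.

```python
import random
from dataclasses import dataclass


@dataclass
class Morphology:
    """Hypothetical description of a simulated quadruped (not the paper's parameter set)."""
    body_length: float   # metres
    thigh_length: float  # metres
    calf_length: float   # metres
    body_mass: float     # kilograms


# Assumed nominal robot used as the starting point for randomization.
NOMINAL = Morphology(body_length=0.40, thigh_length=0.20, calf_length=0.20, body_mass=12.0)


def sample_morphology(rng, scale_range=(0.7, 1.3), jitter_range=(0.9, 1.1)):
    """Draw one robot: a global size factor plus small per-parameter jitter."""
    size = rng.uniform(*scale_range)

    def jitter():
        return rng.uniform(*jitter_range)

    return Morphology(
        body_length=NOMINAL.body_length * size * jitter(),
        thigh_length=NOMINAL.thigh_length * size * jitter(),
        calf_length=NOMINAL.calf_length * size * jitter(),
        # Mass is assumed to grow with volume, hence the cubic size factor.
        body_mass=NOMINAL.body_mass * size ** 3 * jitter(),
    )


def sample_training_set(n, seed=0):
    """Procedurally generate n simulated robots, reproducibly for a given seed."""
    rng = random.Random(seed)
    return [sample_morphology(rng) for _ in range(n)]
```

Calling `sample_training_set(1000)` would give a pool of varied robots; in a setup like the one described, a single controller would then be trained across such a pool rather than on one fixed robot.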

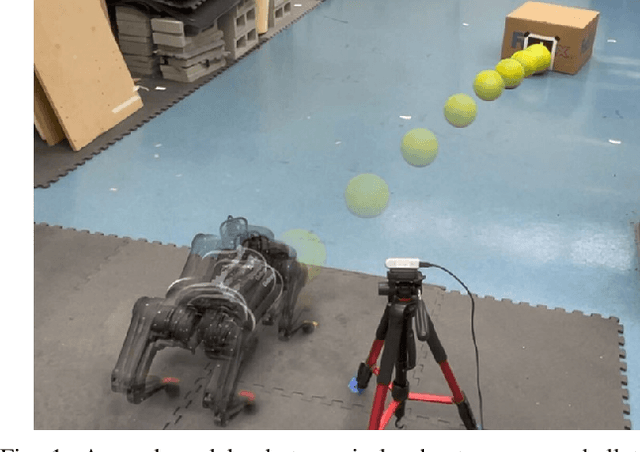

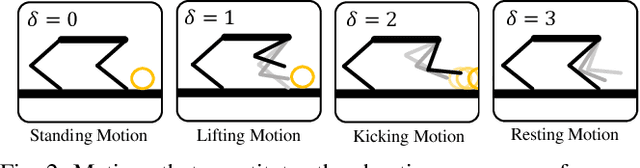

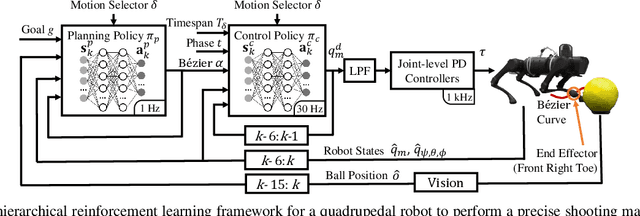

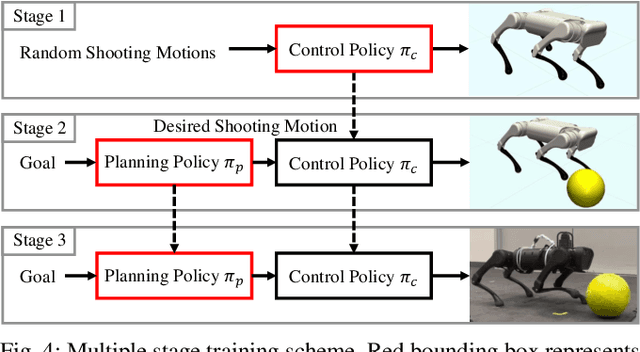

Hierarchical Reinforcement Learning for Precise Soccer Shooting Skills using a Quadrupedal Robot

Aug 01, 2022

Abstract: We address the problem of enabling quadrupedal robots to perform precise shooting skills in the real world using reinforcement learning. Developing algorithms to enable a legged robot to shoot a soccer ball to a given target is a challenging problem that combines robot motion control and planning into one task. To solve this problem, we need to consider the dynamics limitations and motion stability during the control of a dynamic legged robot. Moreover, we need to consider motion planning to shoot the hard-to-model deformable ball rolling on the ground with uncertain friction to a desired location. In this paper, we propose a hierarchical framework that leverages deep reinforcement learning to train (a) a robust motion control policy that can track arbitrary motions and (b) a planning policy to decide the desired kicking motion to shoot a soccer ball to a target. We deploy the proposed framework on an A1 quadrupedal robot and enable it to accurately shoot the ball to random targets in the real world.

Key-frame Guided Network for Thyroid Nodule Recognition using Ultrasound Videos

Jun 30, 2022

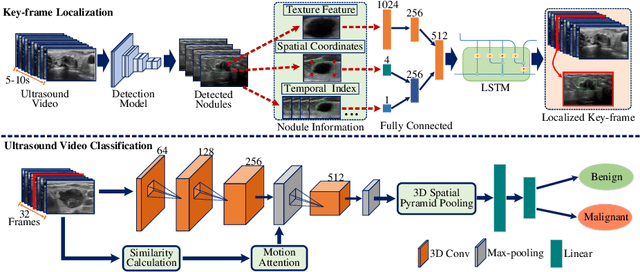

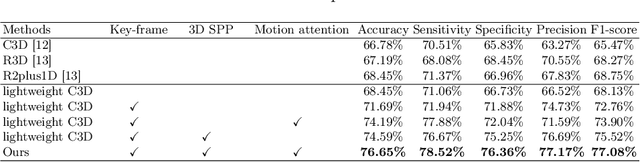

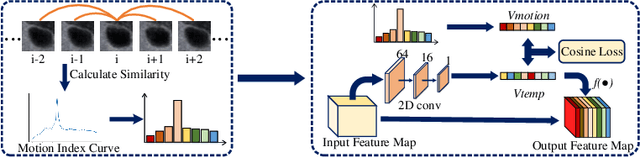

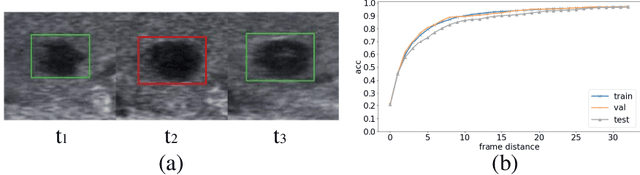

Abstract: Ultrasound examination is widely used in the clinical diagnosis of thyroid nodules (benign/malignant). However, the accuracy relies heavily on radiologist experience. Although deep learning techniques have been investigated for thyroid nodule recognition, current solutions are mainly based on static ultrasound images, use limited temporal information, and are inconsistent with clinical diagnosis. This paper proposes a novel method for the automated recognition of thyroid nodules through an exhaustive exploration of ultrasound videos and key-frames. We first propose a detection-localization framework to automatically identify the clinical key-frame with a typical nodule in each ultrasound video. Based on the localized key-frame, we develop a key-frame guided video classification model for thyroid nodule recognition. In addition, we introduce a motion attention module to help the network focus on significant frames in an ultrasound video, which is consistent with clinical diagnosis. The proposed thyroid nodule recognition framework is validated on clinically collected ultrasound videos, demonstrating superior performance compared with other state-of-the-art methods.

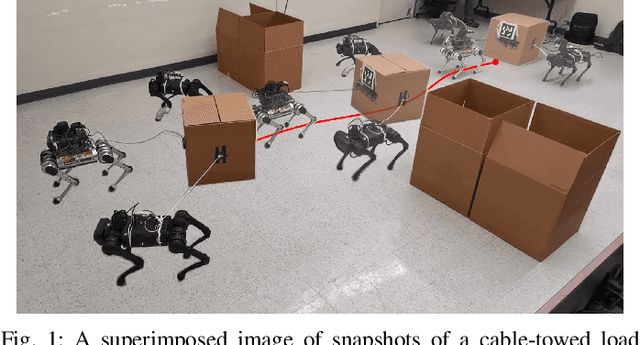

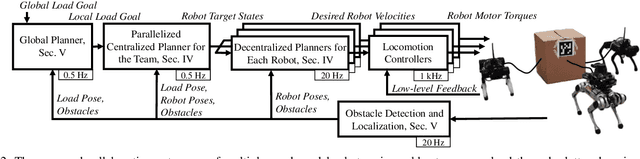

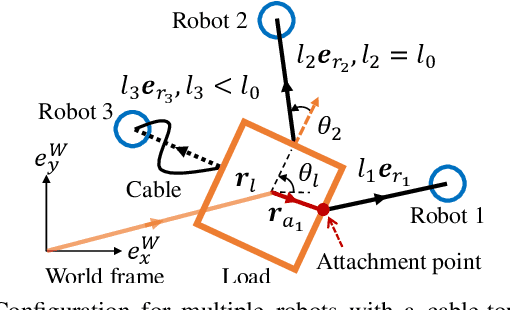

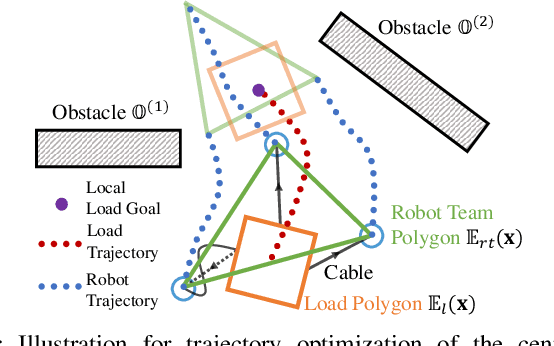

Collaborative Navigation and Manipulation of a Cable-towed Load by Multiple Quadrupedal Robots

Jun 29, 2022

Abstract: This paper tackles the problem of robots collaboratively towing a load with cables to a specified goal location while avoiding collisions in real time. The introduction of cables (as opposed to rigid links) enables the robotic team to travel through narrow spaces by changing its intrinsic dimensions through slack/taut switches of the cable. However, this is a challenging problem because of the hybrid mode switches and the dynamical coupling among multiple robots and the load. Previous attempts at addressing such a problem were performed offline and do not consider avoiding obstacles online. In this paper, we introduce a cascaded planning scheme with a parallelized centralized trajectory optimization that deals with hybrid mode switches. We additionally develop a set of decentralized planners per robot, which enables our approach to solve the problem of collaborative load manipulation online. We develop and demonstrate in experiments one of the first collaborative autonomy frameworks able to move a cable-towed load, which is too heavy for a single robot to move, through narrow spaces with real-time feedback and reactive planning.

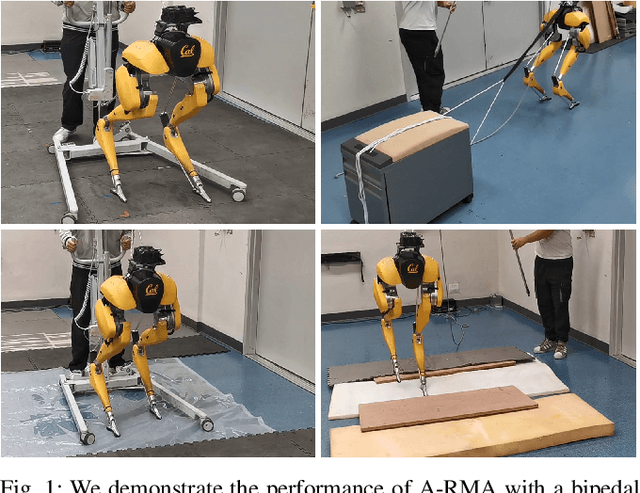

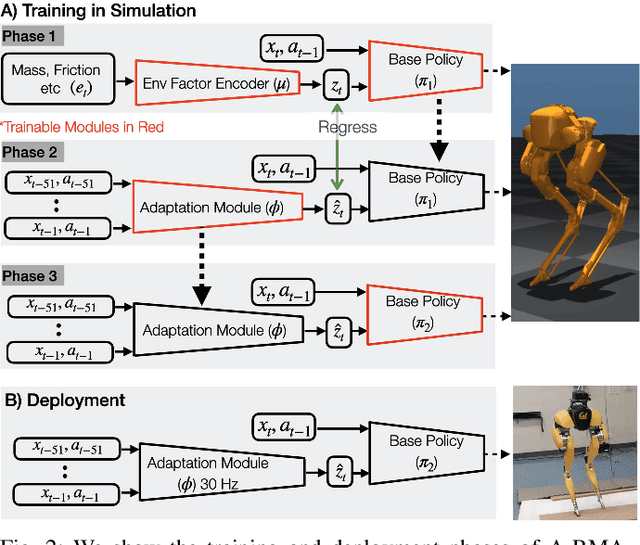

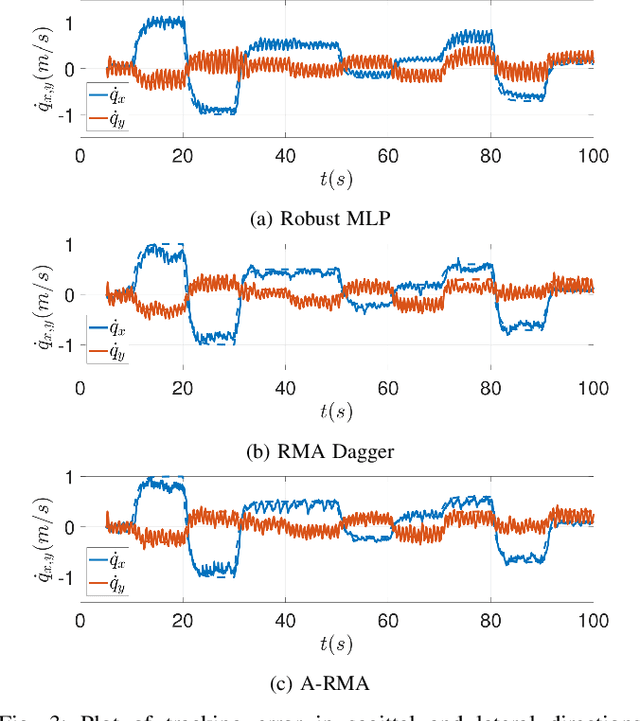

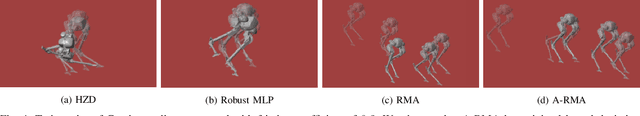

Adapting Rapid Motor Adaptation for Bipedal Robots

May 30, 2022

Abstract: Recent advances in legged locomotion have enabled quadrupeds to walk on challenging terrains. However, bipedal robots are inherently more unstable, and hence it is harder to design walking controllers for them. In this work, we leverage recent advances in rapid adaptation for locomotion control and extend them to work on bipedal robots. Similar to existing works, we start with a base policy which produces actions while taking as input an estimated extrinsics vector from an adaptation module. This extrinsics vector contains information about the environment and enables the walking controller to rapidly adapt online. However, the extrinsics estimator could be imperfect, which might lead to poor performance of the base policy, which expects a perfect estimator. In this paper, we propose A-RMA (Adapting RMA), which additionally adapts the base policy for the imperfect extrinsics estimator by finetuning it using model-free RL. We demonstrate that A-RMA outperforms a number of RL-based baseline controllers and model-based controllers in simulation, and show zero-shot deployment of a single A-RMA policy to enable a bipedal robot, Cassie, to walk in a variety of different scenarios in the real world beyond what it has seen during training. Videos and results at https://ashish-kmr.github.io/a-rma/

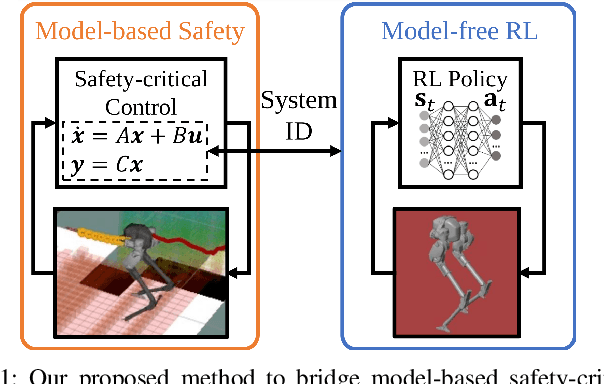

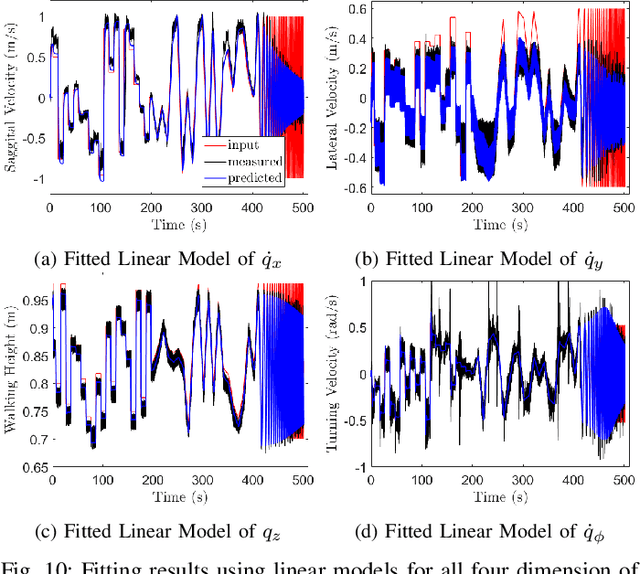

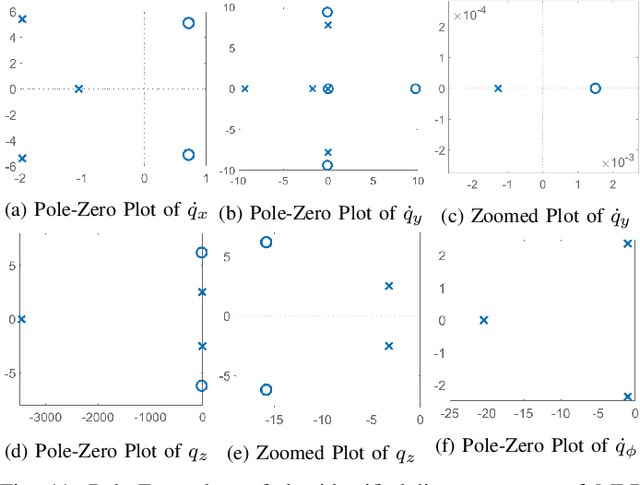

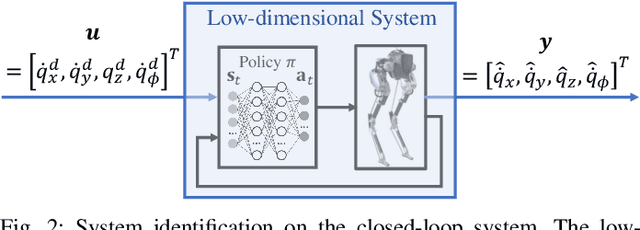

Bridging Model-based Safety and Model-free Reinforcement Learning through System Identification of Low Dimensional Linear Models

May 11, 2022

Abstract: Bridging model-based safety and model-free reinforcement learning (RL) for dynamic robots is appealing since model-based methods are able to provide formal safety guarantees, while RL-based methods are able to exploit the robot's agility by learning from the full-order system dynamics. However, current approaches to tackle this problem are mostly restricted to simple systems. In this paper, we propose a new method to combine model-based safety with model-free reinforcement learning by explicitly finding a low-dimensional model of the system controlled by an RL policy and applying stability and safety guarantees on that simple model. We use a complex bipedal robot, Cassie, which is a high-dimensional nonlinear system with hybrid dynamics and underactuation, and its RL-based walking controller as an example. We show that a low-dimensional dynamical model is sufficient to capture the dynamics of the closed-loop system. We demonstrate that this model is linear, asymptotically stable, and decoupled across control input in all dimensions. We further show that such linearity exists even when using different RL control policies. Such results point to an interesting direction for understanding the relationship between RL and optimal control: whether RL tends to linearize the nonlinear system during training in some cases. Furthermore, we illustrate that the identified linear model is able to provide guarantees through a safety-critical optimal control framework, e.g., Model Predictive Control with Control Barrier Functions, on an example of autonomous navigation using Cassie while taking advantage of the agility provided by the RL-based controller.
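
As a rough illustration of what identifying a linear, decoupled, low-dimensional model can look like, the sketch below fits a scalar discrete-time model x[k+1] ≈ a·x[k] + b·u[k] to one dimension of logged closed-loop data by ordinary least squares, then checks stability. The discrete-time form, the function names, and the use of plain least squares are assumptions for illustration; the abstract does not specify the identification procedure used in the paper.

```python
def fit_scalar_linear_model(xs, us):
    """Least-squares fit of x[k+1] ~ a*x[k] + b*u[k] for one decoupled dimension.

    xs: logged outputs, length T+1.  us: logged commands, length T.
    Returns (a, b). Solves the 2x2 normal equations with Cramer's rule.
    """
    if len(xs) != len(us) + 1:
        raise ValueError("xs must have exactly one more sample than us")
    prev, nxt = xs[:-1], xs[1:]
    sxx = sum(x * x for x in prev)
    sxu = sum(x * u for x, u in zip(prev, us))
    suu = sum(u * u for u in us)
    sxy = sum(x * y for x, y in zip(prev, nxt))
    suy = sum(u * y for u, y in zip(us, nxt))
    det = sxx * suu - sxu * sxu
    if abs(det) < 1e-12:
        raise ValueError("data not informative enough to separate a and b")
    a = (sxy * suu - suy * sxu) / det
    b = (suy * sxx - sxy * sxu) / det
    return a, b


def is_asymptotically_stable(a):
    """A scalar discrete-time system x[k+1] = a*x[k] is asymptotically stable iff |a| < 1."""
    return abs(a) < 1.0
```

With a model of this form in hand, a model-based planner such as an MPC could reason about the simple linear dynamics instead of the full-order robot, which is the role the abstract assigns to the identified model.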

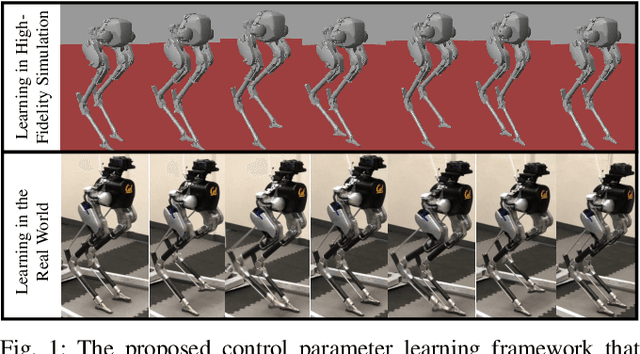

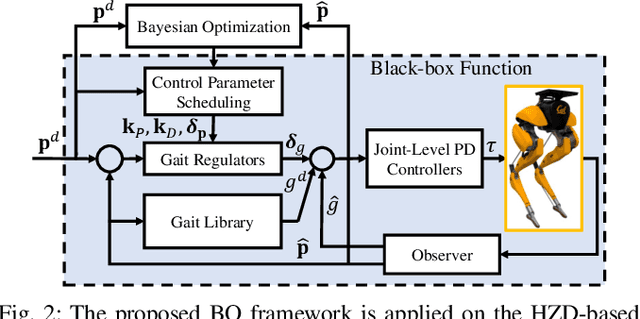

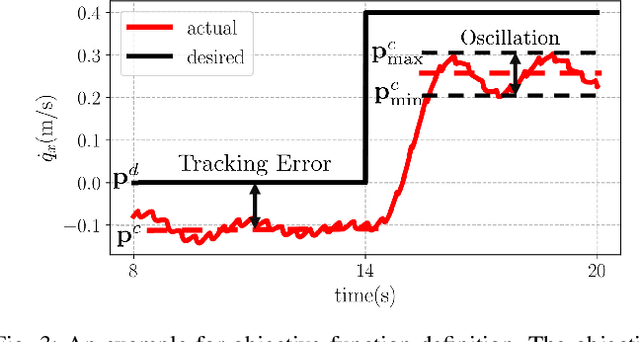

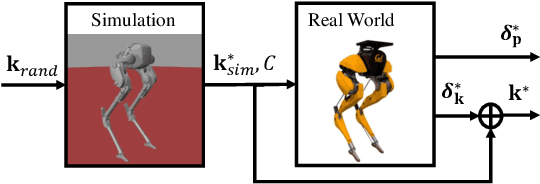

Bayesian Optimization Meets Hybrid Zero Dynamics: Safe Parameter Learning for Bipedal Locomotion Control

Mar 04, 2022

Abstract: In this paper, we propose a multi-domain control parameter learning framework that combines Bayesian Optimization (BO) and Hybrid Zero Dynamics (HZD) for locomotion control of bipedal robots. We leverage BO to learn the control parameters used in the HZD-based controller. The learning process is first deployed in simulation to optimize different control parameters for a large repertoire of gaits. Next, to tackle the discrepancy between the simulation and the real world, the learning process is applied on the physical robot to learn corrections to the control parameters learned in simulation while also respecting a safety constraint for gait stability. This method enables an efficient sim-to-real transition with a small number of samples in the real world, and does not require a valid controller to initialize the training in simulation. Our proposed learning framework is experimentally deployed and validated on the bipedal robot Cassie to perform versatile locomotion skills with improved smoothness of walking gaits and reduced steady-state tracking errors.

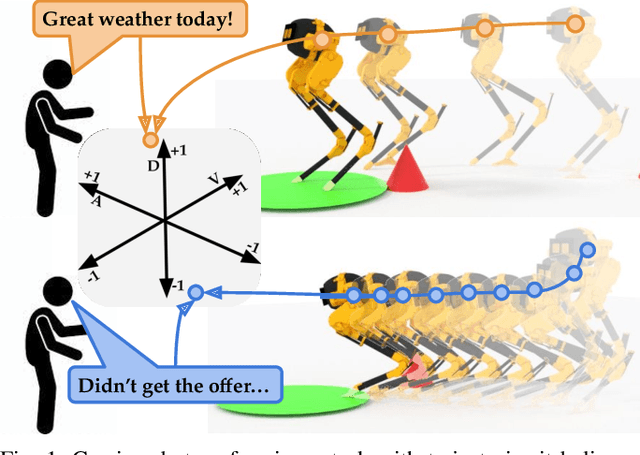

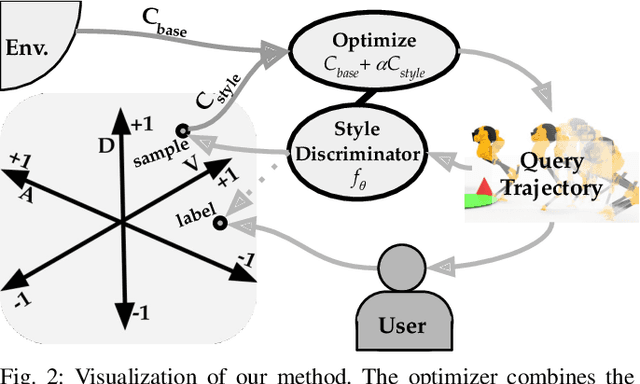

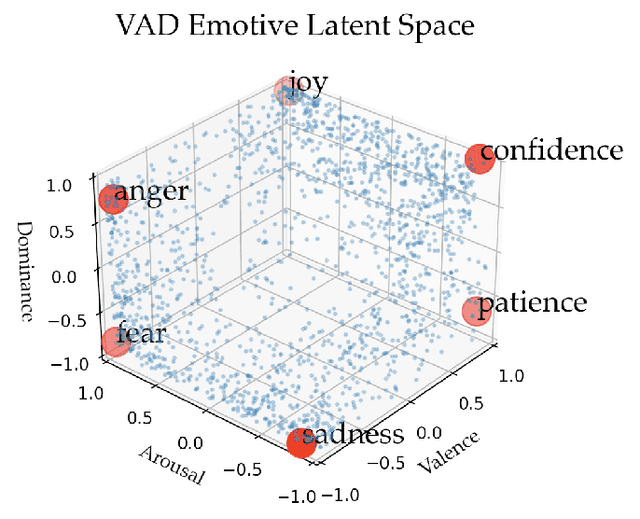

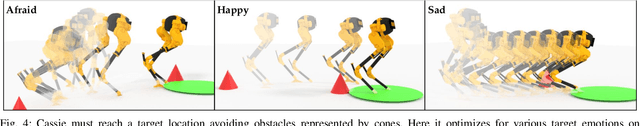

Teaching Robots to Span the Space of Functional Expressive Motion

Mar 04, 2022

Abstract: Our goal is to enable robots to perform functional tasks in emotive ways, be it in response to their users' emotional states, or expressive of their confidence levels. Prior work has proposed learning independent cost functions from user feedback for each target emotion, so that the robot may optimize it alongside task- and environment-specific objectives for any situation it encounters. However, this approach is inefficient when modeling multiple emotions and unable to generalize to new ones. In this work, we leverage the fact that emotions are not independent of each other: they are related through a latent space of Valence-Arousal-Dominance (VAD). Our key idea is to learn a model for how trajectories map onto VAD with user labels. Considering the distance between a trajectory's mapping and a target VAD allows this single model to represent cost functions for all emotions. As a result, 1) all user feedback can contribute to learning about every emotion; 2) the robot can generate trajectories for any emotion in the space instead of only a few predefined ones; and 3) the robot can respond emotively to user-generated natural language by mapping it to a target VAD. We introduce a method that interactively learns to map trajectories to this latent space and test it in simulation and in a user study. In experiments, we use a simple vacuum robot as well as the Cassie biped.
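
The cost construction described above (distance between a trajectory's VAD mapping and a target VAD) can be sketched in a few lines. The learned trajectory-to-VAD model is replaced here by `toy_vad_model`, a hand-written stand-in that is purely hypothetical; the Euclidean distance is likewise an assumed choice of metric.

```python
import math


def emotive_cost(trajectory, target_vad, vad_model):
    """Cost of a trajectory for a target emotion: distance in VAD space.

    vad_model maps a trajectory to a (valence, arousal, dominance) tuple.
    """
    return math.dist(vad_model(trajectory), target_vad)


def most_expressive(candidates, target_vad, vad_model):
    """Pick the candidate trajectory whose VAD mapping is closest to the target."""
    return min(candidates, key=lambda t: emotive_cost(t, target_vad, vad_model))


def toy_vad_model(trajectory):
    """Hypothetical stand-in for the learned model: faster motion -> higher arousal."""
    steps = [math.dist(p, q) for p, q in zip(trajectory, trajectory[1:])]
    mean_step = sum(steps) / len(steps)
    return (0.0, min(1.0, mean_step), 0.0)
```

Because one model serves every target, changing the emotion only means changing `target_vad`; no per-emotion cost function has to be learned.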

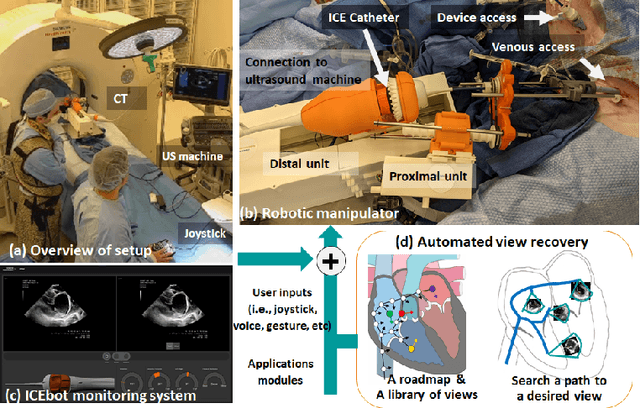

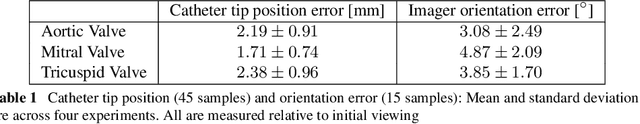

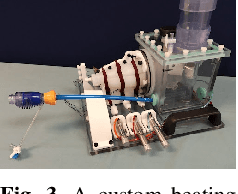

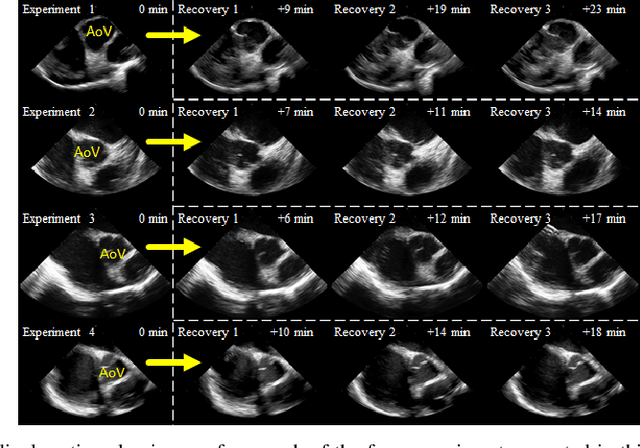

Automated Catheter Tip Repositioning for Intra-cardiac Echocardiography

Jan 21, 2022

Abstract: Purpose: Intra-Cardiac Echocardiography (ICE) is a powerful imaging modality for guiding cardiac electrophysiology and structural heart interventions. ICE provides real-time observation of anatomy and devices, while enabling direct monitoring of potential complications. In single-operator settings, the physician needs to switch back and forth between the ICE catheter and therapy device, making continuous ICE support impossible. Two-operator setups are sometimes implemented, but increase procedural costs and room occupation. Methods: An ICE catheter robotic control system is developed with an automated catheter tip repositioning (i.e., view recovery) method, which can reproduce important views previously navigated to and saved by the user. The performance of the proposed method is demonstrated and evaluated in a combination of heart phantom and animal experiments. Results: Automated ICE view recovery achieved catheter tip position accuracy of 2.09 +/- 0.90 mm and catheter image orientation accuracy of 3.93 +/- 2.07 degrees in animal studies, and 0.67 +/- 0.79 mm and 0.37 +/- 0.19 degrees in heart phantom studies, respectively. Our proposed method was also successfully used during transseptal puncture in animals without complications, showing the possibility of fluoro-less transseptal puncture with an ICE catheter robot. Conclusion: Robotic ICE imaging has the potential to provide precise and reproducible anatomical views, which can reduce overall execution time, labor burden of procedures, and x-ray usage for a range of cardiac procedures. Keywords: Automated View Recovery, Path Planning, Intra-cardiac echocardiography (ICE), Catheter, Tendon-driven manipulator, Cardiac Imaging
