Zhengyou Zhang

ProAct: A Benchmark and Multimodal Framework for Structure-Aware Proactive Response

Feb 03, 2026

Abstract:While passive agents merely follow instructions, proactive agents align with higher-level objectives, such as assistance and safety, by continuously monitoring the environment to determine when and how to act. However, developing proactive agents is hindered by the lack of specialized resources. To address this, we introduce ProAct-75, a benchmark designed to train and evaluate proactive agents across diverse domains, including assistance, maintenance, and safety monitoring. Spanning 75 tasks, our dataset features 91,581 step-level annotations enriched with explicit task graphs. These graphs encode step dependencies and parallel execution possibilities, providing the structural grounding necessary for complex decision-making. Building on this benchmark, we propose ProAct-Helper, a reference baseline powered by a Multimodal Large Language Model (MLLM) that grounds decision-making in state detection and leverages task graphs to enable entropy-driven heuristic search for action selection, allowing agents to execute parallel threads independently rather than mirroring the human's next step. Extensive experiments demonstrate that ProAct-Helper outperforms strong closed-source models, improving trigger detection mF1 by 6.21%, saving 0.25 more steps in online one-step decision, and increasing the rate of parallel actions by 15.58%.
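To make the task-graph idea above concrete, here is a minimal sketch of how step dependencies can expose parallel actions. It is not the ProAct-Helper implementation: the graph contents, the step names, and the functions `ready_steps` and `parallel_candidates` are hypothetical, and the entropy-driven search described in the abstract is not reproduced here.

```python
from typing import Dict, List, Set

# Hypothetical task graph: each step maps to the set of steps it depends on.
TASK_GRAPH: Dict[str, Set[str]] = {
    "boil_water": set(),
    "grind_beans": set(),
    "place_filter": set(),
    "add_grounds": {"grind_beans", "place_filter"},
    "pour_water": {"boil_water", "add_grounds"},
}


def ready_steps(graph: Dict[str, Set[str]], done: Set[str]) -> List[str]:
    """Return unfinished steps whose prerequisites are all finished."""
    return sorted(
        step for step, deps in graph.items()
        if step not in done and deps <= done
    )


def parallel_candidates(
    graph: Dict[str, Set[str]], done: Set[str], human_step: str
) -> List[str]:
    """Return ready steps a helper could take without duplicating the
    step the human is currently performing."""
    return [s for s in ready_steps(graph, done) if s != human_step]


if __name__ == "__main__":
    # The human has ground the beans and is now placing the filter.
    print(parallel_candidates(TASK_GRAPH, {"grind_beans"}, "place_filter"))
```

In this toy graph, a helper that only mirrored the human would also reach for the filter, while the dependency structure shows that boiling water can proceed independently.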

ESearch-R1: Learning Cost-Aware MLLM Agents for Interactive Embodied Search via Reinforcement Learning

Dec 21, 2025

Abstract:Multimodal Large Language Models (MLLMs) have empowered embodied agents with remarkable capabilities in planning and reasoning. However, when facing ambiguous natural language instructions (e.g., "fetch the tool" in a cluttered room), current agents often fail to balance the high cost of physical exploration against the cognitive cost of human interaction. They typically treat disambiguation as a passive perception problem, lacking the strategic reasoning to minimize total task execution costs. To bridge this gap, we propose ESearch-R1, a cost-aware embodied reasoning framework that unifies interactive dialogue (Ask), episodic memory retrieval (GetMemory), and physical navigation (Navigate) into a single decision process. We introduce HC-GRPO (Heterogeneous Cost-Aware Group Relative Policy Optimization). Unlike traditional PPO, which relies on a separate value critic, HC-GRPO optimizes the MLLM by sampling groups of reasoning trajectories and reinforcing those that achieve the optimal trade-off between information gain and heterogeneous costs (e.g., navigation time and human attention). Extensive experiments in AI2-THOR demonstrate that ESearch-R1 significantly outperforms standard ReAct-based agents. It improves task success rates while reducing total operational costs by approximately 50%, validating the effectiveness of GRPO in aligning MLLM agents with physical world constraints.
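The critic-free, group-relative idea mentioned above can be sketched in a few lines. This is an illustration under stated assumptions, not the HC-GRPO objective from the paper: the reward shape (success minus weighted costs), the weights in `COST_WEIGHTS`, and the function names are all hypothetical. Only the within-group normalization of rewards, which replaces a learned value critic, follows the general GRPO recipe.

```python
import math
from typing import Dict, List

# Hypothetical per-unit cost weights for the three action types.
COST_WEIGHTS: Dict[str, float] = {
    "navigate_s": 0.01,   # per second of navigation
    "ask": 0.2,           # per question put to the human
    "get_memory": 0.02,   # per memory lookup
}


def trajectory_reward(success: bool, costs: Dict[str, float]) -> float:
    """Assumed reward: task success minus a weighted sum of incurred costs."""
    base = 1.0 if success else 0.0
    return base - sum(COST_WEIGHTS[name] * amount for name, amount in costs.items())


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize rewards within one sampled group (zero mean, unit std).

    The group mean acts as the baseline, so no value critic is needed.
    """
    mean = sum(rewards) / len(rewards)
    var = sum((r - mean) ** 2 for r in rewards) / len(rewards)
    std = math.sqrt(var)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    group = [
        trajectory_reward(True, {"navigate_s": 60.0}),               # explore only
        trajectory_reward(True, {"ask": 1.0, "navigate_s": 10.0}),   # ask, then go
        trajectory_reward(False, {"navigate_s": 30.0}),              # failed search
    ]
    print(group_relative_advantages(group))
```

Trajectories with above-average reward in their group receive positive advantages and are reinforced; under these assumed weights, asking one question and navigating briefly beats a long blind search.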

Compliance while resisting: a shear-thickening fluid controller for physical human-robot interaction

Feb 03, 2025

Abstract:Physical human-robot interaction (pHRI) is widely needed in many fields, such as industrial manipulation, home services, and medical rehabilitation, and puts higher demands on the safety of robots. Due to the uncertainty of the working environment, the pHRI may receive unexpected impact interference, which affects the safety and smoothness of the task execution. The commonly used linear admittance control (L-AC) can cope well with high-frequency small-amplitude noise, but for medium-frequency high-intensity impact, the effect is not as good. Inspired by the solid-liquid phase change nature of shear-thickening fluid, we propose a Shear-thickening Fluid Control (SFC) that can achieve both an easy human-robot collaboration and resistance to impact interference. The SFC's stability, passivity, and phase trajectory are analyzed in detail, the frequency and time domain properties are quantified, and parameter constraints in discrete control and coupled stability conditions are provided. We conducted simulations to compare the frequency and time domain characteristics of L-AC, nonlinear admittance controller (N-AC), and SFC, and validated their dynamic properties. In real-world experiments, we compared the performance of L-AC, N-AC, and SFC in both fixed and mobile manipulators. L-AC exhibits weak resistance to impact. N-AC can resist moderate impacts but not high-intensity ones, and may exhibit self-excited oscillations. In contrast, SFC demonstrated superior impact resistance and maintained stable collaboration, enhancing comfort in cooperative water delivery tasks. Additionally, a case study was conducted in a factory setting, further affirming the SFC's capability in facilitating human-robot collaborative manipulation and underscoring its potential in industrial applications.
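For readers unfamiliar with the L-AC baseline named above, the following is a minimal one-dimensional sketch of a linear admittance law, M·dv/dt + D·v = f, integrated with explicit Euler. The parameter values and the function name `simulate_linear_admittance` are assumptions for illustration. The nonlinear SFC law is not given in the abstract and is not reproduced here.

```python
from typing import Callable, List


def simulate_linear_admittance(
    force: Callable[[float], float],
    mass: float = 2.0,       # virtual mass M (assumed value)
    damping: float = 10.0,   # virtual damping D (assumed value)
    dt: float = 0.001,       # control period in seconds (assumed value)
    steps: int = 5000,
) -> List[float]:
    """Integrate M*dv/dt + D*v = f(t) with explicit Euler.

    Returns the commanded velocity at every control step.
    For this discretization the update is stable only if dt < 2*M/D.
    """
    v = 0.0
    history: List[float] = []
    for k in range(steps):
        f = force(k * dt)
        a = (f - damping * v) / mass
        v += a * dt
        history.append(v)
    return history


if __name__ == "__main__":
    # A constant 5 N push: the velocity settles near f / D = 0.5 m/s.
    velocities = simulate_linear_admittance(lambda t: 5.0)
    print(round(velocities[-1], 3))
```

Because the response is linear in the applied force, a large impact produces a proportionally large commanded velocity, which is consistent with the abstract's observation that L-AC resists impacts weakly.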

Whole-Body Impedance Coordinative Control of Wheel-Legged Robot on Uncertain Terrain

Nov 15, 2024

Abstract:This article proposes a whole-body impedance coordinative control framework for a wheel-legged humanoid robot to achieve adaptability on complex terrains while maintaining upper body stability. The framework contains a bi-level control strategy. The outer level is a variable damping impedance controller, which optimizes the damping parameters to ensure the stability of the upper body while holding an object. The inner level employs Whole-Body Control (WBC) optimization that integrates real-time terrain estimation based on wheel-foot position and force data. It generates motor torques while accounting for dynamic constraints, joint limits, friction cones, real-time terrain updates, and a model-free friction compensation strategy. The proposed whole-body coordinative control method has been tested on a recently developed quadruped humanoid robot. The results demonstrate that the proposed algorithm effectively controls the robot, maintaining upper body stability to successfully complete a water-carrying task while adapting to varying terrains.

RiskAwareBench: Towards Evaluating Physical Risk Awareness for High-level Planning of LLM-based Embodied Agents

Aug 08, 2024

Abstract:The integration of large language models (LLMs) into robotics significantly enhances the capabilities of embodied agents in understanding and executing complex natural language instructions. However, the unmitigated deployment of LLM-based embodied systems in real-world environments may pose physical risks, such as property damage and personal injury. Existing security benchmarks for LLMs overlook risk awareness for LLM-based embodied agents. To address this gap, we propose RiskAwareBench, an automated framework designed to assess physical risk awareness in LLM-based embodied agents. RiskAwareBench consists of four modules: safety tips generation, risky scene generation, plan generation, and evaluation, enabling comprehensive risk assessment with minimal manual intervention. Utilizing this framework, we compile the PhysicalRisk dataset, encompassing diverse scenarios with associated safety tips, observations, and instructions. Extensive experiments reveal that most LLMs exhibit insufficient physical risk awareness, and baseline risk mitigation strategies yield limited improvement, which underscores the urgency and importance of improving risk awareness in LLM-based embodied agents.

TencentLLMEval: A Hierarchical Evaluation of Real-World Capabilities for Human-Aligned LLMs

Nov 09, 2023

Abstract:Large language models (LLMs) have shown impressive capabilities across various natural language tasks. However, evaluating their alignment with human preferences remains a challenge. To this end, we propose a comprehensive human evaluation framework to assess LLMs' proficiency in following instructions on diverse real-world tasks. We construct a hierarchical task tree encompassing 7 major areas covering over 200 categories and over 800 tasks, which covers diverse capabilities such as question answering, reasoning, multiturn dialogue, and text generation, to evaluate LLMs in a comprehensive and in-depth manner. We also design detailed evaluation standards and processes to facilitate consistent, unbiased judgments from human evaluators. A test set of over 3,000 instances is released, spanning different difficulty levels and knowledge domains. Our work provides a standardized methodology to evaluate human alignment in LLMs for both English and Chinese. We also analyze the feasibility of automating parts of evaluation with a strong LLM (GPT-4). Our framework supports a thorough assessment of LLMs as they are integrated into real-world applications. We have made publicly available the task tree, TencentLLMEval dataset, and evaluation methodology which have been demonstrated as effective in assessing the performance of Tencent Hunyuan LLMs. By doing so, we aim to facilitate the benchmarking of advances in the development of safe and human-aligned LLMs.

Lifelike Agility and Play on Quadrupedal Robots using Reinforcement Learning and Generative Pre-trained Models

Aug 29, 2023

Abstract:Summarizing knowledge from animals and human beings inspires robotic innovations. In this work, we propose a framework for driving legged robots to act like real animals with lifelike agility and strategy in complex environments. Inspired by the impressive performance of large pre-trained models in language and image understanding, we introduce the power of advanced deep generative models to produce motor control signals stimulating legged robots to act like real animals. Unlike conventional controllers and end-to-end RL methods that are task-specific, we propose to pre-train generative models over animal motion datasets to preserve expressive knowledge of animal behavior. The pre-trained model holds sufficient primitive-level knowledge yet is environment-agnostic. It is then reused for a successive stage of learning to align with the environments by traversing a number of challenging obstacles that are rarely considered in previous approaches, including creeping through narrow spaces, jumping over hurdles, freerunning over scattered blocks, etc. Finally, a task-specific controller is trained to solve complex downstream tasks by reusing the knowledge from previous stages. Enriching the knowledge regarding each stage does not affect the usage of other levels of knowledge. This flexible framework offers the possibility of continual knowledge accumulation at different levels. We successfully apply the trained multi-level controllers to the MAX robot, a quadrupedal robot developed in-house, to mimic animals, traverse complex obstacles, and play in a designed challenging multi-agent Chase Tag Game, where lifelike agility and strategy emerge on the robots. The present research pushes the frontier of robot control with new insights on reusing multi-level pre-trained knowledge and solving highly complex downstream tasks in the real world.

Ground-Challenge: A Multi-sensor SLAM Dataset Focusing on Corner Cases for Ground Robots

Jul 08, 2023

Abstract:High-quality datasets can speed up breakthroughs and reveal potential developing directions in SLAM research. To support the research on corner cases of visual SLAM systems, this paper presents Ground-Challenge: a challenging dataset comprising 36 trajectories with diverse corner cases such as aggressive motion, severe occlusion, changing illumination, few textures, pure rotation, motion blur, wheel suspension, etc. The dataset was collected by a ground robot with multiple sensors including an RGB-D camera, an inertial measurement unit (IMU), a wheel odometer and a 3D LiDAR. All of these sensors were well-calibrated and synchronized, and their data were recorded simultaneously. To evaluate the performance of cutting-edge SLAM systems, we tested them on our dataset and demonstrated that these systems are prone to drift and fail on specific sequences. We will release the full dataset and relevant materials upon paper publication to benefit the research community. For more information, visit our project website at https://github.com/sjtuyinjie/Ground-Challenge.

DA$^2$ Dataset: Toward Dexterity-Aware Dual-Arm Grasping

Jul 31, 2022

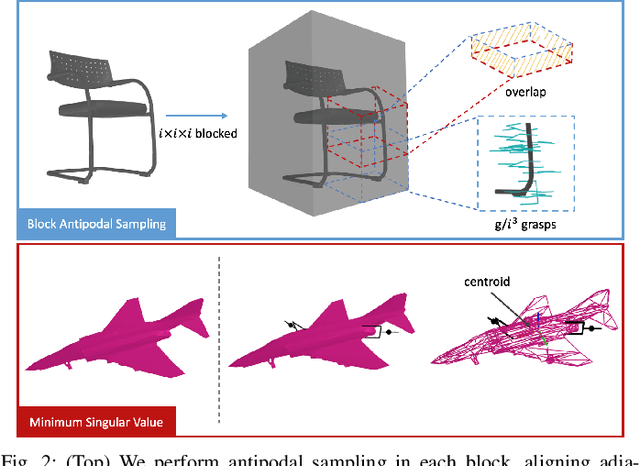

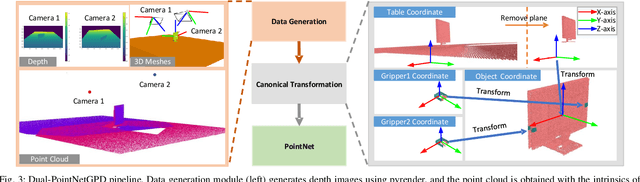

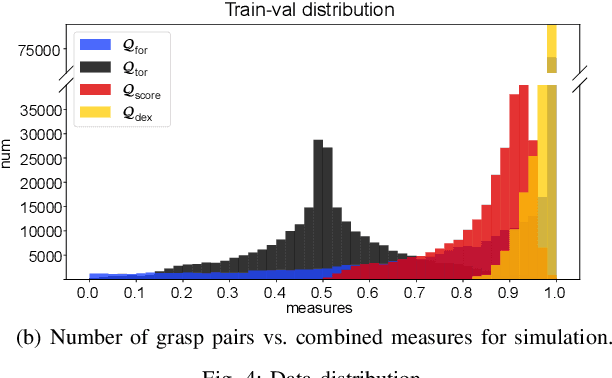

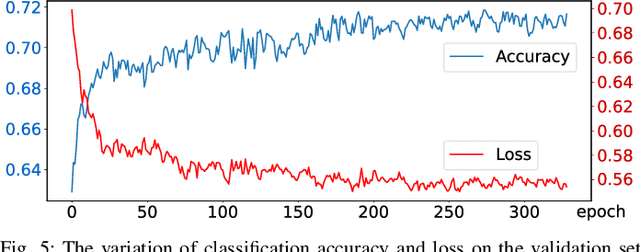

Abstract:In this paper, we introduce DA$^2$, the first large-scale dual-arm dexterity-aware dataset for the generation of optimal bimanual grasping pairs for arbitrary large objects. The dataset contains about 9M pairs of parallel-jaw grasps, generated from more than 6000 objects and each labeled with various grasp dexterity measures. In addition, we propose an end-to-end dual-arm grasp evaluation model trained on the rendered scenes from this dataset. We utilize the evaluation model as our baseline to show the value of this novel and nontrivial dataset by both online analysis and real robot experiments. All data and related code will be open-sourced at https://sites.google.com/view/da2dataset.

Relative Policy-Transition Optimization for Fast Policy Transfer

Jun 13, 2022

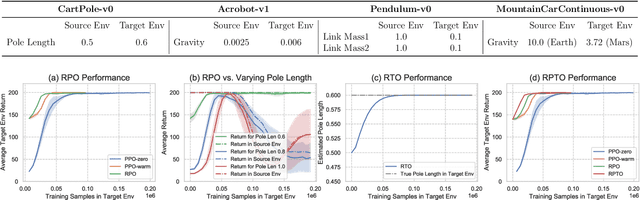

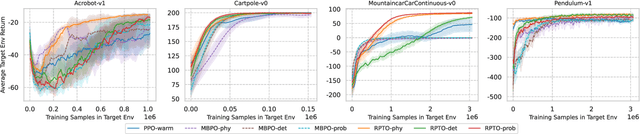

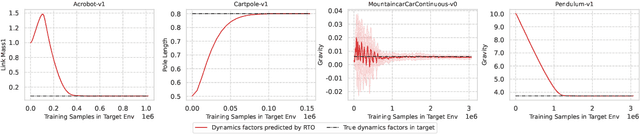

Abstract:We consider the problem of policy transfer between two Markov Decision Processes (MDPs). We introduce a lemma based on existing theoretical results in reinforcement learning (RL) to measure the relativity between two arbitrary MDPs, that is, the difference between any two cumulative expected returns defined on different policies and environment dynamics. Based on this lemma, we propose two new algorithms referred to as Relative Policy Optimization (RPO) and Relative Transition Optimization (RTO), which can offer fast policy transfer and dynamics modeling, respectively. RPO updates the policy using the relative policy gradient to transfer the policy evaluated in one environment to maximize the return in another, while RTO updates the parameterized dynamics model (if one exists) using the relative transition gradient to reduce the gap between the dynamics of the two environments. Integrating the two algorithms yields the complete algorithm, Relative Policy-Transition Optimization (RPTO), in which the policy interacts with the two environments simultaneously, such that data collection from the two environments and the policy and transition updates are completed in one closed loop to form a principled learning framework for policy transfer. We demonstrate the effectiveness of RPTO in OpenAI Gym's classic control tasks by creating policy transfer problems via variant dynamics.
