GENIE: Large Scale Pre-training for Text Generation with Diffusion Model

Dec 22, 2022 · Zhenghao Lin, Yeyun Gong, Yelong Shen, Tong Wu, Zhihao Fan, Chen Lin, Weizhu Chen, Nan Duan

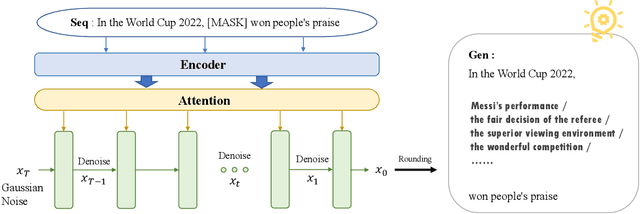

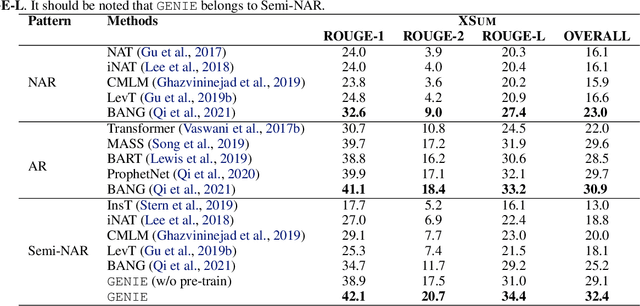

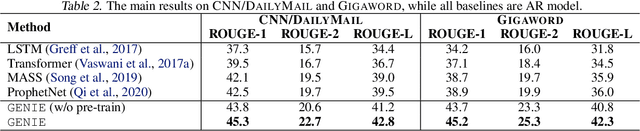

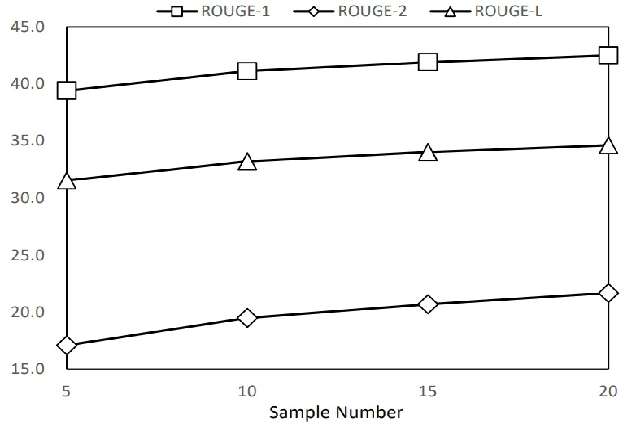

In this paper, we propose GENIE, a large-scale language pre-training approach for text GENeration using dIffusion modEl. GENIE is a pre-trained sequence-to-sequence text generation model that combines Transformer and diffusion. The diffusion model accepts latent information from the encoder, which guides the denoising at the current time step. After multiple such denoising iterations, the diffusion model restores Gaussian noise to diverse output text that is controlled by the input text. Moreover, this architecture design also allows us to apply large-scale pre-training to GENIE. We propose a novel pre-training method named continuous paragraph denoise, based on the characteristics of the diffusion model. Extensive experiments on the XSum, CNN/DailyMail, and Gigaword benchmarks show that GENIE achieves performance comparable to various strong baselines; in particular, pre-training greatly improves the generation quality of GENIE. We also conduct extensive experiments on the generation diversity and parameter impact of GENIE. The code for GENIE will be made publicly available.

Generation-Augmented Query Expansion For Code Retrieval

Dec 20, 2022 · Dong Li, Yelong Shen, Ruoming Jin, Yi Mao, Kuan Wang, Weizhu Chen

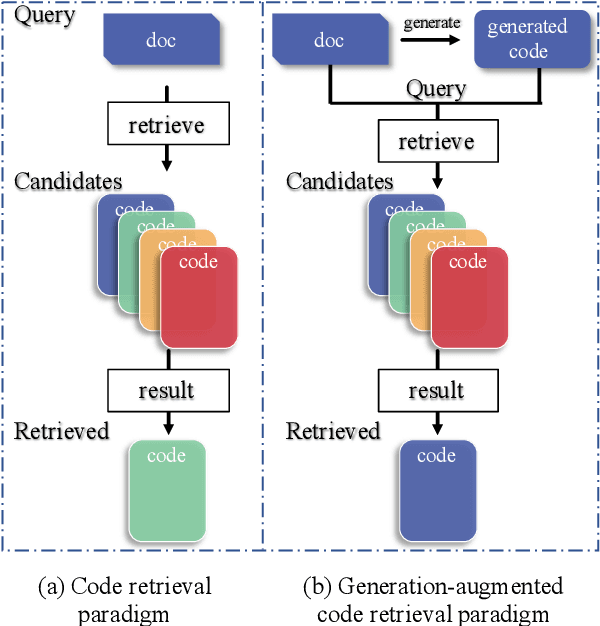

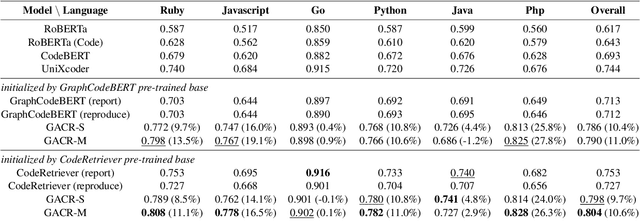

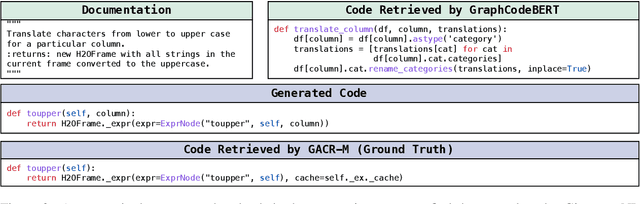

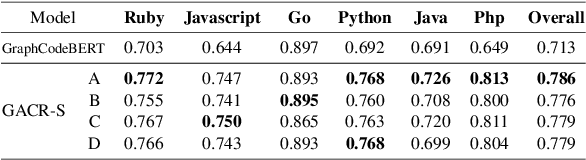

Pre-trained language models have achieved promising success in code retrieval tasks, where a natural language documentation query is given to find the most relevant existing code snippet. However, existing models focus only on optimizing the documentation-code pairs by embedding them into a latent space, without incorporating external knowledge. In this paper, we propose a generation-augmented query expansion framework. Inspired by the human retrieval process of sketching an answer before searching, we use a powerful code generation model to benefit the code retrieval task. Specifically, we demonstrate that rather than merely retrieving the target code snippet according to the documentation query, it is helpful to augment the documentation query with its generation counterpart: code snippets generated by the code generation model. To the best of our knowledge, this is the first attempt to leverage a code generation model to enhance the code retrieval task. We achieve new state-of-the-art results on the CodeSearchNet benchmark and surpass the baselines significantly.
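The query expansion idea described above can be illustrated with a small sketch. It is not the authors' implementation: the bag-of-words encoder, the `fake_generate` stand-in for a code generation model, and the two-snippet corpus are all hypothetical, chosen only so the example runs without any external model.

```python
import math
from collections import Counter

def bow(text):
    """Toy encoder (an assumption): bag of lowercase whitespace tokens."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query, corpus):
    """Return the index of the corpus snippet most similar to the query."""
    qv = bow(query)
    return max(range(len(corpus)), key=lambda i: cosine(qv, bow(corpus[i])))

def expand_query(query, generate):
    """Append generated code to the documentation query before retrieval."""
    return query + " " + generate(query)

# Hypothetical corpus and generator, for illustration only.
corpus = [
    "def read_file ( path ) : return open ( path ) . read ( )",
    "def add ( a , b ) : return a + b",
]

def fake_generate(query):
    # Stand-in for a real code generation model.
    return "def sum_two ( a , b ) : return a + b"

query = "sum two numbers"
expanded = expand_query(query, fake_generate)
best = retrieve(expanded, corpus)
```

In this toy setup the plain query shares no tokens with either snippet, while the expanded query overlaps strongly with the `add` function, so retrieval over the expanded query selects it.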

GENIUS: Sketch-based Language Model Pre-training via Extreme and Selective Masking for Text Generation and Augmentation

Nov 18, 2022 · Biyang Guo, Yeyun Gong, Yelong Shen, Songqiao Han, Hailiang Huang, Nan Duan, Weizhu Chen

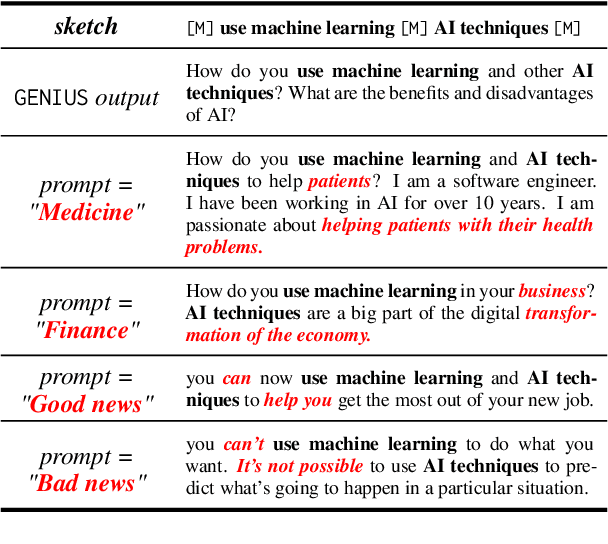

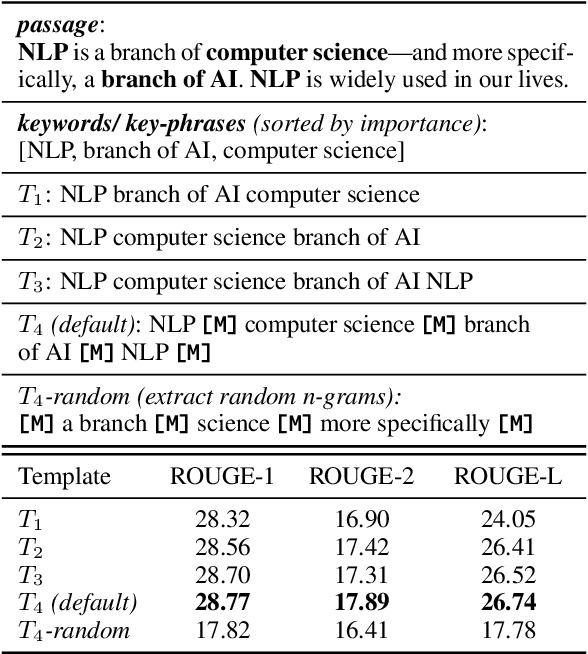

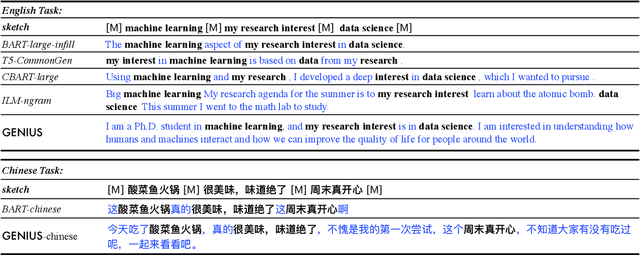

We introduce GENIUS, a conditional text generation model that takes sketches as input and fills in the missing contexts for a given sketch (key information consisting of textual spans, phrases, or words, concatenated by mask tokens). GENIUS is pre-trained on a large-scale textual corpus with a novel reconstruction-from-sketch objective using an extreme and selective masking strategy, enabling it to generate diverse and high-quality texts given sketches. Comparison with other competitive conditional language models (CLMs) reveals the superiority of GENIUS's text generation quality. We further show that GENIUS can be used as a strong and ready-to-use data augmentation tool for various natural language processing (NLP) tasks. Most existing textual data augmentation methods are either too conservative, making only small changes to the original text, or too aggressive, creating entirely new samples. With GENIUS, we propose GeniusAug, which first extracts target-aware sketches from the original training set and then generates new samples based on the sketches. Empirical experiments on 6 text classification datasets show that GeniusAug significantly improves the models' performance in both in-distribution (ID) and out-of-distribution (OOD) settings. We also demonstrate the effectiveness of GeniusAug on named entity recognition (NER) and machine reading comprehension (MRC) tasks. (Code and models are publicly available at https://github.com/microsoft/SCGLab and https://github.com/beyondguo/genius)
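To make the notion of a sketch concrete, here is a minimal illustration of turning a sentence into key words joined by mask tokens. It is a sketch under stated assumptions, not the GENIUS pipeline: the function name `make_sketch`, the `<mask>` string, and the caller-supplied keyword set are all hypothetical, and how the key information is actually selected is not covered here.

```python
def make_sketch(text, keywords, mask_token="<mask>"):
    """Keep tokens that match a keyword; collapse every run of other
    tokens into a single mask token (hypothetical helper)."""
    keep = {k.lower() for k in keywords}
    sketch = []
    for tok in text.split():
        # Compare without surrounding punctuation, but emit the token as written.
        if tok.lower().strip(".,;:!?") in keep:
            sketch.append(tok)
        elif not sketch or sketch[-1] != mask_token:
            sketch.append(mask_token)
    return " ".join(sketch)

sentence = "The model generates diverse text from a short sketch."
sketch = make_sketch(sentence, {"model", "diverse", "sketch"})
```

A model trained to reconstruct text from such input would then be asked to fill each mask with plausible context.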

Soft-Labeled Contrastive Pre-training for Function-level Code Representation

Oct 18, 2022 · Xiaonan Li, Daya Guo, Yeyun Gong, Yun Lin, Yelong Shen, Xipeng Qiu, Daxin Jiang, Weizhu Chen, Nan Duan

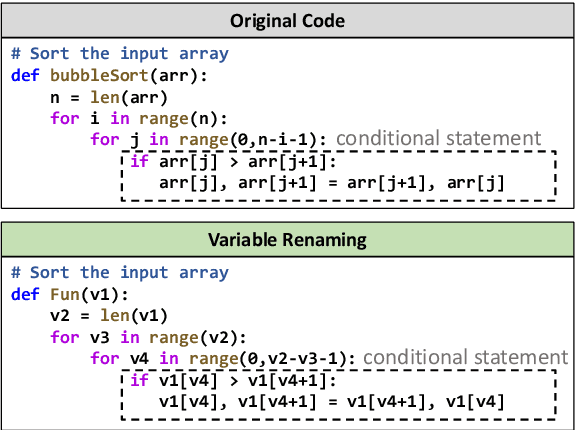

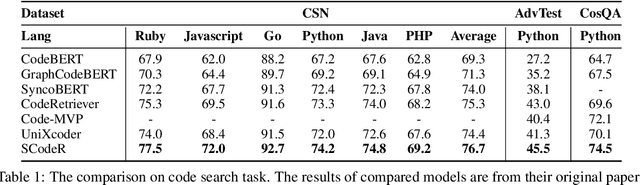

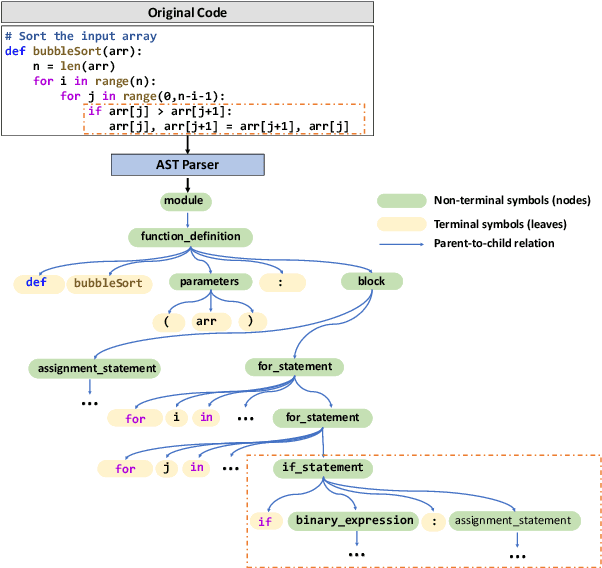

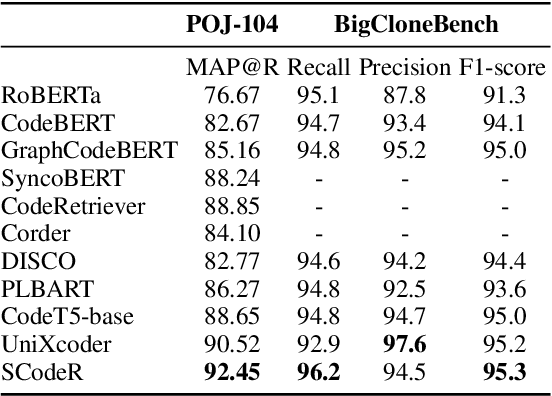

Code contrastive pre-training has recently achieved significant progress on code-related tasks. In this paper, we present SCodeR, a Soft-labeled contrastive pre-training framework with two positive sample construction methods to learn function-level Code Representation. Considering the relevance between code snippets in a large-scale code corpus, soft-labeled contrastive pre-training obtains fine-grained soft labels in an iterative adversarial manner and uses them to learn better code representations. Positive sample construction is another key to contrastive pre-training. Previous works use transformation-based methods such as variable renaming to generate semantically equivalent positive code. However, these methods usually yield generated code with a highly similar surface form, and thus mislead the model into focusing on superficial code structure instead of code semantics. To encourage SCodeR to capture semantic information from the code, we use code comments and abstract syntax sub-trees of the code to build positive samples. We conduct experiments on four code-related tasks over seven datasets. Extensive experimental results show that SCodeR achieves new state-of-the-art performance on all of them, which illustrates the effectiveness of the proposed pre-training method.
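The difference between hard and soft labels in a contrastive objective can be shown with a short sketch. This is one plausible formulation, assumed for illustration rather than taken from the paper: the loss is the cross-entropy between a soft target distribution over candidates and the softmax of their similarity scores. The function names and the temperature value are hypothetical.

```python
import math

def softmax(scores, temperature=1.0):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp((s - m) / temperature) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def soft_contrastive_loss(similarities, soft_labels, temperature=0.1):
    """Cross-entropy between soft labels and the softmax of similarities.
    With a one-hot label this reduces to the usual contrastive (InfoNCE-style) loss."""
    probs = softmax(similarities, temperature)
    return -sum(q * math.log(p) for q, p in zip(soft_labels, probs) if q > 0)

# Similarity of one query to three candidates (illustrative numbers).
sims = [0.9, 0.7, 0.1]
hard_loss = soft_contrastive_loss(sims, [1.0, 0.0, 0.0])
# A soft label gives partial credit to the second, related candidate.
soft_loss = soft_contrastive_loss(sims, [0.8, 0.2, 0.0])
```

With soft labels, a candidate that is related to the query but not its designated positive is no longer pushed away as hard as an unrelated one.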

Explanations from Large Language Models Make Small Reasoners Better

Oct 13, 2022 · Shiyang Li, Jianshu Chen, Yelong Shen, Zhiyu Chen, Xinlu Zhang, Zekun Li, Hong Wang, Jing Qian, Baolin Peng, Yi Mao, Wenhu Chen, Xifeng Yan

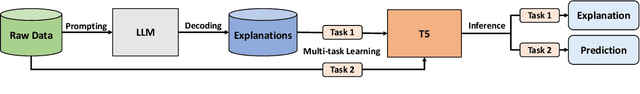

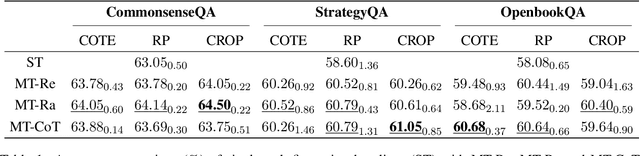

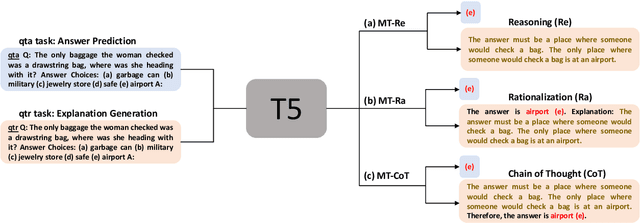

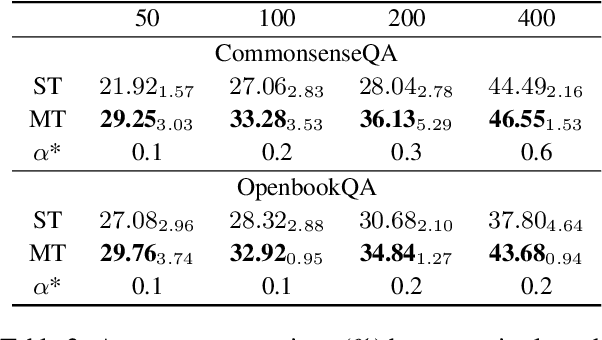

Integrating free-text explanations into in-context learning of large language models (LLMs) has been shown to elicit strong reasoning capabilities along with reasonable explanations. In this paper, we consider the problem of leveraging the explanations generated by LLMs to improve the training of small reasoners, which are more favorable in real production deployment due to their low cost. We systematically explore three approaches to generating explanations from LLMs and use a multi-task learning framework to help small models acquire strong reasoning power together with explanation generation capabilities. Experiments on multiple reasoning tasks show that our method consistently and significantly outperforms finetuning baselines across different settings, and even performs better than finetuning/prompting a 60x larger GPT-3 (175B) model by up to 9.5% in accuracy. As a side benefit, human evaluation further shows that our method can generate high-quality explanations to justify its predictions, moving towards the goal of explainable AI.

Joint Generator-Ranker Learning for Natural Language Generation

Jun 28, 2022 · Weizhou Shen, Yeyun Gong, Yelong Shen, Song Wang, Xiaojun Quan, Nan Duan, Weizhu Chen

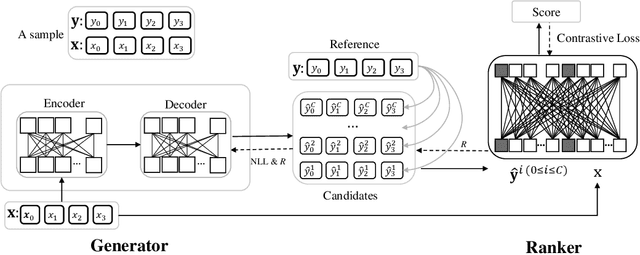

Due to exposure bias, most existing natural language generation (NLG) models trained by maximizing the likelihood objective produce poor text at inference time. To tackle this problem, we revisit the generate-then-rank framework and propose a joint generator-ranker (JGR) training algorithm for text generation tasks. In JGR, the generator model is trained by maximizing two objectives: the likelihood of the training corpus and the expected reward given by the ranker model. Meanwhile, the ranker model takes input samples from the generator model and learns to distinguish good samples from the generation pool. The generator and ranker models are optimized alternately until convergence. In the empirical study, the proposed JGR model achieves new state-of-the-art performance on five public benchmarks covering three popular generation tasks: summarization, question generation, and response generation. We will make code, data, and models available at https://github.com/microsoft/AdvNLG.

A Self-Paced Mixed Distillation Method for Non-Autoregressive Generation

May 23, 2022 · Weizhen Qi, Yeyun Gong, Yelong Shen, Jian Jiao, Yu Yan, Houqiang Li, Ruofei Zhang, Weizhu Chen, Nan Duan

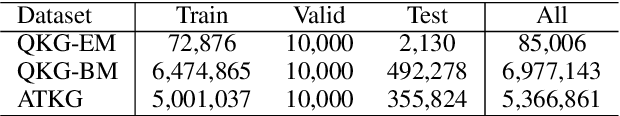

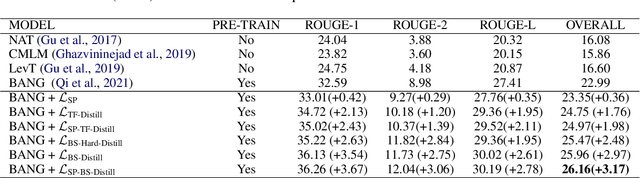

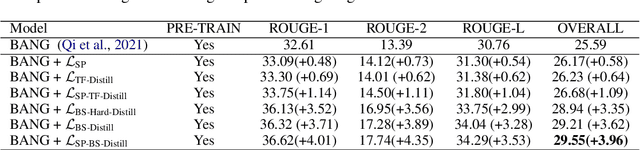

Non-Autoregressive (NAR) generation is a sequence generation paradigm that removes the dependency between target tokens. It can efficiently reduce text generation latency by using parallel decoding in place of token-by-token sequential decoding. However, due to the known multi-modality problem, NAR models significantly under-perform Auto-regressive (AR) models on various language generation tasks. Among the NAR models, BANG is the first large-scale model pre-trained on an English unlabeled raw text corpus. It treats different generation paradigms as its pre-training tasks, including AR, NAR, and semi-Non-Autoregressive (semi-NAR) information flow, with a multi-stream strategy. It achieves state-of-the-art performance without any distillation techniques. However, AR distillation has been shown to be a very effective solution for improving NAR performance. In this paper, we propose a novel self-paced mixed distillation method to further improve the generation quality of BANG. First, we propose a mixed distillation strategy based on the AR stream knowledge. Second, we encourage the model to focus on samples with the same modality through self-paced learning. The proposed self-paced mixed distillation algorithm improves the generation quality and has no influence on the inference latency. We carry out extensive experiments on summarization and question generation tasks to validate its effectiveness. To further illustrate the commercial value of our approach, we conduct experiments on three generation tasks in real-world advertising applications. Experimental results on commercial data show the effectiveness of the proposed model. Compared with BANG, it achieves a significant BLEU score improvement. Compared with the auto-regressive generation method, it achieves more than a 7x speedup.

CAMERO: Consistency Regularized Ensemble of Perturbed Language Models with Weight Sharing

Apr 18, 2022 · Chen Liang, Pengcheng He, Yelong Shen, Weizhu Chen, Tuo Zhao

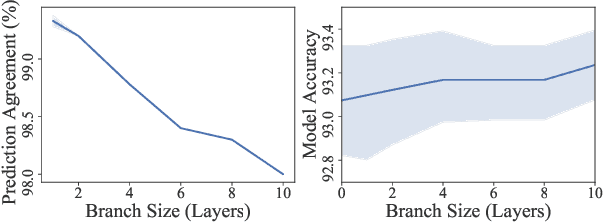

Model ensembling is a popular approach to producing a low-variance and well-generalized model. However, it incurs large memory and inference costs, which are often not affordable for real-world deployment. Existing work has resorted to sharing weights among models. However, as the proportion of shared weights increases, the resulting models tend to become similar, and the benefits of ensembling diminish. To retain ensemble benefits while maintaining a low memory cost, we propose a consistency-regularized ensemble learning approach based on perturbed models, named CAMERO. Specifically, we share the weights of the bottom layers across all models and apply different perturbations to the hidden representations of different models, which effectively promotes model diversity. Meanwhile, we apply a prediction consistency regularizer across the perturbed models to control the variance due to the model diversity. Our experiments using large language models demonstrate that CAMERO significantly improves the generalization performance of the ensemble model. Specifically, CAMERO outperforms the standard ensemble of 8 BERT-base models on the GLUE benchmark by 0.7 with a significantly smaller model size (114.2M vs. 880.6M).
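A prediction consistency regularizer of the kind mentioned above can be sketched as follows. The exact form used by CAMERO is not given in this abstract, so this is an assumed, commonly used variant: the average KL divergence from each perturbed model's predicted distribution to the ensemble mean. The function names are hypothetical.

```python
import math

def kl(p, q):
    """KL divergence KL(p || q) for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def consistency_penalty(predictions):
    """Average KL from each model's prediction to the ensemble mean
    (an assumed form of a consistency regularizer)."""
    n = len(predictions)
    mean = [sum(col) / n for col in zip(*predictions)]
    return sum(kl(p, mean) for p in predictions) / n

# Class probabilities from three perturbed models on one input (illustrative).
agree = [[0.7, 0.3], [0.7, 0.3], [0.7, 0.3]]
disagree = [[0.9, 0.1], [0.5, 0.5], [0.2, 0.8]]

penalty_agree = consistency_penalty(agree)
penalty_disagree = consistency_penalty(disagree)
```

The penalty is zero when all models agree and grows as their predictions spread out, so adding it to the training loss trades off diversity against variance.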

Controllable Natural Language Generation with Contrastive Prefixes

Feb 27, 2022 · Jing Qian, Li Dong, Yelong Shen, Furu Wei, Weizhu Chen

To guide the generation of large pretrained language models (LMs), previous work has focused on directly fine-tuning the language model or utilizing an attribute discriminator. In this work, we propose a novel lightweight framework for controllable GPT2 generation, which uses a set of small attribute-specific vectors, called prefixes, to steer natural language generation. Unlike prefix-tuning, where each prefix is trained independently, we take the relationship among prefixes into consideration and train multiple prefixes simultaneously. We propose a novel supervised method and an unsupervised method to train the prefixes for single-aspect control, and the combination of these two methods can achieve multi-aspect control. Experimental results on both single-aspect and multi-aspect control show that our methods can guide generation towards the desired attributes while keeping high linguistic quality.
