Plot Retrieval as an Assessment of Abstract Semantic Association

Nov 03, 2023 Shicheng Xu, Liang Pang, Jiangnan Li, Mo Yu, Fandong Meng, Huawei Shen, Xueqi Cheng, Jie Zhou

Retrieving relevant plots from the book for a query is a critical task, which can improve the reading experience and efficiency of readers. Readers usually only give an abstract and vague description as the query based on their own understanding, summaries, or speculations of the plot, which requires the retrieval model to have a strong ability to estimate the abstract semantic associations between the query and candidate plots. However, existing information retrieval (IR) datasets cannot reflect this ability well. In this paper, we propose Plot Retrieval, a labeled dataset to train and evaluate the performance of IR models on the novel task Plot Retrieval. Text pairs in Plot Retrieval have less word overlap and more abstract semantic association, which can reflect the ability of the IR models to estimate the abstract semantic association, rather than just traditional lexical or semantic matching. Extensive experiments across various lexical retrieval, sparse retrieval, dense retrieval, and cross-encoder methods compared with human studies on Plot Retrieval show current IR models still struggle in capturing abstract semantic association between texts. Plot Retrieval can be the benchmark for further research on the semantic association modeling ability of IR models.
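To illustrate why low word overlap is hard for lexical matching, here is a minimal sketch that scores a query against two passages with Jaccard word overlap. The query and both passages are invented for illustration and are not drawn from the Plot Retrieval dataset, and Jaccard overlap is only a stand-in for lexical retrievers in general.

```python
import re

def tokens(text):
    """Lowercase a text and return its set of word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))

def jaccard(a, b):
    """Jaccard word overlap between two texts (0 = disjoint, 1 = identical vocab)."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb)

# Hypothetical examples, not taken from the dataset.
query = "the hero finally forgives his rival"
relevant = "He lowered his sword and offered his hand to the man who had wronged him"
distractor = "The hero and his rival finally met at the gate"

relevant_score = jaccard(query, relevant)
distractor_score = jaccard(query, distractor)
print(f"relevant:   {relevant_score:.3f}")
print(f"distractor: {distractor_score:.3f}")
```

In this toy case the passage that actually depicts forgiveness shares only function words with the query, so a purely lexical score ranks the distractor higher.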

Exploring Unified Perspective For Fast Shapley Value Estimation

Nov 02, 2023 Borui Zhang, Baotong Tian, Wenzhao Zheng, Jie Zhou, Jiwen Lu

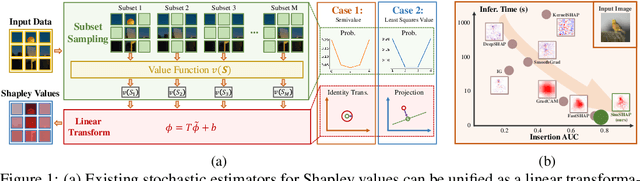

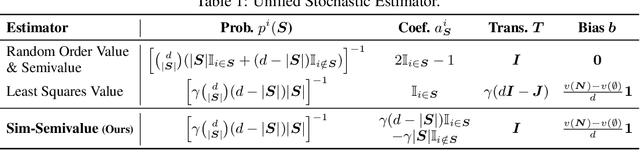

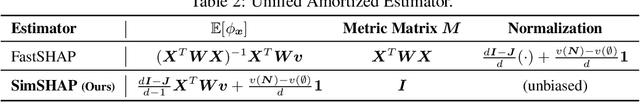

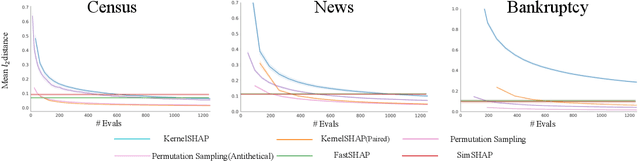

Shapley values have emerged as a widely accepted and trustworthy tool, grounded in theoretical axioms, for addressing challenges posed by black-box models like deep neural networks. However, computing Shapley values encounters exponential complexity in the number of features. Various approaches, including ApproSemivalue, KernelSHAP, and FastSHAP, have been explored to expedite the computation. We analyze the consistency of existing works and conclude that stochastic estimators can be unified as the linear transformation of importance sampling of feature subsets. Based on this, we investigate the possibility of designing simple amortized estimators and propose a straightforward and efficient one, SimSHAP, by eliminating redundant techniques. Extensive experiments conducted on tabular and image datasets validate the effectiveness of our SimSHAP, which significantly accelerates the computation of accurate Shapley values.
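As a rough illustration of the exponential cost and of sampling-based estimation, the sketch below computes exact Shapley values by subset enumeration and compares them with a plain Monte Carlo permutation-sampling estimate. This is a generic textbook estimator, not SimSHAP or any method from the paper; `toy_value` is a hypothetical three-feature game chosen so the exact answer is easy to check by hand.

```python
import itertools
import math
import random

def exact_shapley(value, n):
    """Exact Shapley values by enumerating all subsets (exponential in n)."""
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for a coalition of this size that excludes i.
            weight = (math.factorial(size) * math.factorial(n - size - 1)
                      / math.factorial(n))
            for subset in itertools.combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def sampled_shapley(value, n, num_permutations, seed=0):
    """Monte Carlo estimate: average marginal contributions over random orders."""
    rng = random.Random(seed)
    phi = [0.0] * n
    for _ in range(num_permutations):
        order = list(range(n))
        rng.shuffle(order)
        coalition = frozenset()
        prev = value(coalition)
        for i in order:
            coalition = coalition | {i}
            cur = value(coalition)
            phi[i] += cur - prev
            prev = cur
    return [p / num_permutations for p in phi]

def toy_value(subset):
    """Hypothetical game: additive weights plus an interaction of features 0 and 1."""
    weights = [1.0, 2.0, 3.0]
    total = sum(weights[i] for i in subset)
    if 0 in subset and 1 in subset:
        total += 4.0
    return total

exact = exact_shapley(toy_value, 3)
approx = sampled_shapley(toy_value, 3, num_permutations=2000)
print("exact: ", exact)
print("approx:", approx)
```

The enumeration calls the value function on every subset, which is what becomes infeasible as the number of features grows; the sampled version trades that cost for estimation variance.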

MCUFormer: Deploying Vision Transformers on Microcontrollers with Limited Memory

Oct 27, 2023 Yinan Liang, Ziwei Wang, Xiuwei Xu, Yansong Tang, Jie Zhou, Jiwen Lu

Due to the high price and heavy energy consumption of GPUs, deploying deep models on IoT devices such as microcontrollers makes significant contributions to ecological AI. Conventional methods successfully enable convolutional neural network inference on high-resolution images on microcontrollers, while a framework for vision transformers, which achieve state-of-the-art performance in many vision applications, remains unexplored. In this paper, we propose a hardware-algorithm co-optimization method called MCUFormer to deploy vision transformers on microcontrollers with extremely limited memory, where we jointly design the transformer architecture and construct the inference operator library to fit the memory resource constraint. More specifically, we generalize one-shot network architecture search (NAS) to discover the optimal architecture with the highest task performance given the memory budget of the microcontroller, where we enlarge the existing search space of vision transformers by considering the low-rank decomposition dimensions and patch resolution for memory reduction. For the construction of the inference operator library of vision transformers, we schedule the memory buffer during inference through operator integration, patch embedding decomposition, and token overwriting, allowing the memory buffer to be fully utilized to adapt to the forward pass of the vision transformer. Experimental results demonstrate that our MCUFormer achieves 73.62% top-1 accuracy on ImageNet for image classification with 320KB memory on an STM32F746 microcontroller. Code is available at https://github.com/liangyn22/MCUFormer.
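The memory effect of low-rank decomposition can be seen with simple parameter counting. The sketch below compares a dense linear layer with a rank-r factorization into two smaller matrices. The layer width of 768 and the rank of 64 are illustrative assumptions, not values reported for MCUFormer.

```python
def dense_params(d_in, d_out):
    """Weights in a dense d_out x d_in linear layer (bias ignored)."""
    return d_in * d_out

def low_rank_params(d_in, d_out, rank):
    """Weights when W is factored as (d_out x rank) @ (rank x d_in)."""
    return rank * (d_in + d_out)

# Hypothetical sizes chosen for illustration only.
d_in = d_out = 768
rank = 64

full = dense_params(d_in, d_out)
factored = low_rank_params(d_in, d_out, rank)
reduction = full / factored
print(f"dense: {full}, low-rank: {factored}, reduction: {reduction:.1f}x")
```

The factorization only saves memory when the rank is below d_in * d_out / (d_in + d_out), which is why the rank is a natural dimension to search over under a fixed memory budget.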

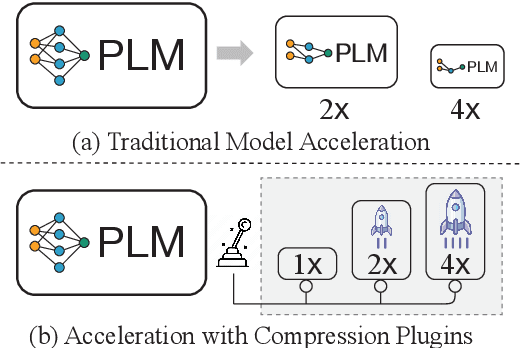

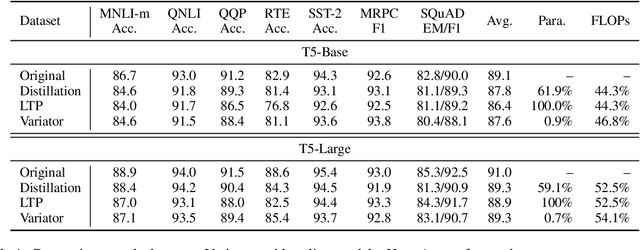

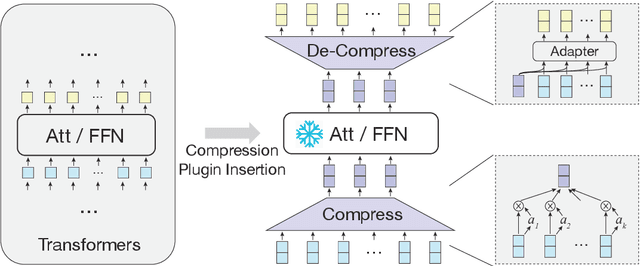

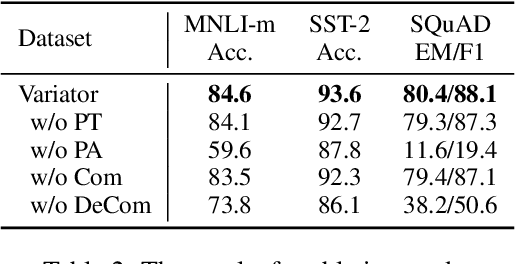

Variator: Accelerating Pre-trained Models with Plug-and-Play Compression Modules

Oct 24, 2023 Chaojun Xiao, Yuqi Luo, Wenbin Zhang, Pengle Zhang, Xu Han, Yankai Lin, Zhengyan Zhang, Ruobing Xie, Zhiyuan Liu, Maosong Sun, Jie Zhou

Pre-trained language models (PLMs) have achieved remarkable results on NLP tasks but at the expense of huge parameter sizes and the consequent computational costs. In this paper, we propose Variator, a parameter-efficient acceleration method that enhances computational efficiency through plug-and-play compression plugins. Compression plugins are designed to reduce the sequence length via compressing multiple hidden vectors into one and trained with original PLMs frozen. Different from traditional model acceleration methods, which compress PLMs to smaller sizes, Variator offers two distinct advantages: (1) In real-world applications, the plug-and-play nature of our compression plugins enables dynamic selection of different compression plugins with varying acceleration ratios based on the current workload. (2) The compression plugin comprises a few compact neural network layers with minimal parameters, significantly saving storage and memory overhead, particularly in scenarios with a growing number of tasks. We validate the effectiveness of Variator on seven datasets. Experimental results show that Variator can save 53% computational costs using only 0.9% additional parameters with a performance drop of less than 2%. Moreover, when the model scales to billions of parameters, Variator matches the strong performance of uncompressed PLMs.
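The idea of shortening a sequence by merging several hidden vectors into one can be sketched as follows. Mean pooling is used here purely as a stand-in: the abstract describes the compression plugins as small trained neural layers, and their actual architecture is not given in this listing. The function name `compress_sequence` and the group size `k` are hypothetical.

```python
def compress_sequence(hidden, k):
    """Merge every k adjacent hidden vectors into one by averaging.

    `hidden` is a list of equal-length float lists (one per token).
    A trailing group with fewer than k vectors is averaged on its own.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    compressed = []
    for start in range(0, len(hidden), k):
        group = hidden[start:start + k]
        dim = len(group[0])
        merged = [sum(vec[d] for vec in group) / len(group) for d in range(dim)]
        compressed.append(merged)
    return compressed

# Five 2-dimensional hidden vectors compressed with k = 2.
hidden_states = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
short = compress_sequence(hidden_states, k=2)
print(len(hidden_states), "->", len(short))
print(short)
```

Because later layers then process roughly 1/k as many positions, their compute drops accordingly, and choosing a different k gives a different acceleration ratio.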

Thoroughly Modeling Multi-domain Pre-trained Recommendation as Language

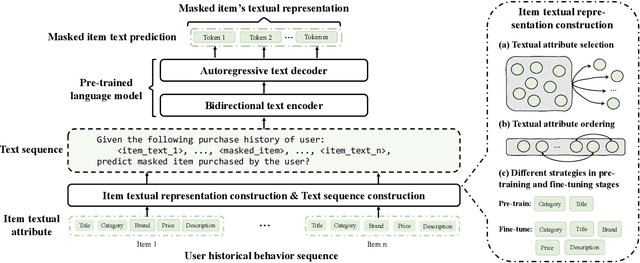

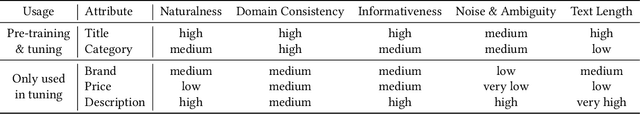

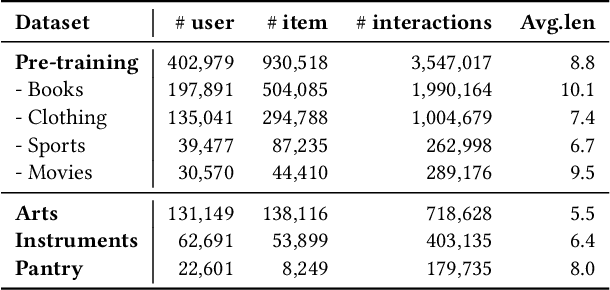

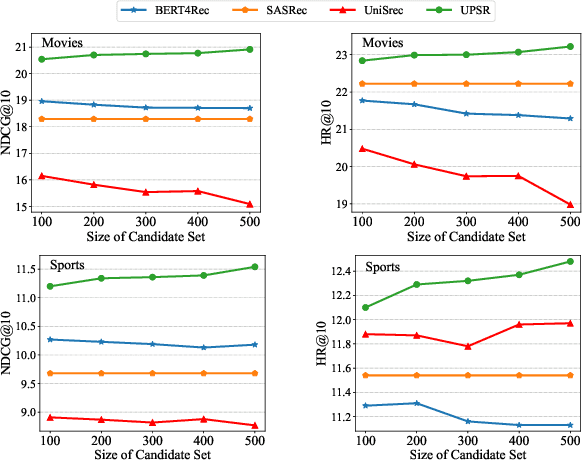

Oct 20, 2023 Zekai Qu, Ruobing Xie, Chaojun Xiao, Yuan Yao, Zhiyuan Liu, Fengzong Lian, Zhanhui Kang, Jie Zhou

With pre-trained language models (PLMs) proving effective across various NLP tasks, pioneering efforts attempt to explore the possible cooperation of the general textual information in PLMs with the personalized behavioral information in user historical behavior sequences to enhance sequential recommendation (SR). However, despite the commonalities of input format and task goal, there are huge gaps between the behavioral and textual information, which obstruct thoroughly modeling SR as language modeling via PLMs. To bridge the gap, we propose a novel Unified pre-trained language model enhanced sequential recommendation (UPSR), aiming to build a unified pre-trained recommendation model for multi-domain recommendation tasks. We formally design five key indicators, namely naturalness, domain consistency, informativeness, noise & ambiguity, and text length, to guide the text->item adaptation and behavior sequence->text sequence adaptation differently for pre-training and fine-tuning stages, which are essential but under-explored by previous works. In experiments, we conduct extensive evaluations on seven datasets with both tuning and zero-shot settings and achieve the overall best performance. Comprehensive model analyses also provide valuable insights for behavior modeling via PLMs, shedding light on large pre-trained recommendation models. The source code will be released in the future.

Boosting Inference Efficiency: Unleashing the Power of Parameter-Shared Pre-trained Language Models

Oct 19, 2023 Weize Chen, Xiaoyue Xu, Xu Han, Yankai Lin, Ruobing Xie, Zhiyuan Liu, Maosong Sun, Jie Zhou

Parameter-shared pre-trained language models (PLMs) have emerged as a successful approach in resource-constrained environments, enabling substantial reductions in model storage and memory costs without significant performance compromise. However, parameter sharing does not alleviate the computational burden of inference, which limits its practicality in situations with stringent latency requirements or limited computational resources. Building upon neural ordinary differential equations (ODEs), we introduce a straightforward technique to enhance the inference efficiency of parameter-shared PLMs. Additionally, we propose a simple pre-training technique that leads to fully or partially shared models capable of achieving even greater inference acceleration. The experimental results demonstrate the effectiveness of our methods on both autoregressive and autoencoding PLMs, providing novel insights into more efficient utilization of parameter-shared models in resource-constrained settings.

DCRNN: A Deep Cross approach based on RNN for Partial Parameter Sharing in Multi-task Learning

Oct 18, 2023 Jie Zhou, Qian Yu

In recent years, deep learning (DL) has developed rapidly, and personalized services are exploring the use of DL algorithms to improve the performance of recommendation systems. For personalized services, a successful recommendation consists of two parts: attracting users to click the item and users being willing to consume the item. If both tasks need to be predicted at the same time, traditional recommendation systems generally train two independent models. This approach is cumbersome and does not effectively model the relationship between the two subtasks of "click-consumption". Therefore, in order to improve the success rate of recommendation and reduce computational costs, researchers are trying to model the problem with multi-task learning. At present, existing multi-task learning models generally adopt a hard parameter sharing or soft parameter sharing architecture, but these two architectures each have certain problems. Therefore, in this work, we propose a novel recommendation model based on real recommendation scenarios, Deep Cross network based on RNN for partial parameter sharing (DCRNN). The model has three innovations: 1) It adopts the idea of the cross network and uses an RNN to cross-process the features, thereby effectively improving the expressive ability of the model; 2) It innovatively proposes the structure of partial parameter sharing; 3) It can effectively capture the potential correlation between different tasks to optimize the efficiency and methods for learning different tasks.

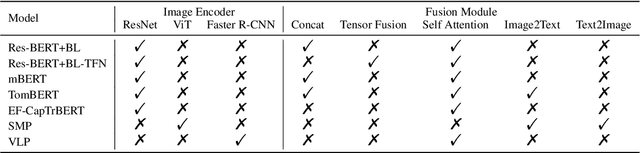

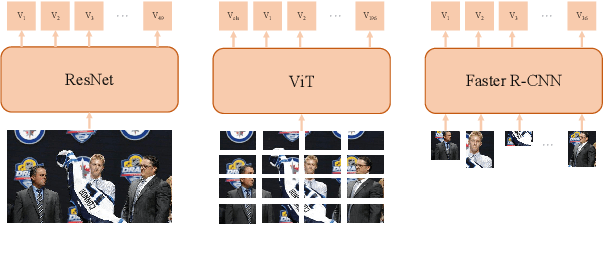

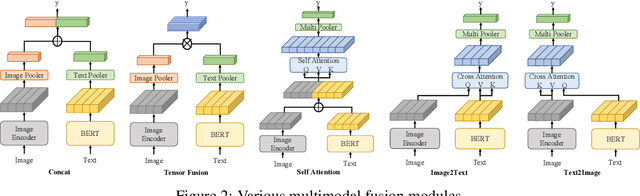

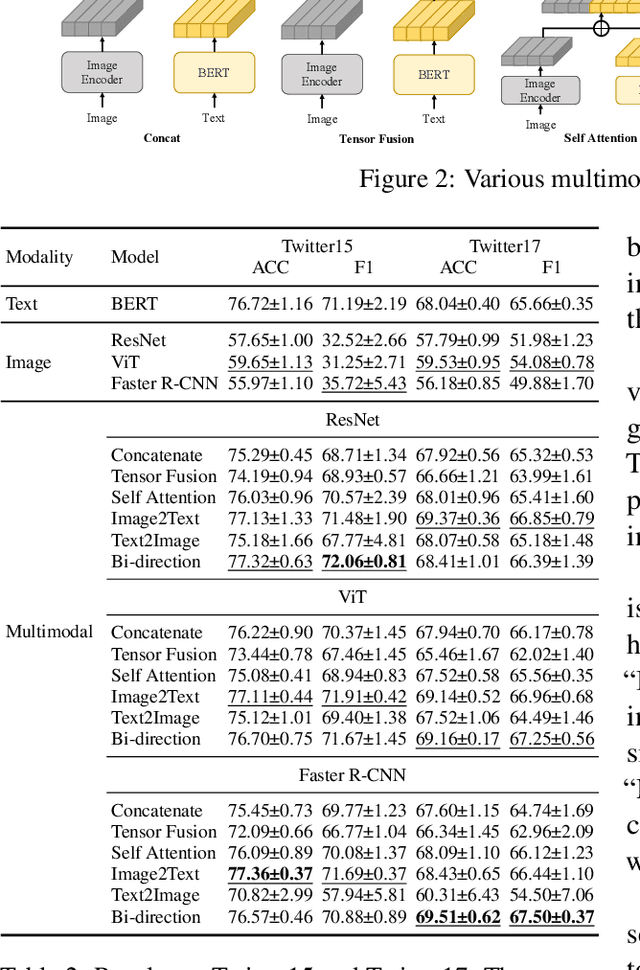

RethinkingTMSC: An Empirical Study for Target-Oriented Multimodal Sentiment Classification

Oct 14, 2023 Junjie Ye, Jie Zhou, Junfeng Tian, Rui Wang, Qi Zhang, Tao Gui, Xuanjing Huang

Recently, Target-oriented Multimodal Sentiment Classification (TMSC) has gained significant attention among scholars. However, current multimodal models have reached a performance bottleneck. To investigate the causes of this problem, we perform extensive empirical evaluation and in-depth analysis of the datasets to answer the following questions: Q1: Are the modalities equally important for TMSC? Q2: Which multimodal fusion modules are more effective? Q3: Do existing datasets adequately support the research? Our experiments and analyses reveal that current TMSC systems primarily rely on the textual modality, as most targets' sentiments can be determined solely by text. Consequently, we point out several directions to work on for the TMSC task in terms of model design and dataset construction. The code and data can be found at https://github.com/Junjie-Ye/RethinkingTMSC.

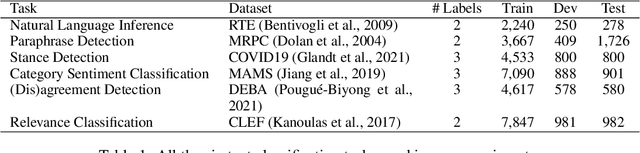

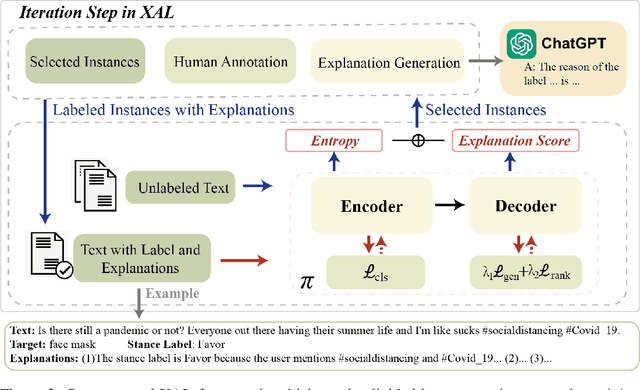

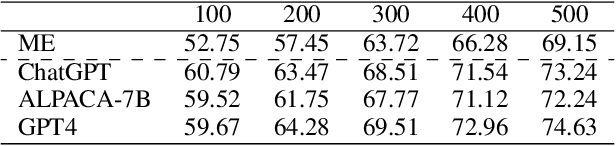

XAL: EXplainable Active Learning Makes Classifiers Better Low-resource Learners

Oct 09, 2023 Yun Luo, Zhen Yang, Fandong Meng, Yingjie Li, Fang Guo, Qinglin Qi, Jie Zhou, Yue Zhang

Active learning aims to construct an effective training set by iteratively curating the most informative unlabeled data for annotation, which is practical in low-resource tasks. Most active learning techniques in classification rely on the model's uncertainty or disagreement to choose unlabeled data. However, previous work indicates that existing models are poor at quantifying predictive uncertainty, which can lead to over-confidence in superficial patterns and a lack of exploration. Inspired by the cognitive processes in which humans deduce and predict through causal information, we propose a novel Explainable Active Learning framework (XAL) for low-resource text classification, which aims to encourage classifiers to justify their inferences and delve into unlabeled data for which they cannot provide reasonable explanations. Specifically, besides using a pre-trained bi-directional encoder for classification, we employ a pre-trained uni-directional decoder to generate and score the explanation. A ranking loss is proposed to enhance the decoder's capability in scoring explanations. During the selection of unlabeled data, we combine the predictive uncertainty of the encoder and the explanation score of the decoder to acquire informative data for annotation. As XAL is a general framework for text classification, we test our methods on six different classification tasks. Extensive experiments show that XAL achieves substantial improvement on all six tasks over previous AL methods. Ablation studies demonstrate the effectiveness of each component, and human evaluation shows that the model trained in XAL performs surprisingly well in explaining its prediction.
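The selection step, which combines the classifier's predictive uncertainty with the decoder's explanation score, can be sketched as a simple ranking. The abstract does not say how the two signals are combined, so the weighted sum, the weight `lam`, the assumption that explanation scores lie in [0, 1] with higher meaning a better explanation, and the example pool are all hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy of a predicted class distribution (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def acquisition_score(example, lam=0.5):
    """Higher means more worth annotating.

    Assumed combination: normalized entropy plus a penalty for
    well-explained examples, weighted by lam.
    """
    num_classes = len(example["probs"])
    uncertainty = entropy(example["probs"]) / math.log(num_classes)
    poorly_explained = 1.0 - example["explanation_score"]
    return lam * uncertainty + (1.0 - lam) * poorly_explained

def select_for_annotation(pool, budget, lam=0.5):
    """Return the ids of the `budget` highest-scoring unlabeled examples."""
    ranked = sorted(pool, key=lambda ex: acquisition_score(ex, lam), reverse=True)
    return [ex["id"] for ex in ranked[:budget]]

# Hypothetical unlabeled pool with model outputs already computed.
pool = [
    {"id": "a", "probs": [0.5, 0.5], "explanation_score": 0.2},
    {"id": "b", "probs": [0.99, 0.01], "explanation_score": 0.9},
    {"id": "c", "probs": [0.9, 0.1], "explanation_score": 0.1},
]
chosen = select_for_annotation(pool, budget=2)
print(chosen)
```

Example "c" is selected even though the classifier is fairly confident about it, because its explanation scores poorly; this is the kind of case that uncertainty alone would miss.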

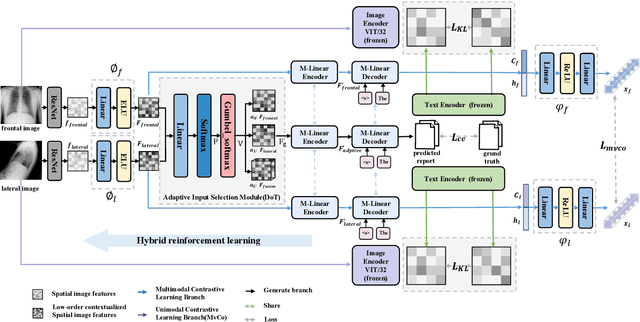

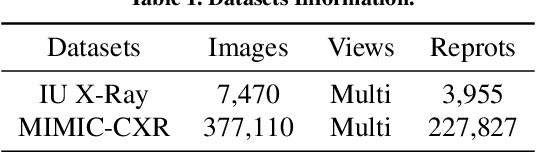

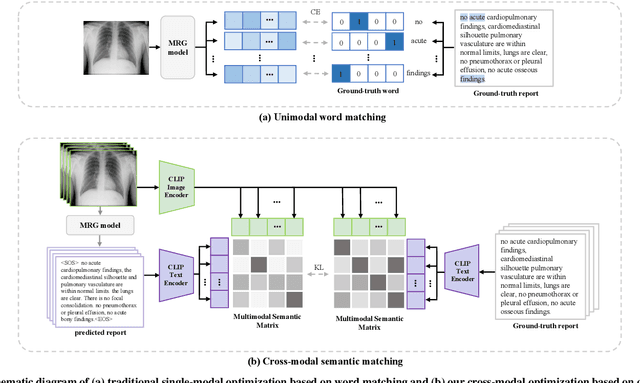

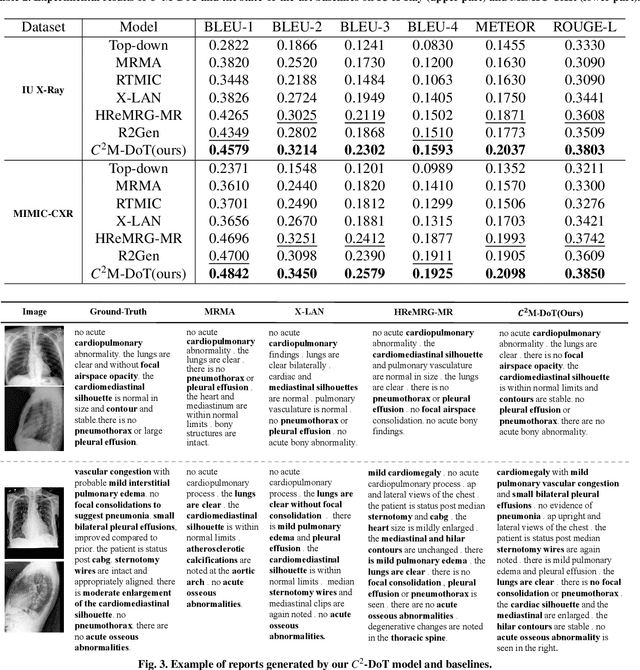

C^2M-DoT: Cross-modal consistent multi-view medical report generation with domain transfer network

Oct 09, 2023 Ruizhi Wang, Xiangtao Wang, Jie Zhou, Thomas Lukasiewicz, Zhenghua Xu

In clinical scenarios, multiple medical images with different views are usually generated simultaneously, and these images have high semantic consistency. However, most existing medical report generation methods only consider single-view data. The rich multi-view mutual information of medical images can help generate more accurate reports; however, the dependence of multi-view models on multi-view data in the inference stage severely limits their application in clinical practice. In addition, word-level optimization based on numbers ignores the semantics of reports and medical images, and the generated reports often cannot achieve good performance. Therefore, we propose a cross-modal consistent multi-view medical report generation with a domain transfer network (C^2M-DoT). Specifically, (i) a semantic-based multi-view contrastive learning medical report generation framework is adopted to utilize cross-view information to learn the semantic representation of lesions; (ii) a domain transfer network is further proposed to ensure that the multi-view report generation model can still achieve good inference performance under single-view input; (iii) meanwhile, optimization using a cross-modal consistency loss facilitates the generation of textual reports that are semantically consistent with medical images. Extensive experimental studies on two public benchmark datasets demonstrate that C^2M-DoT substantially outperforms state-of-the-art baselines in all metrics. Ablation studies also confirm the validity and necessity of each component in C^2M-DoT.
