Hao Tian

Sichuan University

Graph Kernels Based on Multi-scale Graph Embeddings

Jun 02, 2022

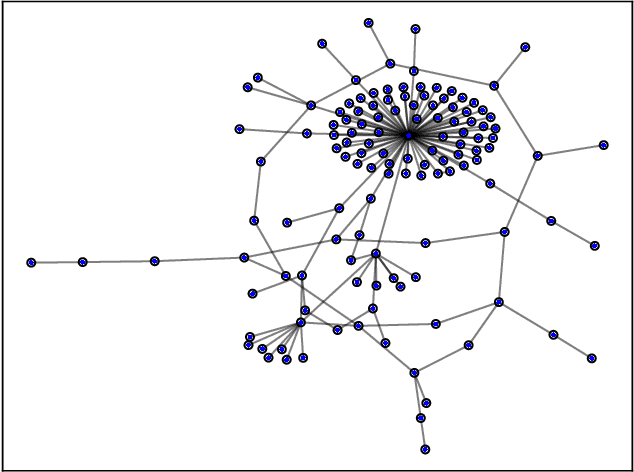

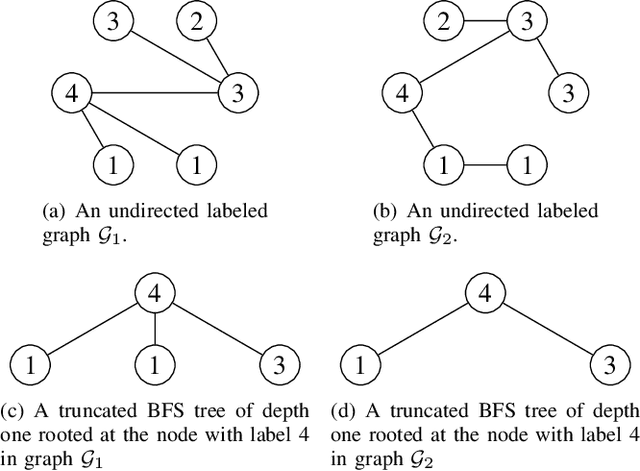

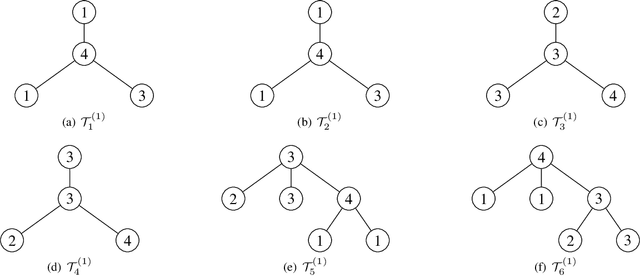

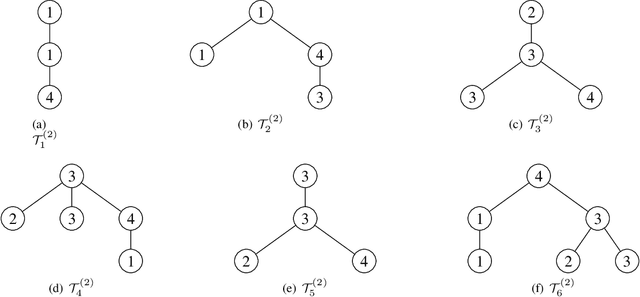

Abstract: Graph kernels are conventional methods for computing graph similarities. However, most R-convolution graph kernels face two challenges: 1) they cannot compare graphs at multiple different scales, and 2) they do not consider the distributions of substructures when computing the kernel matrix. These two challenges limit their performance. To mitigate them, we propose a novel graph kernel called the Multi-scale Path-pattern Graph kernel (MPG), at the heart of which is the multi-scale path-pattern node feature map. Each element of the path-pattern node feature map is the number of occurrences of a path-pattern around a node. A path-pattern is constructed by concatenating all the node labels along a path of a truncated BFS tree rooted at each node. Since the path-pattern node feature map can only compare graphs at local scales, we incorporate into it the multiple different scales of the graph structure, which are captured by truncated BFS trees of different depths. We use the Wasserstein distance to compute the similarity between the multi-scale path-pattern node feature maps of two graphs, taking the distributions of substructures into account. We empirically validate MPG on various benchmark graph datasets and demonstrate that it achieves state-of-the-art performance.
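The path-pattern counting described in this abstract can be illustrated with a small sketch. It is not the authors' implementation: the function names, the adjacency-list graph format, and the choice to represent a pattern as a tuple of labels are all assumptions made here for illustration. The sketch covers a single BFS depth only and omits both the multi-scale combination and the Wasserstein step.

```python
from collections import Counter, deque


def path_patterns(adj, labels, root, depth):
    """Count label sequences along root-to-node paths of a depth-limited BFS tree.

    adj: dict mapping node -> list of neighbours (hypothetical input format)
    labels: dict mapping node -> node label
    """
    counts = Counter()
    # Each queue entry holds (node, labels on the tree path from root, distance from root).
    queue = deque([(root, (labels[root],), 0)])
    visited = {root}
    while queue:
        node, pattern, dist = queue.popleft()
        counts[pattern] += 1
        if dist == depth:
            continue  # truncate the BFS tree at the requested depth
        for nb in sorted(adj[node]):
            if nb not in visited:
                visited.add(nb)
                queue.append((nb, pattern + (labels[nb],), dist + 1))
    return counts


def node_feature_maps(adj, labels, depth):
    """Path-pattern counts for every node in the graph, at one scale (depth)."""
    return {v: path_patterns(adj, labels, v, depth) for v in adj}


# Toy labelled path graph 0 - 1 - 2 with labels A, B, A.
adj = {0: [1], 1: [0, 2], 2: [1]}
labels = {0: "A", 1: "B", 2: "A"}
maps_depth2 = node_feature_maps(adj, labels, depth=2)
maps_depth1 = node_feature_maps(adj, labels, depth=1)
```

In the toy graph, node 1 sees the pattern `("B", "A")` twice because both of its neighbours carry label A, while node 0 only reaches the three-label pattern once the depth is raised from 1 to 2. This depth dependence is what the abstract refers to as different scales.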

ERNIE-Search: Bridging Cross-Encoder with Dual-Encoder via Self On-the-fly Distillation for Dense Passage Retrieval

May 18, 2022

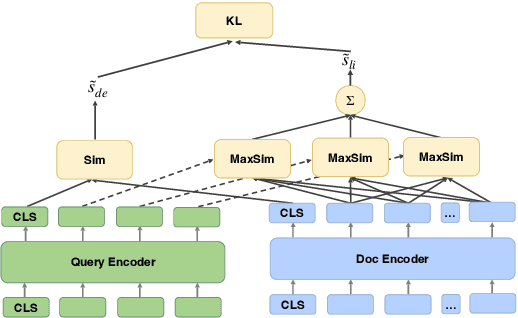

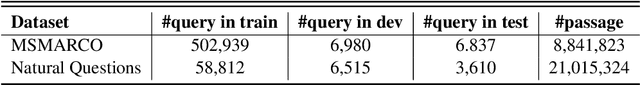

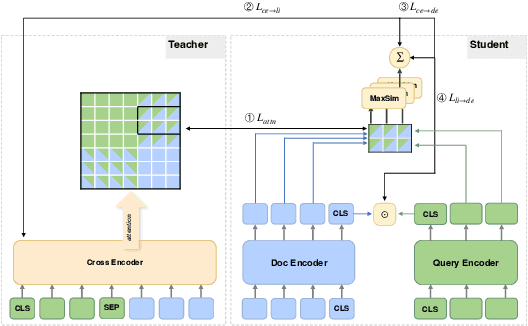

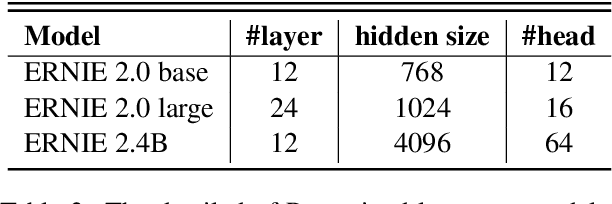

Abstract: Neural retrievers based on pre-trained language models (PLMs), such as dual-encoders, have achieved promising performance on the task of open-domain question answering (QA). Their effectiveness can reach a new state of the art by incorporating cross-architecture knowledge distillation. However, most existing studies directly apply conventional distillation methods and fail to consider the particular situation where the teacher and student have different structures. In this paper, we propose a novel distillation method that significantly advances cross-architecture distillation for dual-encoders. Our method 1) introduces a self on-the-fly distillation method that can effectively distill late interaction (i.e., ColBERT) to a vanilla dual-encoder, and 2) incorporates a cascade distillation process to further improve the performance with a cross-encoder teacher. Extensive experiments validate that our proposed solution outperforms strong baselines and establishes a new state of the art on open-domain QA benchmarks.
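To make the difference between the two scoring schemes concrete, here is a minimal sketch of dual-encoder scoring, ColBERT-style late interaction scoring, and a distillation signal between them. The abstract does not state the loss, so the KL divergence over candidate passages is an assumption, as are the mean pooling, the function names, and the toy vectors. It illustrates the general idea and is not the method from the paper.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def mean_pool(token_vecs):
    """Collapse token vectors into one vector (assumed pooling, for illustration)."""
    n = len(token_vecs)
    return [sum(col) / n for col in zip(*token_vecs)]


def dual_encoder_score(q_tokens, p_tokens):
    """Dual-encoder: one vector per side, scored by a single dot product."""
    return dot(mean_pool(q_tokens), mean_pool(p_tokens))


def late_interaction_score(q_tokens, p_tokens):
    """ColBERT-style MaxSim: each query token takes its best-matching passage token."""
    return sum(max(dot(q, p) for p in p_tokens) for q in q_tokens)


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(teacher_scores, student_scores):
    """KL(teacher || student) over the candidate passages (assumed distillation loss)."""
    t = softmax(teacher_scores)
    s = softmax(student_scores)
    return sum(ti * math.log(ti / si) for ti, si in zip(t, s))


# Toy example: one query with two token vectors, two candidate passages.
query = [[1.0, 0.0], [0.0, 1.0]]
passages = [
    [[1.0, 0.0], [0.0, 1.0]],  # matches each query token exactly
    [[0.5, 0.5]],              # a single blended token
]
teacher = [late_interaction_score(query, p) for p in passages]
student = [dual_encoder_score(query, p) for p in passages]
loss = kl_divergence(teacher, student)
```

With these toy vectors, mean pooling gives both passages the same dual-encoder score, whereas late interaction prefers the first passage. The resulting positive divergence is the kind of signal a student could be trained to reduce.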

Accurate ADMET Prediction with XGBoost

Apr 15, 2022

Abstract: The absorption, distribution, metabolism, excretion, and toxicity (ADMET) properties are important in drug discovery as they define efficacy and safety. Here, we apply an ensemble of features, including fingerprints and descriptors, and a tree-based machine learning model, extreme gradient boosting, for accurate ADMET prediction. Our model performs well in the Therapeutics Data Commons ADMET benchmark group. Across the 22 tasks, our model ranks first in 10 and in the top 3 in 18.

ERNIE-SPARSE: Learning Hierarchical Efficient Transformer Through Regularized Self-Attention

Mar 23, 2022

Abstract: Sparse Transformer has recently attracted a lot of attention because of its ability to reduce the quadratic dependency on the sequence length. We argue that two factors, information bottleneck sensitivity and inconsistency between different attention topologies, could affect the performance of the Sparse Transformer. This paper proposes a model named ERNIE-Sparse. It consists of two distinctive parts: (i) a Hierarchical Sparse Transformer (HST) that sequentially unifies local and global information, and (ii) Self-Attention Regularization (SAR), a novel regularization method designed to minimize the distance between transformers with different attention topologies. We evaluate ERNIE-Sparse in two ways. First, we perform experiments on a multi-modal long sequence modeling benchmark, Long Range Arena (LRA). Experimental results demonstrate that ERNIE-Sparse significantly outperforms a variety of strong baseline methods, including dense attention and other efficient sparse attention methods, with an improvement of 2.77 percentage points (57.78% vs. 55.01%). Second, to further show the effectiveness of our method, we pretrain ERNIE-Sparse and verify it on 3 text classification and 2 QA downstream tasks, achieving improvements of 0.83 percentage points on the classification benchmark (92.46% vs. 91.63%) and 3.24 percentage points on the QA benchmark (74.67% vs. 71.43%). These results further demonstrate its superior performance.

Indicative Image Retrieval: Turning Blackbox Learning into Grey

Jan 28, 2022

Abstract: Deep learning became a game changer for image retrieval soon after it was introduced. It makes feature extraction (by representation learning) the core of image retrieval, with relevance/matching evaluation reduced to simple similarity metrics. In many applications, we need the matching evidence to be indicated rather than just a ranked list (e.g., the locations of the target proteins/cells/lesions in medical images). This is analogous to highlighting matched words in search engines. However, it is not easy to implement without explicit relevance/matching modeling, and deep representation learning models are not suitable for this because of their blackbox nature. In this paper, we revisit the importance of relevance/matching modeling in the deep learning era with an indicative retrieval setting. The study shows that it is possible to skip the representation learning and model the matching evidence directly. Removing the dependency on pre-trained models avoids a number of related issues (e.g., the domain gap between classification and retrieval, and the detail diffusion caused by convolution). More importantly, the study demonstrates that the matching can be explicitly modeled and backtracked later to generate the matching evidence indications, which can improve the explainability of deep inference. Our method achieves the best performance reported in the literature on both Oxford-5k and Paris-6k, setting a new record of 97.77% on Oxford-5k (97.81% on Paris-6k) without extracting any deep features.

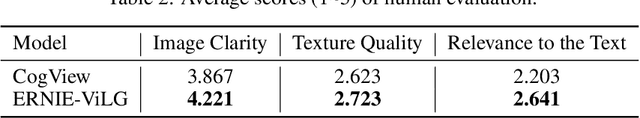

ERNIE-ViLG: Unified Generative Pre-training for Bidirectional Vision-Language Generation

Dec 31, 2021

Abstract: Conventional methods for image-text generation mainly tackle the naturally bidirectional generation tasks separately, focusing on designing task-specific frameworks to improve the quality and fidelity of the generated samples. Recently, Vision-Language Pre-training models have greatly improved the performance of image-to-text generation tasks, but large-scale pre-training models for the text-to-image synthesis task are still under-developed. In this paper, we propose ERNIE-ViLG, a unified generative pre-training framework for bidirectional image-text generation with a transformer model. Based on image quantization models, we formulate both image generation and text generation as autoregressive generative tasks conditioned on the text/image input. The bidirectional image-text generative modeling eases the semantic alignments across vision and language. For the text-to-image generation process, we further propose an end-to-end training method to jointly learn the visual sequence generator and the image reconstructor. To explore the landscape of large-scale pre-training for bidirectional text-image generation, we train a 10-billion parameter ERNIE-ViLG model on a large-scale dataset of 145 million (Chinese) image-text pairs. The model achieves state-of-the-art performance for both text-to-image and image-to-text tasks, obtaining an FID of 7.9 on MS-COCO for text-to-image synthesis and the best results on COCO-CN and AIC-ICC for image captioning.

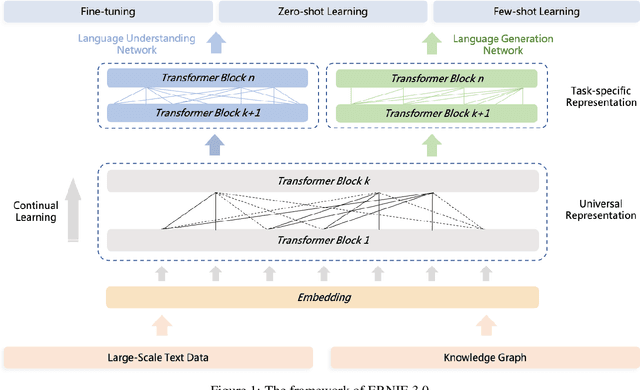

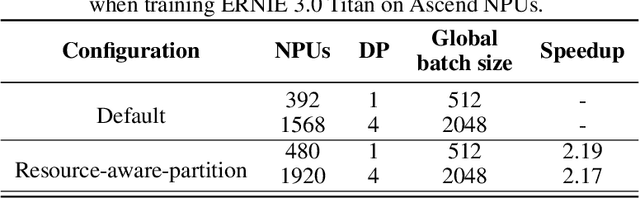

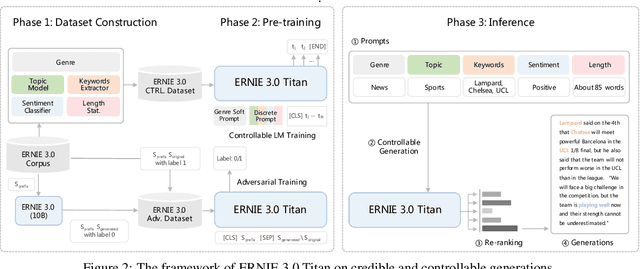

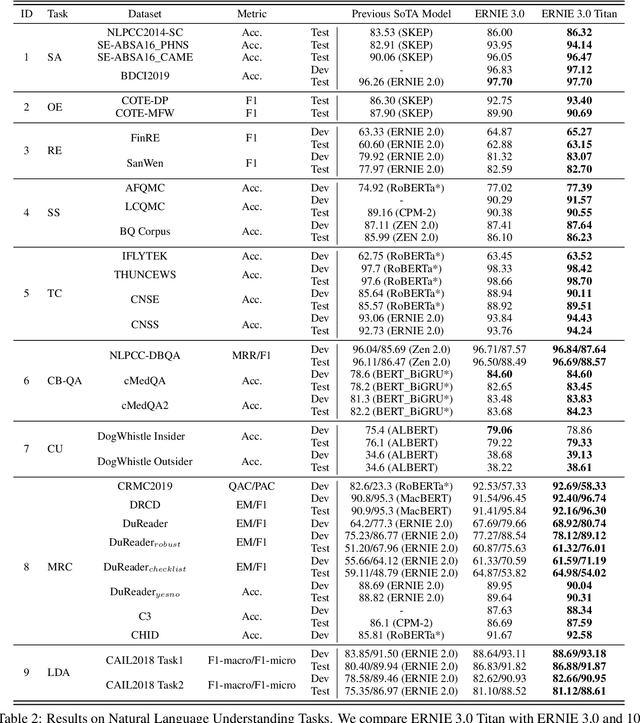

ERNIE 3.0 Titan: Exploring Larger-scale Knowledge Enhanced Pre-training for Language Understanding and Generation

Dec 23, 2021

Abstract: Pre-trained language models have achieved state-of-the-art results in various Natural Language Processing (NLP) tasks. GPT-3 has shown that scaling up pre-trained language models can further exploit their enormous potential. A unified framework named ERNIE 3.0 was recently proposed for pre-training large-scale knowledge enhanced models and was used to train a model with 10 billion parameters. ERNIE 3.0 outperformed the state-of-the-art models on various NLP tasks. To explore the performance of scaling up ERNIE 3.0, we train a hundred-billion-parameter model called ERNIE 3.0 Titan with up to 260 billion parameters on the PaddlePaddle platform. Furthermore, we design a self-supervised adversarial loss and a controllable language modeling loss to make ERNIE 3.0 Titan generate credible and controllable texts. To reduce the computation overhead and carbon emission, we propose an online distillation framework for ERNIE 3.0 Titan, in which the teacher model teaches students and trains itself simultaneously. ERNIE 3.0 Titan is the largest Chinese dense pre-trained model so far. Empirical results show that ERNIE 3.0 Titan outperforms the state-of-the-art models on 68 NLP datasets.

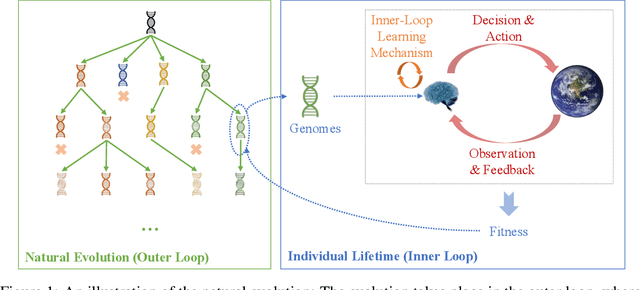

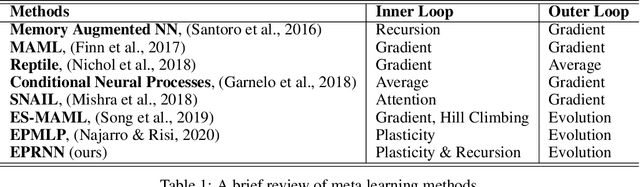

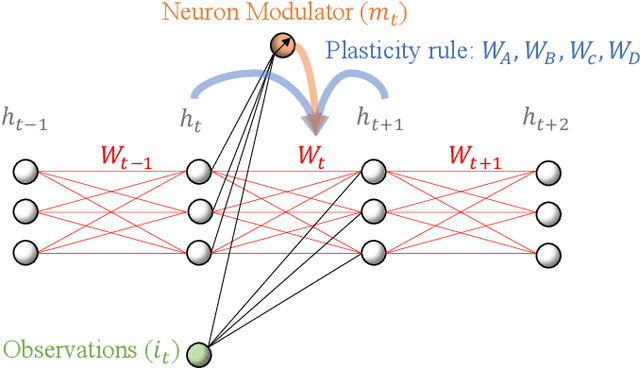

Do What Nature Did To Us: Evolving Plastic Recurrent Neural Networks For Task Generalization

Sep 29, 2021

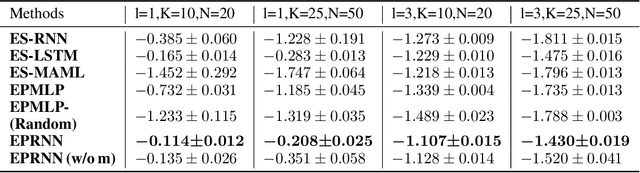

Abstract: While artificial neural networks (ANNs) have been widely adopted in machine learning, researchers are increasingly concerned with the gaps between ANNs and biological neural networks (BNNs). In this paper, we propose a framework named Evolutionary Plastic Recurrent Neural Networks (EPRNN). Inspired by BNNs, EPRNN combines Evolution Strategies, Plasticity Rules, and Recursion-based Learning in one meta learning framework for generalization to different tasks. More specifically, EPRNN incorporates nested loops for meta learning: an outer loop searches for optimal initial parameters of the neural network and learning rules, and an inner loop adapts to specific tasks. In the inner loop of EPRNN, we effectively attain both long term memory and short term memory by forging plasticity with recursion-based learning mechanisms, both of which are believed to be responsible for memristance in BNNs. The inner-loop setting closely simulates that of BNNs, which neither query any gradient oracle for optimization nor require the exact forms of learning objectives. To evaluate the performance of EPRNN, we carry out extensive experiments in two groups of tasks: Sequence Predicting and Wheeled Robot Navigating. The experimental results demonstrate the unique advantage of EPRNN compared to state-of-the-art methods based on plasticity and recursion, while yielding comparably good performance against deep learning based approaches in the tasks. The results suggest the potential of EPRNN to generalize to a variety of tasks and encourage more efforts in plasticity and recursion based learning mechanisms.

Reinforcement Learning with Evolutionary Trajectory Generator: A General Approach for Quadrupedal Locomotion

Sep 16, 2021

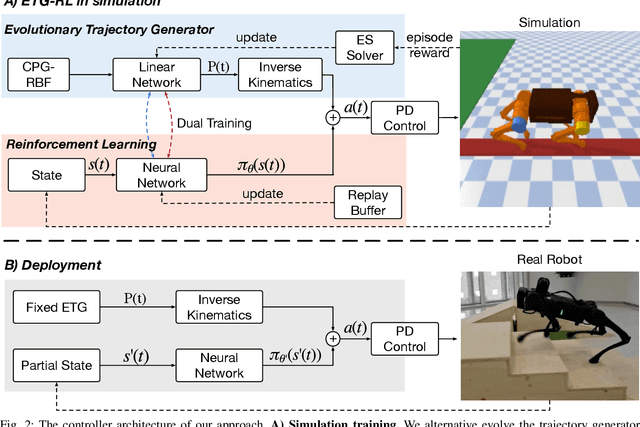

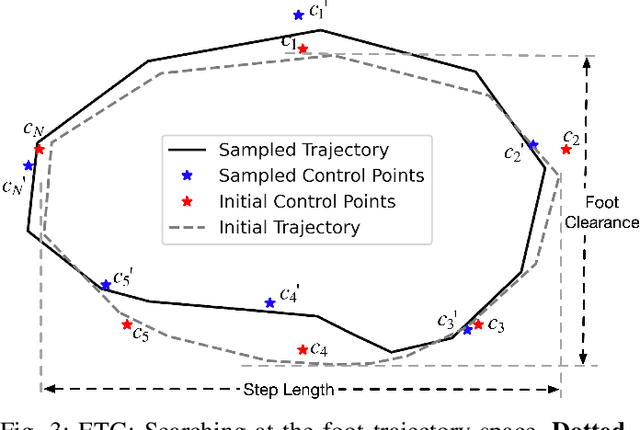

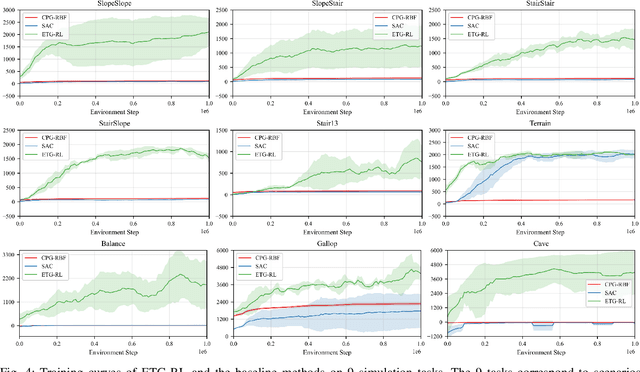

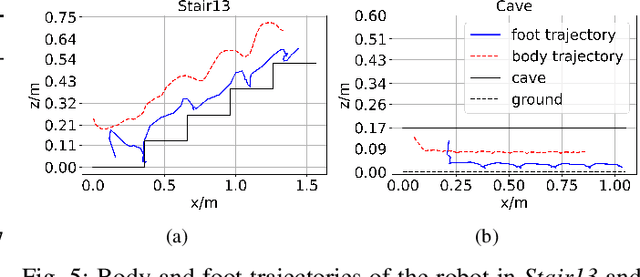

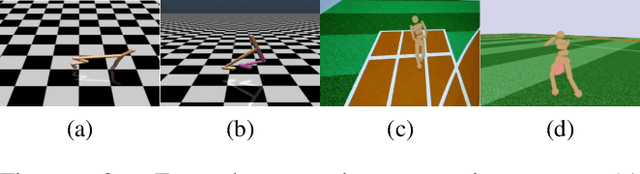

Abstract: Recently, reinforcement learning (RL) has emerged as a promising approach for quadrupedal locomotion, which can save the manual effort required by conventional approaches such as designing skill-specific controllers. However, due to the complex nonlinear dynamics in quadrupedal robots and reward sparsity, it is still difficult for RL to learn effective gaits from scratch, especially in challenging tasks such as walking over a balance beam. To alleviate this difficulty, we propose a novel RL-based approach that contains an evolutionary foot trajectory generator. Unlike prior methods that use a fixed trajectory generator, the generator continually optimizes the shape of the output trajectory for the given task, providing diversified motion priors to guide the policy learning. The policy is trained with reinforcement learning to output residual control signals that fit different gaits. We then optimize the trajectory generator and policy network alternately to stabilize the training and share the exploratory data to improve sample efficiency. As a result, our approach can solve a range of challenging tasks in simulation by learning from scratch, including walking on a balance beam and crawling through a cave. To further verify the effectiveness of our approach, we deploy the controller learned in simulation on a 12-DoF quadrupedal robot, and it can successfully traverse challenging scenarios with efficient gaits.

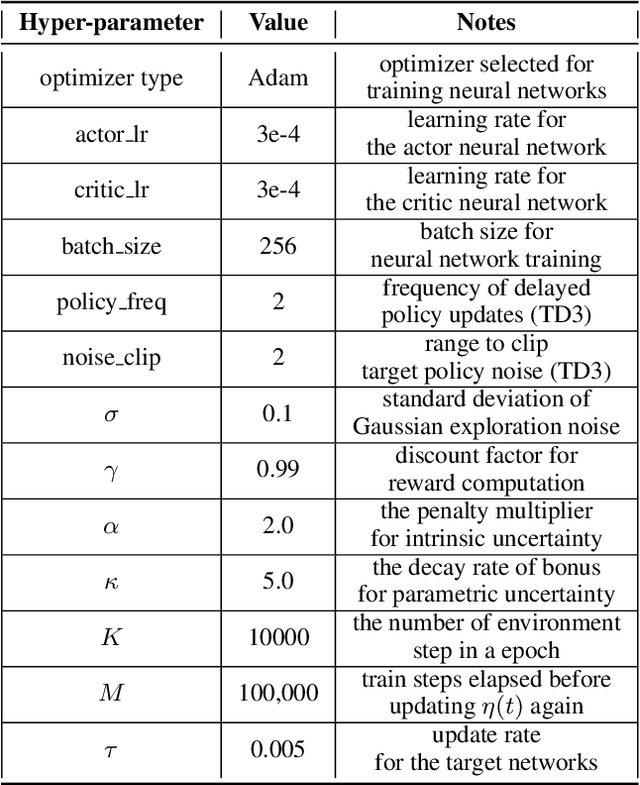

ADER: Adapting between Exploration and Robustness for Actor-Critic Methods

Sep 08, 2021

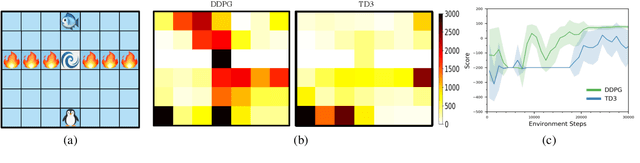

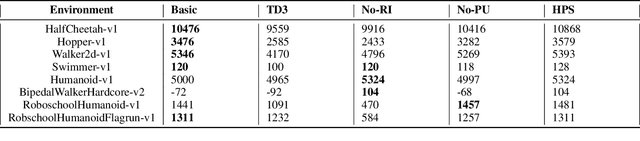

Abstract: Combining off-policy reinforcement learning methods with function approximators such as neural networks has been found to lead to overestimation of the value function and sub-optimal solutions. Improvements such as TD3 have been proposed to address this issue. However, we surprisingly find that its performance lags behind vanilla actor-critic methods (such as DDPG) in some primitive environments. In this paper, we show that the failure in some cases can be attributed to insufficient exploration. We reveal the cause of insufficient exploration in TD3 and propose a novel algorithm for this problem that ADapts between Exploration and Robustness, namely ADER. To enhance the exploration ability while eliminating the overestimation bias, we introduce a dynamic penalty term in value estimation calculated from estimated uncertainty, which takes into account different compositions of the uncertainty in different learning stages. Experiments in several challenging environments demonstrate the superiority of the proposed method in continuous control tasks.
