George K. Karagiannidis

A Survey of Pinching-Antenna Systems (PASS)

Jan 26, 2026

Abstract:The pinching-antenna system (PASS), recently proposed as a flexible-antenna technology, has been regarded as a promising solution for several challenges in next-generation wireless networks. It provides large-scale antenna reconfiguration, establishes stable line-of-sight links, mitigates signal blockage, and exploits near-field advantages through its distinctive architecture. This article aims to present a comprehensive overview of the state of the art in PASS. The fundamental principles of PASS are first discussed, including its hardware architecture, circuit and physical models, and signal models. Several emerging PASS designs, such as segmented PASS (S-PASS), center-fed PASS (C-PASS), and multi-mode PASS (M-PASS), are subsequently introduced, and their design features are discussed. In addition, the properties and promising applications of PASS for wireless sensing are reviewed. On this basis, recent progress in the performance analysis of PASS for both communications and sensing is surveyed, and the performance gains achieved by PASS are highlighted. Existing research contributions in optimization and machine learning are also summarized, with the practical challenges of beamforming and resource allocation being identified in relation to the unique transmission structure and propagation characteristics of PASS. Finally, several variants of PASS are presented, and key implementation challenges that remain open for future study are discussed.

Physics-Aware RIS Codebook Compilation for Near-Field Beam Focusing under Mutual Coupling and Specular Reflections

Jan 19, 2026

Abstract:Next-generation wireless networks are envisioned to achieve reliable, low-latency connectivity within environments characterized by strong multipath and severe channel variability. Programmable wireless environments (PWEs) address this challenge by enabling deterministic control of electromagnetic (EM) propagation through software-defined reconfigurable intelligent surfaces (RISs). However, effectively configuring RISs in real time remains a major bottleneck, particularly under near-field conditions where mutual coupling and specular reflections alter the intended response. To overcome this limitation, this paper introduces MATCH, a physics-based codebook compilation algorithm that explicitly accounts for the EM coupling among RIS unit cells and the reflective interactions with surrounding structures, ensuring that the resulting codebooks remain consistent with the physical characteristics of the environment. Finally, MATCH is evaluated under a full-wave simulation framework incorporating mutual coupling and secondary reflections. The results demonstrate its ability to concentrate scattered energy within the focal region and confirm that physics-consistent, codebook-based optimization constitutes an effective approach for practical and efficient RIS configuration.

A novel RF-enabled Non-Destructive Inspection Method through Machine Learning and Programmable Wireless Environments

Jan 10, 2026

Abstract:Contemporary industrial Non-Destructive Inspection (NDI) methods require sensing capabilities that operate in occluded, hazardous, or access-restricted environments. Yet, current visual inspection based on optical cameras offers limited quality of service in that respect. Novel methods for workpiece inspection that are suitable for smart manufacturing are therefore needed. Programmable Wireless Environments (PWEs) could help in that direction by redefining wireless Radio Frequency (RF) wave propagation as a controllable inspector entity. In this work, we propose a novel approach to Non-Destructive Inspection, leveraging an RF sensing pipeline based on RF wavefront encoding for retrieving workpiece-image entries from a designated database. This approach combines PWE-enabled RF wave manipulation with machine learning (ML) tools trained to produce visual outputs for quality inspection. Specifically, we establish correlation relationships between RF wavefronts and target industrial assets, yielding a dataset that links wavefronts to their corresponding images in a structured manner. Subsequently, a Generative Adversarial Network (GAN) derives visual representations closely matching the database entries. Our results indicate that the proposed method achieves a 99.5% SSIM matching score in visual outputs, paving the way for next-generation quality control workflows in industry.

Pinching Antenna-aided NOMA Systems with Internal Eavesdropping

Dec 25, 2025

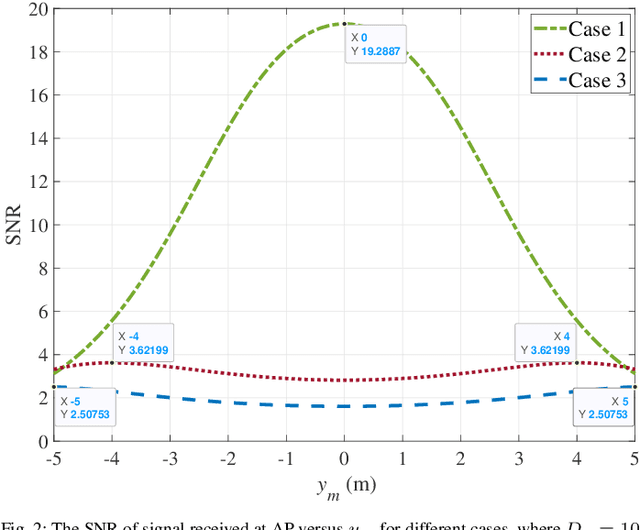

Abstract:As a novel member of the flexible-antenna family, the pinching antenna (PA) is realized by integrating small dielectric particles on a waveguide, offering unique capabilities for constructing line-of-sight (LoS) links and enhancing transceiver channels while reducing path loss and signal blockage. Meanwhile, non-orthogonal multiple access (NOMA) has become a candidate technology for next-generation communications due to its remarkable advantages in spectrum efficiency and user access capability. The integration of PA and NOMA combines PA's channel regulation capability with NOMA's multi-user multiplexing advantage, forming a complementary technical framework to deliver high-performance communication solutions. However, the use of successive interference cancellation (SIC) introduces significant security risks to power-domain NOMA systems when internal eavesdropping is present. To this end, this paper investigates the physical layer security of a PA-aided NOMA system where a nearby user is considered as an internal eavesdropper. We enhance the security of the NOMA system by optimizing the radiated power of the PAs and analyze the secrecy performance by deriving closed-form expressions for the secrecy outage probability (SOP). Furthermore, we extend the characterization of PA flexibility beyond deployment and scale adjustment to include flexible regulation of the PA coupling length. Based on two conventional PA power models, i.e., the equal power model and the proportional power model, we propose a flexible power strategy to achieve secure transmission. The results highlight the potential of the PA-aided NOMA system in mitigating internal eavesdropping risks, and provide an effective strategy for optimizing power allocation and cell range of user activity.

How Many Pinching Antennas Are Enough?

Dec 21, 2025

Abstract:Programmable wireless environments (PWEs) have emerged as a key paradigm for next-generation communication networks, aiming to transform wireless propagation from an uncontrollable phenomenon into a reconfigurable process that can adapt to diverse service requirements. In this framework, pinching-antenna systems (PASs) have recently been proposed as a promising enabling technology, as they allow the radiation location and effective propagation distance to be adjusted by selectively exciting radiating points along a dielectric waveguide. However, most existing studies on PASs rely on the idealized assumption that pinching-antenna (PA) positions can be continuously adjusted along the waveguide, while realistically only a finite set of pinching locations is available. Motivated by this, this paper analyzes the performance of two-state PASs, where the PA positions are fixed and only their activation state can be controlled. By explicitly accounting for the spatial discreteness of the available pinching points, closed-form analytical expressions for the outage probability and the ergodic achievable data rate are derived. In addition, we introduce the pinching discretization efficiency to quantify the performance gap between discrete and continuous pinching configurations, enabling a direct assessment of the number of PAs required to approximate the ideal continuous case. Finally, numerical results validate the analytical framework and show that near-continuous performance can be achieved with a limited number of PAs, offering useful insights for the design and deployment of PASs in PWEs.
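The gap between discrete and continuous pinching described in this abstract can be illustrated with a rough sketch. Everything below is an assumption made for illustration and is not taken from the paper: the waveguide runs along the x-axis at a fixed height, the candidate pinching points are equally spaced, only the nearest point is activated, free-space power gain scales as the inverse squared distance, and in-waveguide loss and phase are ignored. The function name `nearest_pa_gain_ratio` is hypothetical, and the returned ratio is a simple stand-in, not the paper's pinching discretization efficiency.

```python
import numpy as np

def nearest_pa_gain_ratio(n_pas, user_xy, length=10.0, height=3.0):
    """Free-space power gain of the nearest of n_pas fixed points,
    relative to an ideal continuously positioned PA (illustrative only)."""
    x_u, y_u = user_xy
    # Fixed candidate positions, equally spaced along the waveguide (assumption).
    grid = np.linspace(0.0, length, n_pas)
    # Squared distances from each candidate (x, 0, height) to the user (x_u, y_u, 0).
    d2_discrete = (grid - x_u) ** 2 + y_u ** 2 + height ** 2
    # Ideal continuous placement: the waveguide point closest to the user.
    x_star = np.clip(x_u, 0.0, length)
    d2_continuous = (x_star - x_u) ** 2 + y_u ** 2 + height ** 2
    # Gain scales as 1/d^2, so the ratio is d2_continuous / min(d2_discrete).
    return float(d2_continuous / d2_discrete.min())

# Compare a coarse and a fine grid for one example user position.
for n in (2, 5, 50):
    print(n, nearest_pa_gain_ratio(n, (3.7, 1.0)))
```

Under these assumptions the ratio never exceeds 1 and reaches 1 whenever a candidate point sits at the user's projection onto the waveguide.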

Chirp Delay-Doppler Domain Modulation Based Joint Communication and Radar for Autonomous Vehicles

Dec 20, 2025

Abstract:This paper introduces a sensing-centric joint communication and millimeter-wave radar paradigm to facilitate collaboration among intelligent vehicles. We first propose a chirp waveform-based delay-Doppler quadrature amplitude modulation (DD-QAM) that modulates data across delay, Doppler, and amplitude dimensions. Building upon this modulation scheme, we derive its achievable rate to quantify the communication performance. We then introduce an extended Kalman filter-based scheme for four-dimensional (4D) parameter estimation in dynamic environments, enabling the active vehicles to accurately estimate orientation and tangential velocity beyond traditional 4D radar systems. Furthermore, in terms of communication, we propose a dual-compensation-based demodulation and tracking scheme that allows the passive vehicles to effectively demodulate data without compromising their sensing functions. Simulation results underscore the feasibility and superior performance of our proposed methods, marking a significant advancement in the field of autonomous vehicles. Simulation codes are provided to reproduce the results in this paper: https://github.com/LiZhuoRan0/2026-IEEE-TWC-ChirpDelayDopplerModulationISAC.

Constellation Design and Detection under Generalized Hardware Impairments

Nov 12, 2025

Abstract:This paper presents a maximum-likelihood detection framework that jointly mitigates hardware (HW) impairments in both amplitude and phase. By modeling transceiver distortions as residual amplitude and phase noise, we introduce the approximate phase-and-amplitude distortion detector (PAD-D), which operates in the polar domain and effectively mitigates both distortion components through distortion-aware weighting. The proposed detector performs reliable detection under generalized HW impairment conditions, achieving substantial performance gains over the conventional Euclidean detector (EUC-D) and the Gaussian-assumption phase noise detector (GAP-D), which is primarily designed to address phase distortions. In addition, we derive a closed-form high-SNR symbol error probability (SEP) approximation, which offers a generic analytical expression applicable to arbitrary constellations. Simulation results demonstrate that the PAD-D achieves up to an order-of-magnitude reduction in the error floor relative to EUC-D and GAP-D for both high-order quadrature amplitude modulation (QAM) and super amplitude phase-shift keying (SAPSK) constellations, establishing a unified and practical framework for detection under realistic transceiver impairments. Building on this framework, we further develop optimized constellations tailored to PAD-D, where the symbol positions are optimized in the complex plane to minimize SEP. The optimality of these constellations is confirmed through extensive simulations, which also verify the accuracy of the proposed analytical SEP approximation, even for the optimized designs.

Performance Analysis of Wireless-Powered Pinching Antenna Systems

Nov 05, 2025

Abstract:The pinching antenna system (PAS) is a groundbreaking paradigm that enhances wireless communications by flexibly adjusting the position of the pinching antenna (PA) and establishing a strong line-of-sight (LoS) link, thereby reducing the free-space path loss. This paper introduces the concept of wireless-powered PAS and investigates its reliability to explore the advantages of PAs in improving the performance of wireless-powered communication (WPC) systems. In addition, we derive closed-form expressions of the outage probability and ergodic rate for the practical lossy waveguide case and the ideal lossless waveguide case, respectively, and analyze the optimal deployment of waveguides and users to provide valuable insights for guiding their deployment. The results show that an increase in the absorption coefficient and in the dimensions of the user area leads to higher in-waveguide and free-space propagation losses, respectively, which in turn increase the outage probability and reduce the ergodic rate of the wireless-powered PAS. The performance of wireless-powered PAS is severely affected by the absorption coefficient and the waveguide length: with a high absorption coefficient and a long waveguide, its outage probability is even worse than that of a traditional WPC system, whereas its ergodic rate remains better than that of a traditional WPC system under the same conditions. Interestingly, the wireless-powered PAS has an optimal time allocation factor and an optimal distance between the power station (PS) and the access point (AP) that minimize the outage probability or maximize the ergodic rate. Moreover, the system performance with the PS and AP separated at this optimal distance is superior to that with the PS and AP integrated into a hybrid access point.
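The interplay between in-waveguide loss and free-space loss mentioned in this abstract can be sketched numerically. The model below is a simplified assumption for illustration, not the paper's system model: in-waveguide power decays as exp(-alpha * x) with distance x from the feed point, free-space power gain scales as the inverse squared distance to the user, and the harvest-then-transmit time allocation of a wireless-powered system is left out. The function name `best_pa_position` and all default values are hypothetical.

```python
import numpy as np

def best_pa_position(x_user, alpha, length=10.0, height=3.0, n_grid=10001):
    """Grid-search the PA position that maximizes an assumed end-to-end power gain."""
    # Candidate PA positions along the waveguide, measured from the feed point.
    x = np.linspace(0.0, length, n_grid)
    # Assumed in-waveguide power attenuation with absorption coefficient alpha.
    in_waveguide = np.exp(-alpha * x)
    # Assumed free-space power gain, proportional to 1 / distance^2.
    free_space = 1.0 / ((x - x_user) ** 2 + height ** 2)
    gain = in_waveguide * free_space
    return float(x[np.argmax(gain)])

# Lossless waveguide: the best position is directly above the user.
print(best_pa_position(5.0, alpha=0.0))
# Lossy waveguide: the best position shifts back toward the feed point.
print(best_pa_position(5.0, alpha=0.1))
```

In this toy model a larger absorption coefficient pulls the preferred radiation point toward the feed, trading a longer free-space path for a shorter in-waveguide path.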

Adaptive Phase Shift Information Compression for IRS Systems: A Prompt Conditioned Variable Rate Framework

Nov 05, 2025

Abstract:Intelligent reflecting surfaces (IRSs) have become a vital technology for improving the spectrum and energy efficiency of forthcoming wireless networks. Nevertheless, practical implementation is obstructed by the excessive overhead associated with the frequent transmission of phase shift information (PSI) over bandwidth-constrained control lines. Current deep learning-based compression methods mitigate this problem but are constrained by elevated decoder complexity, inadequate flexibility to dynamic channels, and static compression ratios. This research presents a prompt-conditioned PSI compression system that integrates prompt learning inspired by large models into the PSI compression process to address these difficulties. A hybrid prompt technique that integrates soft prompt concatenation with feature-wise linear modulation (FiLM) facilitates adaptive encoding across diverse signal-to-noise ratios (SNRs), fading types, and compression ratios. Furthermore, a variable rate technique incorporates the compression ratio into the prompt embeddings through latent masking, enabling a single model to adeptly balance reconstruction accuracy. Additionally, a lightweight depthwise convolutional gating (DWCG) decoder facilitates precise feature reconstruction with minimal complexity. Comprehensive simulations indicate that the proposed framework significantly reduces NMSE compared to traditional autoencoder baselines, while ensuring robustness across various channel conditions and accommodating variable compression ratios within a single model. These findings underscore the framework's promise as a scalable and efficient solution for real-time IRS control in next-generation wireless networks.

Generalized Pinching-Antenna Systems: A Tutorial on Principles, Design Strategies, and Future Directions

Oct 15, 2025

Abstract:Pinching-antenna systems have emerged as a novel and transformative flexible-antenna architecture for next-generation wireless networks. They offer unprecedented flexibility and spatial reconfigurability by enabling dynamic positioning and activation of radiating elements along a signal-guiding medium (e.g., dielectric waveguides), which is not possible with conventional fixed antenna systems. In this paper, we introduce the concept of generalized pinching antenna systems, which retain the core principle of creating localized radiation points on demand, but can be physically realized in a variety of settings. These include implementations based on dielectric waveguides, leaky coaxial cables, surface-wave guiding structures, and other types of media, employing different feeding methods and activation mechanisms (e.g., mechanical, electronic, or hybrid). Despite differences in their physical realizations, they all share the same inherent ability to form, reposition, or deactivate radiation sites as needed, enabling user-centric and dynamic coverage. We first describe the underlying physical mechanisms of representative generalized pinching-antenna realizations and their associated wireless channel models, highlighting their unique propagation and reconfigurability characteristics compared with conventional antennas. Then, we review several representative pinching-antenna system architectures, ranging from single- to multiple-waveguide configurations, and discuss advanced design strategies tailored to these flexible deployments. Furthermore, we examine their integration with emerging wireless technologies to enable synergistic, user-centric solutions. Finally, we identify key open research challenges and outline future directions, charting a pathway toward the practical deployment of generalized pinching antennas in next-generation wireless networks.
