photo

Papers and Code

Assessing the Geolocation Capabilities, Limitations and Societal Risks of Generative Vision-Language Models

Aug 27, 2025

Geo-localization is the task of identifying the location of an image using visual cues alone. It has beneficial applications, such as improving disaster response, enhancing navigation, and supporting geography education. Vision-Language Models (VLMs) are increasingly demonstrating capabilities as accurate image geo-locators. This brings significant privacy risks, including those related to stalking and surveillance, given the widespread use of AI models and the sharing of photos on social media. The precision of these models is likely to improve in the future. Despite these risks, there is little work on systematically evaluating the geolocation precision of generative VLMs, their limits, and their potential for unintended inferences. To bridge this gap, we conduct a comprehensive assessment of the geolocation capabilities of 25 state-of-the-art VLMs on four benchmark image datasets captured in diverse environments. Our results offer insight into the internal reasoning of VLMs and highlight their strengths, limitations, and potential societal risks. Our findings indicate that current VLMs perform poorly on generic street-level images yet achieve notably high accuracy (61%) on images resembling social media content, raising significant and urgent privacy concerns.
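The abstract reports an accuracy figure without stating how a prediction is scored. A common convention in image geolocation benchmarks, assumed here and not taken from the paper, is to count a prediction as correct when its great-circle distance from the true location falls under a threshold. The sketch below illustrates that convention; the function names and the spherical Earth radius are illustrative choices.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (spherical approximation)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp to 1.0 to guard against floating-point overshoot before asin.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def accuracy_within(preds, truths, threshold_km):
    """Fraction of predicted (lat, lon) pairs within threshold_km of the truth."""
    hits = sum(
        haversine_km(p[0], p[1], t[0], t[1]) <= threshold_km
        for p, t in zip(preds, truths)
    )
    return hits / len(truths)
```

Sweeping `threshold_km` over several scales (for example street, city, and country level) gives one accuracy value per scale, which is how such results are often tabulated.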

Synthetic Image Detection via Spectral Gaps of QC-RBIM Nishimori Bethe-Hessian Operators

Aug 27, 2025

The rapid advance of deep generative models such as GANs and diffusion networks now produces images that are virtually indistinguishable from genuine photographs, undermining media forensics and biometric security. Supervised detectors quickly lose effectiveness on unseen generators or after adversarial post-processing, while existing unsupervised methods that rely on low-level statistical cues remain fragile. We introduce a physics-inspired, model-agnostic detector that treats synthetic-image identification as a community-detection problem on a sparse weighted graph. Image features are first extracted with pretrained CNNs and reduced to 32 dimensions; each feature vector becomes a node of a Multi-Edge Type QC-LDPC graph. Pairwise similarities are transformed into edge couplings calibrated at the Nishimori temperature, producing a Random Bond Ising Model (RBIM) whose Bethe-Hessian spectrum exhibits a characteristic gap when genuine community structure (real images) is present. Synthetic images violate the Nishimori symmetry and therefore lack such gaps. We validate the approach on two binary tasks (cat versus dog and male versus female) using real photos from Flickr-Faces-HQ and CelebA and synthetic counterparts generated by GANs and diffusion models. Without any labeled synthetic data or retraining of the feature extractor, the detector achieves over 94% accuracy. Spectral analysis shows multiple well-separated gaps for real image sets and a collapsed spectrum for generated ones. Our contributions are threefold: a novel LDPC graph construction that embeds deep image features; an analytical link between the Nishimori-temperature RBIM and the Bethe-Hessian spectrum, providing a Bayes-optimal detection criterion; and a practical, unsupervised synthetic-image detector robust to new generative architectures. Future work will extend the framework to video streams and multi-class anomaly detection.
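To make the spectral criterion concrete, the sketch below builds the standard unweighted Bethe-Hessian, H(r) = (r² − 1)I − rA + D, and counts its negative eigenvalues, a quantity commonly used to estimate the number of communities in a sparse graph. This is a simplified stand-in, not the paper's method: the abstract describes a weighted construction with couplings calibrated at the Nishimori temperature on a QC-LDPC graph, none of which is reproduced here. The function names and the default choice of r are assumptions.

```python
import numpy as np


def bethe_hessian(adj, r=None):
    """Unweighted Bethe-Hessian H(r) = (r^2 - 1) I - r A + D."""
    adj = np.asarray(adj, dtype=float)
    degrees = adj.sum(axis=1)
    if r is None:
        # Common heuristic (an assumption here): square root of the mean degree.
        r = np.sqrt(degrees.mean())
    n = adj.shape[0]
    return (r**2 - 1.0) * np.eye(n) - r * adj + np.diag(degrees)


def count_negative_eigenvalues(h, tol=1e-9):
    """Number of eigenvalues below -tol, used as a community-count estimate."""
    eigvals = np.linalg.eigvalsh(h)  # h is symmetric, so eigvalsh applies
    return int((eigvals < -tol).sum())


# Toy graph: two 4-node cliques joined by a single edge.
block = np.ones((4, 4)) - np.eye(4)
adj = np.block([[block, np.zeros((4, 4))], [np.zeros((4, 4)), block]])
adj[3, 4] = adj[4, 3] = 1.0
n_negative = count_negative_eigenvalues(bethe_hessian(adj))
```

A gap between the negative eigenvalues and the bulk of the spectrum is the kind of signal the abstract refers to; how that gap is thresholded in the paper is not stated in the abstract.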

RDDM: Practicing RAW Domain Diffusion Model for Real-world Image Restoration

Aug 26, 2025

We present the RAW domain diffusion model (RDDM), an end-to-end diffusion model that restores photo-realistic images directly from sensor RAW data. While recent sRGB-domain diffusion methods achieve impressive results, they are caught in a dilemma between high fidelity and realistic generation. These models process lossy sRGB inputs and neglect the availability of sensor RAW images in many scenarios, e.g., image and video capture on edge devices, resulting in sub-optimal performance. RDDM bypasses this limitation by directly restoring images in the RAW domain, replacing the conventional two-stage pipeline of image signal processing (ISP) followed by image restoration (IR). However, a simple adaptation of pre-trained diffusion models to the RAW domain runs into out-of-distribution (OOD) issues. To this end, we propose (1) a RAW-domain VAE (RVAE) that learns optimal latent representations and (2) a differentiable Post Tone Processing (PTP) module that enables joint optimization in RAW and sRGB space. To compensate for the lack of suitable datasets, we develop a scalable degradation pipeline that synthesizes RAW LQ-HQ pairs from existing sRGB datasets for large-scale training. Furthermore, we devise a configurable multi-bayer (CMB) LoRA module that handles diverse RAW patterns such as RGGB and BGGR. Extensive experiments demonstrate RDDM's superiority over state-of-the-art sRGB diffusion methods, yielding higher-fidelity results with fewer artifacts.
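The RAW patterns named in the abstract (RGGB, BGGR) describe which color each sensor pixel records within a repeating 2x2 tile. The sketch below shows what that means for the data layout by splitting a single-channel mosaic into four half-resolution color planes. It is an illustration of Bayer layouts only, not the paper's CMB LoRA module, and the function name and returned keys are hypothetical.

```python
import numpy as np

# The four standard Bayer layouts, written as the 2x2 tile in row-major order.
BAYER_PATTERNS = {"RGGB", "BGGR", "GRBG", "GBRG"}


def split_bayer(mosaic, pattern="RGGB"):
    """Split an (H, W) Bayer mosaic into planes keyed "R", "G1", "G2", "B"."""
    if pattern not in BAYER_PATTERNS:
        raise ValueError(f"unsupported Bayer pattern: {pattern!r}")
    mosaic = np.asarray(mosaic)
    offsets = [(0, 0), (0, 1), (1, 0), (1, 1)]  # (row, col) within the tile
    planes, greens_seen = {}, 0
    for color, (dy, dx) in zip(pattern, offsets):
        if color == "G":
            greens_seen += 1
            key = f"G{greens_seen}"  # the two green sites are kept separate
        else:
            key = color
        planes[key] = mosaic[dy::2, dx::2]  # every second pixel from the offset
    return planes
```

The same pixel grid yields different color planes under different patterns, which is why a model trained on one layout cannot be applied blindly to another.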

MoCHA-former: Moiré-Conditioned Hybrid Adaptive Transformer for Video Demoiréing

Aug 21, 2025

Recent advances in portable imaging have made camera-based screen capture ubiquitous. Unfortunately, frequency aliasing between the camera's color filter array (CFA) and the display's sub-pixels induces moiré patterns that severely degrade captured photos and videos. Although various demoiréing models have been proposed to remove such moiré patterns, these approaches still suffer from several limitations: (i) spatially varying artifact strength within a frame, (ii) large-scale and globally spreading structures, (iii) channel-dependent statistics and (iv) rapid temporal fluctuations across frames. We address these issues with the Moiré Conditioned Hybrid Adaptive Transformer (MoCHA-former), which comprises two key components: Decoupled Moiré Adaptive Demoiréing (DMAD) and Spatio-Temporal Adaptive Demoiréing (STAD). DMAD separates moiré and content via a Moiré Decoupling Block (MDB) and a Detail Decoupling Block (DDB), then produces moiré-adaptive features using a Moiré Conditioning Block (MCB) for targeted restoration. STAD introduces a Spatial Fusion Block (SFB) with window attention to capture large-scale structures, and a Feature Channel Attention (FCA) to model channel dependence in RAW frames. To ensure temporal consistency, MoCHA-former performs implicit frame alignment without any explicit alignment module. We analyze moiré characteristics through qualitative and quantitative studies, and evaluate on two video datasets covering RAW and sRGB domains. MoCHA-former consistently surpasses prior methods across PSNR, SSIM, and LPIPS.

Structure-preserving Feature Alignment for Old Photo Colorization

Aug 18, 2025

Deep learning techniques have made significant advancements in reference-based colorization by training on large-scale datasets. However, directly applying these methods to the task of colorizing old photos is challenging due to the lack of ground truth and the notorious domain gap between natural gray images and old photos. To address this issue, we propose a novel CNN-based algorithm called SFAC, i.e., Structure-preserving Feature Alignment Colorizer. SFAC is trained on only two images for old photo colorization, eliminating the reliance on big data and allowing direct processing of the old photo itself to overcome the domain gap problem. Our primary objective is to establish semantic correspondence between the two images, ensuring that semantically related objects have similar colors. We achieve this through a feature distribution alignment loss that remains robust to different metric choices. However, utilizing robust semantic correspondence to transfer color from the reference to the old photo can result in inevitable structure distortions. To mitigate this, we introduce a structure-preserving mechanism that incorporates a perceptual constraint at the feature level and a frozen-updated pyramid at the pixel level. Extensive experiments demonstrate the effectiveness of our method for old photo colorization, as confirmed by qualitative and quantitative metrics.

Communication Efficient Robotic Mixed Reality with Gaussian Splatting Cross-Layer Optimization

Aug 12, 2025

Realizing low-cost communication in robotic mixed reality (RoboMR) systems is challenging because high-resolution images must be uploaded over wireless channels. This paper proposes Gaussian splatting (GS) RoboMR (GSMR), which enables the simulator to opportunistically render a photo-realistic view from the robot's pose by calling "memory" from a GS model, thus reducing the need for excessive image uploads. However, the GS model may deviate from the actual environment. To this end, a GS cross-layer optimization (GSCLO) framework is further proposed, which jointly optimizes content switching (i.e., deciding whether to upload an image) and power allocation (i.e., adjusting to content profiles) across different frames by minimizing a newly derived GSMR loss function. The GSCLO problem is addressed by an accelerated penalty optimization (APO) algorithm that reduces computational complexity by over 10x compared to traditional branch-and-bound and search algorithms. Moreover, variants of GSCLO are presented to achieve robust, low-power, and multi-robot GSMR. Extensive experiments demonstrate that the proposed GSMR paradigm and GSCLO method achieve significant improvements over existing benchmarks on both wheeled and legged robots in terms of diverse metrics in various scenarios. For the first time, it is found that RoboMR can be achieved with ultra-low communication costs, and that a mixture of data is useful for enhancing GS performance in dynamic scenarios.

TechOps: Technical Documentation Templates for the AI Act

Aug 12, 2025

Operationalizing the EU AI Act requires clear technical documentation to ensure AI systems are transparent, traceable, and accountable. Existing documentation templates for AI systems do not fully cover the entire AI lifecycle while meeting the technical documentation requirements of the AI Act. This paper addresses those shortcomings by introducing open-source templates and examples for documenting data, models, and applications to provide sufficient documentation for certifying compliance with the AI Act. These templates track the system status over the entire AI lifecycle, ensuring traceability, reproducibility, and compliance with the AI Act. They also promote discoverability and collaboration, reduce risks, and align with best practices in AI documentation and governance. The templates are evaluated and refined based on user feedback to enable insights into their usability and implementability. We then validate the approach on real-world scenarios, providing examples that further guide their implementation: the data template is followed to document a skin tones dataset created to support fairness evaluations of downstream computer vision models and human-centric applications; the model template is followed to document a neural network for segmenting human silhouettes in photos. The application template is tested on a system deployed for construction site safety using real-time video analytics and sensor data. Our results show that TechOps can serve as a practical tool to enable oversight for regulatory compliance and responsible AI development.

NeeCo: Image Synthesis of Novel Instrument States Based on Dynamic and Deformable 3D Gaussian Reconstruction

Aug 11, 2025

Computer vision-based technologies significantly enhance surgical automation by advancing tool tracking, detection, and localization. However, current data-driven approaches are data-voracious, requiring large, high-quality labeled image datasets, which limits their application in surgical data science. Our work introduces a novel dynamic Gaussian Splatting technique to address the data scarcity in surgical image datasets. We propose a dynamic Gaussian model to represent dynamic surgical scenes, enabling the rendering of surgical instruments from unseen viewpoints and deformations with real tissue backgrounds. We utilize a dynamic training adjustment strategy to address challenges posed by poorly calibrated camera poses from real-world scenarios. Additionally, we propose a method based on dynamic Gaussians for automatically generating annotations for our synthetic data. For evaluation, we constructed a new dataset featuring seven scenes with 14,000 frames of tool and camera motion and tool jaw articulation, with a background of an ex-vivo porcine model. Using this dataset, we synthetically replicate the scene deformation from the ground truth data, allowing direct comparisons of synthetic image quality. Experimental results illustrate that our method generates photo-realistic labeled image datasets with the highest Peak Signal-to-Noise Ratio (29.87). We further evaluate the performance of medical-specific neural networks trained on real and synthetic images using an unseen real-world image dataset. Our results show that models trained on synthetic images generated by the proposed method outperform those trained with state-of-the-art standard data augmentation by 10%, leading to an overall improvement in model performance of nearly 15%.
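For reference, the Peak Signal-to-Noise Ratio quoted above is conventionally computed from the mean squared error between a reference image and a test image as PSNR = 10 · log10(MAX² / MSE), in decibels. The sketch below uses that standard definition; the peak value of 255 assumes 8-bit images, which the abstract does not specify, and the function name is illustrative.

```python
import numpy as np


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images."""
    reference = np.asarray(reference, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    mse = np.mean((reference - test) ** 2)  # mean squared error over all pixels
    if mse == 0:
        return float("inf")  # identical images have unbounded PSNR
    return float(10.0 * np.log10(max_value**2 / mse))
```

Higher values mean the synthetic image is closer to the ground truth, so comparing methods by PSNR only makes sense when they are scored against the same reference frames.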

Undress to Redress: A Training-Free Framework for Virtual Try-On

Aug 11, 2025

Virtual try-on (VTON) is a crucial task for enhancing user experience in online shopping by generating realistic garment previews on personal photos. Although existing methods have achieved impressive results, they struggle with long-sleeve-to-short-sleeve conversions (a common and practical scenario), often producing unrealistic outputs when exposed skin is underrepresented in the original image. We argue that this challenge arises from the "majority" completion rule in current VTON models, which leads to inaccurate skin restoration in such cases. To address this, we propose UR-VTON (Undress-Redress Virtual Try-ON), a novel, training-free framework that can be seamlessly integrated with any existing VTON method. UR-VTON introduces an "undress-to-redress" mechanism: it first reveals the user's torso by virtually "undressing," then applies the target short-sleeve garment, effectively decomposing the conversion into two more manageable steps. Additionally, we incorporate Dynamic Classifier-Free Guidance scheduling to balance diversity and image quality during DDPM sampling, and employ a Structural Refiner to enhance detail fidelity using high-frequency cues. Finally, we present LS-TON, a new benchmark for long-sleeve-to-short-sleeve try-on. Extensive experiments demonstrate that UR-VTON outperforms state-of-the-art methods in both detail preservation and image quality. Code will be released upon acceptance.

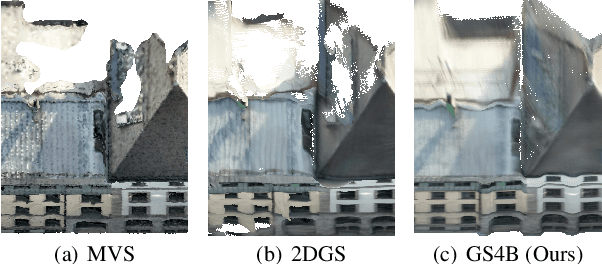

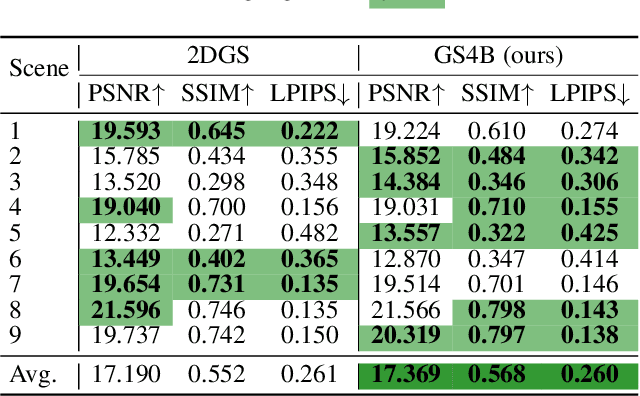

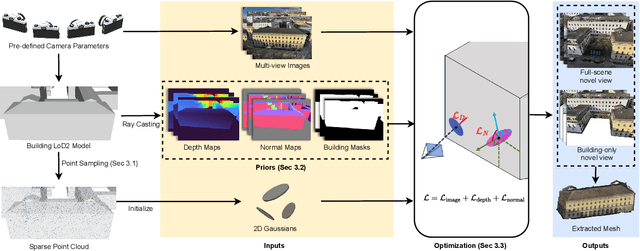

GS4Buildings: Prior-Guided Gaussian Splatting for 3D Building Reconstruction

Aug 10, 2025

Recent advances in Gaussian Splatting (GS) have demonstrated its effectiveness in photo-realistic rendering and 3D reconstruction. Among these, 2D Gaussian Splatting (2DGS) is particularly suitable for surface reconstruction due to its flattened Gaussian representation and integrated normal regularization. However, its performance often degrades in large-scale and complex urban scenes with frequent occlusions, leading to incomplete building reconstructions. We propose GS4Buildings, a novel prior-guided Gaussian Splatting method leveraging the ubiquity of semantic 3D building models for robust and scalable building surface reconstruction. Instead of relying on traditional Structure-from-Motion (SfM) pipelines, GS4Buildings initializes Gaussians directly from low-level Level of Detail 2 (LoD2) semantic 3D building models. Moreover, we generate prior depth and normal maps from the planar building geometry and incorporate them into the optimization process, providing strong geometric guidance for surface consistency and structural accuracy. We also introduce an optional building-focused mode that limits reconstruction to building regions, achieving a 71.8% reduction in Gaussian primitives and enabling a more efficient and compact representation. Experiments on urban datasets demonstrate that GS4Buildings improves reconstruction completeness by 20.5% and geometric accuracy by 32.8%. These results highlight the potential of semantic building model integration to advance GS-based reconstruction toward real-world urban applications such as smart cities and digital twins. Our project is available at https://github.com/zqlin0521/GS4Buildings.
