Xing Zhou

MemTrust: A Zero-Trust Architecture for Unified AI Memory System

Jan 11, 2026

Abstract:AI memory systems are evolving toward unified context layers that enable efficient cross-agent collaboration and multi-tool workflows, facilitating better accumulation of personal data and learning of user preferences. However, centralization creates a trust crisis where users must entrust cloud providers with sensitive digital memory data. We identify a core tension between personalization demands and data sovereignty: centralized memory systems enable efficient cross-agent collaboration but expose users' sensitive data to cloud provider risks, while private deployments provide security but limit collaboration. To resolve this tension, we aim to achieve local-equivalent security while enabling superior maintenance efficiency and collaborative capabilities. We propose a five-layer architecture abstracting common functional components of AI memory systems: Storage, Extraction, Learning, Retrieval, and Governance. By applying TEE protection to each layer, we establish a trustworthy framework. Based on this, we design MemTrust, a hardware-backed zero-trust architecture that provides cryptographic guarantees across all layers. Our contributions include the five-layer abstraction, "Context from MemTrust" protocol for cross-application sharing, side-channel hardened retrieval with obfuscated access patterns, and comprehensive security analysis. The architecture enables third-party developers to port existing systems with acceptable development costs, achieving system-wide trustworthiness. We believe that AI memory plays a crucial role in enhancing the efficiency and collaboration of agents and AI tools. AI memory will become the foundational infrastructure for AI agents, and MemTrust serves as a universal trusted framework for AI memory systems, with the goal of becoming the infrastructure of memory infrastructure.

Decoupling Constraint from Two Direction in Evolutionary Constrained Multi-objective Optimization

Dec 30, 2025

Abstract:Real-world Constrained Multi-objective Optimization Problems (CMOPs) often contain multiple constraints, and understanding and utilizing the coupling between these constraints is crucial for solving CMOPs. However, existing Constrained Multi-objective Evolutionary Algorithms (CMOEAs) typically ignore these couplings and treat all constraints as a single aggregate, which lacks interpretability regarding the specific geometric roles of constraints. To address this limitation, we first analyze how different constraints interact and show that the final Constrained Pareto Front (CPF) depends not only on the Pareto fronts of individual constraints but also on the boundaries of infeasible regions. This insight implies that CMOPs with different coupling types must be solved from different search directions. Accordingly, we propose a novel algorithm named Decoupling Constraint from Two Directions (DCF2D). This method periodically detects constraint couplings and spawns an auxiliary population for each relevant constraint with an appropriate search direction. Extensive experiments on seven challenging CMOP benchmark suites and on a collection of real-world CMOPs demonstrate that DCF2D outperforms five state-of-the-art CMOEAs, including existing decoupling-based methods.

No Cache Left Idle: Accelerating diffusion model via Extreme-slimming Caching

Dec 14, 2025

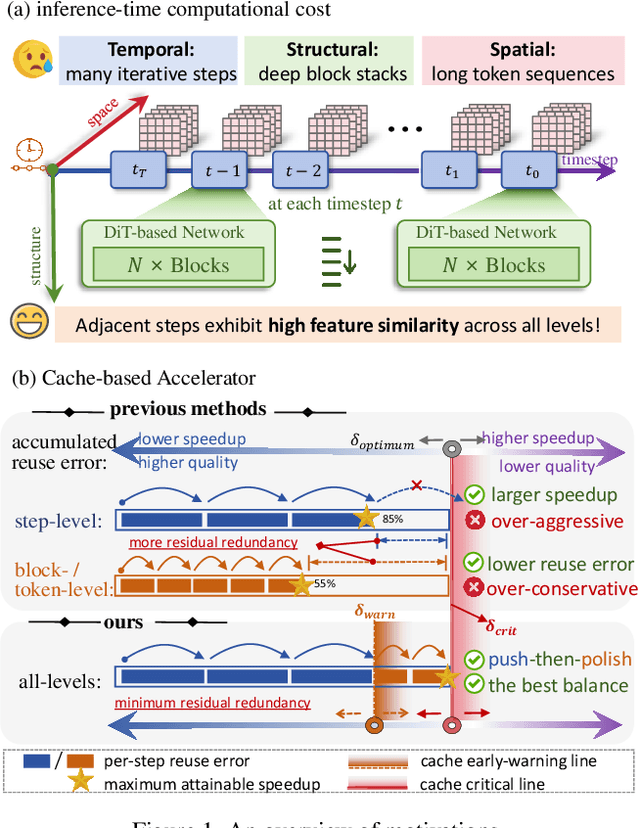

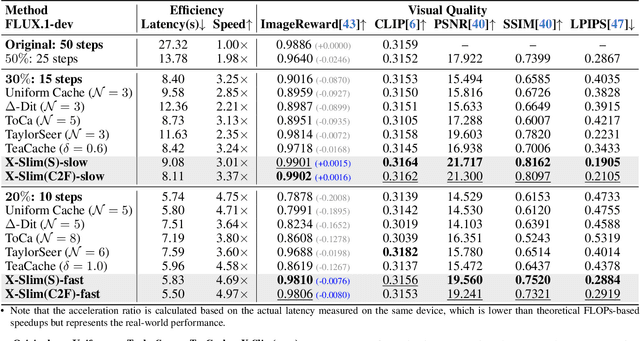

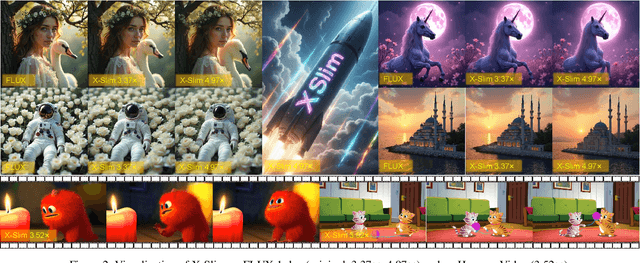

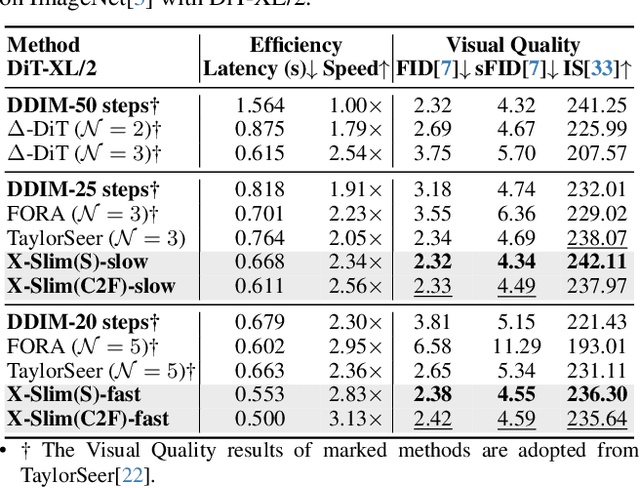

Abstract:Diffusion models achieve remarkable generative quality, but computational overhead scales with step count, model depth, and sequence length. Feature caching is effective since adjacent timesteps yield highly similar features. However, an inherent trade-off remains: aggressive timestep reuse offers large speedups but can easily cross the critical line, hurting fidelity, while block- or token-level reuse is safer but yields limited computational savings. We present X-Slim (eXtreme-Slimming Caching), a training-free, cache-based accelerator that, to our knowledge, is the first unified framework to exploit cacheable redundancy across timesteps, structure (blocks), and space (tokens). Rather than simply mixing levels, X-Slim introduces a dual-threshold controller that turns caching into a push-then-polish process: it first pushes reuse at the timestep level up to an early-warning line, then switches to lightweight block- and token-level refresh to polish the remaining redundancy, and triggers full inference once the critical line is crossed to reset accumulated error. At each level, context-aware indicators decide when and where to cache. Across diverse tasks, X-Slim advances the speed-quality frontier. On FLUX.1-dev and HunyuanVideo, it reduces latency by up to 4.97x and 3.52x with minimal perceptual loss. On DiT-XL/2, it reaches 3.13x acceleration and improves FID by 2.42 over prior methods.
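
The push-then-polish control flow described in the abstract above can be illustrated with a minimal sketch. This is not the X-Slim implementation: the class name, the scalar `drift` input, the additive error accumulation, and the threshold values are all hypothetical, chosen only to show how two thresholds can partition steps into timestep reuse, lightweight refresh, and full inference.

```python
from dataclasses import dataclass


@dataclass
class DualThresholdController:
    """Toy controller; all names and the error model are assumptions."""
    warn: float          # hypothetical early-warning line
    critical: float      # hypothetical critical line
    error: float = 0.0   # accumulated error estimate since last full pass

    def step(self, drift: float) -> str:
        """Add this step's estimated drift and choose a caching action."""
        self.error += drift
        if self.error >= self.critical:
            # Critical line crossed: run full inference and reset the error.
            self.error = 0.0
            return "full"
        if self.error >= self.warn:
            # Past the early-warning line: stop pushing timestep reuse and
            # switch to lightweight block/token-level refresh.
            return "refresh"
        # Below the early-warning line: reuse the cached timestep output.
        return "reuse"


def schedule(drifts, warn=0.5, critical=1.0):
    """Return the action chosen at each step for a sequence of drifts."""
    ctrl = DualThresholdController(warn=warn, critical=critical)
    return [ctrl.step(d) for d in drifts]


if __name__ == "__main__":
    print(schedule([0.25] * 5))
```

In this sketch a refresh step does not reduce the accumulated error; a real controller would presumably let block- and token-level refresh slow error growth, and would derive `drift` from the context-aware indicators the abstract mentions.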

UPETrack: Unidirectional Position Estimation for Tracking Occluded Deformable Linear Objects

Dec 10, 2025

Abstract:Real-time state tracking of Deformable Linear Objects (DLOs) is critical for enabling robotic manipulation of DLOs in industrial assembly, medical procedures, and daily-life applications. However, the high-dimensional configuration space, nonlinear dynamics, and frequent partial occlusions present fundamental barriers to robust real-time DLO tracking. To address these limitations, this study introduces UPETrack, a geometry-driven framework based on Unidirectional Position Estimation (UPE), which facilitates tracking without the requirement for physical modeling, virtual simulation, or visual markers. The framework operates in two phases: (1) visible segment tracking based on a Gaussian Mixture Model (GMM) fitted via the Expectation Maximization (EM) algorithm, and (2) occluded region prediction using the proposed UPE algorithm. UPE leverages the geometric continuity inherent in DLO shapes and their temporal evolution patterns to derive a closed-form positional estimator through three principal mechanisms: (i) a local linear combination displacement term, (ii) a proximal linear constraint term, and (iii) a historical curvature term. This analytical formulation allows efficient and stable estimation of occluded nodes through explicit linear combinations of geometric components, eliminating the need for additional iterative optimization. Experimental results demonstrate that UPETrack surpasses two state-of-the-art tracking algorithms, TrackDLO and CDCPD2, in both positioning accuracy and computational efficiency.

Omne-R1: Learning to Reason with Memory for Multi-hop Question Answering

Aug 24, 2025

Abstract:This paper introduces Omne-R1, a novel approach designed to enhance multi-hop question answering capabilities on schema-free knowledge graphs by integrating advanced reasoning models. Our method employs a multi-stage training workflow, including two reinforcement learning phases and one supervised fine-tuning phase. We address the challenge of limited suitable knowledge graphs and QA data by constructing domain-independent knowledge graphs and auto-generating QA pairs. Experimental results show significant improvements in answering multi-hop questions, with notable performance gains on more complex 3+ hop questions. Our proposed training framework demonstrates strong generalization abilities across diverse knowledge domains.

Alita: Generalist Agent Enabling Scalable Agentic Reasoning with Minimal Predefinition and Maximal Self-Evolution

May 26, 2025

Abstract:Recent advances in large language models (LLMs) have enabled agents to autonomously perform complex, open-ended tasks. However, many existing frameworks depend heavily on manually predefined tools and workflows, which hinder their adaptability, scalability, and generalization across domains. In this work, we introduce Alita, a generalist agent designed with the principle of "Simplicity is the ultimate sophistication," enabling scalable agentic reasoning through minimal predefinition and maximal self-evolution. For minimal predefinition, Alita is equipped with only one component for direct problem-solving, making it much simpler and neater than previous approaches that relied heavily on hand-crafted, elaborate tools and workflows. This clean design enhances its potential to generalize to challenging questions, without being limited by tools. For maximal self-evolution, we enable the creativity of Alita by providing a suite of general-purpose components to autonomously construct, refine, and reuse external capabilities by generating task-related model context protocols (MCPs) from open source, which contributes to scalable agentic reasoning. Notably, Alita achieves 75.15% pass@1 and 87.27% pass@3 accuracy, which is top-ranking among general-purpose agents, on the GAIA benchmark validation dataset, and 74.00% and 52.00% pass@1, respectively, on Mathvista and PathVQA, outperforming many agent systems with far greater complexity. More details will be updated at https://github.com/CharlesQ9/Alita.

AvatarShield: Visual Reinforcement Learning for Human-Centric Video Forgery Detection

May 21, 2025

Abstract:The rapid advancement of Artificial Intelligence Generated Content (AIGC) technologies, particularly in video generation, has led to unprecedented creative capabilities but also increased threats to information integrity, identity security, and public trust. Existing detection methods, while effective in general scenarios, lack robust solutions for human-centric videos, which pose greater risks due to their realism and potential for legal and ethical misuse. Moreover, current detection approaches often suffer from poor generalization, limited scalability, and reliance on labor-intensive supervised fine-tuning. To address these challenges, we propose AvatarShield, the first interpretable MLLM-based framework for detecting human-centric fake videos, enhanced via Group Relative Policy Optimization (GRPO). Through our carefully designed accuracy detection reward and temporal compensation reward, it effectively avoids the use of high-cost text annotation data, enabling precise temporal modeling and forgery detection. Meanwhile, we design a dual-encoder architecture, combining high-level semantic reasoning and low-level artifact amplification to guide MLLMs in effective forgery detection. We further collect FakeHumanVid, a large-scale human-centric video benchmark that includes synthesis methods guided by pose, audio, and text inputs, enabling rigorous evaluation of detection methods in real-world scenes. Extensive experiments show that AvatarShield significantly outperforms existing approaches in both in-domain and cross-domain detection, setting a new standard for human-centric video forensics.

Open-Sora Plan: Open-Source Large Video Generation Model

Nov 28, 2024

Abstract:We introduce Open-Sora Plan, an open-source project that aims to contribute a large generation model for generating desired high-resolution videos with long durations based on various user inputs. Our project comprises multiple components for the entire video generation process, including a Wavelet-Flow Variational Autoencoder, a Joint Image-Video Skiparse Denoiser, and various condition controllers. Moreover, many assistant strategies for efficient training and inference are designed, and a multi-dimensional data curation pipeline is proposed for obtaining desired high-quality data. Benefiting from these efficient designs, our Open-Sora Plan achieves impressive video generation results in both qualitative and quantitative evaluations. We hope our careful design and practical experience can inspire the video generation research community. All our code and model weights are publicly available at https://github.com/PKU-YuanGroup/Open-Sora-Plan.

OD-VAE: An Omni-dimensional Video Compressor for Improving Latent Video Diffusion Model

Sep 02, 2024

Abstract:Variational Autoencoder (VAE), compressing videos into latent representations, is a crucial preceding component of Latent Video Diffusion Models (LVDMs). With the same reconstruction quality, the more sufficient the VAE's compression for videos is, the more efficient the LVDMs are. However, most LVDMs utilize a 2D image VAE, which compresses videos only in the spatial dimension and often ignores the temporal dimension. How to conduct temporal compression for videos in a VAE to obtain more concise latent representations while ensuring accurate reconstruction is seldom explored. To fill this gap, we propose an omni-dimension compression VAE, named OD-VAE, which can temporally and spatially compress videos. Although OD-VAE's more sufficient compression brings a great challenge to video reconstruction, it can still achieve high reconstruction accuracy by our fine design. To obtain a better trade-off between video reconstruction quality and compression speed, four variants of OD-VAE are introduced and analyzed. In addition, a novel tail initialization is designed to train OD-VAE more efficiently, and a novel inference strategy is proposed to enable OD-VAE to handle videos of arbitrary length with limited GPU memory. Comprehensive experiments on video reconstruction and LVDM-based video generation demonstrate the effectiveness and efficiency of our proposed methods.

ADSNet: Cross-Domain LTV Prediction with an Adaptive Siamese Network in Advertising

Jun 15, 2024

Abstract:Advertising platforms have evolved in estimating Lifetime Value (LTV) to better align with advertisers' true performance metric. However, the sparsity of real-world LTV data presents a significant challenge to LTV prediction models (i.e., pLTV), severely limiting their capabilities. Therefore, we propose to utilize external data, in addition to the internal data of the advertising platform, to expand the size of purchase samples and enhance the LTV prediction model of the advertising platform. To tackle the issue of data distribution shift between internal and external platforms, we introduce an Adaptive Difference Siamese Network (ADSNet), which employs cross-domain transfer learning to prevent negative transfer. Specifically, ADSNet is designed to learn information that is beneficial to the target domain. We introduce a gain evaluation strategy to calculate information gain, aiding the model in learning helpful information for the target domain and providing the ability to reject noisy samples, thus avoiding negative transfer. We also design a Domain Adaptation Module as a bridge to connect different domains, reduce the distribution distance between them, and enhance the consistency of representation space distribution. We conduct extensive offline experiments and online A/B tests on a real advertising platform. Our proposed ADSNet method outperforms other methods, improving GINI by 2%. The ablation study highlights the importance of the gain evaluation strategy in negative gain sample rejection and improving model performance. Additionally, ADSNet significantly improves long-tail prediction. The online A/B tests confirm ADSNet's efficacy, increasing online LTV by 3.47% and GMV by 3.89%.
