Tao Wang

A No-Reference Deep Learning Quality Assessment Method for Super-resolution Images Based on Frequency Maps

Jun 09, 2022

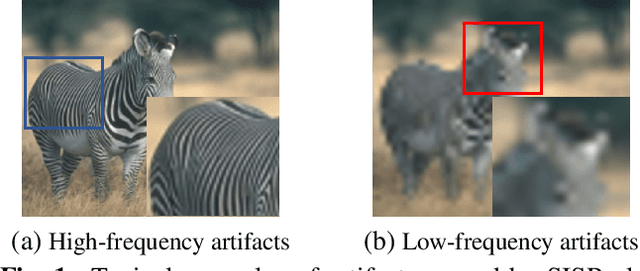

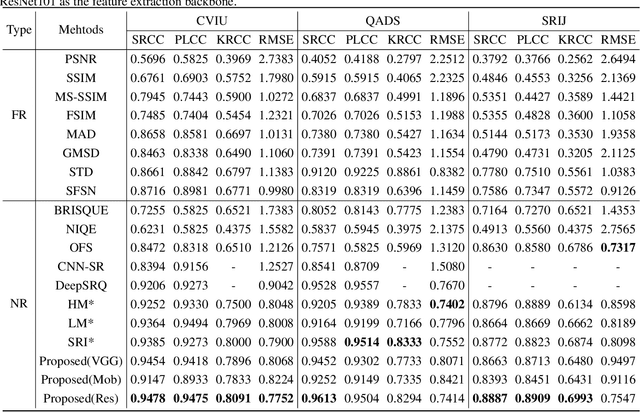

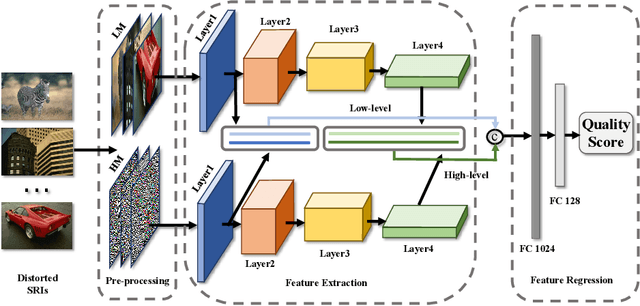

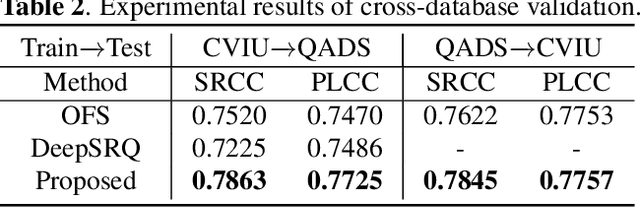

Abstract: To support application scenarios where high-resolution (HR) images are urgently needed, various single image super-resolution (SISR) algorithms have been developed. However, SISR is an ill-posed inverse problem that may introduce artifacts such as texture shift and blur into the reconstructed images, so it is necessary to evaluate the quality of super-resolution images (SRIs). Most existing image quality assessment (IQA) methods were developed for synthetically distorted images and may not work for SRIs, whose distortions are more diverse and complicated. Therefore, in this paper, we propose a no-reference deep-learning image quality assessment method based on frequency maps, because the artifacts caused by SISR algorithms are quite sensitive to frequency information. Specifically, we first obtain the high-frequency map (HM) and low-frequency map (LM) of an SRI using the Sobel operator and piecewise smooth image approximation. Then, a two-stream network is employed to extract the quality-aware features of both frequency maps. Finally, the features are regressed into a single quality value using fully connected layers. The experimental results show that our method outperforms all compared IQA models on the three selected super-resolution quality assessment (SRQA) databases.
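
As a rough illustration of the high-frequency map step, the sketch below applies the standard 3x3 Sobel kernels to a grayscale image and returns the gradient magnitude. It is a minimal sketch, not the paper's implementation: the function name `sobel_magnitude`, the list-of-rows image format, and the choice to leave border pixels at zero are all assumptions made for illustration.

```python
import math

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(image):
    """Return the gradient magnitude of a 2D grayscale image (list of rows).

    Border pixels are left at 0.0 (a simplification for this sketch).
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            out[y][x] = math.hypot(gx, gy)
    return out


# Toy image: dark left half, bright right half (a vertical edge).
toy = [[0, 0, 1, 1] for _ in range(4)]
high_freq = sobel_magnitude(toy)
```

Interior pixels next to the edge receive a large response, while a constant image yields an all-zero map, which is why such a map isolates edge and texture content.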

Uncertainty-based Network for Few-shot Image Classification

May 17, 2022

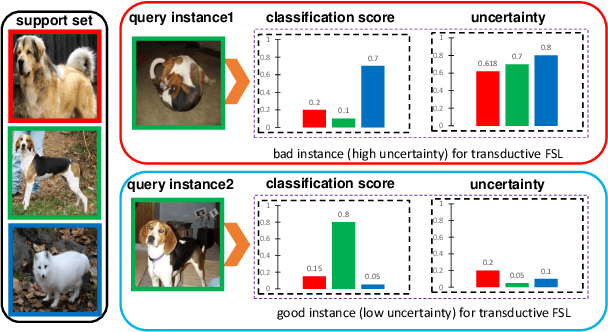

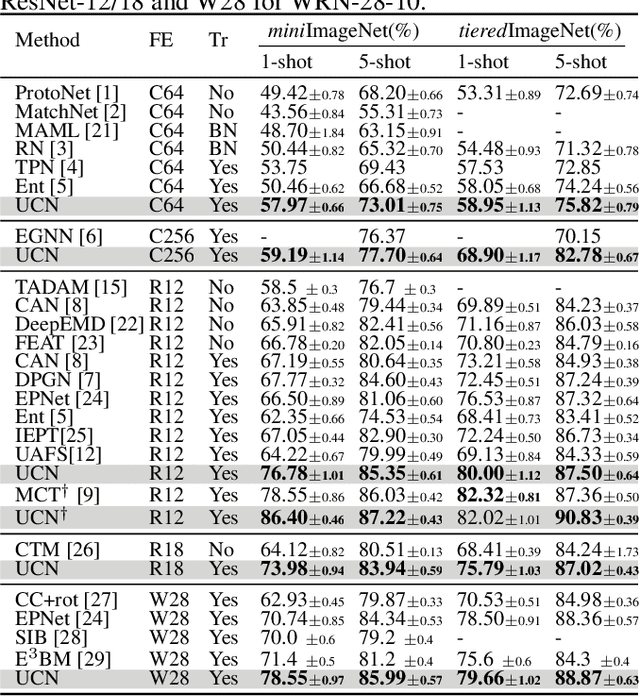

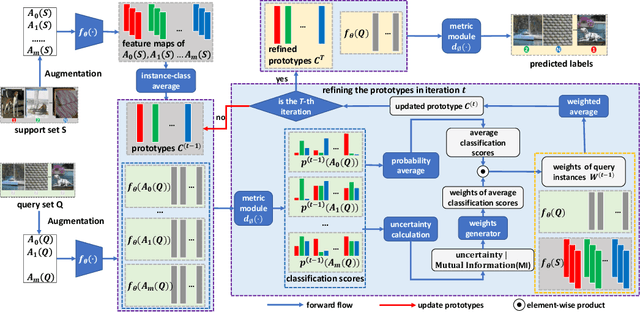

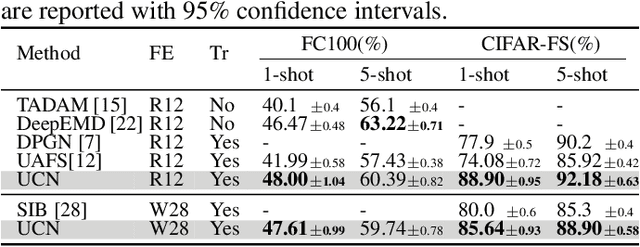

Abstract: Transductive inference is an effective technique in few-shot learning, where query sets update prototypes to improve themselves. However, these methods optimize the model by considering only the classification scores of the query instances as confidence while ignoring the uncertainty of these classification scores. In this paper, we propose a novel method called Uncertainty-Based Network, which models the uncertainty of classification results with the help of mutual information. Specifically, we first augment and classify the query instance and calculate the mutual information of these classification scores. Then, mutual information is used as uncertainty to assign weights to classification scores, and the iterative update strategy based on classification scores and uncertainties assigns the optimal weights to query instances in prototype optimization. Extensive results on four benchmarks show that Uncertainty-Based Network achieves classification accuracy comparable to state-of-the-art methods.
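
One common way to turn the predictions for several augmented views into a mutual-information score is to subtract the mean per-view entropy from the entropy of the mean prediction. The sketch below uses that estimator as an assumption; the abstract does not give the exact formula, and the names `entropy` and `mutual_information` are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def mutual_information(predictions):
    """Entropy of the mean prediction minus the mean per-view entropy.

    `predictions` holds one class-probability vector per augmented view.
    """
    num_views = len(predictions)
    num_classes = len(predictions[0])
    mean_pred = [
        sum(view[c] for view in predictions) / num_views
        for c in range(num_classes)
    ]
    mean_entropy = sum(entropy(view) for view in predictions) / num_views
    return entropy(mean_pred) - mean_entropy


# Views that agree give (near) zero; views that disagree give a larger value.
consistent = [[0.9, 0.1], [0.9, 0.1], [0.9, 0.1]]
conflicting = [[0.9, 0.1], [0.1, 0.9]]
low_uncertainty = mutual_information(consistent)
high_uncertainty = mutual_information(conflicting)
```

Under this estimator, a query instance whose augmented views disagree would receive a higher uncertainty and therefore, presumably, a lower weight when prototypes are updated.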

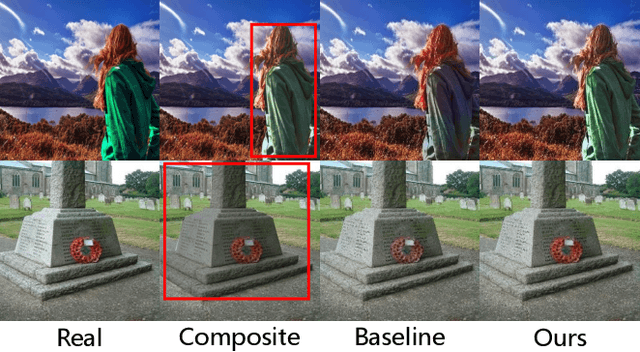

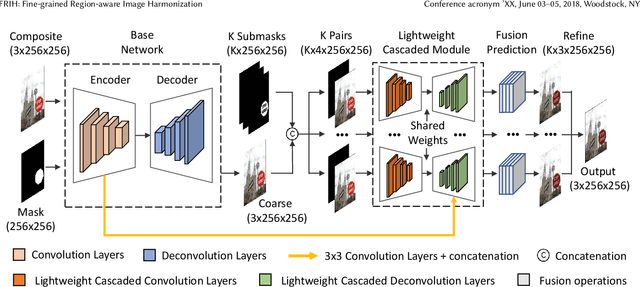

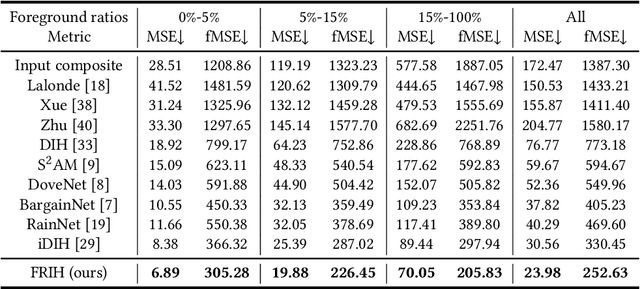

FRIH: Fine-grained Region-aware Image Harmonization

May 13, 2022

Abstract: Image harmonization aims to generate a more realistic appearance of foreground and background for a composite image. Existing methods perform the same harmonization process for the whole foreground. However, the implanted foreground always contains different appearance patterns. All existing solutions ignore the differences between color blocks and lose some specific details. Therefore, we propose a novel global-local two-stage framework for Fine-grained Region-aware Image Harmonization (FRIH), which is trained end-to-end. In the first stage, the whole input foreground mask is used to make a global coarse-grained harmonization. In the second stage, we adaptively cluster the input foreground mask into several submasks by the corresponding pixel RGB values in the composite image. Each submask is concatenated with the coarsely adjusted image and fed into a lightweight cascaded module, which adjusts the global harmonization result according to the region-aware local features. Moreover, we design a fusion prediction module that fuses features from all the cascaded decoder layers to generate the final result, making comprehensive use of the different degrees of harmonization. Without bells and whistles, our FRIH algorithm achieves the best performance on the iHarmony4 dataset (PSNR of 38.19 dB) with a lightweight model. Our model has only 11.98 M parameters, far fewer than existing methods.
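
The second-stage idea of splitting a foreground mask into submasks by pixel color can be sketched with plain k-means on RGB values. This is only an illustration under stated assumptions: the abstract says the clustering is adaptive but does not name the algorithm, so k-means with a fixed `k`, the function name `cluster_foreground`, and the flat pixel-list format are all choices made here.

```python
def cluster_foreground(pixels, mask, k, iterations=10):
    """Split a binary foreground mask into k submasks by k-means on RGB.

    pixels: list of (r, g, b) tuples; mask: list of 0/1 flags, same length.
    Returns k lists of 0/1 flags (the submasks).
    """
    fg = [i for i, m in enumerate(mask) if m]
    # Deterministic initialisation: evenly spaced foreground pixels.
    centers = [pixels[fg[j * len(fg) // k]] for j in range(k)]
    assign = {}
    for _ in range(iterations):
        # Assignment step: nearest center in squared RGB distance.
        for i in fg:
            assign[i] = min(
                range(k),
                key=lambda c: sum(
                    (a - b) ** 2 for a, b in zip(pixels[i], centers[c])
                ),
            )
        # Update step: move each center to the mean of its members.
        for c in range(k):
            members = [pixels[i] for i in fg if assign[i] == c]
            if members:
                centers[c] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return [
        [1 if assign.get(i) == c else 0 for i in range(len(mask))]
        for c in range(k)
    ]


# Two reddish and two bluish foreground pixels, plus one background pixel.
pixels = [(250, 0, 0), (255, 5, 0), (0, 0, 250), (0, 5, 255), (9, 9, 9)]
mask = [1, 1, 1, 1, 0]
submasks = cluster_foreground(pixels, mask, k=2)
```

Each submask covers one color region of the foreground, and background pixels belong to none of them.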

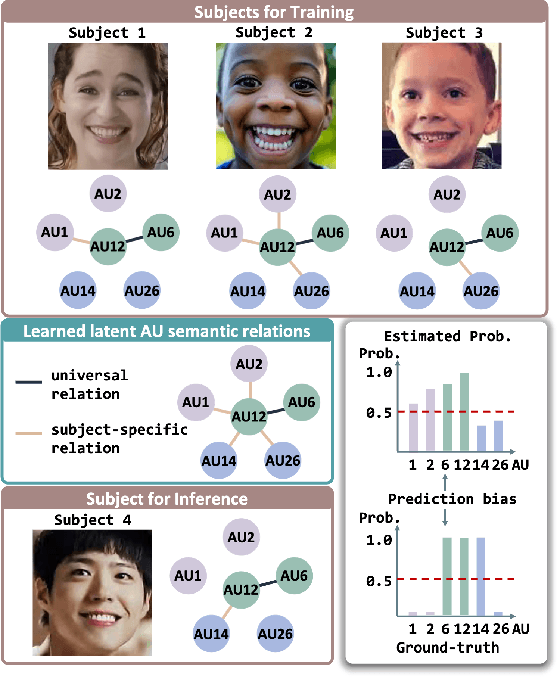

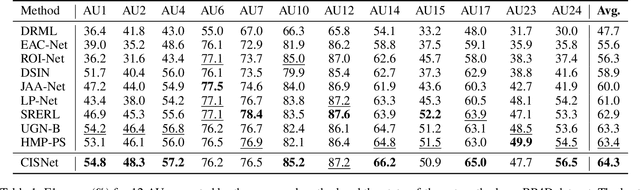

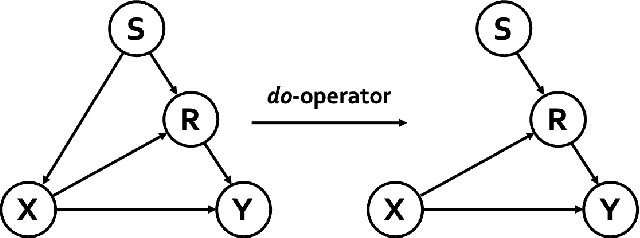

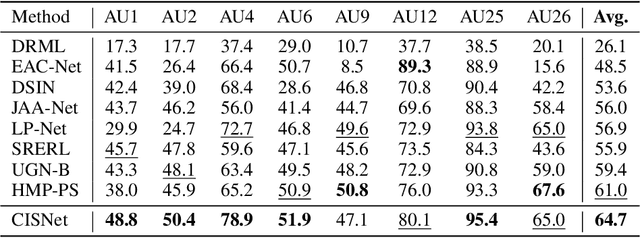

Causal Intervention for Subject-Deconfounded Facial Action Unit Recognition

Apr 17, 2022

Abstract: Subject-invariant facial action unit (AU) recognition remains challenging because the data distribution varies among subjects. In this paper, we propose a causal inference framework for subject-invariant facial action unit recognition. To illustrate the causal effect existing in the AU recognition task, we formulate the causalities among facial images, subjects, latent AU semantic relations, and estimated AU occurrence probabilities via a structural causal model. By constructing such a causal diagram, we clarify the causal effect among variables and propose a plug-in causal intervention module, CIS, to deconfound the confounder *Subject* in the causal diagram. Extensive experiments conducted on two commonly used AU benchmark datasets, BP4D and DISFA, show the effectiveness of our CIS, and the model with CIS inserted, CISNet, has achieved state-of-the-art performance.
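
For readers unfamiliar with deconfounding, the textbook backdoor adjustment is shown below as a reference point. It is an assumption that this is the relevant form here: the abstract does not state how CIS realizes the intervention. The symbols are chosen for illustration, with $X$ the facial image, $Y$ the AU occurrence, and $S$ the subject.

```latex
P\bigl(Y \mid \mathrm{do}(X)\bigr) \;=\; \sum_{s} P\bigl(Y \mid X,\, S = s\bigr)\, P(S = s)
```

In contrast to the observational $P(Y \mid X)$, which weights subjects by $P(S = s \mid X)$, the adjusted form weights every subject by its prior $P(S = s)$, which removes the influence of the subject on which images are observed.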

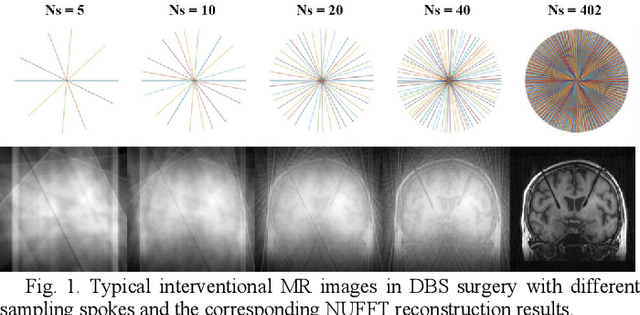

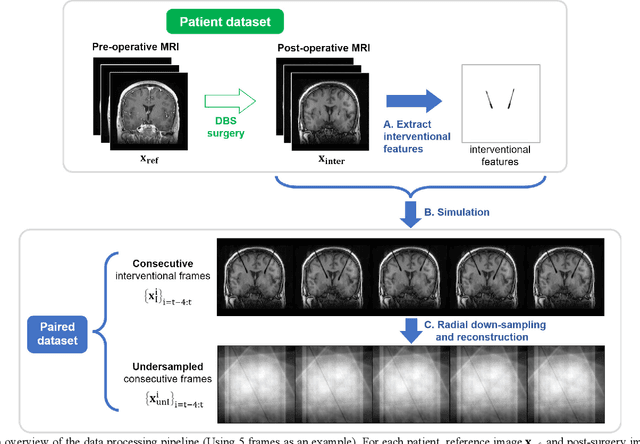

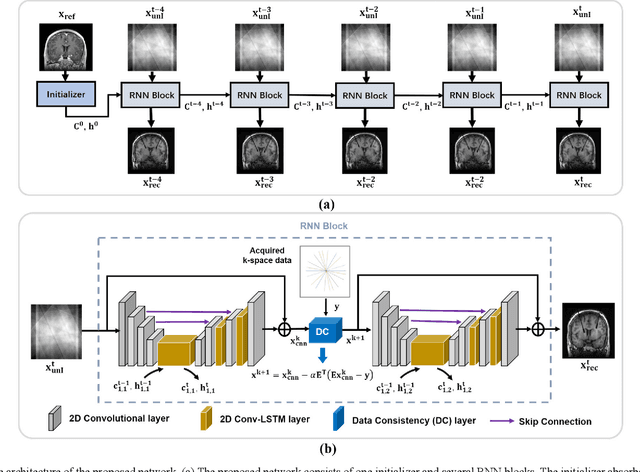

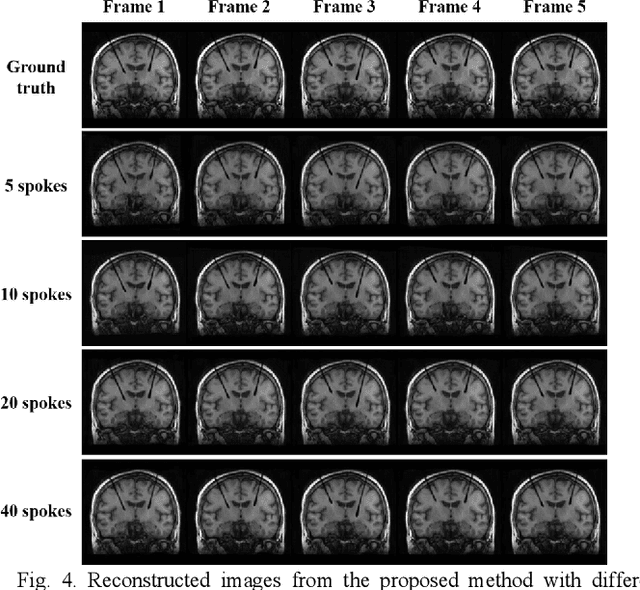

A Long Short-term Memory Based Recurrent Neural Network for Interventional MRI Reconstruction

Apr 12, 2022

Abstract: Interventional magnetic resonance imaging (i-MRI) for surgical guidance could help visualize interventional procedures such as deep brain stimulation (DBS), improving surgical performance and patient outcomes. Unlike retrospective reconstruction in conventional dynamic imaging, i-MRI for DBS has to acquire and reconstruct the interventional images sequentially online. Here we proposed a convolutional long short-term memory (Conv-LSTM) based recurrent neural network (RNN), or ConvLR, to reconstruct interventional images with golden-angle radial sampling. By using an initializer and Conv-LSTM blocks, the priors from the pre-operative reference image and intra-operative frames were exploited for reconstructing the current frame. Data consistency for radial sampling was implemented by a soft-projection method. To improve the reconstruction accuracy, an adversarial learning strategy was adopted. A set of interventional images based on the pre-operative and post-operative MR images were simulated for algorithm validation. Results showed that with only 10 radial spokes, ConvLR provided the best performance compared with state-of-the-art methods, giving an acceleration of up to 40-fold. The proposed algorithm has the potential to achieve real-time i-MRI for DBS and can be used for general-purpose MR-guided intervention.
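
Golden-angle radial sampling rotates each new spoke by roughly 111.25 degrees (180 degrees divided by the golden ratio), so any run of consecutive spokes covers k-space fairly evenly. The sketch below computes those angles and a nominal acceleration factor. The Nyquist spoke count of pi/2 times the matrix size is the usual rule of thumb for radial imaging, and the matrix size of 256 in the example is a hypothetical value, since the abstract does not state it.

```python
import math

GOLDEN_RATIO = (1 + math.sqrt(5)) / 2
GOLDEN_ANGLE_DEG = 180.0 / GOLDEN_RATIO  # about 111.25 degrees


def spoke_angles(num_spokes):
    """Angles (degrees, in [0, 180)) of consecutive golden-angle spokes."""
    return [(n * GOLDEN_ANGLE_DEG) % 180.0 for n in range(num_spokes)]


def acceleration_factor(matrix_size, num_spokes):
    """Ratio of Nyquist-rate spokes (pi/2 * matrix size) to acquired spokes."""
    return (math.pi / 2) * matrix_size / num_spokes


angles = spoke_angles(10)
# Hypothetical 256 x 256 matrix with 10 spokes per frame.
nominal_acceleration = acceleration_factor(256, 10)
```

With these assumed numbers the nominal acceleration comes out at roughly 40, the same order as the figure quoted in the abstract.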

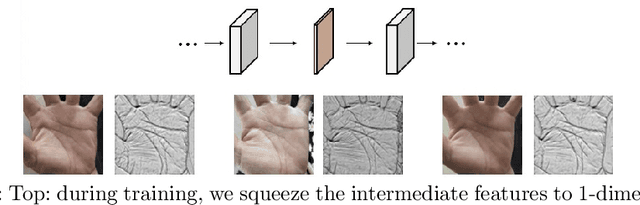

Geometric Synthesis: A Free lunch for Large-scale Palmprint Recognition Model Pretraining

Apr 11, 2022

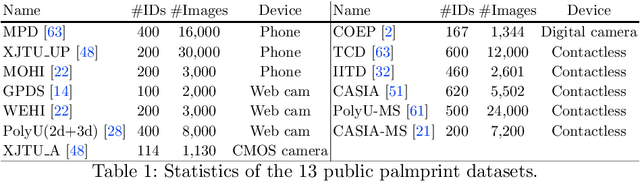

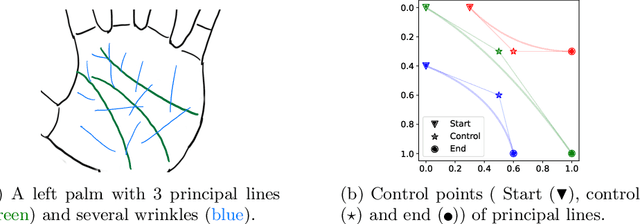

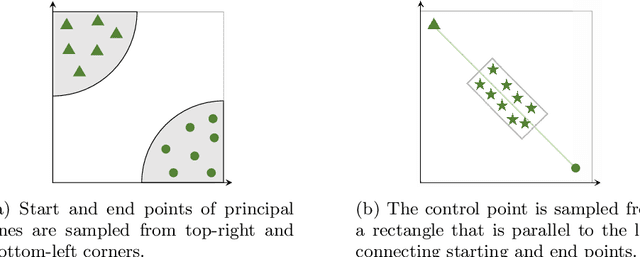

Abstract: Palmprints are private and stable information for biometric recognition. In the deep learning era, the development of palmprint recognition is limited by the lack of sufficient training data. In this paper, by observing that palmar creases are the key information for deep-learning-based palmprint recognition, we propose to synthesize training data by manipulating palmar creases. Concretely, we introduce an intuitive geometric model which represents palmar creases with parameterized Bézier curves. By randomly sampling Bézier parameters, we can synthesize massive training samples of diverse identities, which enables us to pretrain large-scale palmprint recognition models. Experimental results demonstrate that such synthetically pretrained models have a very strong generalization ability: they can be efficiently transferred to real datasets, leading to significant performance improvements on palmprint recognition. For example, under the open-set protocol, our method improves the strong ArcFace baseline by more than 10% in terms of TAR@1e-6. Under the closed-set protocol, our method reduces the equal error rate (EER) by an order of magnitude.
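
The idea of generating crease-like curves from randomly sampled Bézier parameters can be sketched as follows. This is a minimal illustration, not the paper's generator: the cubic degree, the uniform sampling of control points in the unit square, and the names `bezier_point` and `random_crease` are assumptions.

```python
import random


def bezier_point(control_points, t):
    """Evaluate a Bezier curve at t in [0, 1] with De Casteljau's algorithm."""
    points = list(control_points)
    while len(points) > 1:
        # Linearly interpolate between each pair of neighbouring points.
        points = [
            ((1 - t) * x0 + t * x1, (1 - t) * y0 + t * y1)
            for (x0, y0), (x1, y1) in zip(points, points[1:])
        ]
    return points[0]


def random_crease(rng, num_samples=20):
    """Sample a polyline along a cubic Bezier curve with random control points.

    Control points are drawn uniformly from the unit square; this sampling
    scheme is a placeholder, not the distribution used in the paper.
    """
    control = [(rng.random(), rng.random()) for _ in range(4)]
    return [
        bezier_point(control, i / (num_samples - 1)) for i in range(num_samples)
    ]


rng = random.Random(0)  # fixed seed so the synthetic sample is reproducible
crease = random_crease(rng)
```

Drawing a fresh set of control points per synthetic identity, and perturbing them slightly per sample, is one plausible way to obtain many identities with intra-class variation.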

GigaST: A 10,000-hour Pseudo Speech Translation Corpus

Apr 08, 2022

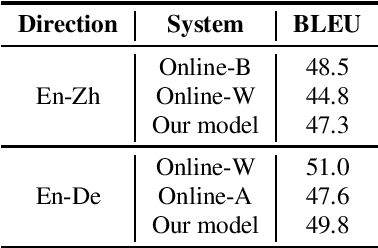

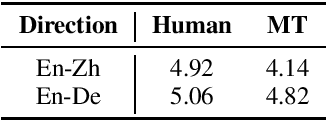

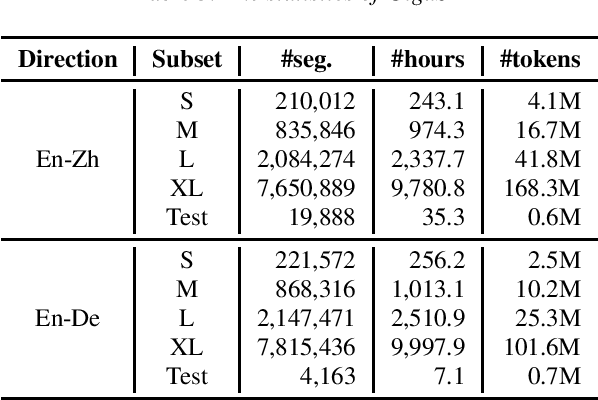

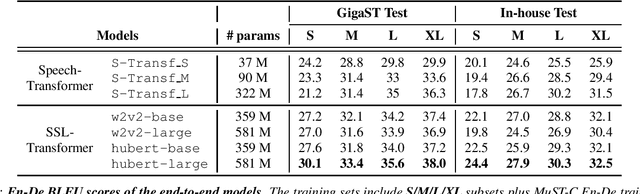

Abstract: This paper introduces GigaST, a large-scale pseudo speech translation (ST) corpus. We create the corpus by translating the text in GigaSpeech, an English ASR corpus, into German and Chinese. The training set is translated by a strong machine translation system and the test set is translated by humans. ST models trained with the addition of our corpus obtain new state-of-the-art results on the MuST-C English-German benchmark test set. We provide a detailed description of the translation process and verify its quality. We make the translated text data public and hope to facilitate research in speech translation. We also release the training scripts on NeurST to make it easy to replicate our systems. The GigaST dataset is available at https://st-benchmark.github.io/resources/GigaST.

PoseTriplet: Co-evolving 3D Human Pose Estimation, Imitation, and Hallucination under Self-supervision

Mar 29, 2022

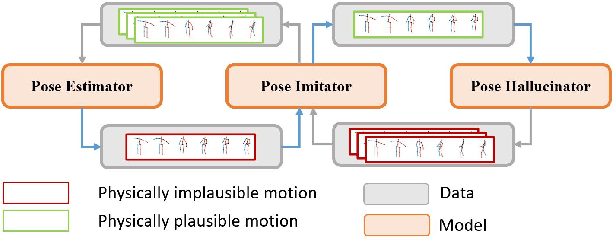

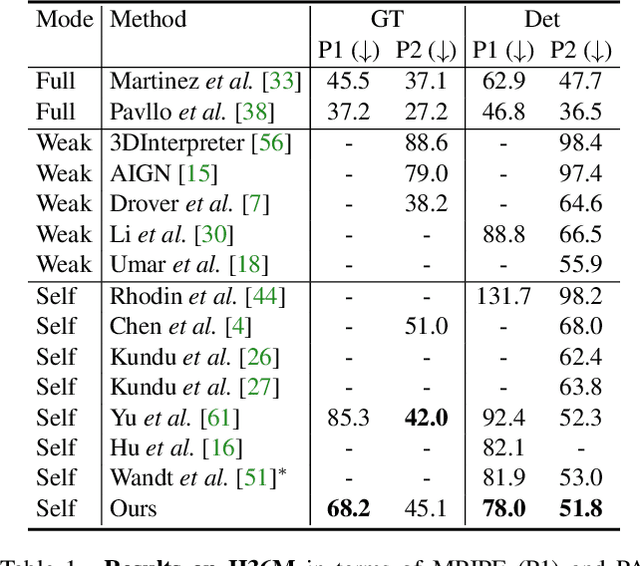

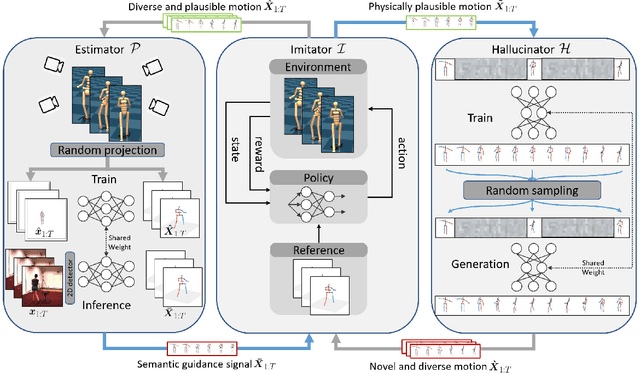

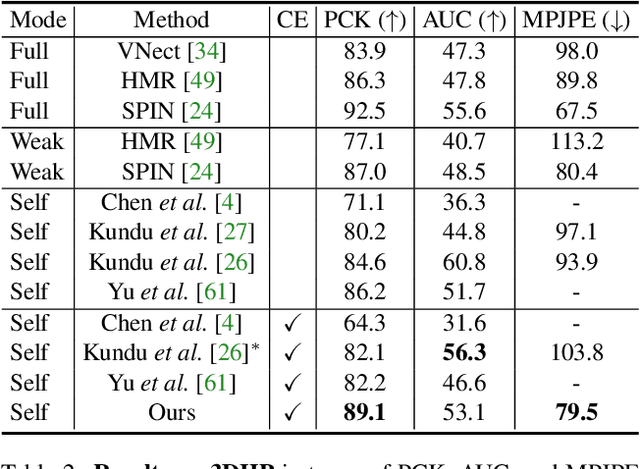

Abstract: Existing self-supervised 3D human pose estimation schemes have largely relied on weak supervision such as consistency losses to guide the learning, which inevitably leads to inferior results in real-world scenarios with unseen poses. In this paper, we propose a novel self-supervised approach that allows us to explicitly generate 2D-3D pose pairs for augmenting supervision, through a self-enhancing dual-loop learning framework. This is made possible by introducing a reinforcement-learning-based imitator, which is learned jointly with a pose estimator alongside a pose hallucinator; the three components form two loops during the training process, complementing and strengthening one another. Specifically, the pose estimator transforms an input 2D pose sequence to a low-fidelity 3D output, which is then enhanced by the imitator that enforces physical constraints. The refined 3D poses are subsequently fed to the hallucinator for producing even more diverse data, which are, in turn, strengthened by the imitator and further utilized to train the pose estimator. Such a co-evolution scheme, in practice, enables training a pose estimator on self-generated motion data without relying on any given 3D data. Extensive experiments across various benchmarks demonstrate that our approach yields encouraging results, significantly outperforming the state of the art and, in some cases, even on par with the results of fully supervised methods. Notably, it achieves 89.1% 3D PCK on MPI-INF-3DHP under the self-supervised cross-dataset evaluation setup, improving upon the previous best self-supervised methods by 8.6%. Code can be found at: https://github.com/Garfield-kh/PoseTriplet
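
The 3D PCK figure quoted above is the percentage of joints whose predicted position lies within a distance threshold of the ground truth. The sketch below shows that computation. The 150 mm default is the threshold commonly used with MPI-INF-3DHP and is an assumption here, since the abstract does not state it; the function name `pck_3d` is hypothetical.

```python
import math


def pck_3d(predicted, ground_truth, threshold_mm=150.0):
    """Percentage of joints whose Euclidean error is within the threshold."""
    correct = sum(
        1
        for p, g in zip(predicted, ground_truth)
        if math.dist(p, g) <= threshold_mm
    )
    return 100.0 * correct / len(ground_truth)


# Four joints with errors of 0, 100, 200 and 10 mm against the origin.
predicted = [(0, 0, 0), (100, 0, 0), (0, 200, 0), (0, 0, 10)]
ground_truth = [(0, 0, 0)] * 4
score = pck_3d(predicted, ground_truth)
```

Three of the four toy joints fall within 150 mm, so the score is 75.0.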

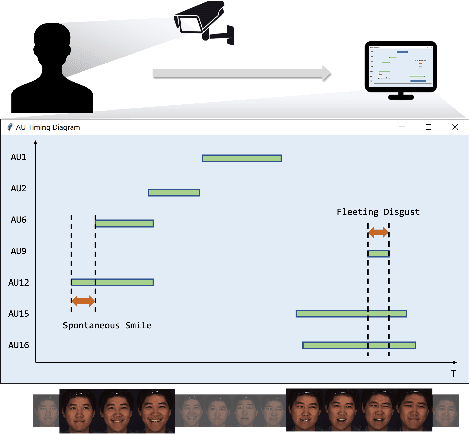

EventFormer: AU Event Transformer for Facial Action Unit Event Detection

Mar 12, 2022

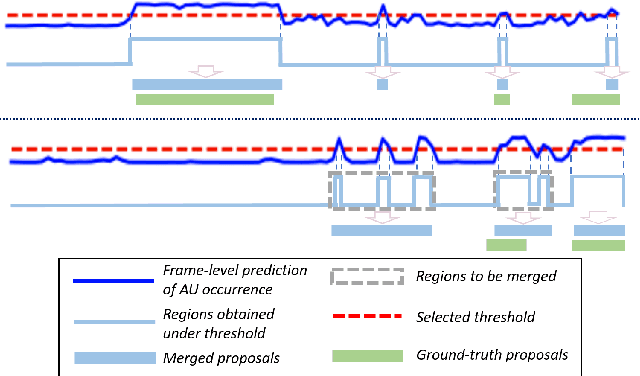

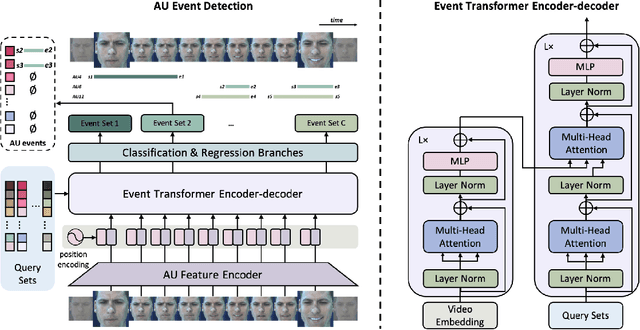

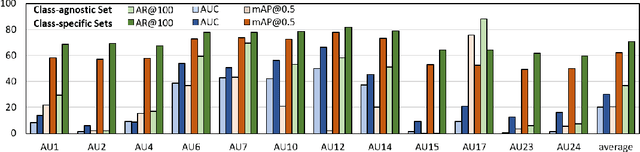

Abstract: Facial action units (AUs) play an indispensable role in human emotion analysis. We observe that although AU-based high-level emotion analysis is urgently needed by real-world applications, frame-level AU results provided by previous works cannot be directly used for such analysis. Moreover, as AUs are dynamic processes, the utilization of global temporal information is important but has been gravely ignored in the literature. To this end, we propose EventFormer for AU event detection, which is the first work directly detecting AU events from a video sequence by viewing AU event detection as a multiple class-specific sets prediction problem. Extensive experiments conducted on a commonly used AU benchmark dataset, BP4D, show the superiority of EventFormer under suitable metrics.

An STDP-Based Supervised Learning Algorithm for Spiking Neural Networks

Mar 07, 2022

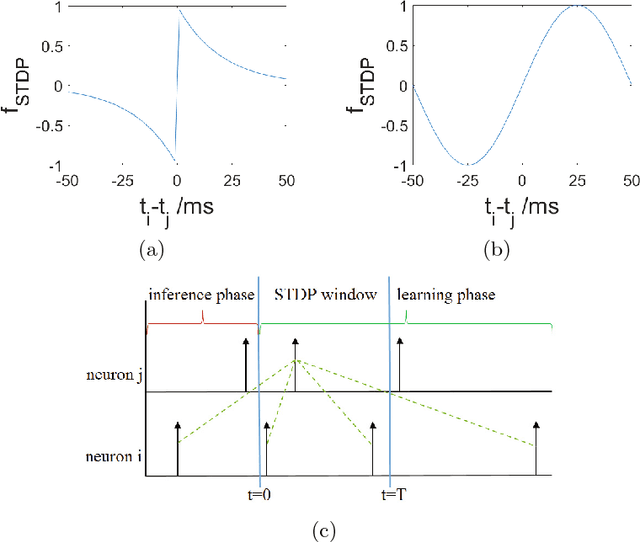

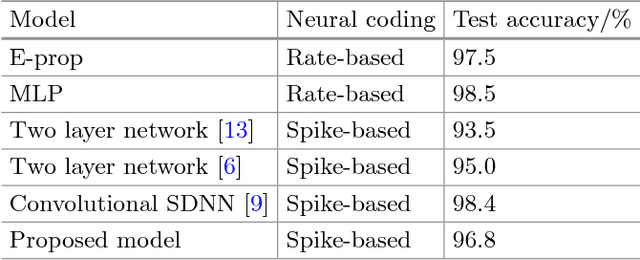

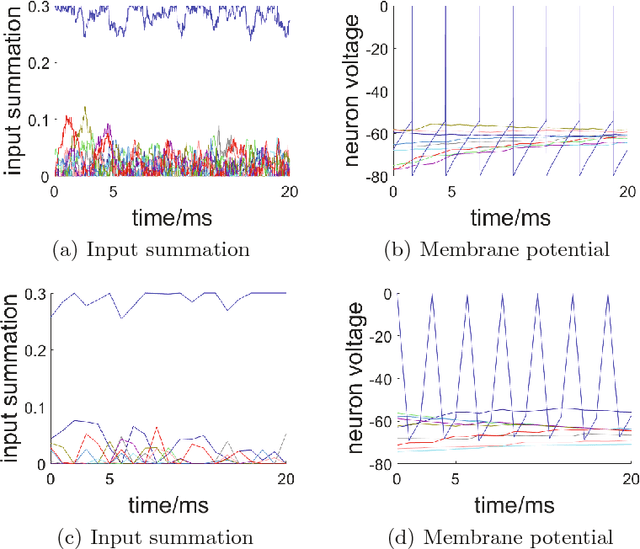

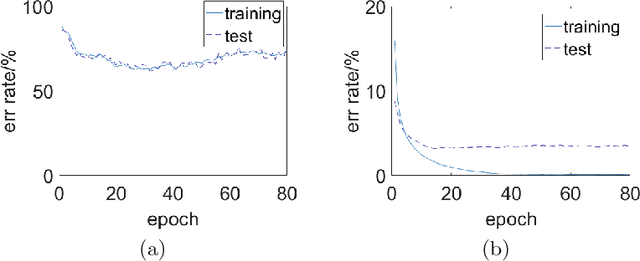

Abstract: Compared with rate-based artificial neural networks, Spiking Neural Networks (SNNs) provide a more biologically plausible model of the brain, but how they perform supervised learning remains elusive. Inspired by recent works of Bengio et al., we propose a supervised learning algorithm based on Spike-Timing Dependent Plasticity (STDP) for a hierarchical SNN consisting of Leaky Integrate-and-Fire (LIF) neurons. A time window is designed for the presynaptic neuron, and only the spikes in this window take part in the STDP updating process. The model is trained on the MNIST dataset. The classification accuracy approaches that of a Multilayer Perceptron (MLP) with a similar architecture trained by the standard back-propagation algorithm.
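
The role of the presynaptic time window can be illustrated with a simple pair-based potentiation rule in which only presynaptic spikes that fall inside the window before a postsynaptic spike change the weight. The exponential form and the parameters `a_plus` and `tau` are generic textbook choices assumed for this sketch; the abstract does not specify the paper's update rule.

```python
import math


def stdp_update(pre_spikes, post_spike, window, a_plus=0.01, tau=20.0):
    """Weight change from presynaptic spikes inside a window before a post spike.

    Only pre spikes with 0 <= post_spike - t_pre <= window contribute, each
    adding a_plus * exp(-(post_spike - t_pre) / tau). Times are in ms.
    """
    delta_w = 0.0
    for t_pre in pre_spikes:
        dt = post_spike - t_pre
        if 0.0 <= dt <= window:
            delta_w += a_plus * math.exp(-dt / tau)
    return delta_w


# Spikes at 0 ms and 45 ms; the post spike is at 50 ms with a 10 ms window,
# so only the 45 ms spike takes part in the update.
delta = stdp_update([0.0, 45.0], post_spike=50.0, window=10.0)
```

Presynaptic spikes outside the window are ignored entirely, and within the window a spike closer to the postsynaptic spike produces a larger change.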
