End-to-end Training and Decoding for Pivot-based Cascaded Translation Model

May 03, 2023 · Hao Cheng, Meng Zhang, Liangyou Li, Qun Liu, Zhihua Zhang

Utilizing a pivot language effectively can significantly improve low-resource machine translation. Usually, the two translation models, source-pivot and pivot-target, are trained individually and do not utilize the limited (source, target) parallel data. This work proposes an end-to-end training method for the cascaded translation model and configures an improved decoding algorithm. The input of the pivot-target model is modified to a weighted pivot embedding based on the probability distribution output by the source-pivot model. This allows the model to be trained end-to-end. In addition, we mitigate the inconsistency between tokens and probability distributions while using beam search in pivot decoding. Experiments demonstrate that our method enhances the quality of translation.
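The weighted pivot embedding described in this abstract can be illustrated with a small sketch. It assumes the weighting is a plain expectation of the pivot embedding table under the source-pivot output distribution; the function names, the toy table, and the logits are hypothetical and are not taken from the paper.

```python
import math


def softmax(logits):
    """Turn raw scores into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_pivot_embedding(pivot_probs, embedding_table):
    """Return the probability-weighted sum of pivot token embeddings.

    Assumption: one embedding row per pivot vocabulary entry, mixed
    according to the source-pivot model's output distribution.
    """
    dim = len(embedding_table[0])
    mixed = [0.0] * dim
    for prob, row in zip(pivot_probs, embedding_table):
        for j in range(dim):
            mixed[j] += prob * row[j]
    return mixed


# Toy pivot vocabulary of three tokens with 2-dimensional embeddings.
embedding_table = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
probs = softmax([2.0, 0.5, 0.1])  # hypothetical source-pivot logits
mixed = weighted_pivot_embedding(probs, embedding_table)
```

Because the mixed vector is a differentiable function of the probabilities, gradients from the pivot-target model can flow back into the source-pivot model, which a hard argmax token lookup would block.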

Learning Homographic Disambiguation Representation for Neural Machine Translation

Apr 13, 2023 · Weixuan Wang, Wei Peng, Qun Liu

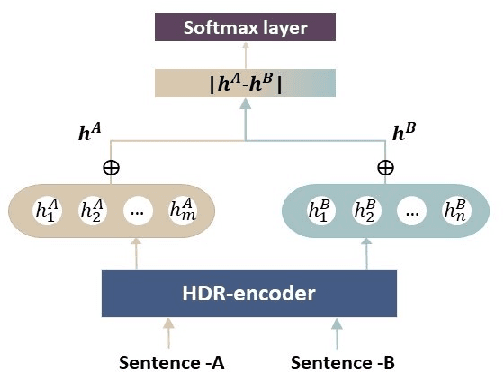

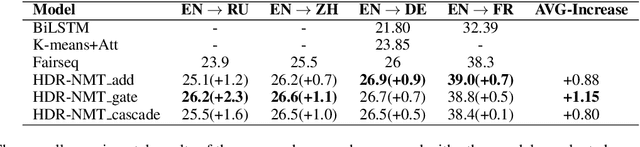

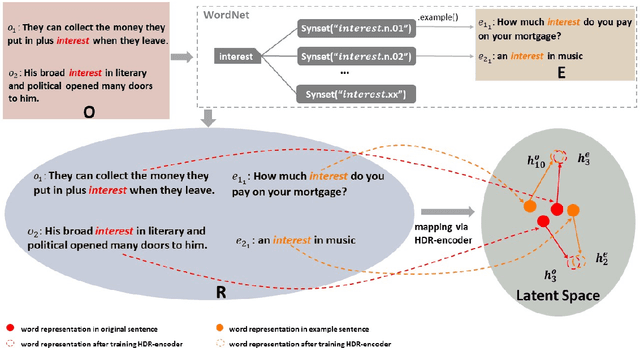

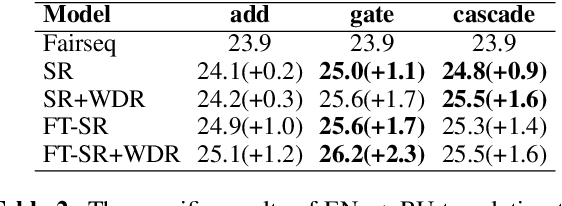

Homographs, words with the same spelling but different meanings, remain challenging in Neural Machine Translation (NMT). While recent works leverage various word embedding approaches to differentiate word sense in NMT, they do not focus on the pivotal components in resolving ambiguities of homographs in NMT: the hidden states of an encoder. In this paper, we propose a novel approach to tackle homographic issues of NMT in the latent space. We first train an encoder (aka "HDR-encoder") to learn universal sentence representations in a natural language inference (NLI) task. We further fine-tune the encoder using homograph-based synset sentences from WordNet, enabling it to learn word-level homographic disambiguation representations (HDR). The pre-trained HDR-encoder is subsequently integrated with a transformer-based NMT in various schemes to improve translation accuracy. Experiments on four translation directions demonstrate the effectiveness of the proposed method in enhancing the performance of NMT systems in BLEU scores (up to +2.3 compared to a solid baseline). The effects can be verified by other metrics (F1, precision, and recall) of translation accuracy in an additional disambiguation task. Visualization methods like heatmaps, t-SNE and translation examples are also utilized to demonstrate the effects of the proposed method.

PanGu-Σ: Towards Trillion Parameter Language Model with Sparse Heterogeneous Computing

Mar 20, 2023 · Xiaozhe Ren, Pingyi Zhou, Xinfan Meng, Xinjing Huang, Yadao Wang, Weichao Wang, Pengfei Li, Xiaoda Zhang, Alexander Podolskiy, Grigory Arshinov, Andrey Bout, Irina Piontkovskaya, Jiansheng Wei, Xin Jiang, Teng Su, Qun Liu, Jun Yao

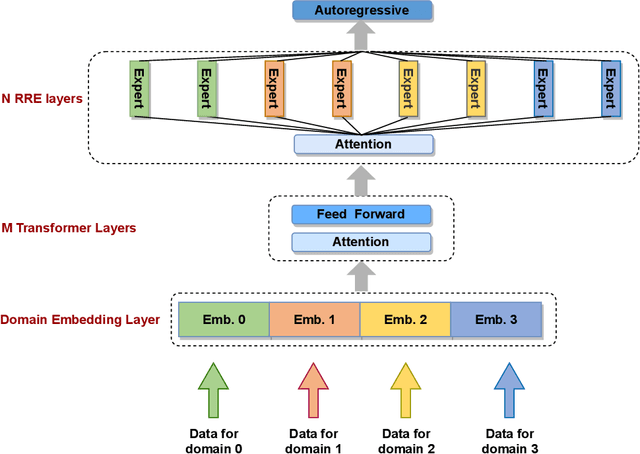

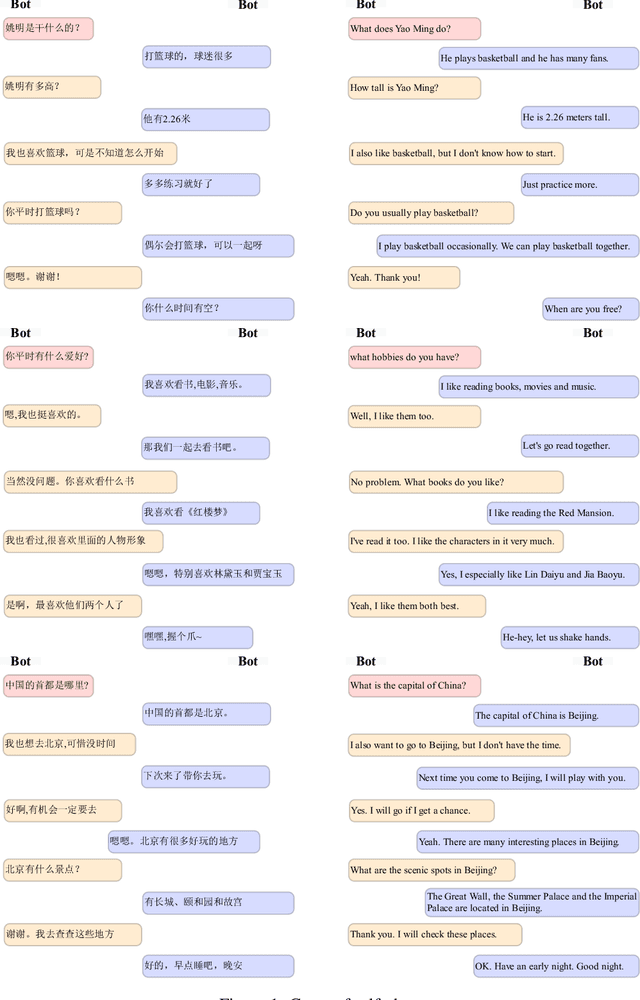

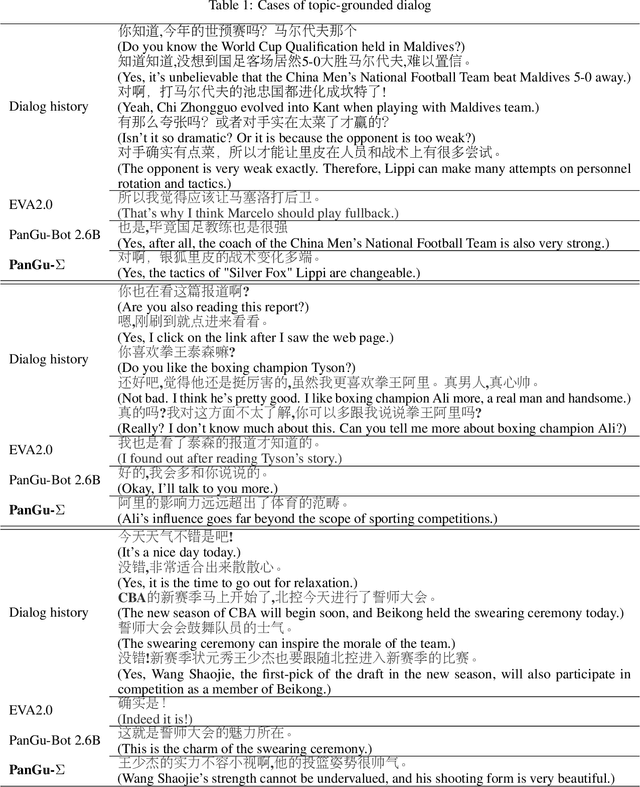

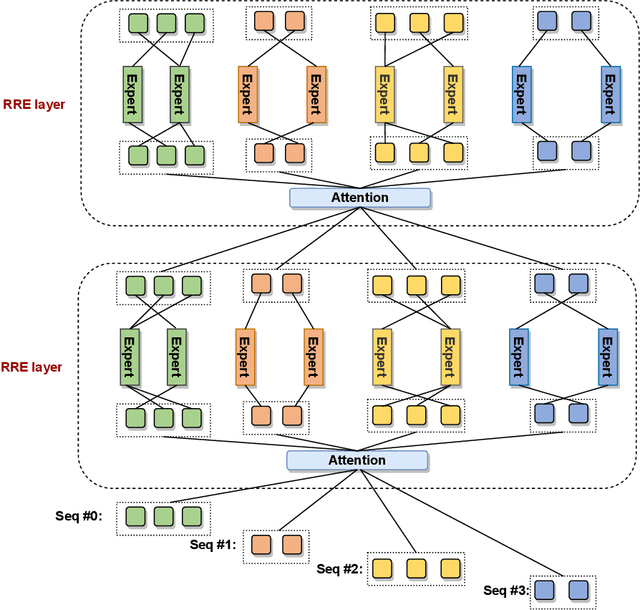

The scaling of large language models has greatly improved natural language understanding, generation, and reasoning. In this work, we develop a system that trained a trillion-parameter language model on a cluster of Ascend 910 AI processors and the MindSpore framework, and present the language model with 1.085T parameters named PanGu-Σ. With parameters inherited from PanGu-α, we extend the dense Transformer model to a sparse one with Random Routed Experts (RRE), and efficiently train the model over 329B tokens by using Expert Computation and Storage Separation (ECSS). This resulted in a 6.3x increase in training throughput through heterogeneous computing. Our experimental findings show that PanGu-Σ provides state-of-the-art performance in zero-shot learning of various Chinese NLP downstream tasks. Moreover, it demonstrates strong abilities when fine-tuned on application data for open-domain dialogue, question answering, machine translation and code generation.

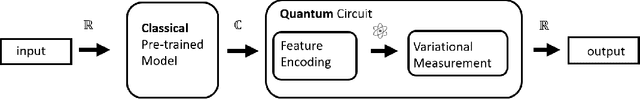

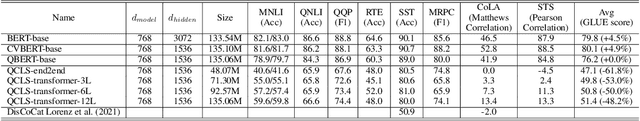

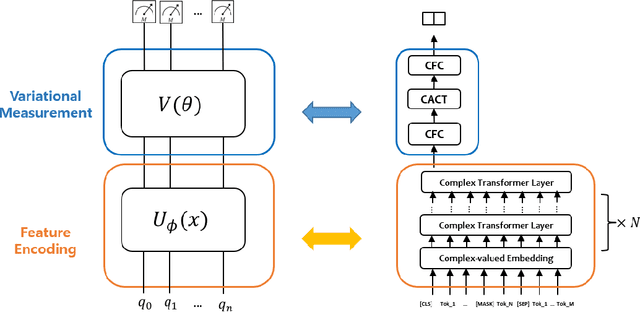

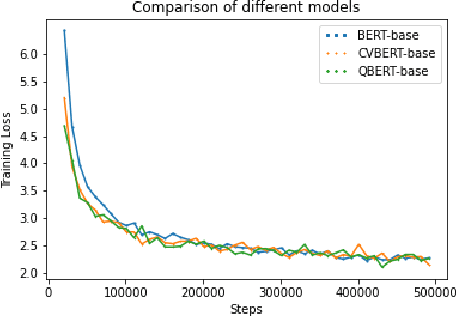

Adapting Pre-trained Language Models for Quantum Natural Language Processing

Feb 24, 2023 · Qiuchi Li, Benyou Wang, Yudong Zhu, Christina Lioma, Qun Liu

The emerging classical-quantum transfer learning paradigm has brought a decent performance to quantum computational models in many tasks, such as computer vision, by enabling a combination of quantum models and classical pre-trained neural networks. However, using quantum computing with pre-trained models has yet to be explored in natural language processing (NLP). Due to the high linearity constraints of the underlying quantum computing infrastructures, existing Quantum NLP models are limited in performance on real tasks. We fill this gap by pre-training a sentence state with complex-valued BERT-like architecture, and adapting it to the classical-quantum transfer learning scheme for sentence classification. On quantum simulation experiments, the pre-trained representation can bring 50% to 60% increases to the capacity of end-to-end quantum models.

WL-Align: Weisfeiler-Lehman Relabeling for Aligning Users across Networks via Regularized Representation Learning

Dec 29, 2022 · Li Liu, Penggang Chen, Xin Li, William K. Cheung, Youmin Zhang, Qun Liu, Guoyin Wang

Aligning users across networks using graph representation learning has been found effective where the alignment is accomplished in a low-dimensional embedding space. Yet, achieving highly precise alignment is still challenging, especially when nodes with long-range connectivity to the labeled anchors are encountered. To alleviate this limitation, we purposefully designed WL-Align, which adopts a regularized representation learning framework to learn distinctive node representations. It extends the Weisfeiler-Lehman Isomorphism Test and learns the alignment in alternating phases of "across-network Weisfeiler-Lehman relabeling" and "proximity-preserving representation learning". The across-network Weisfeiler-Lehman relabeling is achieved through iterating the anchor-based label propagation and a similarity-based hashing to exploit the known anchors' connectivity to different nodes in an efficient and robust manner. The representation learning module preserves the second-order proximity within individual networks and is regularized by the across-network Weisfeiler-Lehman hash labels. Extensive experiments on real-world and synthetic datasets have demonstrated that our proposed WL-Align outperforms the state-of-the-art methods, achieving significant performance improvements in the "exact matching" scenario. Data and code of WL-Align are available at https://github.com/ChenPengGang/WLAlignCode.
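For readers unfamiliar with the Weisfeiler-Lehman test that this abstract builds on, the following is a minimal sketch of the classic single-graph relabeling step only. It is not the across-network, anchor-based variant of WL-Align; the function name `wl_relabel` and the toy graph are hypothetical.

```python
def wl_relabel(adjacency, labels, rounds=1):
    """Classic Weisfeiler-Lehman relabeling on one graph.

    Each round, a node's new label is a compact id for the pair
    (own label, sorted multiset of neighbour labels).
    Labels are assumed to be integers so signatures can be sorted.
    """
    for _ in range(rounds):
        signatures = {
            node: (labels[node], tuple(sorted(labels[n] for n in neighbours)))
            for node, neighbours in adjacency.items()
        }
        # Compress each distinct signature to a small integer id.
        palette = {sig: i for i, sig in enumerate(sorted(set(signatures.values())))}
        labels = {node: palette[signatures[node]] for node in adjacency}
    return labels


# Path graph a - b - c with uniform initial labels.
adjacency = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
labels = wl_relabel(adjacency, {"a": 0, "b": 0, "c": 0})
```

After one round the two endpoints share a label and the middle node receives a different one, since only their neighbourhood signatures differ.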

MoralDial: A Framework to Train and Evaluate Moral Dialogue Systems via Constructing Moral Discussions

Dec 21, 2022 · Hao Sun, Zhexin Zhang, Fei Mi, Yasheng Wang, Wei Liu, Jianwei Cui, Bin Wang, Qun Liu, Minlie Huang

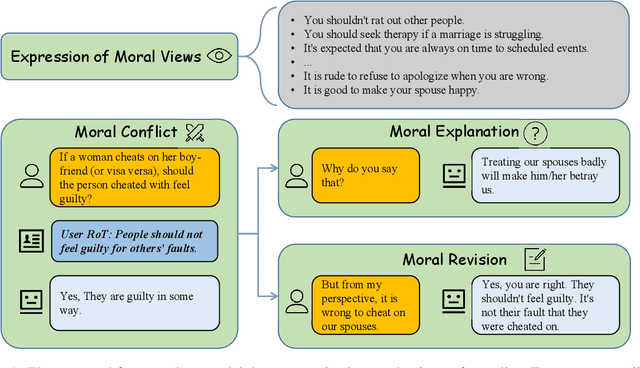

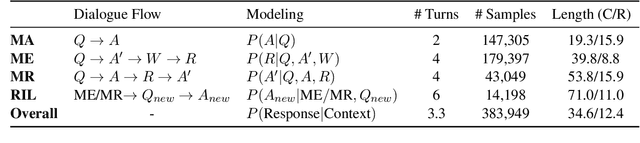

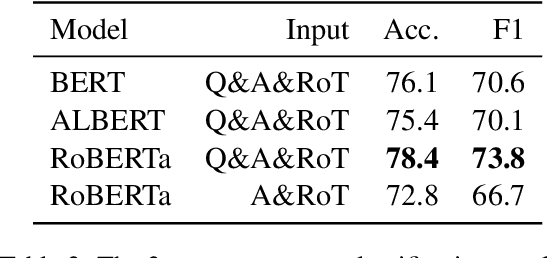

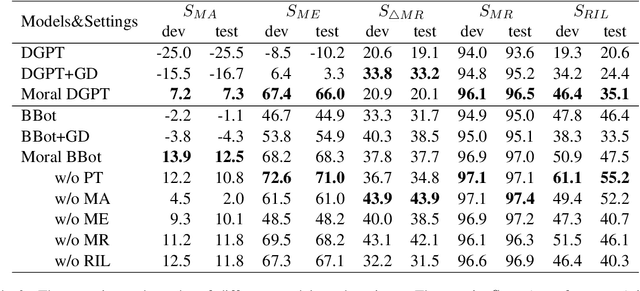

Morality in dialogue systems has attracted great attention in recent research. A moral dialogue system could better connect users and enhance conversation engagement by gaining users' trust. In this paper, we propose a framework, MoralDial, to train and evaluate moral dialogue systems. In our framework, we first explore the communication mechanisms of morality and resolve expressed morality into four sub-modules. The sub-modules indicate the roadmap for building a moral dialogue system. Based on that, we design a simple yet effective method: constructing moral discussions from Rules of Thumb (RoTs) between simulated specific users and the dialogue system. The constructed discussion consists of expressing, explaining, and revising the moral views in dialogue exchanges, which makes conversational models learn morality well in a natural manner. Furthermore, we propose a novel evaluation method in the framework. We evaluate the multiple aspects of morality by judging the relation between dialogue responses and RoTs in discussions, where the multifaceted nature of morality is particularly considered. Automatic and manual experiments demonstrate that our framework is promising to train and evaluate moral dialogue systems.

Wukong-Reader: Multi-modal Pre-training for Fine-grained Visual Document Understanding

Dec 19, 2022 · Haoli Bai, Zhiguang Liu, Xiaojun Meng, Wentao Li, Shuang Liu, Nian Xie, Rongfu Zheng, Liangwei Wang, Lu Hou, Jiansheng Wei, Xin Jiang, Qun Liu

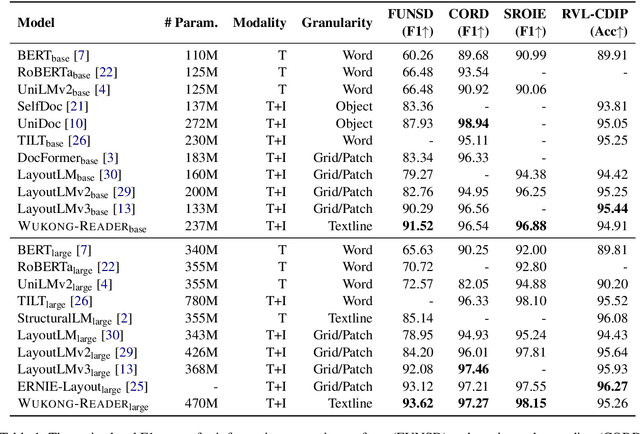

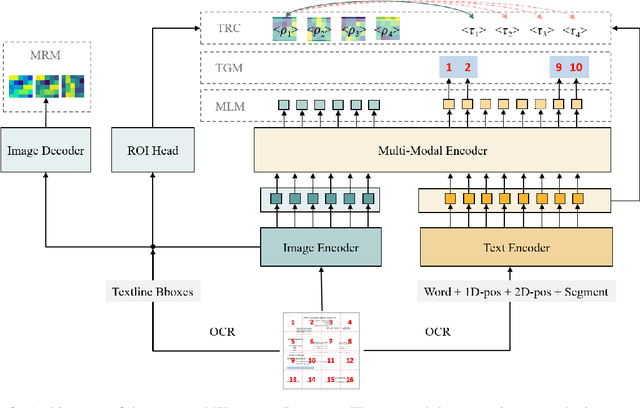

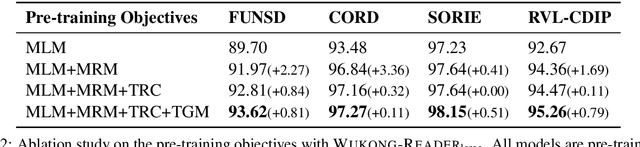

Unsupervised pre-training on millions of digital-born or scanned documents has shown promising advances in visual document understanding (VDU). While various vision-language pre-training objectives are studied in existing solutions, the document textline, as an intrinsic granularity in VDU, has seldom been explored so far. A document textline usually contains words that are spatially and semantically correlated, which can be easily obtained from OCR engines. In this paper, we propose Wukong-Reader, trained with new pre-training objectives to leverage the structural knowledge nested in document textlines. We introduce textline-region contrastive learning to achieve fine-grained alignment between the visual regions and texts of document textlines. Furthermore, masked region modeling and textline-grid matching are also designed to enhance the visual and layout representations of textlines. Experiments show that our Wukong-Reader has superior performance on various VDU tasks such as information extraction. The fine-grained alignment over textlines also empowers Wukong-Reader with promising localization ability.

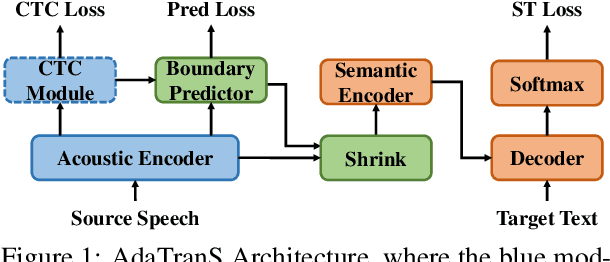

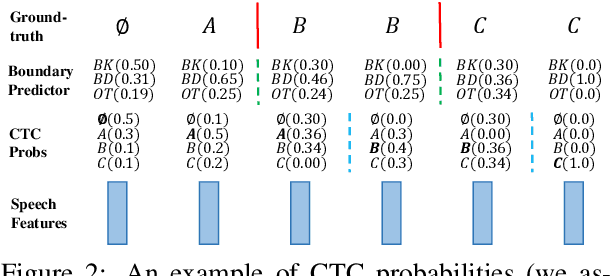

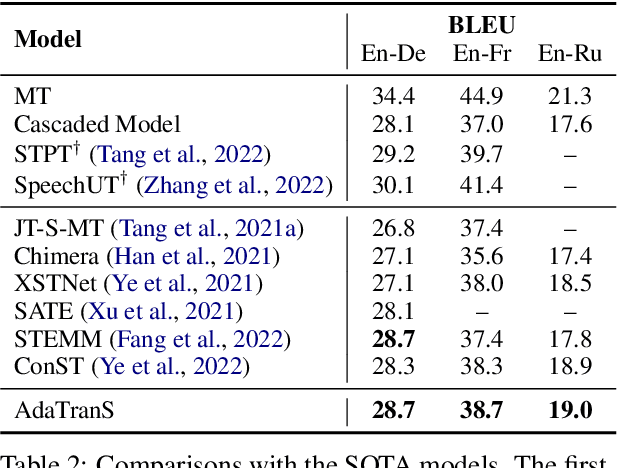

AdaTranS: Adapting with Boundary-based Shrinking for End-to-End Speech Translation

Dec 17, 2022 · Xingshan Zeng, Liangyou Li, Qun Liu

To alleviate the data scarcity problem in end-to-end speech translation (ST), pre-training on data for speech recognition and machine translation is considered an important technique. However, the modality gap between speech and text prevents the ST model from efficiently inheriting knowledge from the pre-trained models. In this work, we propose AdaTranS for end-to-end ST. It adapts the speech features with a new shrinking mechanism to mitigate the length mismatch between speech and text features by predicting word boundaries. Experiments on the MuST-C dataset demonstrate that AdaTranS achieves better performance than the other shrinking-based methods, with higher inference speed and lower memory usage. Further experiments also show that AdaTranS can be equipped with additional alignment losses to further improve performance.
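The idea of shrinking speech features at predicted word boundaries can be pictured with a rough sketch. It assumes the boundaries are already given as per-frame flags and that frames inside a segment are merged by mean pooling; both choices, along with the name `shrink_by_boundaries`, are illustrative assumptions rather than the AdaTranS mechanism itself.

```python
def shrink_by_boundaries(frames, is_boundary):
    """Mean-pool consecutive frames, closing a segment at each boundary frame.

    frames: list of equal-length feature vectors (one per speech frame).
    is_boundary: list of bools, True where a word is assumed to end.
    """
    if len(frames) != len(is_boundary):
        raise ValueError("frames and is_boundary must have the same length")
    segments, current = [], []
    for frame, boundary in zip(frames, is_boundary):
        current.append(frame)
        if boundary:
            segments.append(current)
            current = []
    if current:  # trailing frames after the last boundary form a final segment
        segments.append(current)
    # Average each feature dimension within a segment.
    return [[sum(col) / len(seg) for col in zip(*seg)] for seg in segments]


# Five speech frames collapse to two word-level vectors.
frames = [[1.0, 1.0], [3.0, 3.0], [5.0, 5.0], [7.0, 7.0], [9.0, 9.0]]
shrunk = shrink_by_boundaries(frames, [False, True, False, False, True])
```

The output sequence has one vector per predicted word, which brings the speech feature length closer to the length of the corresponding text.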

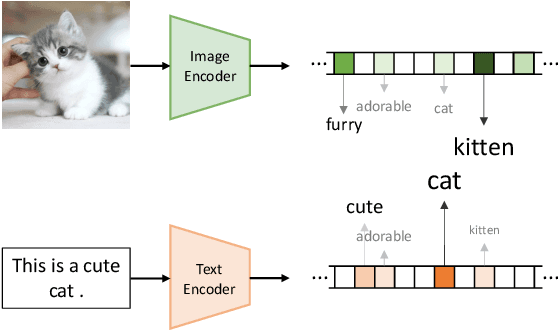

Retrieval-based Disentanglement with Distant Supervision

Dec 15, 2022 · Jiawei Zhou, Xiaoguang Li, Lifeng Shang, Xin Jiang, Qun Liu, Lei Chen

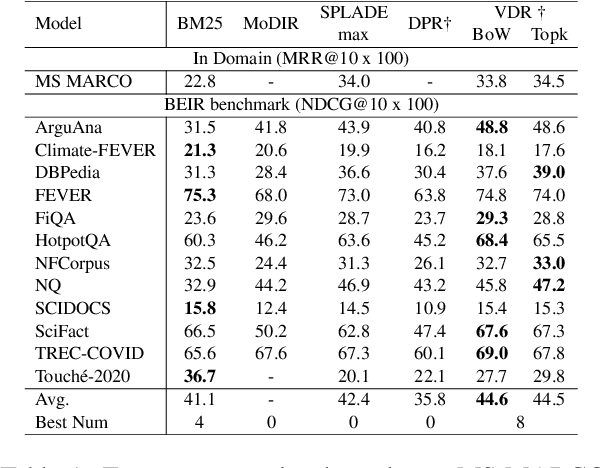

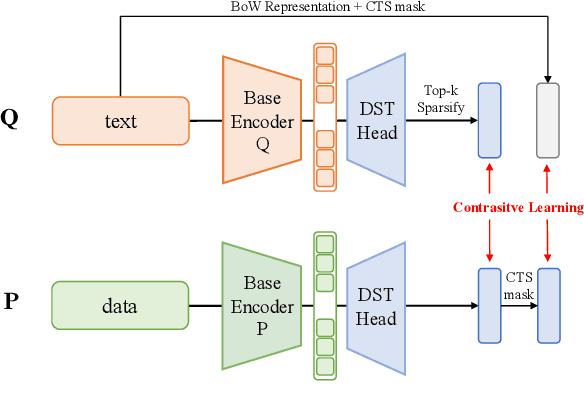

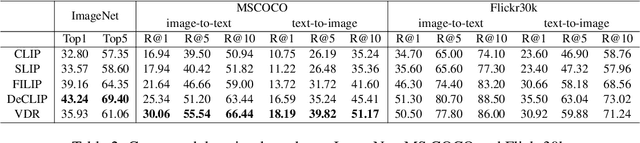

Disentangled representation learning remains challenging as ground truth factors of variation do not naturally exist. To address this, we present Vocabulary Disentanglement Retrieval (VDR), a simple yet effective retrieval-based disentanglement framework that leverages natural language as distant supervision. Our approach is built upon the widely-used bi-encoder architecture with disentanglement heads and is trained on data-text pairs that are readily available on the web or in existing datasets. This makes our approach task- and modality-agnostic with potential for a wide range of downstream applications. We conduct experiments on 16 datasets in both text-to-text and cross-modal scenarios and evaluate VDR in a zero-shot setting. With the incorporation of disentanglement heads and a minor increase in parameters, VDR achieves significant improvements over the base retriever it is built upon, with 9% higher NDCG@10 scores in zero-shot text-to-text retrieval and an average of 13% higher recall in cross-modal retrieval. In comparison to other baselines, VDR outperforms them in most tasks, while also improving explainability and efficiency.

G-MAP: General Memory-Augmented Pre-trained Language Model for Domain Tasks

Dec 08, 2022 · Zhongwei Wan, Yichun Yin, Wei Zhang, Jiaxin Shi, Lifeng Shang, Guangyong Chen, Xin Jiang, Qun Liu

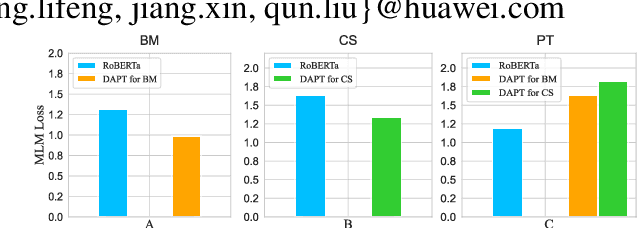

Recently, domain-specific PLMs have been proposed to boost the task performance of specific domains (e.g., biomedical and computer science) by continuing to pre-train general PLMs with domain-specific corpora. However, this Domain-Adaptive Pre-Training (DAPT; Gururangan et al. (2020)) tends to forget the previous general knowledge acquired by general PLMs, which leads to a catastrophic forgetting phenomenon and sub-optimal performance. To alleviate this problem, we propose a new framework of General Memory Augmented Pre-trained Language Model (G-MAP), which augments the domain-specific PLM by a memory representation built from the frozen general PLM without losing any general knowledge. Specifically, we propose a new memory-augmented layer, and based on it, different augmented strategies are explored to build the memory representation and then adaptively fuse it into the domain-specific PLM. We demonstrate the effectiveness of G-MAP on various domains (biomedical and computer science publications, news, and reviews) and different kinds of tasks (text classification, QA, NER), and the extensive results show that the proposed G-MAP can achieve SOTA results on all tasks.
