ED2: An Environment Dynamics Decomposition Framework for World Model Construction

Dec 06, 2021 · Cong Wang, Tianpei Yang, Jianye Hao, Yan Zheng, Hongyao Tang, Fazl Barez, Jinyi Liu, Jiajie Peng, Haiyin Piao, Zhixiao Sun

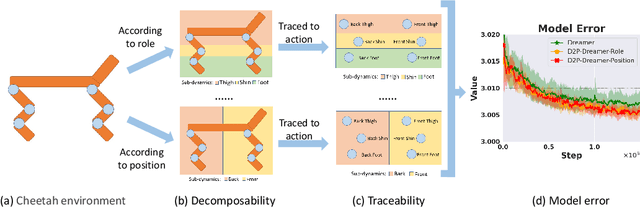

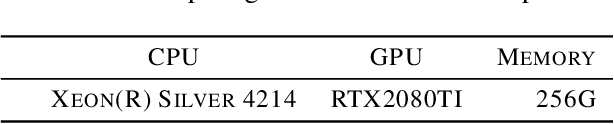

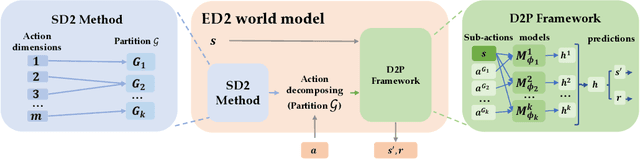

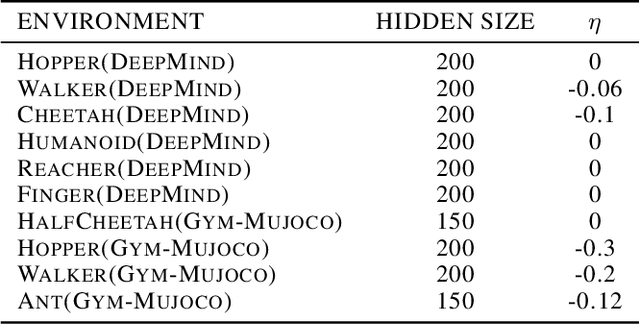

Model-based reinforcement learning (MBRL) methods achieve significant sample efficiency in many tasks, but their performance is often limited by model error. To reduce model error, previous works use a single well-designed network to fit the entire environment dynamics, treating the dynamics as a black box. However, these methods fail to consider that the environment may be decomposable: the dynamics may contain multiple sub-dynamics that can be modeled separately, allowing the world model to be constructed more accurately. In this paper, we propose Environment Dynamics Decomposition (ED2), a novel world model construction framework that models the environment in a decomposed manner. ED2 contains two key components: sub-dynamics discovery (SD2) and dynamics decomposition prediction (D2P). SD2 discovers the sub-dynamics in an environment, and D2P then constructs the decomposed world model following those sub-dynamics. ED2 can be easily combined with existing MBRL algorithms, and empirical results show that it significantly reduces model error and boosts the performance of state-of-the-art MBRL algorithms on various tasks.

Learning State Representations via Retracing in Reinforcement Learning

Nov 24, 2021 · Changmin Yu, Dong Li, Jianye Hao, Jun Wang, Neil Burgess

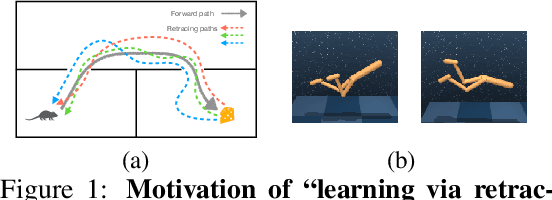

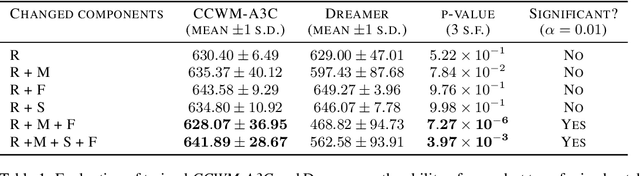

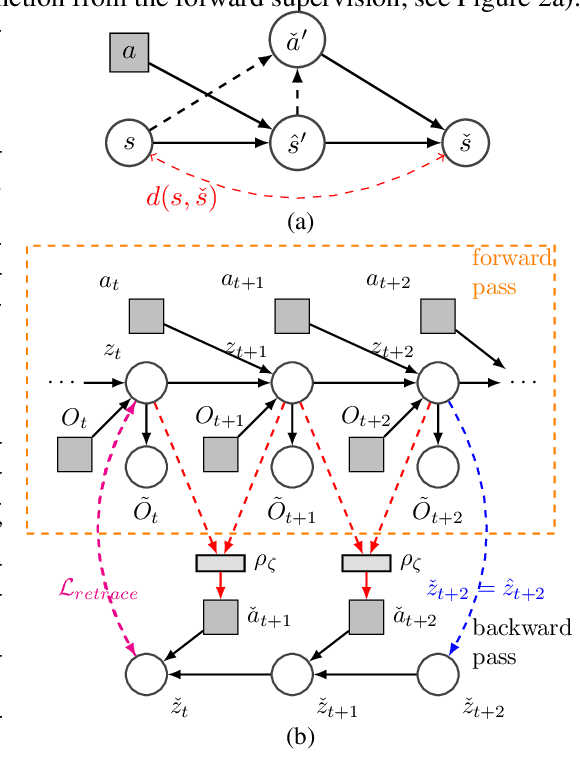

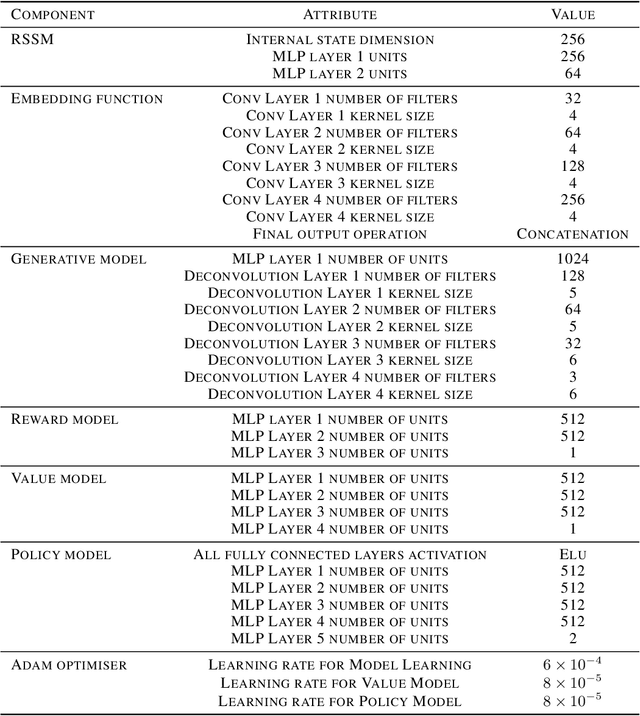

We propose learning via retracing, a novel self-supervised approach for learning the state representation (and the associated dynamics model) for reinforcement learning tasks. In addition to the predictive (reconstruction) supervision in the forward direction, we propose to include "retraced" transitions for representation/model learning by enforcing a cycle-consistency constraint between the original and retraced states, thereby improving the sample efficiency of learning. Moreover, learning via retracing explicitly propagates information about future transitions backward for inferring previous states, thus facilitating stronger representation learning. We introduce the Cycle-Consistency World Model (CCWM), a concrete instantiation of learning via retracing implemented under an existing model-based reinforcement learning framework. Additionally, we propose a novel adaptive "truncation" mechanism for counteracting the negative impact of "irreversible" transitions, so that learning via retracing can be maximally effective. Through extensive empirical studies on continuous control benchmarks, we demonstrate that CCWM achieves state-of-the-art performance in terms of sample efficiency and asymptotic performance.

Uncertainty-aware Low-Rank Q-Matrix Estimation for Deep Reinforcement Learning

Nov 19, 2021 · Tong Sang, Hongyao Tang, Jianye Hao, Yan Zheng, Zhaopeng Meng

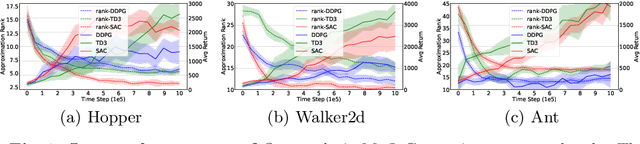

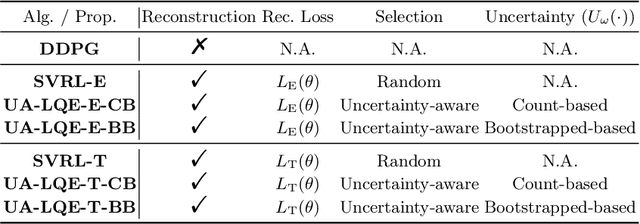

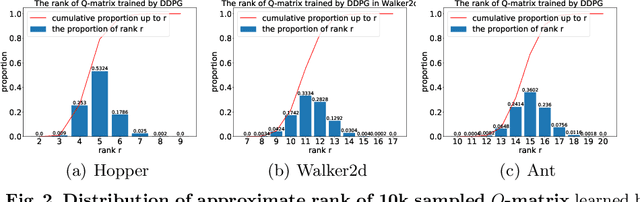

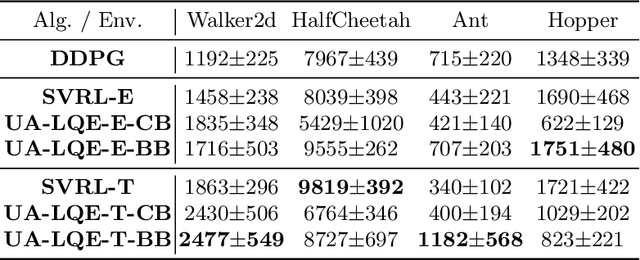

Value estimation is a key problem in Reinforcement Learning. Although Deep Reinforcement Learning (DRL) has achieved many successes in different fields, the underlying structure and learning dynamics of the value function, especially with complex function approximation, are not fully understood. In this paper, we report that the rank of the $Q$-matrix decreases during learning across a series of continuous control tasks and for different popular algorithms. We hypothesize that this low-rank phenomenon reflects a common learning dynamic in which the $Q$-matrix moves from a stochastic high-dimensional space to a smooth low-dimensional space. Moreover, we reveal a positive correlation between value matrix rank and value estimation uncertainty. Inspired by this evidence, we propose a novel Uncertainty-Aware Low-rank Q-matrix Estimation (UA-LQE) algorithm as a general framework to facilitate the learning of the value function. By quantifying the uncertainty of state-action value estimates, we selectively erase the highly uncertain entries of the state-action value matrix and recover their values through low-rank matrix reconstruction. This reconstruction exploits the underlying structure of the value matrix to improve value approximation, leading to more efficient learning of the value function. In the experiments, we evaluate the efficacy of UA-LQE on several representative OpenAI MuJoCo continuous control tasks.
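The erase-and-reconstruct step can be sketched as follows. This is a minimal illustration that assumes a small tabular $Q$-matrix, a per-entry uncertainty score, and a simple iterative truncated-SVD imputation; the function name, the threshold rule, and the choice of solver are assumptions for illustration, not the paper's implementation.

```python
import numpy as np

def reconstruct_uncertain_entries(q, uncertainty, threshold, rank, n_iters=200):
    """Erase entries whose uncertainty exceeds `threshold`, then refill them
    with a rank-`rank` approximation (iterative truncated-SVD imputation)."""
    q = np.asarray(q, dtype=float)
    trusted = np.asarray(uncertainty) <= threshold
    # Start erased entries at the mean of the trusted ones.
    filled = np.where(trusted, q, q[trusted].mean())
    for _ in range(n_iters):
        u, s, vt = np.linalg.svd(filled, full_matrices=False)
        low_rank = (u[:, :rank] * s[:rank]) @ vt[:rank]
        # Keep trusted entries fixed; update only the erased ones.
        filled = np.where(trusted, q, low_rank)
    return filled

# Rank-1 ground truth with one corrupted, highly uncertain entry.
true_q = np.outer([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0])
noisy_q = true_q.copy()
noisy_q[0, 0] = 50.0
uncertainty = np.zeros_like(true_q)
uncertainty[0, 0] = 1.0
recovered = reconstruct_uncertain_entries(noisy_q, uncertainty, threshold=0.5, rank=1)
```

Because the trusted entries already determine the rank-1 structure, the corrupted entry is pulled back toward its true value without being observed directly.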

Lifelong Reinforcement Learning with Temporal Logic Formulas and Reward Machines

Nov 18, 2021 · Xuejing Zheng, Chao Yu, Chen Chen, Jianye Hao, Hankz Hankui Zhuo

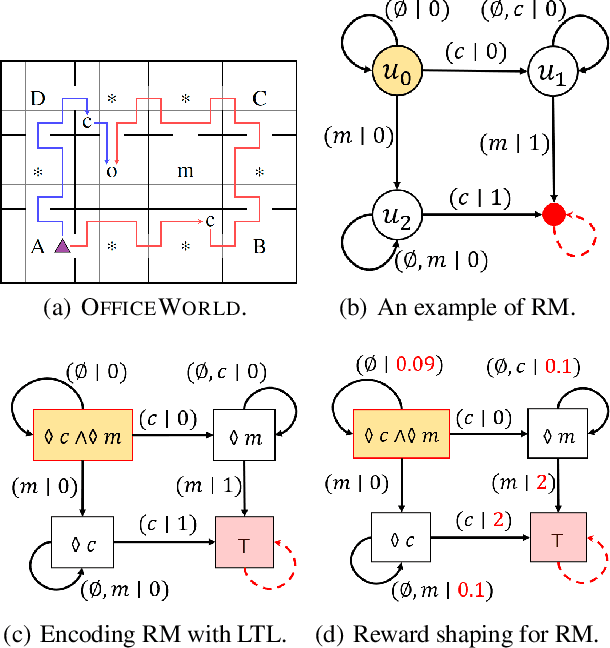

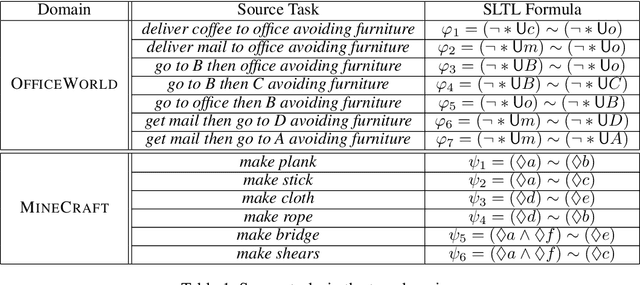

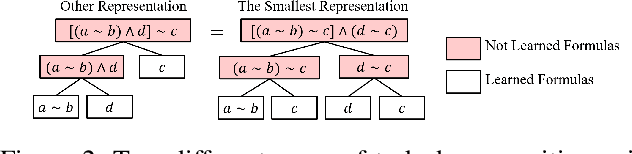

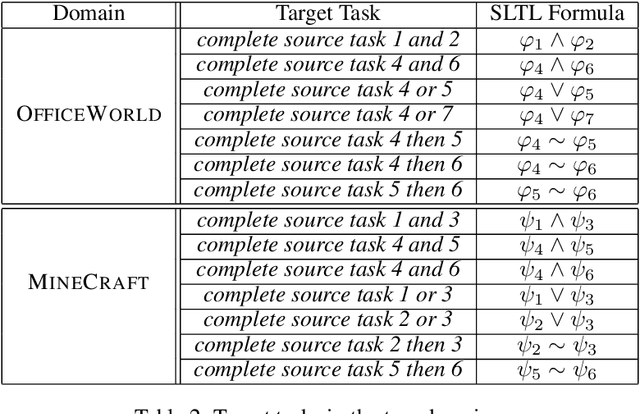

Continually learning new tasks using high-level ideas or knowledge is a key capability of humans. In this paper, we propose Lifelong reinforcement learning with Sequential linear temporal logic formulas and Reward Machines (LSRM), which enables an agent to leverage previously learned knowledge to speed up the learning of logically specified tasks. To allow more flexible task specification, we first introduce Sequential Linear Temporal Logic (SLTL), which supplements the existing Linear Temporal Logic (LTL) formal language. We then use Reward Machines (RM) to exploit structured reward functions for tasks encoded with high-level events, and propose automatic extension of RMs and efficient knowledge transfer across tasks for continual learning over the agent's lifetime. Experimental results show that LSRM outperforms methods that learn the target tasks from scratch, by taking advantage of task decomposition using SLTL and knowledge transfer over RMs during the lifelong learning process.
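A reward machine can be pictured as a small finite-state machine driven by high-level events. The sketch below is a generic illustration under that reading; the class, the event names `"a"` and `"b"`, and the example task ("observe `a`, then `b`") are hypothetical and not taken from the paper.

```python
class RewardMachine:
    """Finite-state machine that maps high-level events to rewards."""

    def __init__(self, initial, transitions, terminal):
        self.initial = initial
        self.transitions = transitions  # {(state, event): (next_state, reward)}
        self.terminal = set(terminal)
        self.state = initial

    def reset(self):
        self.state = self.initial

    def step(self, event):
        """Advance on `event`; unlisted events leave the state unchanged."""
        next_state, reward = self.transitions.get(
            (self.state, event), (self.state, 0.0)
        )
        self.state = next_state
        return reward, self.state in self.terminal

# Hypothetical sequential task: reward only when "b" follows "a".
machine = RewardMachine(
    initial="u0",
    transitions={("u0", "a"): ("u1", 0.0), ("u1", "b"): ("u2", 1.0)},
    terminal={"u2"},
)
trace = [machine.step(event) for event in ["b", "a", "b"]]
```

The first `"b"` is ignored because the machine has not yet seen `"a"`, which shows how the machine state encodes task progress that the raw environment state may not.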

SEIHAI: A Sample-efficient Hierarchical AI for the MineRL Competition

Nov 17, 2021 · Hangyu Mao, Chao Wang, Xiaotian Hao, Yihuan Mao, Yiming Lu, Chengjie Wu, Jianye Hao, Dong Li, Pingzhong Tang

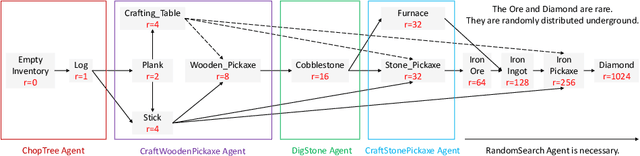

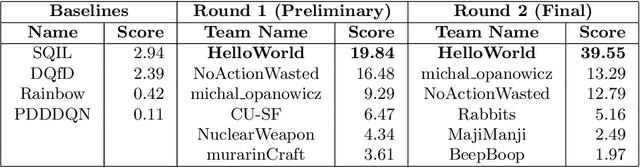

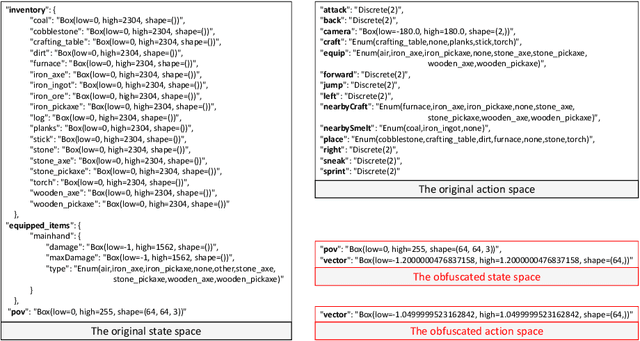

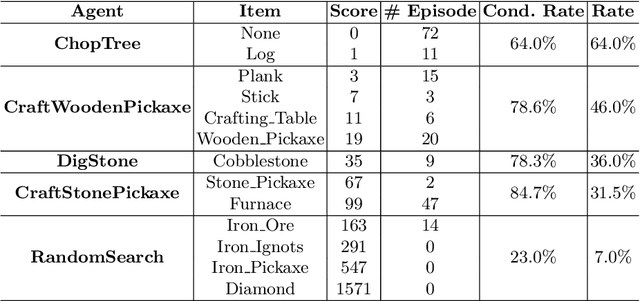

The MineRL competition is designed for the development of reinforcement learning and imitation learning algorithms that can efficiently leverage human demonstrations to drastically reduce the number of environment interactions needed to solve the complex *ObtainDiamond* task with sparse rewards. To address this challenge, we present **SEIHAI**, a **S**ample-**e**ff**i**cient **H**ierarchical **AI** that takes full advantage of the human demonstrations and the task structure. Specifically, we split the task into several sequentially dependent subtasks and train a suitable agent for each subtask using reinforcement learning and imitation learning. We further design a scheduler to automatically select the appropriate agent for each subtask. SEIHAI took first place in both the preliminary and final rounds of the NeurIPS-2020 MineRL competition.

Dynamic Bottleneck for Robust Self-Supervised Exploration

Oct 25, 2021 · Chenjia Bai, Lingxiao Wang, Lei Han, Animesh Garg, Jianye Hao, Peng Liu, Zhaoran Wang

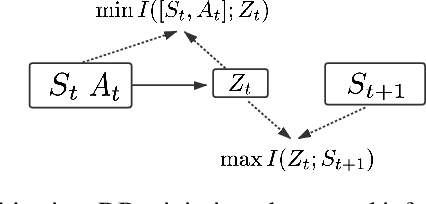

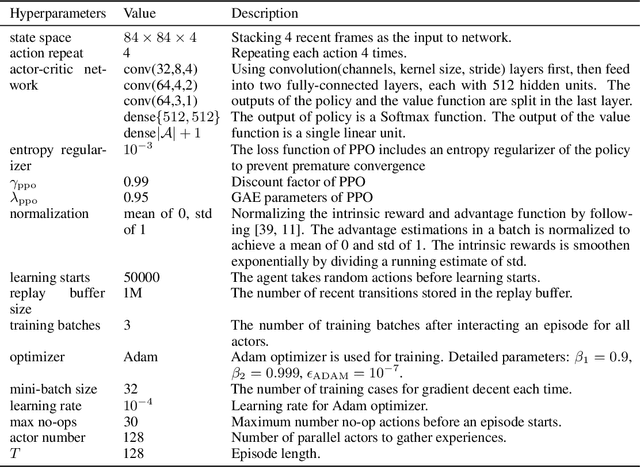

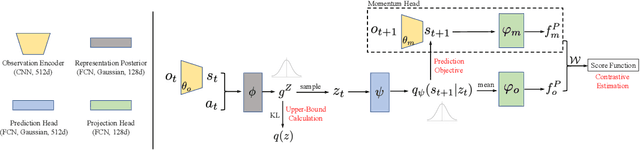

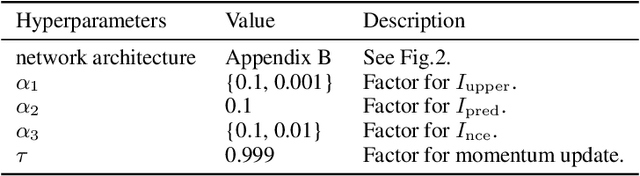

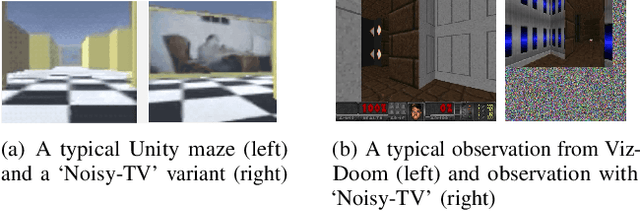

Exploration methods based on pseudo-counts of transitions or curiosity about dynamics have achieved promising results in solving reinforcement learning problems with sparse rewards. However, such methods are usually sensitive to information that is irrelevant to the environment dynamics, e.g., white noise. To handle such dynamics-irrelevant information, we propose a Dynamic Bottleneck (DB) model, which attains a dynamics-relevant representation based on the information-bottleneck principle. Based on the DB model, we further propose DB-bonus, which encourages the agent to explore state-action pairs with high information gain. We establish theoretical connections between the proposed DB-bonus, the upper confidence bound (UCB) in the linear case, and the visitation count in the tabular case. We evaluate the proposed method on the Atari suite with dynamics-irrelevant noise. Our experiments show that exploration with DB-bonus outperforms several state-of-the-art exploration methods in noisy environments.
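The link between a linear UCB bonus and a visitation count can be checked numerically. The sketch below uses the standard linear bonus $\sqrt{\phi^\top \Lambda^{-1} \phi}$ with $\Lambda = \lambda I + \sum_i \phi_i \phi_i^\top$, which is not the DB-bonus itself; with one-hot (tabular) features it reduces to $1/\sqrt{n + \lambda}$, where $n$ is the visit count. The function name and the toy visit history are assumptions for illustration.

```python
import numpy as np

def linear_ucb_bonus(phi, visited_features, reg=1.0):
    """Standard linear UCB bonus sqrt(phi^T Lambda^{-1} phi)."""
    dim = phi.shape[0]
    gram = reg * np.eye(dim)              # Lambda = reg * I + sum of outer products
    for feature in visited_features:
        gram += np.outer(feature, feature)
    return float(np.sqrt(phi @ np.linalg.solve(gram, phi)))

# Tabular case: three state-action pairs encoded as one-hot features.
one_hot = np.eye(3)
history = [one_hot[0], one_hot[0], one_hot[0], one_hot[1]]  # pair 0 seen 3x, pair 1 once

bonus_frequent = linear_ucb_bonus(one_hot[0], history)  # n = 3
bonus_unseen = linear_ucb_bonus(one_hot[2], history)    # n = 0
```

With `reg=1.0` the frequently visited pair gets bonus $1/\sqrt{3+1}$ and the unseen pair gets $1/\sqrt{0+1}$, so the bonus shrinks exactly as a count-based bonus would.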

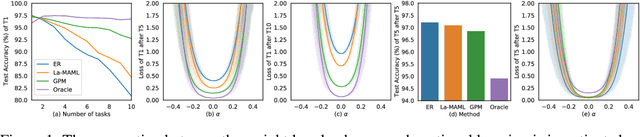

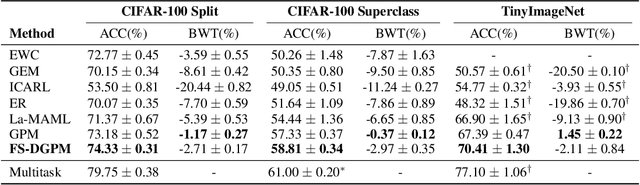

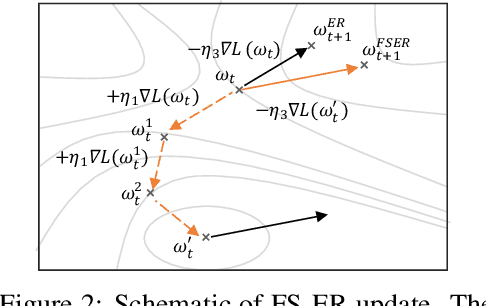

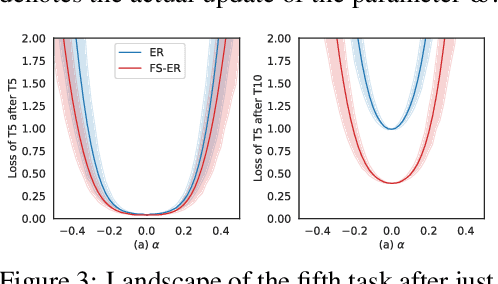

Flattening Sharpness for Dynamic Gradient Projection Memory Benefits Continual Learning

Oct 09, 2021 · Danruo Deng, Guangyong Chen, Jianye Hao, Qiong Wang, Pheng-Ann Heng

Networks trained with backpropagation are notably susceptible to catastrophic forgetting: they tend to forget previously learned skills upon learning new ones. To address this 'sensitivity-stability' dilemma, most previous efforts have focused on minimizing the empirical risk with different parameter regularization terms and episodic memory, but have rarely explored the use of the weight loss landscape. In this paper, we investigate the relationship between the weight loss landscape and sensitivity-stability in the continual learning scenario, based on which we propose a novel method, Flattening Sharpness for Dynamic Gradient Projection Memory (FS-DGPM). In particular, we introduce a soft weight to represent the importance of each basis representing past tasks in GPM. These weights are learned adaptively during training, so that less important bases can be dynamically released to improve sensitivity when learning new skills. We further introduce Flattening Sharpness (FS) to reduce the generalization gap by explicitly regulating the flatness of the weight loss landscape of all seen tasks. As demonstrated empirically, our proposed method consistently outperforms baselines, with a superior ability to learn new skills while effectively alleviating forgetting.
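One way to picture soft-weighted gradient projection is the following sketch. It assumes the past-task bases are orthonormal columns of a matrix and that each basis has an importance weight in $[0, 1]$; the update form $g - M \,\mathrm{diag}(\lambda)\, M^\top g$ and the function name are assumptions for illustration, not a verified reproduction of FS-DGPM.

```python
import numpy as np

def soft_project_gradient(grad, bases, weights):
    """Remove the weighted component of `grad` along each past-task basis.

    bases:   (dim, k) matrix with orthonormal columns (assumed)
    weights: (k,) importance per basis; 1 fully protects it, 0 releases it
    """
    coefficients = bases.T @ grad            # component of grad along each basis
    return grad - bases @ (weights * coefficients)

grad = np.array([1.0, 2.0, 3.0])
bases = np.eye(3)[:, :2]                     # protect the first two coordinate axes

fully_protected = soft_project_gradient(grad, bases, np.array([1.0, 1.0]))
partly_released = soft_project_gradient(grad, bases, np.array([1.0, 0.5]))
fully_released = soft_project_gradient(grad, bases, np.array([0.0, 0.0]))
```

With all weights at 1 this is the usual hard projection onto the orthogonal complement; lowering a weight lets part of the gradient through along that basis, which trades stability for sensitivity to the new task.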

Ranking Cost: Building An Efficient and Scalable Circuit Routing Planner with Evolution-Based Optimization

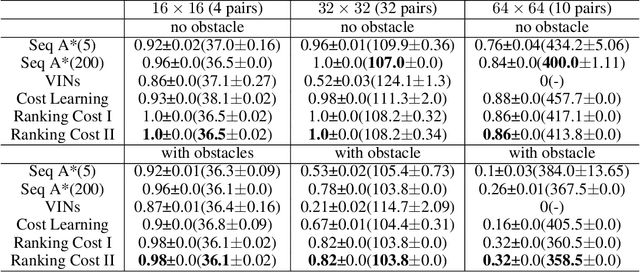

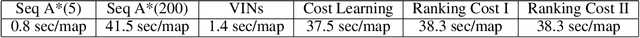

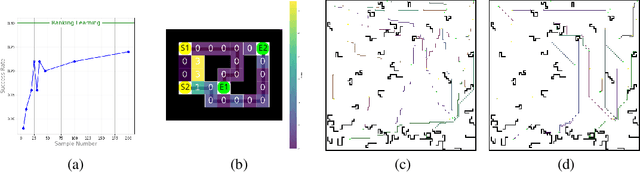

Oct 08, 2021 · Shiyu Huang, Bin Wang, Dong Li, Jianye Hao, Ting Chen, Jun Zhu

Circuit routing has historically been a challenging problem in designing electronic systems such as very large-scale integration (VLSI) and printed circuit boards (PCBs). The main challenge is that connecting a large number of electronic components under specific design rules involves a very large search space. Early solutions were typically designed with hard-coded heuristics, which suffer from non-optimal solutions and a lack of flexibility for new design needs. Although a few learning-based methods have been proposed recently, they are typically cumbersome and hard to extend to large-scale applications. In this work, we propose a new algorithm for circuit routing, named Ranking Cost, which combines search-based methods (i.e., the A* algorithm) and learning-based methods (i.e., Evolution Strategies) to form an efficient and trainable router. In our method, we introduce a new set of variables called cost maps, which help the A* router find suitable paths to achieve the global objective. We also train a ranking parameter, which produces the routing order and further improves the performance of our method. Our algorithm is trained in an end-to-end manner and does not use any artificial data or human demonstrations. In the experiments, we compare against the sequential A* algorithm and a canonical reinforcement learning approach, and the results show that our method outperforms these baselines with higher connectivity rates and better scalability.
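How a cost map can steer an A* router is shown in the sketch below. It assumes a 4-connected grid, a step cost of 1 plus the non-negative cost-map value of the cell being entered, and a Manhattan-distance heuristic; in the paper the cost maps are trained, whereas here the map is set by hand purely for illustration.

```python
import heapq

def astar_with_cost_map(cost_map, start, goal):
    """A* on a 4-connected grid; entering a cell costs 1 + cost_map[row][col]."""
    rows, cols = len(cost_map), len(cost_map[0])

    def heuristic(cell):
        # Manhattan distance is admissible because every step costs at least 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0.0, start)]
    best_cost = {start: 0.0}
    parent = {start: None}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale queue entry
        row, col = cell
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            new_cost = cost + 1.0 + cost_map[nxt[0]][nxt[1]]
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None

flat_map = [[0.0] * 3 for _ in range(3)]
penalized_map = [[0.0, 10.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
direct_path = astar_with_cost_map(flat_map, (0, 0), (0, 2))
detour_path = astar_with_cost_map(penalized_map, (0, 0), (0, 2))
```

Raising the cost of a single cell makes the router detour around it, which is the mechanism that lets one net leave room for others.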

Exploration in Deep Reinforcement Learning: A Comprehensive Survey

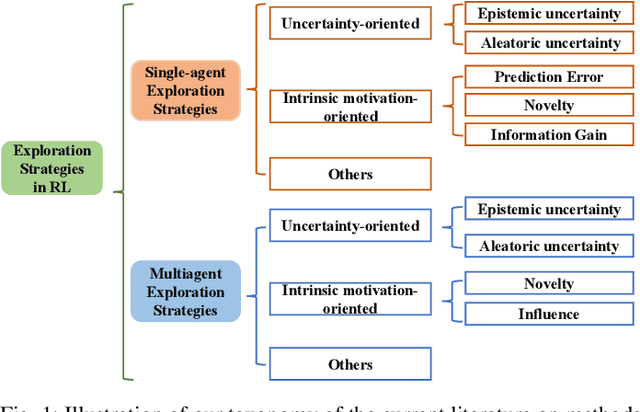

Sep 15, 2021 · Tianpei Yang, Hongyao Tang, Chenjia Bai, Jinyi Liu, Jianye Hao, Zhaopeng Meng, Peng Liu

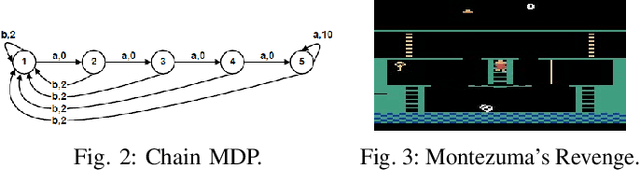

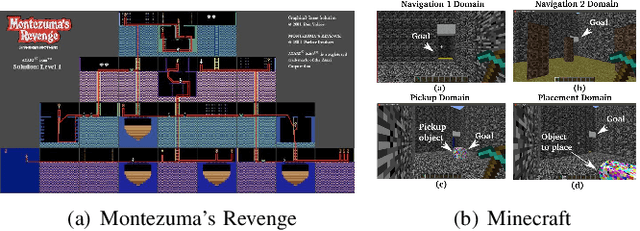

Deep Reinforcement Learning (DRL) and Deep Multi-agent Reinforcement Learning (MARL) have achieved significant success across a wide range of domains, such as game AI, autonomous vehicles, robotics, and finance. However, DRL and deep MARL agents are widely known to be sample-inefficient: millions of interactions are usually needed even for relatively simple game settings, which prevents wide application in real industrial scenarios. One key bottleneck is the well-known exploration problem, i.e., how to efficiently explore unknown environments and collect the informative experiences that benefit policy learning most. In this paper, we conduct a comprehensive survey of existing exploration methods in DRL and deep MARL, with the aim of providing understanding of and insight into the critical problems and solutions. We first identify several key challenges to achieving efficient exploration, which most exploration methods aim to address. We then provide a systematic survey of existing approaches by classifying them into two major categories: uncertainty-oriented exploration and intrinsic motivation-oriented exploration. The essence of uncertainty-oriented exploration is to leverage the quantification of epistemic and aleatoric uncertainty to derive efficient exploration. By contrast, intrinsic motivation-oriented exploration methods usually incorporate different kinds of reward-agnostic information as intrinsic exploration guidance. Beyond these two main branches, we also cover other exploration methods that adopt sophisticated techniques but are difficult to classify into either category. In addition, we provide a comprehensive empirical comparison of exploration methods for DRL on a set of commonly used benchmarks. Finally, we summarize the open problems of exploration in DRL and deep MARL and point out a few future directions.

HyAR: Addressing Discrete-Continuous Action Reinforcement Learning via Hybrid Action Representation

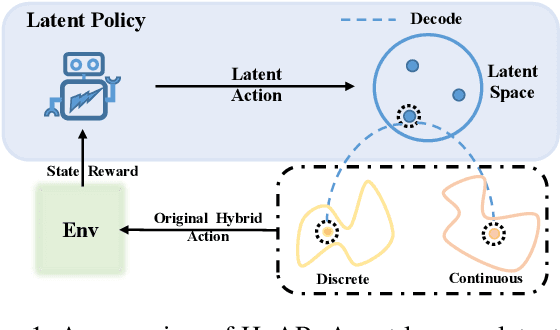

Sep 12, 2021 · Boyan Li, Hongyao Tang, Yan Zheng, Jianye Hao, Pengyi Li, Zhen Wang, Zhaopeng Meng, Li Wang

A discrete-continuous hybrid action space is a natural setting in many practical problems, such as robot control and game AI. However, most previous Reinforcement Learning (RL) works only demonstrate success in control with either a discrete or a continuous action space, and seldom take the hybrid action space into account. One naive way to address hybrid action RL is to convert the hybrid action space into a unified homogeneous action space by discretization or continualization, so that conventional RL algorithms can be applied. However, this ignores the underlying structure of the hybrid action space and also induces scalability issues and additional approximation difficulties, leading to degraded results. In this paper, we propose Hybrid Action Representation (HyAR) to learn a compact and decodable latent representation space for the original hybrid action space. HyAR constructs the latent space and embeds the dependence between the discrete action and the continuous parameter via an embedding table and a conditional Variational Auto-Encoder (VAE). To further improve effectiveness, the action representation is trained to be semantically smooth through unsupervised environmental dynamics prediction. Finally, the agent learns its policy with conventional DRL algorithms in the learned representation space and interacts with the environment by decoding the hybrid action embeddings into the original action space. We evaluate HyAR in a variety of environments with discrete-continuous action spaces. The results demonstrate the superiority of HyAR over previous baselines, especially for high-dimensional action spaces.
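Decoding a latent action back into a hybrid action might look like the sketch below. It assumes the discrete action is recovered by a nearest-neighbor lookup in the embedding table and that the continuous parameter comes from a decoder conditioned on the chosen embedding; the single linear layer with `tanh` stands in for a trained conditional VAE decoder, and all names and shapes are hypothetical.

```python
import numpy as np

def decode_hybrid_action(latent_discrete, latent_continuous, embedding_table, decoder_weights):
    """Map a latent action to (discrete action index, continuous parameters).

    embedding_table: (num_actions, embed_dim), one row per discrete action
    decoder_weights: (param_dim, latent_dim + embed_dim), hypothetical linear decoder
    """
    # Nearest embedding row picks the discrete action.
    distances = np.linalg.norm(embedding_table - latent_discrete, axis=1)
    action_index = int(np.argmin(distances))
    # Continuous parameters are decoded conditioned on that embedding.
    decoder_input = np.concatenate([latent_continuous, embedding_table[action_index]])
    parameters = np.tanh(decoder_weights @ decoder_input)
    return action_index, parameters

embedding_table = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]])
decoder_weights = np.array([[1.0, 0.0, 0.0]])  # toy decoder that reads only the latent
action_index, parameters = decode_hybrid_action(
    np.array([0.9, 0.8]), np.array([0.5]), embedding_table, decoder_weights
)
```

Conditioning the decoder on the selected embedding is what lets the same continuous latent mean different parameters for different discrete actions.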
