Yichang Zhang

additional authors not shown

Qwen Technical Report

Sep 28, 2023

Abstract:Large language models (LLMs) have revolutionized the field of artificial intelligence, enabling natural language processing tasks that were previously thought to be exclusive to humans. In this work, we introduce Qwen, the first installment of our large language model series. Qwen is a comprehensive language model series that encompasses distinct models with varying parameter counts. It includes Qwen, the base pretrained language models, and Qwen-Chat, the chat models finetuned with human alignment techniques. The base language models consistently demonstrate superior performance across a multitude of downstream tasks, and the chat models, particularly those trained using Reinforcement Learning from Human Feedback (RLHF), are highly competitive. The chat models possess advanced tool-use and planning capabilities for creating agent applications, showcasing impressive performance even when compared to bigger models on complex tasks like utilizing a code interpreter. Furthermore, we have developed coding-specialized models, Code-Qwen and Code-Qwen-Chat, as well as mathematics-focused models, Math-Qwen-Chat, which are built upon base language models. These models demonstrate significantly improved performance in comparison with open-source models, and slightly fall behind the proprietary models.

Transferring General Multimodal Pretrained Models to Text Recognition

Dec 19, 2022

Abstract:This paper proposes a new method, OFA-OCR, to transfer multimodal pretrained models to text recognition. Specifically, we recast text recognition as image captioning and directly transfer a unified vision-language pretrained model to the end task. Without pretraining on large-scale annotated or synthetic text recognition data, OFA-OCR outperforms the baselines and achieves state-of-the-art performance in the Chinese text recognition benchmark. Additionally, we construct an OCR pipeline with OFA-OCR, and we demonstrate that it can achieve competitive performance with the product-level API. The code (https://github.com/OFA-Sys/OFA) and demo (https://modelscope.cn/studios/damo/ofa_ocr_pipeline/summary) are publicly available.

OFASys: A Multi-Modal Multi-Task Learning System for Building Generalist Models

Dec 08, 2022

Abstract:Generalist models, which are capable of performing diverse multi-modal tasks in a task-agnostic way within a single model, have been explored recently. Being, hopefully, an alternative to approaching general-purpose AI, existing generalist models are still at an early stage, where modality and task coverage is limited. To empower multi-modal task-scaling and speed up this line of research, we release a generalist model learning system, OFASys, built on top of a declarative task interface named multi-modal instruction. At the core of OFASys is the idea of decoupling multi-modal task representations from the underlying model implementations. In OFASys, a task involving multiple modalities can be defined declaratively even with just a single line of code. The system automatically generates task plans from such instructions for training and inference. It also facilitates multi-task training for diverse multi-modal workloads. As a starting point, we provide presets of 7 different modalities and 23 highly-diverse example tasks in OFASys, with which we also develop a first-in-kind, single model, OFA+, that can handle text, image, speech, video, and motion data. The single OFA+ model achieves 95% performance in average with only 16% parameters of 15 task-finetuned models, showcasing the performance reliability of multi-modal task-scaling provided by OFASys. Available at https://github.com/OFA-Sys/OFASys

Chinese CLIP: Contrastive Vision-Language Pretraining in Chinese

Nov 03, 2022

Abstract:The tremendous success of CLIP (Radford et al., 2021) has promoted the research and application of contrastive learning for vision-language pretraining. In this work, we construct a large-scale dataset of image-text pairs in Chinese, where most data are retrieved from publicly available datasets, and we pretrain Chinese CLIP models on the new dataset. We develop 5 Chinese CLIP models of multiple sizes, spanning from 77 to 958 million parameters. Furthermore, we propose a two-stage pretraining method, where the model is first trained with the image encoder frozen and then trained with all parameters being optimized, to achieve enhanced model performance. Our comprehensive experiments demonstrate that Chinese CLIP can achieve the state-of-the-art performance on MUGE, Flickr30K-CN, and COCO-CN in the setups of zero-shot learning and finetuning, and it is able to achieve competitive performance in zero-shot image classification based on the evaluation on the ELEVATER benchmark (Li et al., 2022). We have released our codes, models, and demos in https://github.com/OFA-Sys/Chinese-CLIP
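
As an illustration of the contrastive learning mentioned in the abstract, here is a minimal sketch of a symmetric image-text contrastive loss in the style of CLIP. It assumes the standard CLIP objective; the abstract does not spell out the loss, and the function names, toy embeddings, and fixed temperature of 0.07 are assumptions made for this example. The two-stage schedule (image encoder frozen, then all parameters trained) is not modeled here.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def log_softmax(row):
    """Numerically stable log-softmax over a list of scores."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric cross-entropy over an image-text similarity matrix.

    Row k of image_embs is assumed to be paired with row k of text_embs.
    """
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = scaled cosine similarity between image i and text j
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts]
        for img in imgs
    ]
    # Image-to-text direction: each row should peak on the diagonal.
    i2t = -sum(log_softmax(logits[k])[k] for k in range(n)) / n
    # Text-to-image direction: each column should peak on the diagonal.
    columns = [[logits[r][c] for r in range(n)] for c in range(n)]
    t2i = -sum(log_softmax(columns[k])[k] for k in range(n)) / n
    return (i2t + t2i) / 2
```

Calling `contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])` gives a loss close to zero because every pair is perfectly aligned, while swapping the two text rows gives a much larger loss.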

Sketch and Refine: Towards Faithful and Informative Table-to-Text Generation

May 31, 2021

Abstract:Table-to-text generation refers to generating a descriptive text from a key-value table. Traditional autoregressive methods, though they can generate text with high fluency, suffer from low coverage and poor faithfulness. To mitigate these problems, we propose a novel Skeleton-based two-stage method that combines both Autoregressive and Non-Autoregressive generations (SANA). Our approach includes: (1) skeleton generation with an autoregressive pointer network to select key tokens from the source table; (2) an edit-based non-autoregressive generation model to produce texts via iterative insertion and deletion operations. By integrating hard constraints from the skeleton, the non-autoregressive model improves the generation's coverage over the source table and thus enhances its faithfulness. We conduct automatic and human evaluations on both WikiPerson and WikiBio datasets. Experimental results demonstrate that our method outperforms the previous state-of-the-art methods in both automatic and human evaluation, especially on coverage and faithfulness. In particular, we achieve PARENT-T recall of 99.47 in WikiPerson, improving over the existing best results by more than 10 points.

M6: A Chinese Multimodal Pretrainer

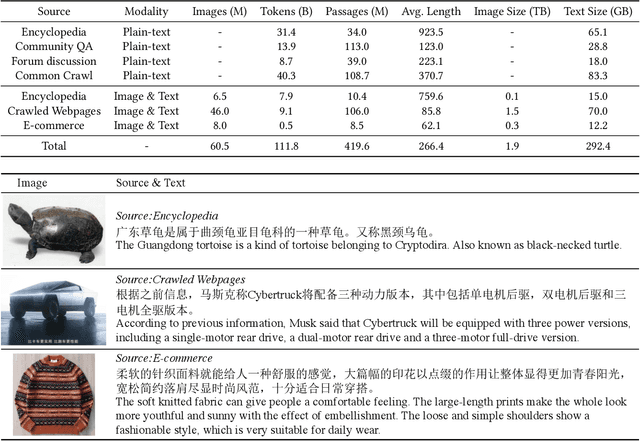

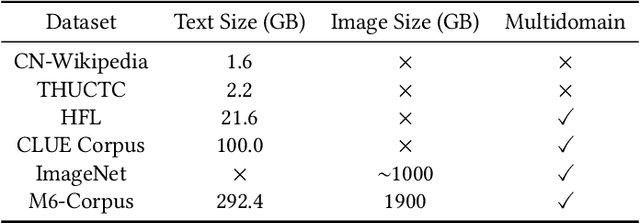

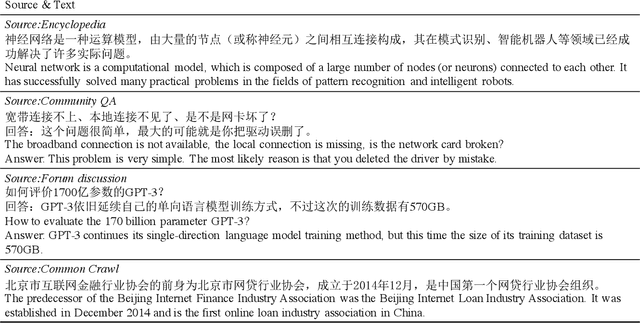

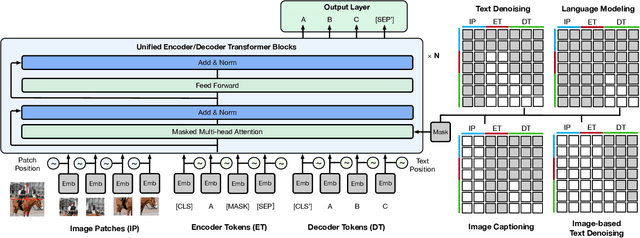

Mar 02, 2021

Abstract:In this work, we construct the largest dataset for multimodal pretraining in Chinese, which consists of over 1.9TB images and 292GB texts that cover a wide range of domains. We propose a cross-modal pretraining method called M6, referring to Multi-Modality to Multi-Modality Multitask Mega-transformer, for unified pretraining on the data of single modality and multiple modalities. We scale the model size up to 10 billion and 100 billion parameters, and build the largest pretrained model in Chinese. We apply the model to a series of downstream applications, and demonstrate its outstanding performance in comparison with strong baselines. Furthermore, we specifically design a downstream task of text-guided image generation, and show that the finetuned M6 can create high-quality images with high resolution and abundant details.

Meta-KD: A Meta Knowledge Distillation Framework for Language Model Compression across Domains

Dec 02, 2020

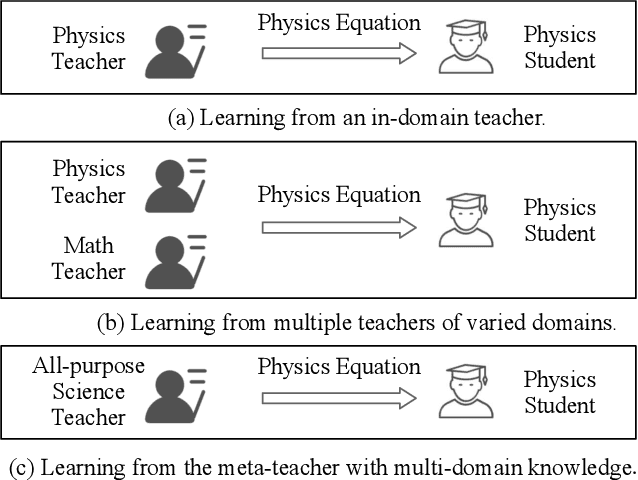

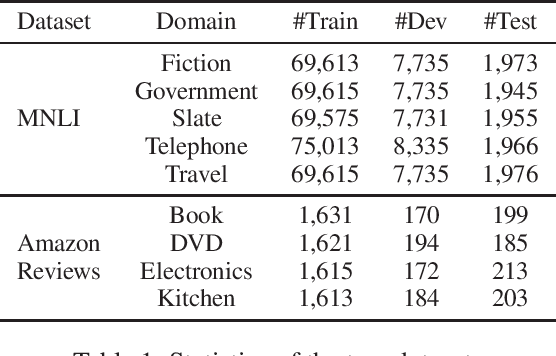

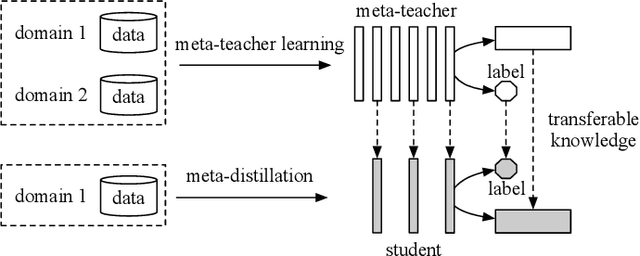

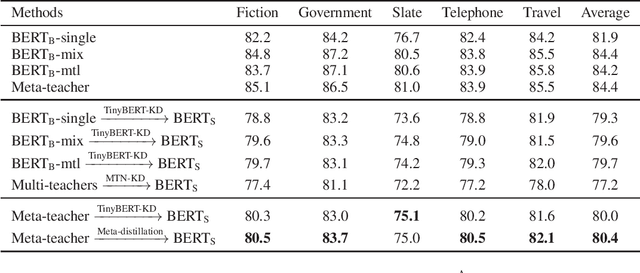

Abstract:Pre-trained language models have been applied to various NLP tasks with considerable performance gains. However, the large model sizes, together with the long inference time, limit the deployment of such models in real-time applications. Typical approaches consider knowledge distillation to distill large teacher models into small student models. However, most of these studies focus on single-domain only, which ignores the transferable knowledge from other domains. We argue that training a teacher with transferable knowledge digested across domains can achieve better generalization capability to help knowledge distillation. To this end, we propose a Meta-Knowledge Distillation (Meta-KD) framework to build a meta-teacher model that captures transferable knowledge across domains inspired by meta-learning and use it to pass knowledge to students. Specifically, we first leverage a cross-domain learning process to train the meta-teacher on multiple domains, and then propose a meta-distillation algorithm to learn single-domain student models with guidance from the meta-teacher. Experiments on two public multi-domain NLP tasks show the effectiveness and superiority of the proposed Meta-KD framework. We also demonstrate the capability of Meta-KD in both few-shot and zero-shot learning settings.
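
To make the teacher-to-student transfer concrete, here is a minimal sketch of a generic soft-target distillation loss (temperature-scaled KL divergence between teacher and student predictions). This is the classic knowledge distillation formulation, not the meta-distillation algorithm proposed in the paper, which the abstract does not detail; the function names and the temperature of 2.0 are assumptions for this example.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, computed in a numerically stable way."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions.

    The T^2 factor keeps gradient magnitudes comparable across
    temperatures, following the usual distillation convention.
    """
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    kl = sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0
    )
    return kl * temperature ** 2
```

The loss is zero when the student reproduces the teacher's logits exactly and grows as the two distributions diverge.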

Graph-based Multi-hop Reasoning for Long Text Generation

Sep 28, 2020

Abstract:Long text generation is an important but challenging task. The main problem lies in learning sentence-level semantic dependencies which traditional generative models often suffer from. To address this problem, we propose a Multi-hop Reasoning Generation (MRG) approach that incorporates multi-hop reasoning over a knowledge graph to learn semantic dependencies among sentences. MRG consists of two parts, a graph-based multi-hop reasoning module and a path-aware sentence realization module. The reasoning module is responsible for searching skeleton paths from a knowledge graph to imitate the imagination process in the human writing for semantic transfer. Based on the inferred paths, the sentence realization module then generates a complete sentence. Unlike previous black-box models, MRG explicitly infers the skeleton path, which provides explanatory views to understand how the proposed model works. We conduct experiments on three representative tasks, including story generation, review generation, and product description generation. Automatic and manual evaluation show that our proposed method can generate more informative and coherent long text than strong baselines, such as pre-trained models (e.g. GPT-2) and knowledge-enhanced models.
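
To illustrate what a multi-hop skeleton path over a knowledge graph looks like, here is a minimal sketch that enumerates all paths of up to a given number of hops from a start concept. The reasoning module described in the abstract is a learned component; this breadth-first enumeration only shows the candidate space such a module would search. The graph contents and the function name are hypothetical.

```python
from collections import deque


def multi_hop_paths(graph, start, max_hops):
    """Enumerate cycle-free paths of 1..max_hops edges starting at `start`.

    `graph` maps each node to a list of neighboring nodes.
    """
    paths = []
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        if len(path) - 1 == max_hops:
            continue  # hop budget used up, do not extend further
        for neighbor in graph.get(path[-1], []):
            if neighbor in path:
                continue  # skip cycles
            extended = path + [neighbor]
            paths.append(extended)
            queue.append(extended)
    return paths


# Toy knowledge graph (hypothetical concepts).
toy_graph = {"beach": ["sea", "sand"], "sea": ["wave"], "sand": []}
print(multi_hop_paths(toy_graph, "beach", 2))
# [['beach', 'sea'], ['beach', 'sand'], ['beach', 'sea', 'wave']]
```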

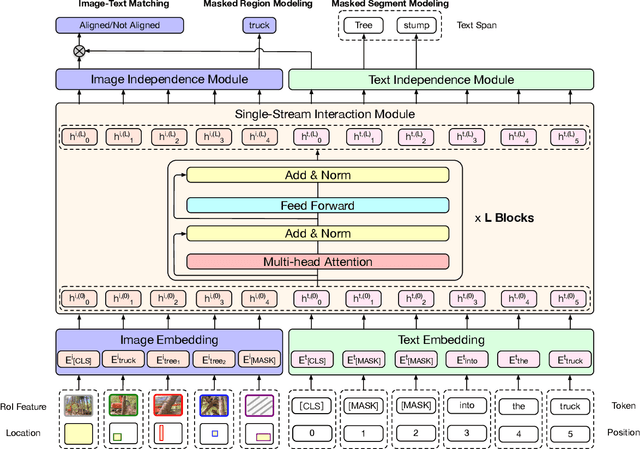

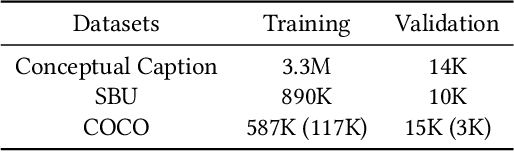

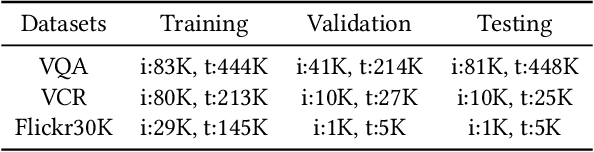

InterBERT: Vision-and-Language Interaction for Multi-modal Pretraining

Mar 30, 2020

Abstract:Multi-modal pretraining for learning high-level multi-modal representation is a further step towards deep learning and artificial intelligence. In this work, we propose a novel model, namely InterBERT (BERT for Interaction), which owns strong capability of modeling interaction between the information flows of different modalities. The single-stream interaction module is capable of effectively processing information of multiple modalities, and the two-stream module on top preserves the independence of each modality to avoid performance downgrade in single-modal tasks. We pretrain the model with three pretraining tasks, including masked segment modeling (MSM), masked region modeling (MRM) and image-text matching (ITM); and finetune the model on a series of vision-and-language downstream tasks. Experimental results demonstrate that InterBERT outperforms a series of strong baselines, including the most recent multi-modal pretraining methods, and the analysis shows that MSM and MRM are effective for pretraining and our method can achieve performances comparable to BERT in single-modal tasks. Besides, we propose a large-scale dataset for multi-modal pretraining in Chinese, and we develop the Chinese InterBERT which is the first Chinese multi-modal pretrained model. We pretrain the Chinese InterBERT on our proposed dataset of 3.1M image-text pairs from the mobile Taobao, the largest Chinese e-commerce platform. We finetune the model for text-based image retrieval, and recently we deployed the model online for topic-based recommendation.

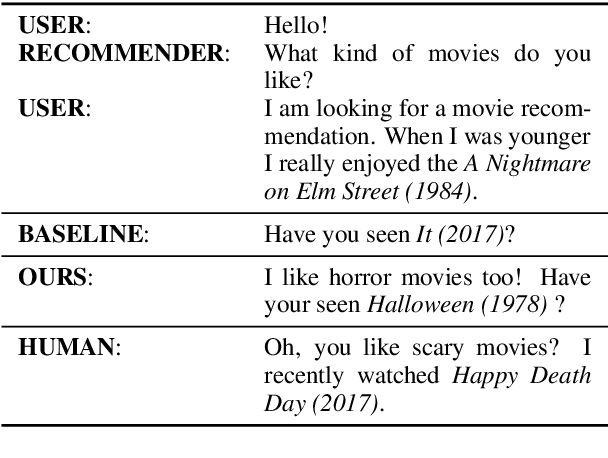

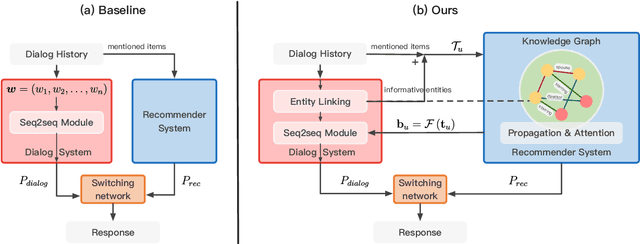

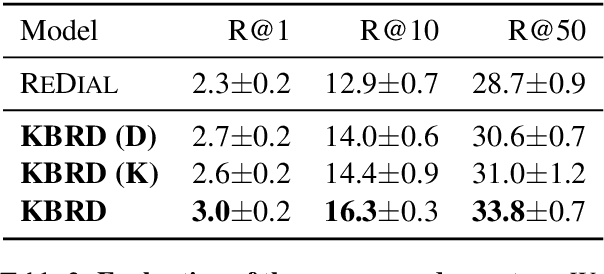

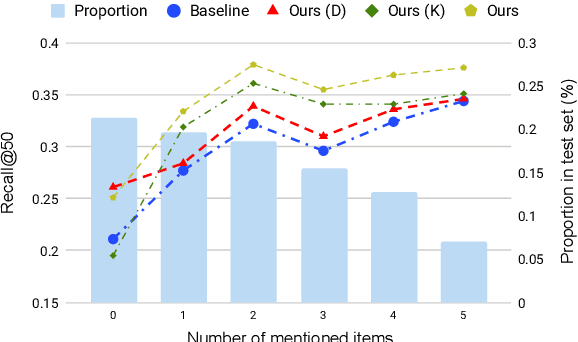

Towards Knowledge-Based Recommender Dialog System

Sep 03, 2019

Abstract:In this paper, we propose a novel end-to-end framework called KBRD, which stands for Knowledge-Based Recommender Dialog System. It integrates the recommender system and the dialog generation system. The dialog system can enhance the performance of the recommendation system by introducing knowledge-grounded information about users' preferences, and the recommender system can improve that of the dialog generation system by providing recommendation-aware vocabulary bias. Experimental results demonstrate that our proposed model has significant advantages over the baselines in both the evaluation of dialog generation and recommendation. A series of analyses show that the two systems can bring mutual benefits to each other, and the introduced knowledge contributes to both their performances.
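
The "recommendation-aware vocabulary bias" can be pictured as a per-token offset added to the dialog decoder's logits before the softmax. The sketch below shows only that additive step; how the bias is computed from the recommender is not described in the abstract, so the bias values, token layout, and function names here are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def biased_token_probs(decoder_logits, vocab_bias):
    """Add a recommendation-derived bias to each token's logit, then normalize."""
    adjusted = [logit + bias for logit, bias in zip(decoder_logits, vocab_bias)]
    return softmax(adjusted)


# Three-token toy vocabulary; the bias favors token 1 (an assumed example).
base = softmax([1.0, 1.0, 1.0])
biased = biased_token_probs([1.0, 1.0, 1.0], [0.0, 2.0, 0.0])
```

With equal decoder logits, the unbiased distribution is uniform, and the positive bias on token 1 shifts probability mass toward it while the output remains a valid distribution.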
