Planning Immediate Landmarks of Targets for Model-Free Skill Transfer across Agents

Dec 18, 2022 · Minghuan Liu, Zhengbang Zhu, Menghui Zhu, Yuzheng Zhuang, Weinan Zhang, Jianye Hao

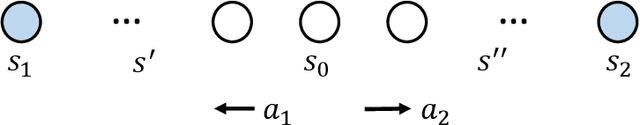

In reinforcement learning applications such as robotics, agents often have to handle different input/output features because their developers or physical constraints give them different state/action spaces. This leads to unnecessary re-training from scratch and considerable sample inefficiency, especially when agents follow similar solution steps to achieve tasks. In this paper, we aim to transfer similar high-level goal-transition knowledge to alleviate the challenge. Specifically, we propose PILoT, i.e., Planning Immediate Landmarks of Targets. PILoT utilizes universal decoupled policy optimization to learn a goal-conditioned state planner, then distills a goal planner that plans immediate landmarks in a model-free style and can be shared among different agents. In our experiments, we show the power of PILoT on various transfer challenges, including few-shot transfer across action spaces and dynamics, from low-dimensional vector states to image inputs, and from a simple robot to a complicated morphology; we also illustrate a zero-shot transfer solution from a simple 2D navigation task to the harder Ant-Maze task.

State-Aware Proximal Pessimistic Algorithms for Offline Reinforcement Learning

Nov 28, 2022 · Chen Chen, Hongyao Tang, Yi Ma, Chao Wang, Qianli Shen, Dong Li, Jianye Hao

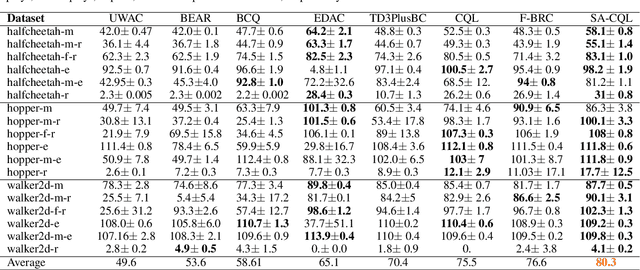

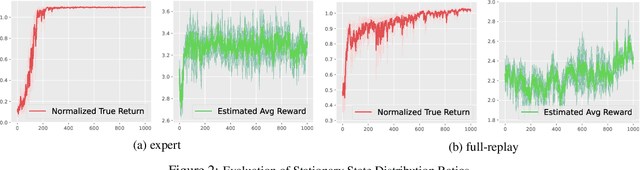

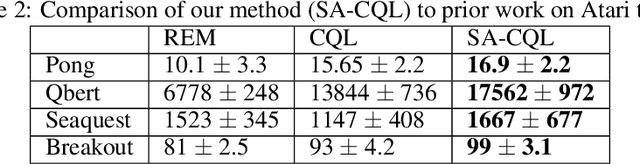

Pessimism is of great importance in offline reinforcement learning (RL). One broad category of offline RL algorithms achieves pessimism through explicit or implicit behavior regularization. However, most of them consider only policy divergence as behavior regularization and ignore how the offline state distribution differs from that of the learning policy, which may lead to under-pessimism for some states and over-pessimism for others. To address this problem, we propose a principled algorithmic framework for offline RL, called *State-Aware Proximal Pessimism* (SA-PP). The key idea of SA-PP is to leverage discounted stationary state distribution ratios between the learning policy and the offline dataset to modulate the degree of behavior regularization in a state-wise manner, so that pessimism can be implemented more appropriately. We first provide theoretical justification for the superiority of SA-PP over previous algorithms, demonstrating that SA-PP produces a lower suboptimality upper bound in a broad range of settings. Furthermore, we propose a new algorithm named *State-Aware Conservative Q-Learning* (SA-CQL), which builds SA-PP upon the representative CQL algorithm and uses DualDICE to estimate the discounted stationary state distribution ratios. Extensive experiments on standard offline RL benchmarks show that SA-CQL outperforms the popular baselines on a large portion of benchmarks and attains the highest average return.
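As a rough illustration of state-wise modulated regularization, the sketch below weights a CQL-style penalty by a per-state ratio. It is not the authors' implementation: the function names, the use of the clipped ratio directly as the weight, and the discrete-action setting are all assumptions made for illustration, and the ratios are taken as given inputs rather than estimated with DualDICE.

```python
import math

def cql_penalty(q_values, data_action):
    """CQL-style penalty for one state: logsumexp_a Q(s, a) - Q(s, a_data)."""
    m = max(q_values)
    logsumexp = m + math.log(sum(math.exp(q - m) for q in q_values))
    return logsumexp - q_values[data_action]

def state_aware_penalty(batch, ratios, clip=10.0):
    """Average penalty where each state's term is scaled by its ratio.

    batch  : list of (q_values, data_action) pairs, one per sampled state
    ratios : assumed per-state estimates of the state distribution ratio
             between the learning policy and the dataset (hypothetical input)
    clip   : assumed upper bound to keep large ratios from dominating
    """
    total = 0.0
    for (q_values, data_action), ratio in zip(batch, ratios):
        weight = min(max(ratio, 0.0), clip)  # assumption: weight = clipped ratio
        total += weight * cql_penalty(q_values, data_action)
    return total / len(batch)
```

With all ratios equal to 1 this reduces to the unweighted average penalty; larger ratios increase the regularization for states the learning policy visits more often than the dataset does, under the weighting assumed here.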

Prototypical context-aware dynamics generalization for high-dimensional model-based reinforcement learning

Nov 23, 2022 · Junjie Wang, Yao Mu, Dong Li, Qichao Zhang, Dongbin Zhao, Yuzheng Zhuang, Ping Luo, Bin Wang, Jianye Hao

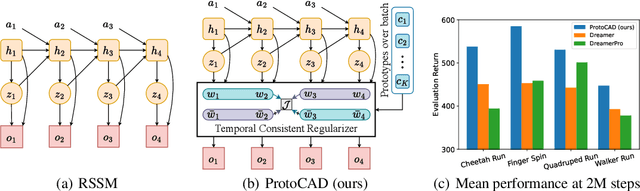

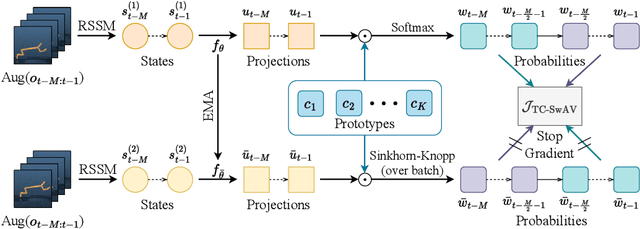

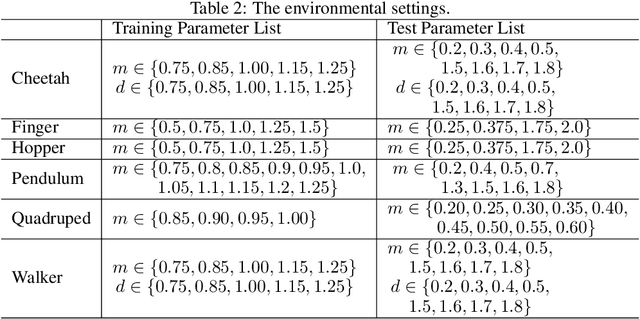

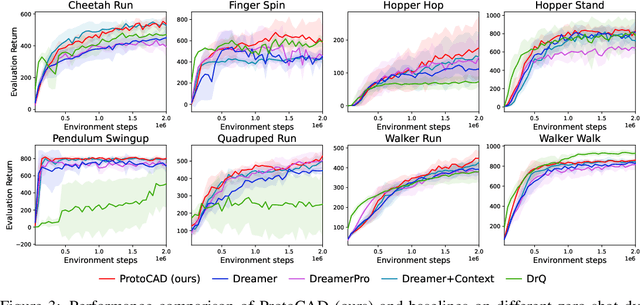

The latent world model provides a promising way to learn policies in a compact latent space for tasks with high-dimensional observations; however, its generalization across diverse environments with unseen dynamics remains challenging. Although the recurrent structure used in recent work helps to capture local dynamics, modeling only state transitions without an explicit understanding of environmental context limits the generalization ability of the dynamics model. To address this issue, we propose a Prototypical Context-Aware Dynamics (ProtoCAD) model, which captures the local dynamics through a time-consistent latent context and enables dynamics generalization in high-dimensional control tasks. ProtoCAD extracts useful contextual information with the help of prototypes clustered over the batch and benefits model-based RL in two ways: 1) it uses a temporally consistent prototypical regularizer that encourages the prototype assignments produced for different time segments of the same latent trajectory to be temporally consistent, instead of comparing the features; 2) it uses a context representation that combines the projection embedding of latent states with aggregated prototypes and significantly improves dynamics generalization. Extensive experiments show that ProtoCAD surpasses existing methods in terms of dynamics generalization. Compared with the recurrent-based model RSSM, ProtoCAD delivers 13.2% and 26.7% better mean and median performance across all dynamics generalization tasks.

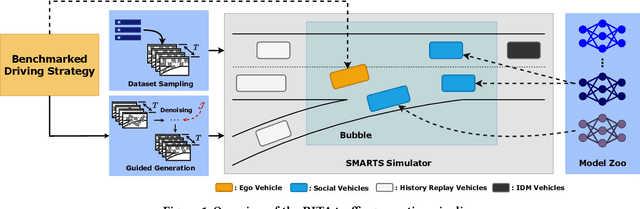

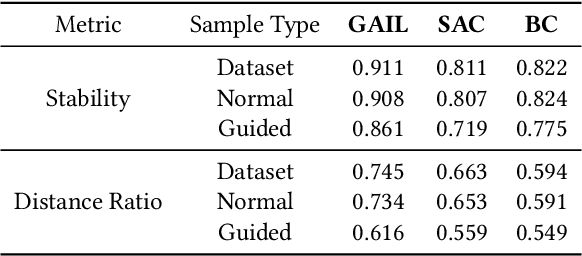

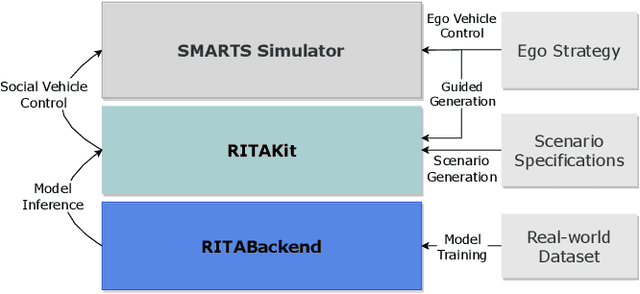

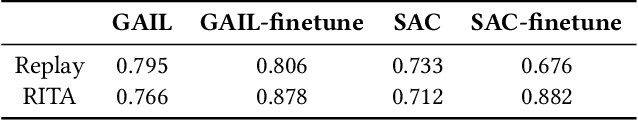

RITA: Boost Autonomous Driving Simulators with Realistic Interactive Traffic Flow

Nov 11, 2022 · Zhengbang Zhu, Shenyu Zhang, Yuzheng Zhuang, Yuecheng Liu, Minghuan Liu, Liyuan Mao, Ziqing Gong, Weinan Zhang, Shixiong Kai, Qiang Gu, Bin Wang, Siyuan Cheng, Xinyu Wang, Jianye Hao, Yong Yu

High-quality traffic flow generation is the core module in building simulators for autonomous driving. However, the majority of available simulators are incapable of replicating traffic patterns that accurately reflect the various features of real-world data while also simulating human-like reactive responses to the tested autopilot driving strategies. As a step toward addressing this problem, we propose Realistic Interactive TrAffic flow (RITA) as an integrated component of existing driving simulators to provide high-quality traffic flow for the evaluation and optimization of the tested driving strategies. RITA is developed with fidelity, diversity, and controllability in mind, and consists of two core modules called RITABackend and RITAKit. RITABackend is built to support vehicle-wise control and provide traffic generation models from real-world datasets, while RITAKit is developed with easy-to-use interfaces for controllable traffic generation via RITABackend. We demonstrate RITA's capacity to create diversified and high-fidelity traffic simulations in several highly interactive highway scenarios. The experimental findings show that the generated RITA traffic flows meet all three design goals, hence enhancing the completeness of driving strategy evaluation. Moreover, we showcase the possibility of further improving baseline strategies through online fine-tuning with RITA traffic flows.

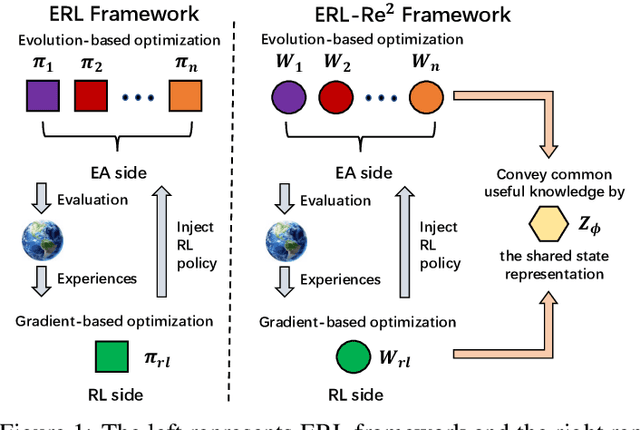

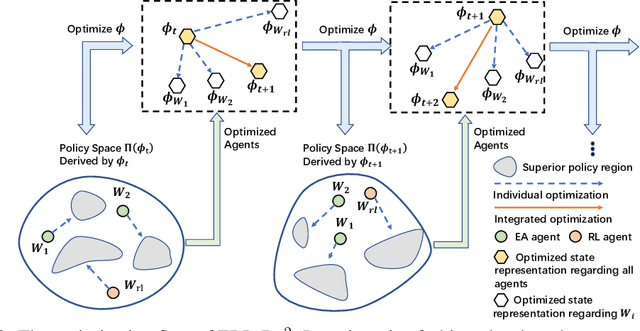

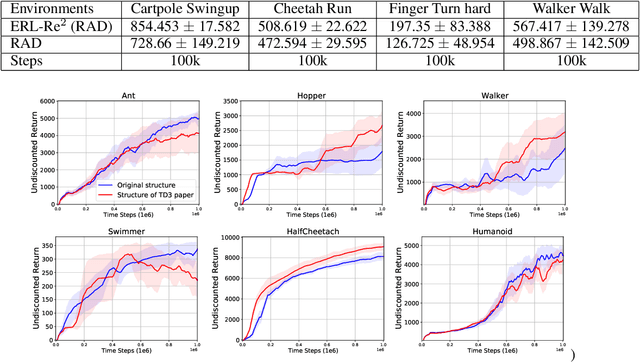

ERL-Re$^2$: Efficient Evolutionary Reinforcement Learning with Shared State Representation and Individual Policy Representation

Oct 26, 2022 · Pengyi Li, Hongyao Tang, Jianye Hao, Yan Zheng, Xian Fu, Zhaopeng Meng

Deep Reinforcement Learning (Deep RL) and Evolutionary Algorithms (EA) are two major paradigms of policy optimization with distinct learning principles, i.e., gradient-based vs. gradient-free. An appealing research direction is integrating Deep RL and EA to devise new methods by fusing their complementary advantages. However, existing works on combining Deep RL and EA have two common drawbacks: 1) the RL agent and EA agents learn their policies individually, neglecting efficient sharing of useful common knowledge; 2) parameter-level policy optimization guarantees no semantic level of behavior evolution for the EA side. In this paper, we propose Evolutionary Reinforcement Learning with Two-scale State Representation and Policy Representation (ERL-Re2), a novel solution to these two drawbacks. The key idea of ERL-Re2 is two-scale representation: all EA and RL policies share the same nonlinear state representation while maintaining individual linear policy representations. The state representation conveys expressive common features of the environment learned by all the agents collectively; the linear policy representation provides a favorable space for efficient policy optimization, where novel behavior-level crossover and mutation operations can be performed. Moreover, the linear policy representation allows convenient generalization of policy fitness with the help of a Policy-extended Value Function Approximator (PeVFA), further improving the sample efficiency of fitness estimation. Experiments on a range of continuous control tasks show that ERL-Re2 consistently outperforms strong baselines and achieves significant improvement over both its Deep RL and EA components.
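The two-scale idea can be sketched in a few lines: one shared nonlinear feature map, plus one small linear weight matrix per policy on which evolutionary operators act. Everything below is an illustrative assumption rather than the paper's code: the single `tanh` layer stands in for the shared state representation, and the crossover and mutation shown (inheriting or perturbing whole action-dimension rows) are one plausible reading of "behavior-level" operators.

```python
import math
import random

def shared_features(state, rep_weights):
    """Shared nonlinear state representation (assumed: one tanh layer)."""
    return [math.tanh(sum(w * s for w, s in zip(row, state))) for row in rep_weights]

def act(policy_weights, features):
    """Individual linear policy: one weight row per action dimension."""
    return [sum(w * f for w, f in zip(row, features)) for row in policy_weights]

def crossover(parent_a, parent_b, rng):
    """Assumed behavior-level crossover: each action dimension (row) of the
    child is inherited whole from one of the two parents."""
    return [list(rng.choice((row_a, row_b))) for row_a, row_b in zip(parent_a, parent_b)]

def mutate(policy_weights, rng, sigma=0.1, dim_prob=0.5):
    """Assumed behavior-level mutation: perturb all weights of the selected
    action dimensions with Gaussian noise and copy the others unchanged."""
    child = []
    for row in policy_weights:
        if rng.random() < dim_prob:
            child.append([w + rng.gauss(0.0, sigma) for w in row])
        else:
            child.append(list(row))
    return child
```

Because every policy reads the same features, a child produced by this crossover behaves, in each action dimension, exactly like one of its parents, which is the sense in which the operator works on behavior rather than on arbitrary parameters.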

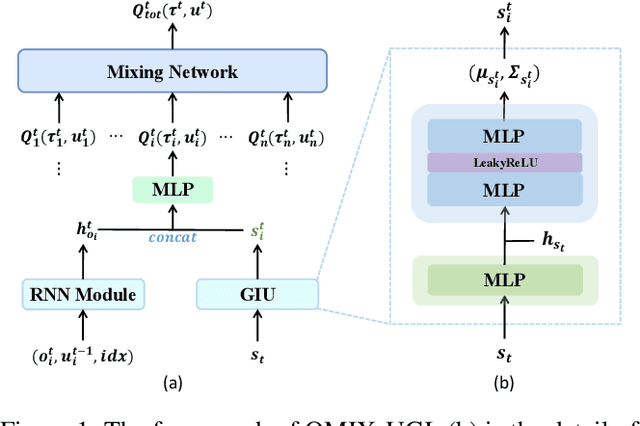

PTDE: Personalized Training with Distillated Execution for Multi-Agent Reinforcement Learning

Oct 17, 2022 · Yiqun Chen, Hangyu Mao, Tianle Zhang, Shiguang Wu, Bin Zhang, Jianye Hao, Dong Li, Bin Wang, Hongxing Chang

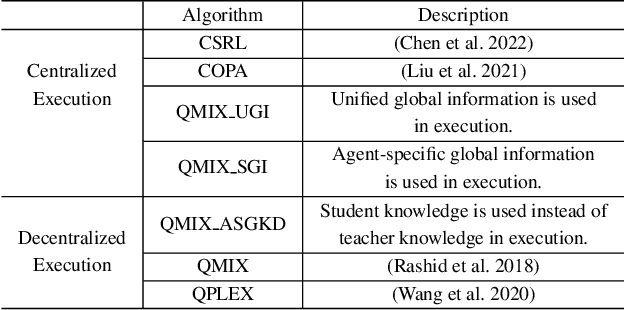

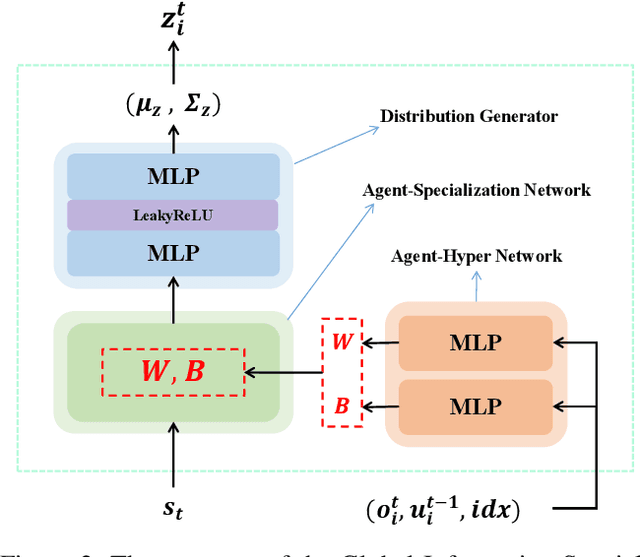

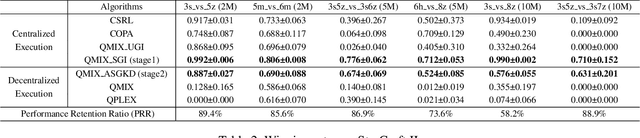

Centralized Training with Decentralized Execution (CTDE) has been a very popular paradigm for multi-agent reinforcement learning. One of its main features is making full use of global information to learn a better joint $Q$-function or centralized critic. In this paper, we instead explore how to leverage global information to directly learn a better individual $Q$-function or individual actor. We find that applying the same global information to all agents indiscriminately is not enough for good performance, and thus propose to tailor the global information to each agent, obtaining agent-specific global information that yields better performance. Furthermore, we distill this agent-specific global information into the agent's local information, which is used during decentralized execution without much performance degradation. We call this new paradigm Personalized Training with Distillated Execution (PTDE). PTDE can be easily combined with many state-of-the-art algorithms to further improve their performance, which is verified in both SMAC and Google Research Football scenarios.

GFlowCausal: Generative Flow Networks for Causal Discovery

Oct 15, 2022 · Wenqian Li, Yinchuan Li, Shengyu Zhu, Yunfeng Shao, Jianye Hao, Yan Pang

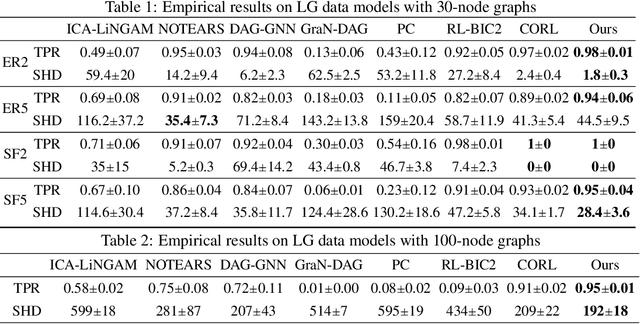

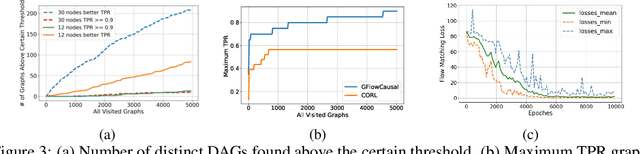

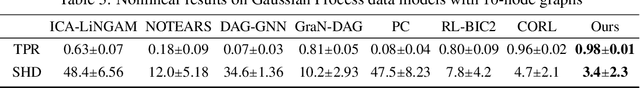

Causal discovery aims to uncover causal structure among a set of variables. Score-based approaches mainly focus on searching for the best Directed Acyclic Graph (DAG) based on a predefined score function. However, most of them are not applicable on a large scale due to their limited searchability. Inspired by the active learning in generative flow networks, we propose a novel approach to learning a DAG from observational data called GFlowCausal. It converts the graph search problem into a generation problem, in which directed edges are added gradually. GFlowCausal aims to learn the best policy to generate high-reward DAGs by sequential actions with probabilities proportional to predefined rewards. We propose a plug-and-play module based on transitive closure to ensure efficient sampling. Theoretical analysis shows that this module guarantees acyclicity effectively, as well as consistency between final states and fully-connected graphs. We conduct extensive experiments on both synthetic and real datasets, and the results show that the proposed approach is superior and also performs well in a large-scale setting.
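The role of transitive closure in keeping edge-by-edge generation acyclic can be illustrated with a small, generic sketch. It is not taken from the paper: the function names and the boolean-matrix layout are assumptions, and it shows only the standard bookkeeping (an edge u → v is allowed exactly when v cannot already reach u), not the GFlowNet policy or reward.

```python
def new_graph(n):
    """Return (adj, reach) for an empty graph on n nodes.

    adj[u][v]   : True when the edge u -> v has been added
    reach[a][b] : True when b is reachable from a (every node reaches itself)
    """
    adj = [[False] * n for _ in range(n)]
    reach = [[a == b for b in range(n)] for a in range(n)]
    return adj, reach

def valid_edges(adj, reach):
    """Edges that can still be added without creating a cycle."""
    n = len(adj)
    return [(u, v) for u in range(n) for v in range(n)
            if not adj[u][v] and not reach[v][u]]

def add_edge(adj, reach, u, v):
    """Add u -> v and update the transitive closure incrementally."""
    if adj[u][v] or reach[v][u]:
        raise ValueError(f"edge {u}->{v} is a duplicate or would create a cycle")
    adj[u][v] = True
    n = len(adj)
    # Everything that reaches u now also reaches everything reachable from v.
    for a in range(n):
        if reach[a][u]:
            for b in range(n):
                if reach[v][b]:
                    reach[a][b] = True
```

For example, after adding 0 → 1 and 1 → 2 on three nodes, `valid_edges` returns only `(0, 2)`; once that edge is added the list is empty, so this ordering of the three nodes ends in a fully connected DAG. The mask is a lookup per candidate edge, so no cycle check over the whole graph is needed at sampling time.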

Decomposed Mutual Information Optimization for Generalized Context in Meta-Reinforcement Learning

Oct 09, 2022 · Yao Mu, Yuzheng Zhuang, Fei Ni, Bin Wang, Jianyu Chen, Jianye Hao, Ping Luo

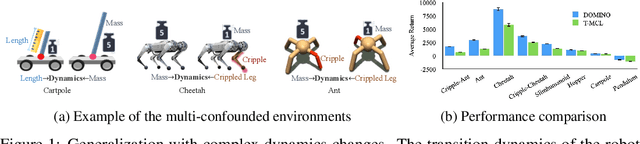

Adapting to changes in transition dynamics is essential in robotic applications. By learning a conditional policy with a compact context, context-aware meta-reinforcement learning provides a flexible way to adjust behavior according to dynamics changes. However, in real-world applications, the agent may encounter complex dynamics changes. Multiple confounders can influence the transition dynamics, making it challenging to infer an accurate context for decision-making. This paper addresses this challenge with Decomposed Mutual INformation Optimization (DOMINO) for context learning, which explicitly learns a disentangled context to maximize the mutual information between the context and historical trajectories while minimizing the state transition prediction error. Our theoretical analysis shows that, by learning a disentangled context, DOMINO can overcome the underestimation of mutual information caused by multiple confounders and reduce the number of samples that must be collected in various environments. Extensive experiments show that the context learned by DOMINO benefits both model-based and model-free reinforcement learning algorithms for dynamics generalization in terms of sample efficiency and performance in unseen environments.

EUCLID: Towards Efficient Unsupervised Reinforcement Learning with Multi-choice Dynamics Model

Oct 02, 2022 · Yifu Yuan, Jianye Hao, Fei Ni, Yao Mu, Yan Zheng, Yujing Hu, Jinyi Liu, Yingfeng Chen, Changjie Fan

Unsupervised reinforcement learning (URL) is a promising paradigm for learning useful behaviors in a task-agnostic environment without the guidance of extrinsic rewards, so as to facilitate fast adaptation to various downstream tasks. Previous works focused on pre-training in a model-free manner and lacked a study of transition dynamics modeling, which leaves a large space for improving sample efficiency in downstream tasks. To this end, we propose an Efficient Unsupervised Reinforcement Learning Framework with Multi-choice Dynamics model (EUCLID), which introduces a novel model-fused paradigm to jointly pre-train the dynamics model and the unsupervised exploration policy in the pre-training phase, thus better leveraging the environmental samples and improving the downstream task sampling efficiency. However, constructing a generalizable model that captures the local dynamics under different behaviors remains a challenging problem. We introduce the multi-choice dynamics model, which covers different local dynamics under different behaviors concurrently: it uses different heads to learn the state transition under different behaviors during unsupervised pre-training and selects the most appropriate head for prediction in the downstream task. Experimental results in the manipulation and locomotion domains demonstrate that EUCLID achieves state-of-the-art performance with high sample efficiency, basically solving the state-based URLB benchmark and reaching a mean normalized score of 104.0 ± 1.2% in downstream tasks with 100k fine-tuning steps, which is equivalent to DDPG's performance at 2M interactive steps with 20x more data.
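The head-selection step of a multi-choice dynamics model can be pictured as follows. This is a minimal sketch under stated assumptions, not EUCLID's procedure: the abstract does not give the selection criterion, so the lowest mean squared one-step prediction error on a handful of downstream transitions is used here as a stand-in, and `select_head` and its arguments are hypothetical names.

```python
def select_head(heads, transitions):
    """Pick the prediction head that best explains downstream transitions.

    heads       : list of callables (state, action) -> predicted next state
    transitions : list of (state, action, next_state) tuples from the
                  downstream task; states are lists of floats
    Returns (best_index, errors), where errors[i] is the mean squared
    one-step prediction error of head i (assumed selection criterion).
    """
    def mean_squared_error(head):
        total = 0.0
        for state, action, next_state in transitions:
            predicted = head(state, action)
            total += sum((p - t) ** 2 for p, t in zip(predicted, next_state))
        return total / len(transitions)

    errors = [mean_squared_error(head) for head in heads]
    best_index = min(range(len(heads)), key=errors.__getitem__)
    return best_index, errors
```

In this toy form each head is just a function; in a learned model the heads would share a backbone and differ only in their final layers, and only the selected head would be used for prediction during fine-tuning.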
