Zeyu Cui

additional authors not shown

Enabling Large Language Models to Perform Power System Simulations with Previously Unseen Tools: A Case of Daline

Jun 26, 2024

Abstract: The integration of experiment technologies with large language models (LLMs) is transforming scientific research, extending AI from specialized problem-solving to serving as a research assistant for human scientists. In power systems, simulations are essential for research. However, LLMs face significant challenges in power system simulations due to limited pre-existing knowledge and the complexity of power grids. To address this issue, this work proposes a modular framework that integrates expertise from both the power system and LLM domains. This framework enhances LLMs' ability to perform power system simulations on previously unseen tools. Validated using 34 simulation tasks in Daline, an (optimal) power flow simulation and linearization toolbox not yet exposed to LLMs, the proposed framework improved GPT-4o's simulation coding accuracy from 0% to 96.07%, also outperforming the ChatGPT-4o web interface's 33.8% accuracy (with the entire knowledge base uploaded). These results highlight the potential of LLMs as research assistants in power systems.

Qwen Technical Report

Sep 28, 2023

Abstract: Large language models (LLMs) have revolutionized the field of artificial intelligence, enabling natural language processing tasks that were previously thought to be exclusive to humans. In this work, we introduce Qwen, the first installment of our large language model series. Qwen is a comprehensive language model series that encompasses distinct models with varying parameter counts. It includes Qwen, the base pretrained language models, and Qwen-Chat, the chat models finetuned with human alignment techniques. The base language models consistently demonstrate superior performance across a multitude of downstream tasks, and the chat models, particularly those trained using Reinforcement Learning from Human Feedback (RLHF), are highly competitive. The chat models possess advanced tool-use and planning capabilities for creating agent applications, showcasing impressive performance even when compared to bigger models on complex tasks like utilizing a code interpreter. Furthermore, we have developed coding-specialized models, Code-Qwen and Code-Qwen-Chat, as well as mathematics-focused models, Math-Qwen-Chat, which are built upon base language models. These models demonstrate significantly improved performance in comparison with open-source models, and slightly fall behind the proprietary models.

OFASys: A Multi-Modal Multi-Task Learning System for Building Generalist Models

Dec 08, 2022

Abstract: Generalist models, which are capable of performing diverse multi-modal tasks in a task-agnostic way within a single model, have been explored recently. Although they are a hopeful alternative route to general-purpose AI, existing generalist models are still at an early stage, where modality and task coverage is limited. To empower multi-modal task-scaling and speed up this line of research, we release a generalist model learning system, OFASys, built on top of a declarative task interface named multi-modal instruction. At the core of OFASys is the idea of decoupling multi-modal task representations from the underlying model implementations. In OFASys, a task involving multiple modalities can be defined declaratively even with just a single line of code. The system automatically generates task plans from such instructions for training and inference. It also facilitates multi-task training for diverse multi-modal workloads. As a starting point, we provide presets of 7 different modalities and 23 highly diverse example tasks in OFASys, with which we also develop a first-of-its-kind single model, OFA+, that can handle text, image, speech, video, and motion data. The single OFA+ model achieves 95% performance on average with only 16% of the parameters of 15 task-finetuned models, showcasing the performance reliability of multi-modal task-scaling provided by OFASys. Available at https://github.com/OFA-Sys/OFASys

MMSpeech: Multi-modal Multi-task Encoder-Decoder Pre-training for Speech Recognition

Nov 29, 2022

Abstract: In this paper, we propose a novel multi-modal multi-task encoder-decoder pre-training framework (MMSpeech) for Mandarin automatic speech recognition (ASR), which employs both unlabeled speech and text data. The main difficulty in speech-text joint pre-training comes from the significant difference between speech and text modalities, especially for Mandarin speech and text. Unlike English and other languages with an alphabetic writing system, Mandarin uses an ideographic writing system where character and sound are not tightly mapped to one another. Therefore, we propose to introduce the phoneme modality into pre-training, which can help capture modality-invariant information between Mandarin speech and text. Specifically, we employ a multi-task learning framework including five self-supervised and supervised tasks with speech and text data. For end-to-end pre-training, we introduce self-supervised speech-to-pseudo-codes (S2C) and phoneme-to-text (P2T) tasks utilizing unlabeled speech and text data, where speech-pseudo-codes pairs and phoneme-text pairs are a supplement to the supervised speech-text pairs. To train the encoder to learn better speech representation, we introduce self-supervised masked speech prediction (MSP) and supervised phoneme prediction (PP) tasks to learn to map speech into phonemes. Besides, we directly add the downstream supervised speech-to-text (S2T) task into the pre-training process, which can further improve the pre-training performance and achieve better recognition results even without fine-tuning. Experiments on AISHELL-1 show that our proposed method achieves state-of-the-art performance, with a more than 40% relative improvement compared with other pre-training methods.

Contextual Expressive Text-to-Speech

Nov 26, 2022

Abstract: The goal of expressive text-to-speech (TTS) is to synthesize natural speech with desired content, prosody, emotion, or timbre, with high expressiveness. Most previous studies attempt to generate speech from given labels of styles and emotions, which over-simplifies the problem by classifying styles and emotions into a fixed number of pre-defined categories. In this paper, we introduce a new task setting, Contextual TTS (CTTS). The main idea of CTTS is that how a person speaks depends on the particular context she is in, where the context can typically be represented as text. Thus, in the CTTS task, we propose to utilize such context to guide the speech synthesis process instead of relying on explicit labels of styles and emotions. To achieve this task, we construct a synthetic dataset and develop an effective framework. Experiments show that our framework can generate high-quality expressive speech based on the given context both in synthetic datasets and real-world scenarios.

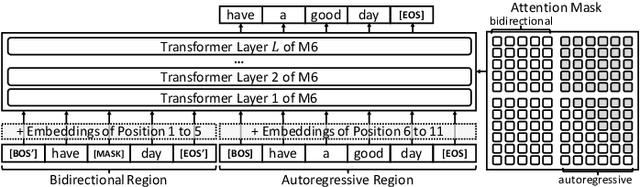

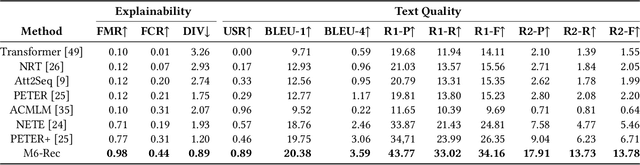

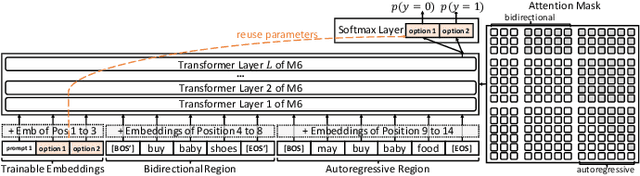

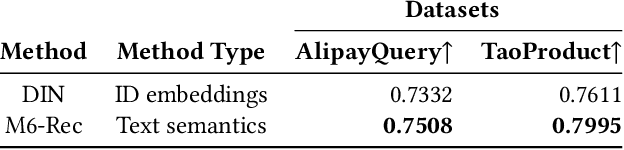

M6-Rec: Generative Pretrained Language Models are Open-Ended Recommender Systems

May 19, 2022

Abstract: Industrial recommender systems have been growing increasingly complex, may involve diverse domains such as e-commerce products and user-generated content, and can comprise a myriad of tasks such as retrieval, ranking, explanation generation, and even AI-assisted content production. The mainstream approach so far is to develop individual algorithms for each domain and each task. In this paper, we explore the possibility of developing a unified foundation model to support open-ended domains and tasks in an industrial recommender system, which may reduce the demand on downstream settings' data and can minimize the carbon footprint by avoiding training a separate model from scratch for every task. Deriving a unified foundation is challenging due to (i) the potentially unlimited set of downstream domains and tasks, and (ii) the real-world systems' emphasis on computational efficiency. We thus build our foundation upon M6, an existing large-scale industrial pretrained language model similar to GPT-3 and T5, and leverage M6's pretrained ability for sample-efficient downstream adaptation, by representing user behavior data as plain text and converting the tasks to either language understanding or generation. To deal with a tight hardware budget, we propose an improved version of prompt tuning that outperforms fine-tuning with a negligible 1% of task-specific parameters, and employ techniques such as late interaction, early exiting, parameter sharing, and pruning to further reduce the inference time and the model size. We demonstrate the foundation model's versatility on a wide range of tasks such as retrieval, ranking, zero-shot recommendation, explanation generation, personalized content creation, and conversational recommendation, and manage to deploy it on both cloud servers and mobile devices.

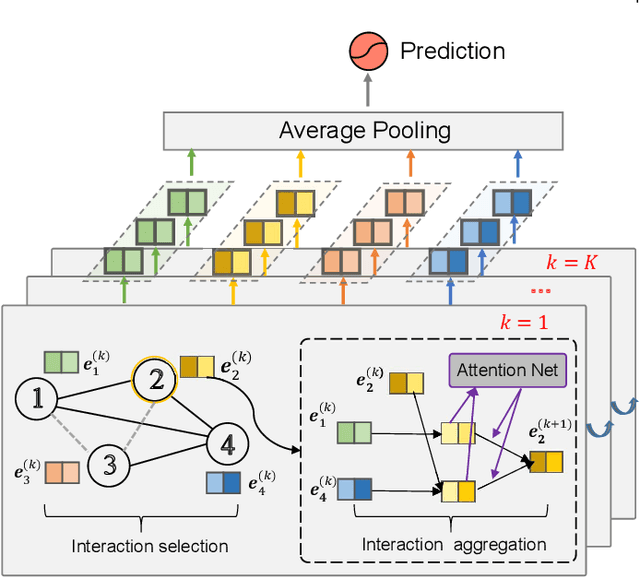

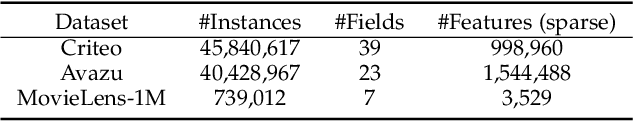

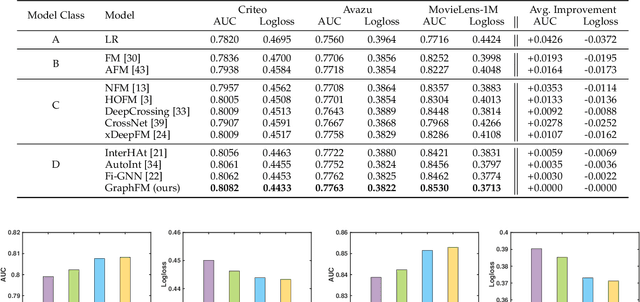

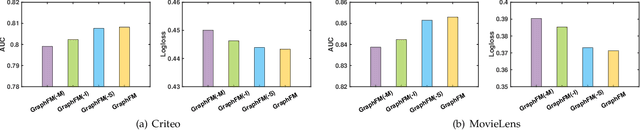

GraphFM: Graph Factorization Machines for Feature Interaction Modeling

May 25, 2021

Abstract: Factorization machine (FM) is a prevalent approach to modeling pairwise (second-order) feature interactions when dealing with high-dimensional sparse data. However, FM fails to capture higher-order feature interactions because of combinatorial expansion, and taking into account the interaction between every pair of features may introduce noise and degrade prediction accuracy. To solve these problems, we propose a novel approach, Graph Factorization Machine (GraphFM), which naturally represents features in a graph structure. In particular, a novel mechanism is designed to select the beneficial feature interactions and formulate them as edges between features. Our proposed model, which integrates the interaction function of FM into the feature aggregation strategy of Graph Neural Network (GNN), can then model arbitrary-order feature interactions on the graph-structured features by stacking layers. Experimental results on several real-world datasets have demonstrated the rationality and effectiveness of our proposed approach.
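
For readers unfamiliar with the baseline mentioned in this abstract, the pairwise term of a standard second-order FM can be sketched as follows. This is the textbook FM scoring function only, not the GraphFM model, and every name in it (`fm_score_naive`, `fm_score_fast`, `w0`, `w`, `V`) is an illustrative assumption rather than something taken from the paper.

```python
# Textbook second-order factorization machine score (NOT GraphFM).
# x: feature values, w0: bias, w: linear weights,
# V: one k-dimensional latent vector per feature (hypothetical names).

def fm_score_naive(x, w0, w, V):
    """Sum every pairwise interaction <V[i], V[j]> * x[i] * x[j] explicitly."""
    n = len(x)
    score = w0 + sum(w[i] * x[i] for i in range(n))
    for i in range(n):
        for j in range(i + 1, n):
            dot = sum(a * b for a, b in zip(V[i], V[j]))
            score += dot * x[i] * x[j]
    return score


def fm_score_fast(x, w0, w, V):
    """Same score in O(n*k), using the identity
    sum_{i<j} <v_i, v_j> x_i x_j
      = 0.5 * sum_f [(sum_i v_if x_i)^2 - sum_i (v_if x_i)^2]."""
    n, k = len(x), len(V[0])
    score = w0 + sum(w[i] * x[i] for i in range(n))
    for f in range(k):
        s = sum(V[i][f] * x[i] for i in range(n))
        s_sq = sum((V[i][f] * x[i]) ** 2 for i in range(n))
        score += 0.5 * (s * s - s_sq)
    return score


if __name__ == "__main__":
    x = [1.0, 0.0, 2.0]                       # sparse input: feature 1 is inactive
    w0, w = 0.5, [0.1, 0.2, 0.3]
    V = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
    print(fm_score_naive(x, w0, w, V), fm_score_fast(x, w0, w, V))
```

Both functions consider every pair of features, which is the behavior the abstract identifies as a possible source of noise; the edge-selection mechanism that GraphFM proposes is not sketched here.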

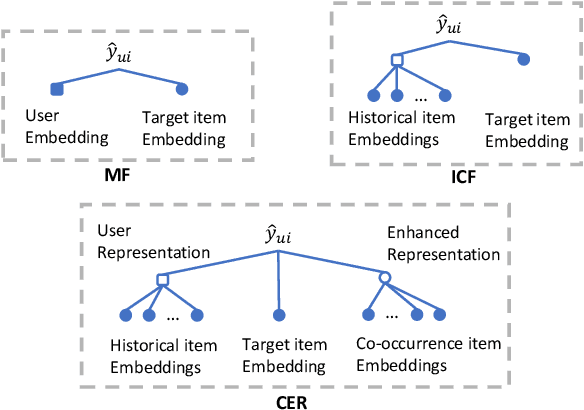

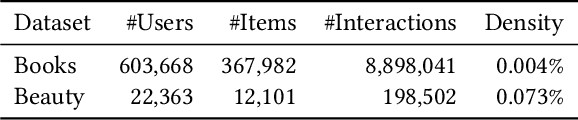

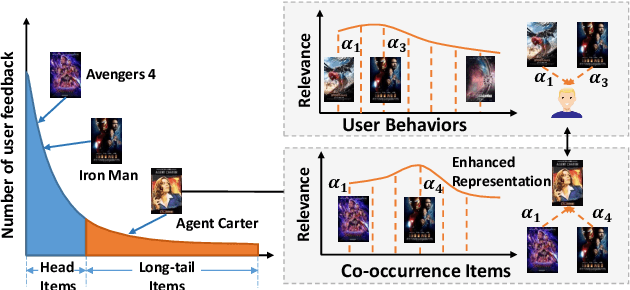

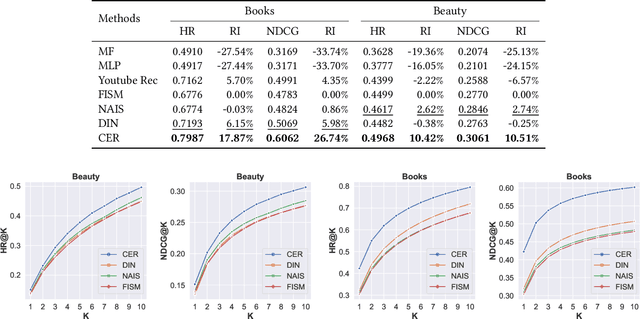

Represent Items by Items: An Enhanced Representation of the Target Item for Recommendation

Apr 26, 2021

Abstract: Item-based collaborative filtering (ICF) has been widely used in industrial applications such as recommender systems and online advertising. It models users' preferences on target items by the items they have interacted with. Recent models use methods such as attention mechanisms and deep neural networks to learn the user representation and scoring function more accurately. However, despite their effectiveness, such models still overlook the fact that the performance of ICF methods heavily depends on the quality of item representation, especially the target item representation. In fact, due to the long-tail distribution in recommendation, most item embeddings cannot represent the semantics of items accurately and thus degrade the performance of current ICF methods. In this paper, we propose an enhanced representation of the target item which distills relevant information from the co-occurrence items. We design sampling strategies to sample a fixed number of co-occurrence items to reduce noise and computational cost. Considering the different importance of sampled items to the target item, we apply an attention mechanism to selectively adopt the semantic information of the sampled items. Our proposed Co-occurrence based Enhanced Representation model (CER) learns the scoring function by a deep neural network with the attentive user representation and a fusion of the raw representation and enhanced representation of the target item as input. With the enhanced representation, CER has stronger representation power for the tail items compared to the state-of-the-art ICF methods. Extensive experiments on two public benchmarks demonstrate the effectiveness of CER.

DyGCN: Dynamic Graph Embedding with Graph Convolutional Network

Apr 07, 2021

Abstract: Graph embedding, aiming to learn low-dimensional representations (a.k.a. embeddings) of nodes, has received significant attention recently. Recent years have witnessed a surge of efforts made on static graphs, among which Graph Convolutional Network (GCN) has emerged as an effective class of models. However, these methods mainly focus on static graph embedding. In this work, we propose an efficient dynamic graph embedding approach, Dynamic Graph Convolutional Network (DyGCN), which is an extension of GCN-based methods. We naturally generalize the embedding propagation scheme of GCN to the dynamic setting in an efficient manner, which is to propagate the change along the graph to update node embeddings. The most affected nodes are updated first, and then their changes are propagated to further nodes, leading to their update. Extensive experiments conducted on various dynamic graphs demonstrate that our model can update the node embeddings in a time-saving and performance-preserving way.

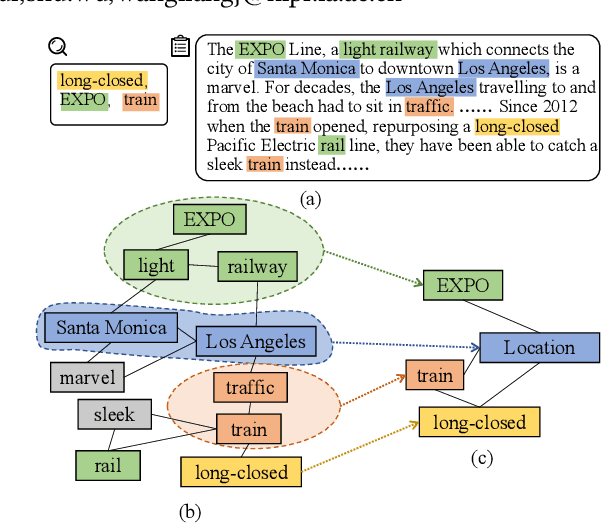

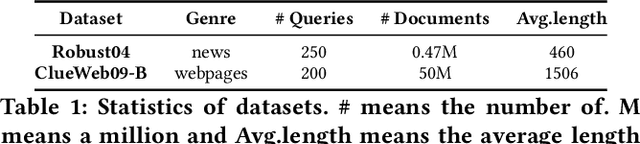

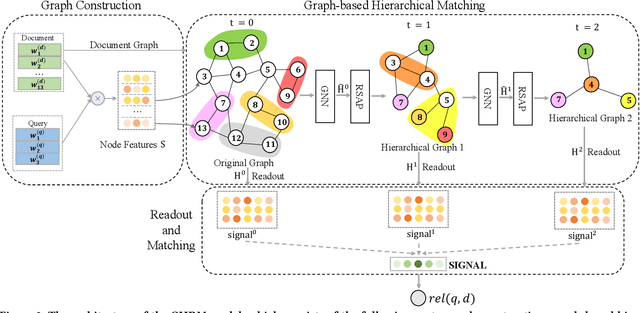

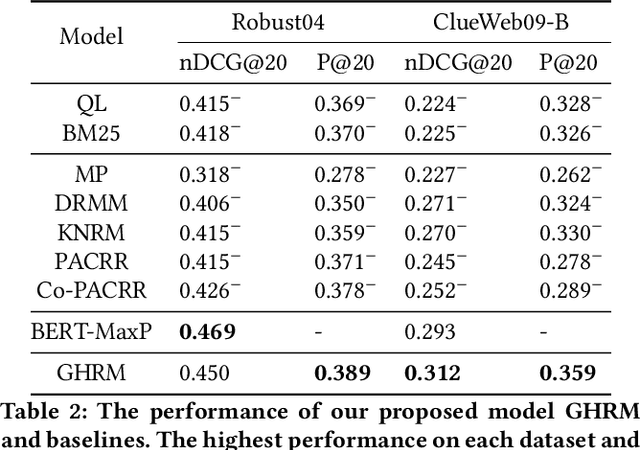

Graph-based Hierarchical Relevance Matching Signals for Ad-hoc Retrieval

Feb 22, 2021

Abstract: The ad-hoc retrieval task is to rank related documents given a query and a document collection. A series of deep learning based approaches have been proposed to solve this problem and have gained a lot of attention. However, we argue that they are inherently based on local word sequences, ignoring the subtle long-distance document-level word relationships. To solve the problem, we explicitly model the document-level word relationships through a graph structure, capturing the subtle information via graph neural networks. In addition, due to the complexity and scale of the document collections, it is worthwhile to explore hierarchical matching signals of different granularities at a more general level. Therefore, we propose a Graph-based Hierarchical Relevance Matching model (GHRM) for ad-hoc retrieval, by which we can capture the subtle and general hierarchical matching signals simultaneously. We validate the effects of GHRM on two representative ad-hoc retrieval benchmarks; comprehensive experiments and results demonstrate its superiority over state-of-the-art methods.
