Yi He

On Stability and Robustness of Diffusion Posterior Sampling for Bayesian Inverse Problems

Feb 02, 2026

Abstract:Diffusion models have recently emerged as powerful learned priors for Bayesian inverse problems (BIPs). Diffusion-based solvers rely on a presumed likelihood for the observations in BIPs to guide the generation process. However, the link between likelihood and recovery quality for BIPs is unclear in previous works. We bridge this gap by characterizing the posterior approximation error and proving the \emph{stability} of the diffusion-based solvers. Meanwhile, an immediate result of our findings on stability demonstrates the lack of robustness in diffusion-based solvers, which remains unexplored. This can degrade performance when the presumed likelihood mismatches the unknown true data generation processes. To address this issue, we propose a simple yet effective solution, \emph{robust diffusion posterior sampling}, which is provably \emph{robust} and compatible with existing gradient-based posterior samplers. Empirical results on scientific inverse problems and natural image tasks validate the effectiveness and robustness of our method, showing consistent performance improvements under challenging likelihood misspecifications.

Relink: Constructing Query-Driven Evidence Graph On-the-Fly for GraphRAG

Jan 12, 2026

Abstract:Graph-based Retrieval-Augmented Generation (GraphRAG) mitigates hallucinations in Large Language Models (LLMs) by grounding them in structured knowledge. However, current GraphRAG methods are constrained by a prevailing \textit{build-then-reason} paradigm, which relies on a static, pre-constructed Knowledge Graph (KG). This paradigm faces two critical challenges. First, the KG's inherent incompleteness often breaks reasoning paths. Second, the graph's low signal-to-noise ratio introduces distractor facts, presenting query-relevant but misleading knowledge that disrupts the reasoning process. To address these challenges, we argue for a \textit{reason-and-construct} paradigm and propose Relink, a framework that dynamically builds a query-specific evidence graph. To tackle incompleteness, \textbf{Relink} instantiates required facts from a latent relation pool derived from the original text corpus, repairing broken paths on the fly. To handle misleading or distractor facts, Relink employs a unified, query-aware evaluation strategy that jointly considers candidates from both the KG and latent relations, selecting those most useful for answering the query rather than relying on their pre-existence. This empowers Relink to actively discard distractor facts and construct the most faithful and precise evidence path for each query. Extensive experiments on five Open-Domain Question Answering benchmarks show that Relink achieves significant average improvements of 5.4\% in EM and 5.2\% in F1 over leading GraphRAG baselines, demonstrating the superiority of our proposed framework.

Improved Bounds for Private and Robust Alignment

Dec 29, 2025

Abstract:In this paper, we study the private and robust alignment of language models from a theoretical perspective by establishing upper bounds on the suboptimality gap in both offline and online settings. We consider preference labels subject to privacy constraints and/or adversarial corruption, and analyze two distinct interplays between them: privacy-first and corruption-first. For the privacy-only setting, we show that log loss with an MLE-style algorithm achieves near-optimal rates, in contrast to conventional wisdom. For the joint privacy-and-corruption setting, we first demonstrate that existing offline algorithms in fact provide stronger guarantees -- simultaneously in terms of corruption level and privacy parameters -- than previously known, which further yields improved bounds in the corruption-only regime. In addition, we also present the first set of results for private and robust online alignment. Our results are enabled by new uniform convergence guarantees for log loss and square loss under privacy and corruption, which we believe have broad applicability across learning theory and statistics.

Fast-Forward Lattice Boltzmann: Learning Kinetic Behaviour with Physics-Informed Neural Operators

Sep 26, 2025

Abstract:The lattice Boltzmann equation (LBE), rooted in kinetic theory, provides a powerful framework for capturing complex flow behaviour by describing the evolution of single-particle distribution functions (PDFs). Despite its success, solving the LBE numerically remains computationally intensive due to strict time-step restrictions imposed by collision kernels. Here, we introduce a physics-informed neural operator framework for the LBE that enables prediction over large time horizons without step-by-step integration, effectively bypassing the need to explicitly solve the collision kernel. We incorporate intrinsic moment-matching constraints of the LBE, along with global equivariance of the full distribution field, enabling the model to capture the complex dynamics of the underlying kinetic system. Our framework is discretization-invariant, enabling models trained on coarse lattices to generalise to finer ones (kinetic super-resolution). In addition, it is agnostic to the specific form of the underlying collision model, which makes it naturally applicable across different kinetic datasets regardless of the governing dynamics. Our results demonstrate robustness across complex flow scenarios, including von Karman vortex shedding, ligament breakup, and bubble adhesion. This establishes a new data-driven pathway for modelling kinetic systems.

Landmark Guided Visual Feature Extractor for Visual Speech Recognition with Limited Resource

Aug 10, 2025

Abstract:Visual speech recognition is a technique to identify spoken content in silent speech videos, which has attracted significant attention in recent years. Advancements in data-driven deep learning methods have significantly improved both the speed and accuracy of recognition. However, these deep learning methods can be affected by visual disturbances, such as lighting conditions, skin texture and other user-specific features. Data-driven approaches could reduce the performance degradation caused by these visual disturbances using models pretrained on large-scale datasets. But these methods often require large amounts of training data and computational resources, making them costly. To reduce the influence of user-specific features and enhance performance with limited data, this paper proposes a landmark guided visual feature extractor. Facial landmarks are used as auxiliary information to aid in training the visual feature extractor. A spatio-temporal multi-graph convolutional network is designed to fully exploit the spatial locations and spatio-temporal features of facial landmarks. Additionally, a multi-level lip dynamic fusion framework is introduced to combine the spatio-temporal features of the landmarks with the visual features extracted from the raw video frames. Experimental results show that this approach performs well with limited data and also improves the model's accuracy on unseen speakers.

Generative molecule evolution using 3D pharmacophore for efficient Structure-Based Drug Design

Jul 27, 2025

Abstract:Recent advances in generative models, particularly diffusion and auto-regressive models, have revolutionized fields like computer vision and natural language processing. However, their application to structure-based drug design (SBDD) remains limited due to critical data constraints. To address the limitation of training data for models targeting SBDD tasks, we propose an evolutionary framework named MEVO, which bridges the gap between billion-scale small-molecule datasets and scarce protein-ligand complex datasets, and effectively increases the abundance of training data for generative SBDD models. MEVO is composed of three key components: a high-fidelity VQ-VAE for molecule representation in latent space, a diffusion model for pharmacophore-guided molecule generation, and a pocket-aware evolutionary strategy for molecule optimization with a physics-based scoring function. This framework efficiently generates high-affinity binders for various protein targets, validated with predicted binding affinities using free energy perturbation (FEP) methods. In addition, we showcase the capability of MEVO in designing potent inhibitors of KRAS$^{\textrm{G12D}}$, a challenging target in cancer therapeutics, with similar affinity to the known highly active inhibitor evaluated by FEP calculations. With high versatility and generalizability, MEVO offers an effective and data-efficient model for various tasks in structure-based ligand design.

Uncertainty-Aware Complex Scientific Table Data Extraction

Jul 02, 2025

Abstract:Table structure recognition (TSR) and optical character recognition (OCR) play crucial roles in extracting structured data from tables in scientific documents. However, existing extraction frameworks built on top of TSR and OCR methods often fail to quantify the uncertainties of extracted results. To obtain highly accurate data for scientific domains, all extracted data must be manually verified, which can be time-consuming and labor-intensive. We propose a framework that performs uncertainty-aware data extraction for complex scientific tables, built on conformal prediction, a model-agnostic method for uncertainty quantification (UQ). We explored various uncertainty scoring methods to aggregate the uncertainties introduced by TSR and OCR. We rigorously evaluated the framework using a standard benchmark and an in-house dataset consisting of complex scientific tables in six scientific domains. The results demonstrate the effectiveness of using UQ for extraction error detection, and by manually verifying only 47\% of extraction results, the data quality can be improved by 30\%. Our work quantitatively demonstrates the role of UQ with the potential of improving the efficiency in the human-machine cooperation process to obtain scientifically usable data from complex tables in scientific documents. All code and data are available on GitHub at https://github.com/lamps-lab/TSR-OCR-UQ/tree/main.

Topology-aware Neural Flux Prediction Guided by Physics

Jun 06, 2025

Abstract:Graph Neural Networks (GNNs) often struggle to preserve high-frequency components of nodal signals when dealing with directed graphs. Such components are crucial for modeling flow dynamics; without them, a traditional GNN tends to treat graphs with forward and reverse topologies as equal. To make GNNs sensitive to those high-frequency components and thereby capable of capturing detailed topological differences, this paper proposes a novel framework that combines 1) explicit difference matrices that model directional gradients and 2) implicit physical constraints that force message passing within GNNs to be consistent with natural laws. Evaluations on two real-world directed graph datasets, namely a water flux network and an urban traffic flow network, demonstrate the effectiveness of our proposal.

Topological Structure Learning Should Be A Research Priority for LLM-Based Multi-Agent Systems

May 29, 2025

Abstract:Large Language Model-based Multi-Agent Systems (MASs) have emerged as a powerful paradigm for tackling complex tasks through collaborative intelligence. Nevertheless, the question of how agents should be structurally organized for optimal cooperation remains largely unexplored. In this position paper, we aim to gently redirect the focus of the MAS research community toward this critical dimension: developing topology-aware MASs for specific tasks. Specifically, the system consists of three core components - agents, communication links, and communication patterns - that collectively shape its coordination performance and efficiency. To this end, we introduce a systematic, three-stage framework: agent selection, structure profiling, and topology synthesis. Each stage would trigger new research opportunities in areas such as language models, reinforcement learning, graph learning, and generative modeling; together, they could unleash the full potential of MASs in complicated real-world applications. Then, we discuss the potential challenges and opportunities in the evaluation of multiple systems. We hope our perspective and framework can offer critical new insights in the era of agentic AI.

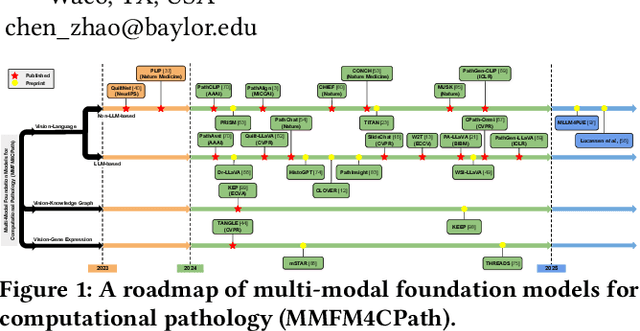

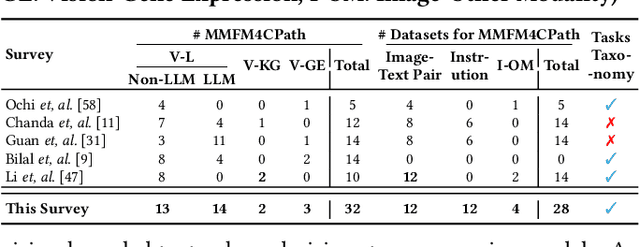

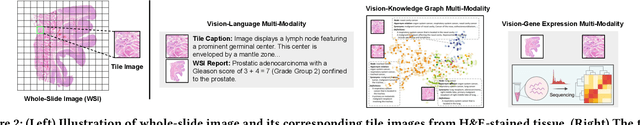

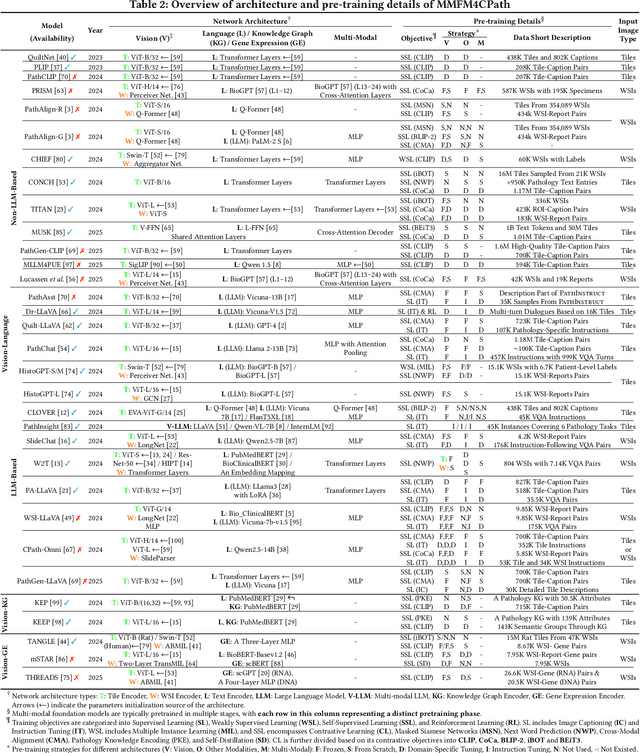

Multi-Modal Foundation Models for Computational Pathology: A Survey

Mar 12, 2025

Abstract:Foundation models have emerged as a powerful paradigm in computational pathology (CPath), enabling scalable and generalizable analysis of histopathological images. While early developments centered on uni-modal models trained solely on visual data, recent advances have highlighted the promise of multi-modal foundation models that integrate heterogeneous data sources such as textual reports, structured domain knowledge, and molecular profiles. In this survey, we provide a comprehensive and up-to-date review of multi-modal foundation models in CPath, with a particular focus on models built upon hematoxylin and eosin (H&E) stained whole slide images (WSIs) and tile-level representations. We categorize 32 state-of-the-art multi-modal foundation models into three major paradigms: vision-language, vision-knowledge graph, and vision-gene expression. We further divide vision-language models into non-LLM-based and LLM-based approaches. Additionally, we analyze 28 available multi-modal datasets tailored for pathology, grouped into image-text pairs, instruction datasets, and image-other modality pairs. Our survey also presents a taxonomy of downstream tasks, highlights training and evaluation strategies, and identifies key challenges and future directions. We aim for this survey to serve as a valuable resource for researchers and practitioners working at the intersection of pathology and AI.
