A K-variate Time Series Is Worth K Words: Evolution of the Vanilla Transformer Architecture for Long-term Multivariate Time Series Forecasting

Dec 06, 2022 · Zanwei Zhou, Ruizhe Zhong, Chen Yang, Yan Wang, Xiaokang Yang, Wei Shen

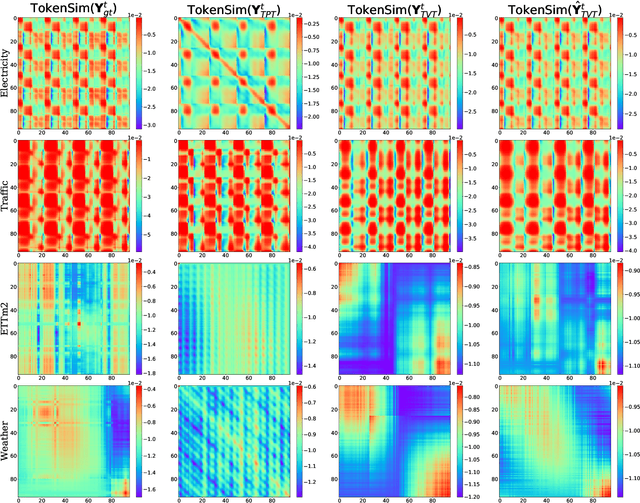

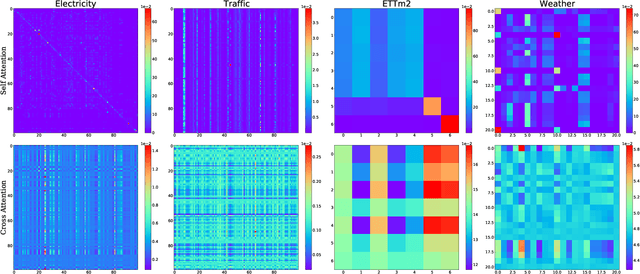

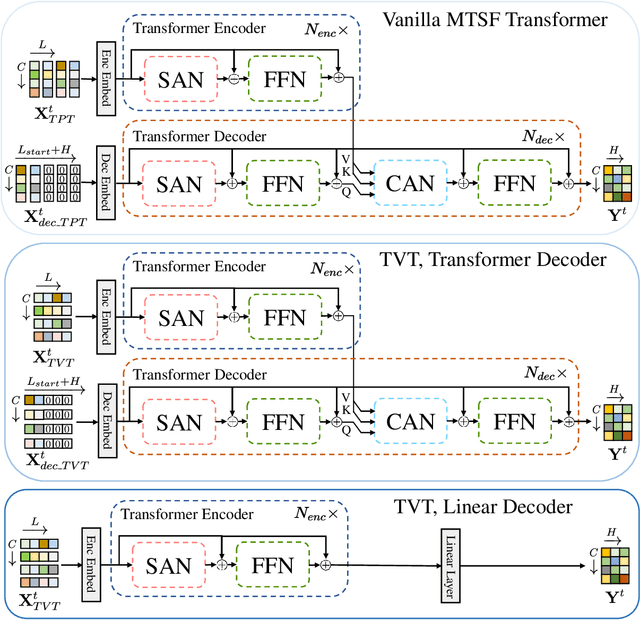

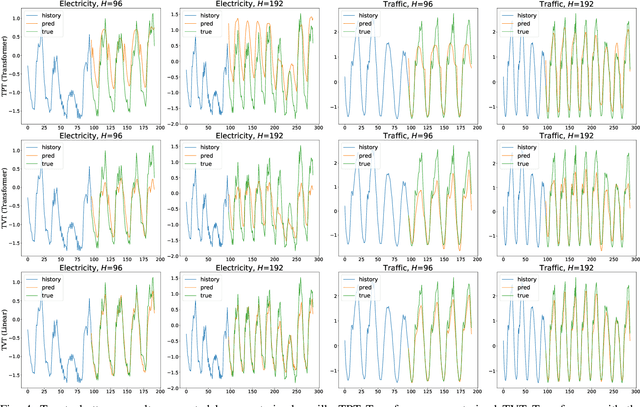

Multivariate time series forecasting (MTSF) is a fundamental problem in numerous real-world applications. Recently, the Transformer has become the de facto solution for MTSF, especially for long-term cases. However, except for the one forward operation, the basic configurations of existing MTSF Transformer architectures have rarely been carefully verified. In this study, we point out that the current tokenization strategy in MTSF Transformer architectures ignores the token uniformity inductive bias of Transformers. As a result, the vanilla MTSF Transformer struggles to capture details in time series and delivers inferior performance. Based on this observation, we make a series of evolutionary changes to the basic architecture of the vanilla MTSF Transformer. We modify the flawed tokenization strategy, along with the decoder structure and embeddings. Surprisingly, the evolved simple Transformer architecture is highly effective: it avoids the over-smoothing phenomenon of the vanilla MTSF Transformer, achieves more detailed and accurate predictions, and even substantially outperforms state-of-the-art Transformers that are specifically designed for MTSF.
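The abstract does not spell out the revised tokenization, but the title suggests one token per variate (K tokens for a K-variate series) rather than one token per time step. The following is a minimal sketch of that contrast under this assumption; the function names `timestep_tokens` and `variate_tokens` are hypothetical and are not taken from the paper.

```python
from typing import List

# A multivariate series with T time steps and K variates, stored as T rows of K values.
Series = List[List[float]]


def timestep_tokens(series: Series) -> Series:
    """One token per time step: T tokens, each holding the K values at that step."""
    return [list(row) for row in series]


def variate_tokens(series: Series) -> Series:
    """One token per variate: K tokens, each holding that variate's T values.

    Assumption: this is how "K words" in the title is read here.
    """
    return [list(column) for column in zip(*series)]


# Toy series with T = 4 time steps and K = 3 variates.
series = [
    [1.0, 10.0, 100.0],
    [2.0, 20.0, 200.0],
    [3.0, 30.0, 300.0],
    [4.0, 40.0, 400.0],
]

per_step = timestep_tokens(series)    # 4 tokens of length 3
per_variate = variate_tokens(series)  # 3 tokens of length 4
```

In this reading, each token fed to the Transformer describes a whole variate's history, so the number of tokens depends on K rather than on the length of the input window.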

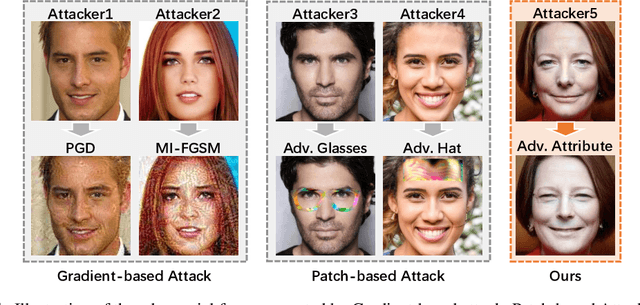

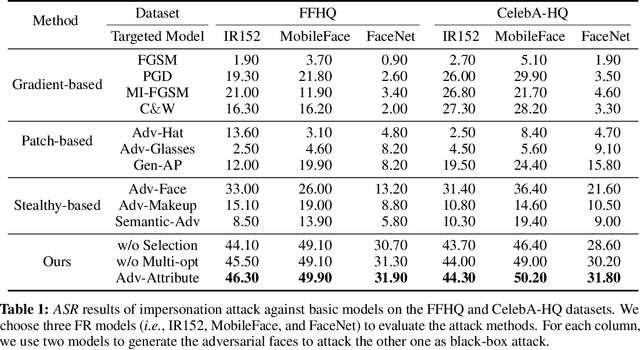

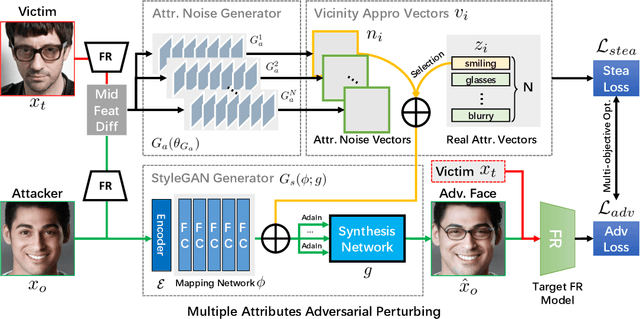

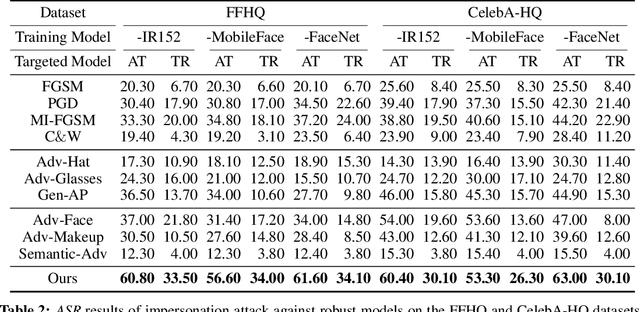

Adv-Attribute: Inconspicuous and Transferable Adversarial Attack on Face Recognition

Oct 13, 2022 · Shuai Jia, Bangjie Yin, Taiping Yao, Shouhong Ding, Chunhua Shen, Xiaokang Yang, Chao Ma

Deep learning models have been shown to be vulnerable to adversarial attacks. Most existing attacks operate on low-level instances, such as pixels and super-pixels, and rarely exploit semantic clues. For face recognition attacks, existing methods typically generate l_p-norm perturbations on pixels, which results in low attack transferability and high vulnerability to denoising defense models. In this work, instead of perturbing low-level pixels, we propose to generate attacks by perturbing high-level semantics to improve attack transferability. Specifically, a unified flexible framework, Adversarial Attributes (Adv-Attribute), is designed to generate inconspicuous and transferable attacks on face recognition. It crafts the adversarial noise and adds it into different attributes, guided by the difference in face recognition features from the target. Moreover, importance-aware attribute selection and a multi-objective optimization strategy are introduced to further balance stealthiness and attacking strength. Extensive experiments on the FFHQ and CelebA-HQ datasets show that the proposed Adv-Attribute method achieves state-of-the-art attack success rates while maintaining better visual quality than recent attack methods.

Perceptual Attacks of No-Reference Image Quality Models with Human-in-the-Loop

Oct 03, 2022 · Weixia Zhang, Dingquan Li, Xiongkuo Min, Guangtao Zhai, Guodong Guo, Xiaokang Yang, Kede Ma

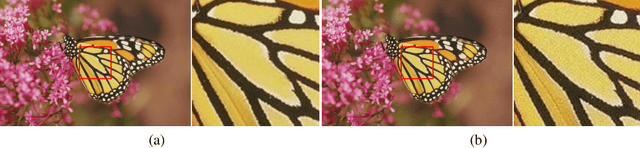

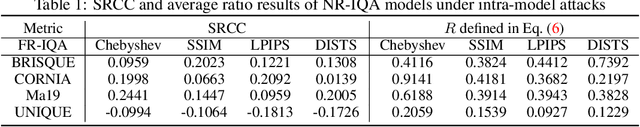

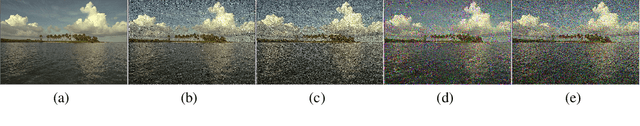

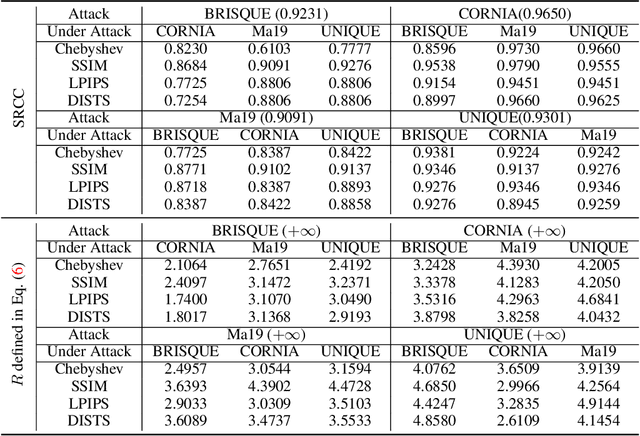

No-reference image quality assessment (NR-IQA) aims to quantify how humans perceive visual distortions of digital images without access to their undistorted references. NR-IQA models are extensively studied in computational vision, and are widely used for performance evaluation and perceptual optimization of man-made vision systems. Here we make one of the first attempts to examine the perceptual robustness of NR-IQA models. Under a Lagrangian formulation, we identify insightful connections of the proposed perceptual attack to previous beautiful ideas in computer vision and machine learning. We test one knowledge-driven and three data-driven NR-IQA methods under four full-reference IQA models (as approximations to human perception of just-noticeable differences). Through carefully designed psychophysical experiments, we find that all four NR-IQA models are vulnerable to the proposed perceptual attack. More interestingly, we observe that the generated counterexamples are not transferable, manifesting themselves as distinct design flaws of respective NR-IQA methods.

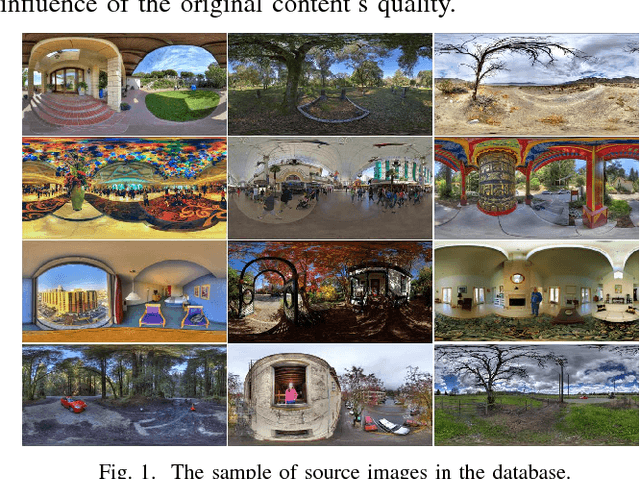

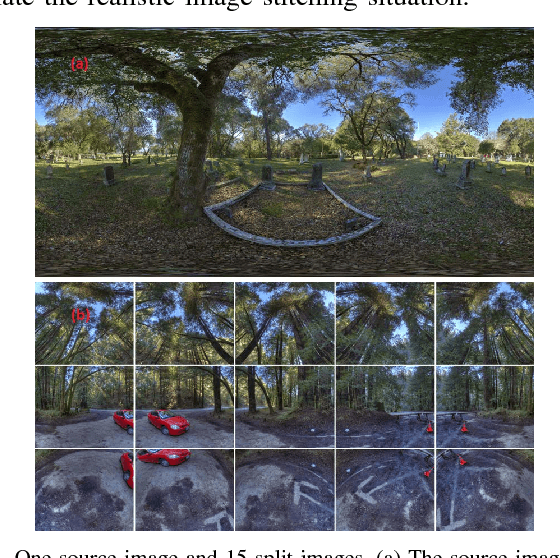

Perceptual Quality Assessment of Omnidirectional Images

Jul 06, 2022 · Huiyu Duan, Guangtao Zhai, Xiongkuo Min, Yucheng Zhu, Yi Fang, Xiaokang Yang

Omnidirectional images and videos can provide an immersive experience of real-world scenes in a Virtual Reality (VR) environment. We present a perceptual omnidirectional image quality assessment (IQA) study in this paper, since it is extremely important to provide a good quality of experience in the VR environment. We first establish an omnidirectional IQA (OIQA) database, which includes 16 source images and 320 distorted images degraded by 4 commonly encountered distortion types, namely JPEG compression, JPEG2000 compression, Gaussian blur and Gaussian noise. Then a subjective quality evaluation study is conducted on the OIQA database in the VR environment. Considering that humans can only see a part of the scene at any one moment in the VR environment, visual attention becomes extremely important. Thus we also track head and eye movement data during the quality rating experiments. The original and distorted omnidirectional images, subjective quality ratings, and the head and eye movement data together constitute the OIQA database. State-of-the-art full-reference (FR) IQA measures are tested on the OIQA database, and some new observations different from traditional IQA are made.

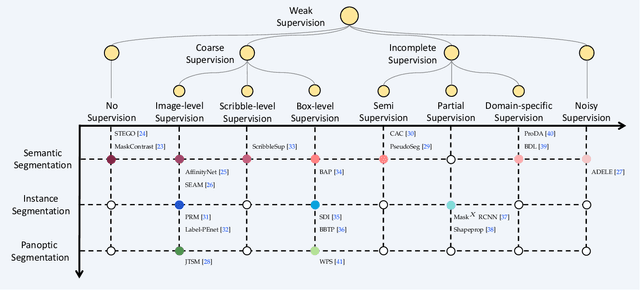

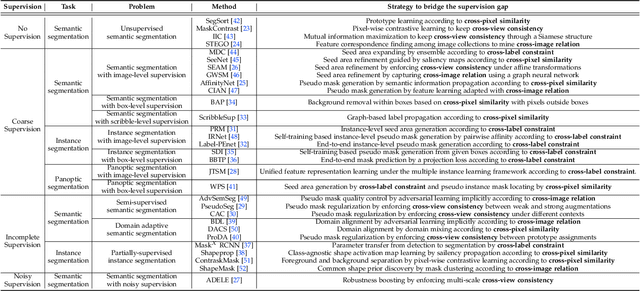

A Survey on Label-efficient Deep Segmentation: Bridging the Gap between Weak Supervision and Dense Prediction

Jul 04, 2022 · Wei Shen, Zelin Peng, Xuehui Wang, Huayu Wang, Jiazhong Cen, Dongsheng Jiang, Lingxi Xie, Xiaokang Yang, Qi Tian

The rapid development of deep learning has brought great progress in segmentation, one of the fundamental tasks of computer vision. However, current segmentation algorithms mostly rely on the availability of pixel-level annotations, which are often expensive, tedious, and laborious to obtain. To alleviate this burden, the past years have witnessed increasing attention to building label-efficient, deep-learning-based segmentation algorithms. This paper offers a comprehensive review of label-efficient segmentation methods. To this end, we first develop a taxonomy to organize these methods according to the supervision provided by different types of weak labels (including no supervision, coarse supervision, incomplete supervision and noisy supervision) and supplemented by the types of segmentation problems (including semantic segmentation, instance segmentation and panoptic segmentation). Next, we summarize the existing label-efficient segmentation methods from a unified perspective that discusses an important question: how to bridge the gap between weak supervision and dense prediction -- the current methods are mostly based on heuristic priors, such as cross-pixel similarity, cross-label constraint, cross-view consistency, cross-image relation, etc. Finally, we share our opinions about the future research directions for label-efficient deep segmentation.

Isolating and Leveraging Controllable and Noncontrollable Visual Dynamics in World Models

May 27, 2022 · Minting Pan, Xiangming Zhu, Yunbo Wang, Xiaokang Yang

World models learn the consequences of actions in vision-based interactive systems. However, in practical scenarios such as autonomous driving, there commonly exist noncontrollable dynamics independent of the action signals, making it difficult to learn effective world models. To tackle this problem, we present a novel reinforcement learning approach named Iso-Dream, which improves the Dream-to-Control framework in two aspects. First, by optimizing the inverse dynamics, we encourage the world model to learn controllable and noncontrollable sources of spatiotemporal changes on isolated state transition branches. Second, we optimize the behavior of the agent on the decoupled latent imaginations of the world model. Specifically, to estimate state values, we roll out the noncontrollable states into the future and associate them with the current controllable state. In this way, the isolation of dynamics sources can greatly benefit long-horizon decision-making of the agent, such as a self-driving car that can avoid potential risks by anticipating the movement of other vehicles. Experiments show that Iso-Dream is effective in decoupling the mixed dynamics and remarkably outperforms existing approaches in a wide range of visual control and prediction domains.

DOTIN: Dropping Task-Irrelevant Nodes for GNNs

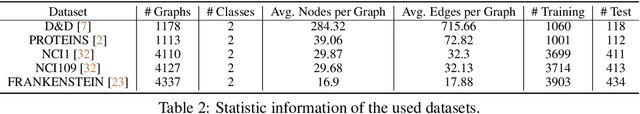

Apr 28, 2022 · Shaofeng Zhang, Feng Zhu, Junchi Yan, Rui Zhao, Xiaokang Yang

Scalability is an important consideration for deep graph neural networks. Inspired by the conventional pooling layers in CNNs, many recent graph learning approaches have introduced pooling strategies to reduce the size of graphs for learning, so that scalability and efficiency can be improved. However, these pooling-based methods are mainly tailored to a single graph-level task and pay more attention to local information, limiting their performance in multi-task settings, which often require task-specific global information. In this paper, departing from these pooling-based efforts, we design a new approach called DOTIN (Dropping Task-Irrelevant Nodes) to reduce the size of graphs. Specifically, by introducing $K$ learnable virtual nodes to represent the graph embeddings targeted to $K$ different graph-level tasks, respectively, up to 90% of the raw nodes with low attentiveness, as measured by an attention model (a transformer in this paper), can be adaptively dropped without a notable decrease in performance. Achieving almost the same accuracy, our method speeds up GAT by about 50% on graph-level tasks including graph classification and graph edit distance (GED), with about 60% less memory, on the D&D dataset. Code will be made publicly available at https://github.com/Sherrylone/DOTIN.
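The node-dropping idea can be illustrated with a small sketch: given one attention score per raw node (for example, the attention a task's virtual node pays to it), keep only the highest-scoring fraction. This is an assumption-laden illustration, not the released DOTIN code; the function name `keep_attentive_nodes`, the `drop_ratio` parameter, and the toy scores are all hypothetical.

```python
from typing import List


def keep_attentive_nodes(scores: List[float], drop_ratio: float) -> List[int]:
    """Return the indices of nodes kept after dropping the lowest-scoring fraction.

    scores: one attentiveness value per raw node (assumed to be given).
    drop_ratio: fraction of nodes to drop, in [0, 1).
    """
    if not 0.0 <= drop_ratio < 1.0:
        raise ValueError("drop_ratio must be in [0, 1)")
    if not scores:
        return []
    n_drop = int(len(scores) * drop_ratio)
    n_keep = max(1, len(scores) - n_drop)  # always keep at least one node
    # Rank node indices from most to least attended, then keep the top n_keep.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:n_keep])


# Toy attention scores for a 10-node graph.
scores = [0.02, 0.30, 0.01, 0.25, 0.05, 0.03, 0.04, 0.20, 0.06, 0.04]

kept_half = keep_attentive_nodes(scores, drop_ratio=0.5)
kept_tenth = keep_attentive_nodes(scores, drop_ratio=0.9)
```

With `drop_ratio=0.9`, only the single most attended node of the ten survives, which mirrors the "up to 90%" figure in the abstract at toy scale.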

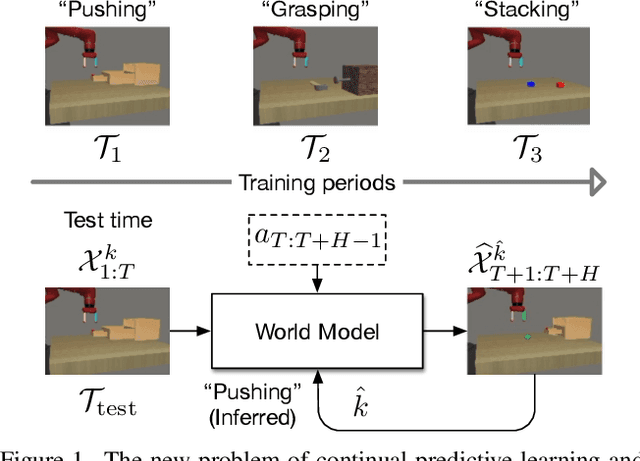

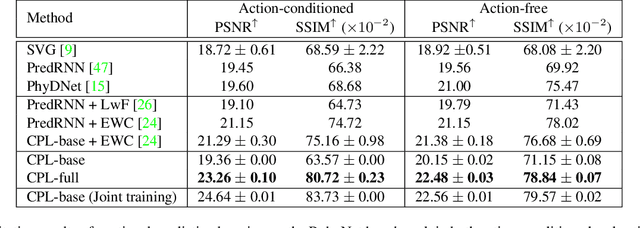

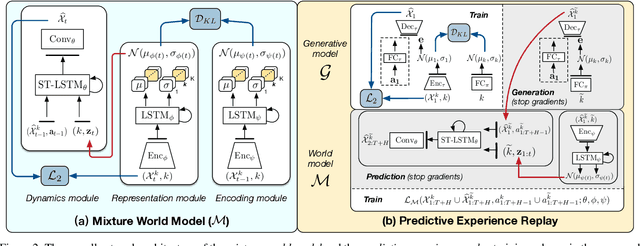

Continual Predictive Learning from Videos

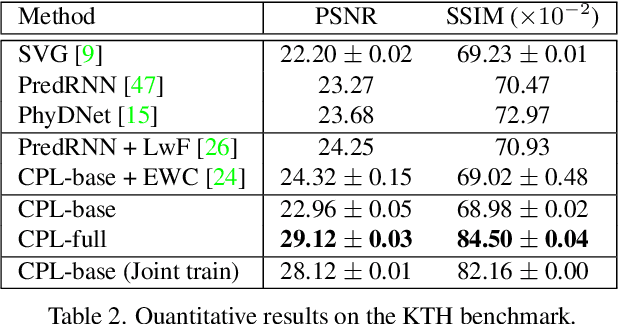

Apr 12, 2022 · Geng Chen, Wendong Zhang, Han Lu, Siyu Gao, Yunbo Wang, Mingsheng Long, Xiaokang Yang

Predictive learning ideally builds the world model of physical processes in one or more given environments. Typical setups assume that we can collect data from all environments at all times. In practice, however, different prediction tasks may arrive sequentially so that the environments may change persistently throughout the training procedure. Can we develop predictive learning algorithms that can deal with more realistic, non-stationary physical environments? In this paper, we study a new continual learning problem in the context of video prediction, and observe that most existing methods suffer from severe catastrophic forgetting in this setup. To tackle this problem, we propose the continual predictive learning (CPL) approach, which learns a mixture world model via predictive experience replay and performs test-time adaptation with non-parametric task inference. We construct two new benchmarks based on RoboNet and KTH, in which different tasks correspond to different physical robotic environments or human actions. Our approach is shown to effectively mitigate forgetting and remarkably outperform the naïve combinations of previous art in video prediction and continual learning.

Confusing Image Quality Assessment: Towards Better Augmented Reality Experience

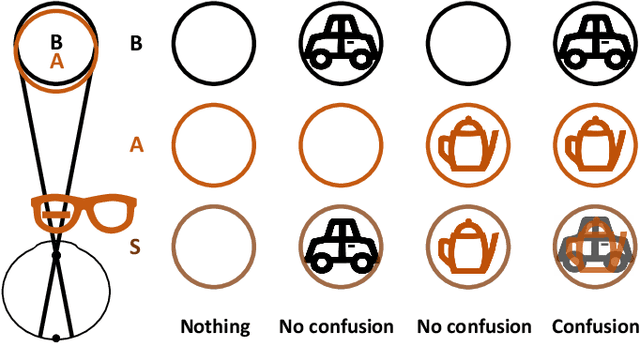

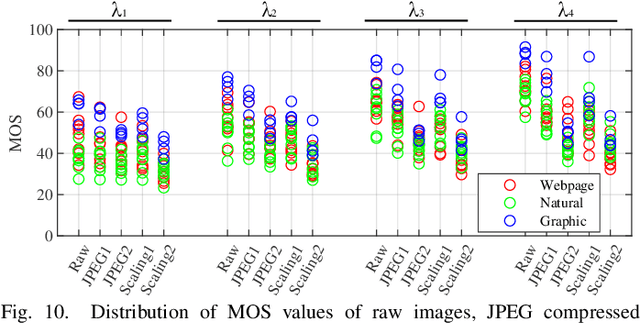

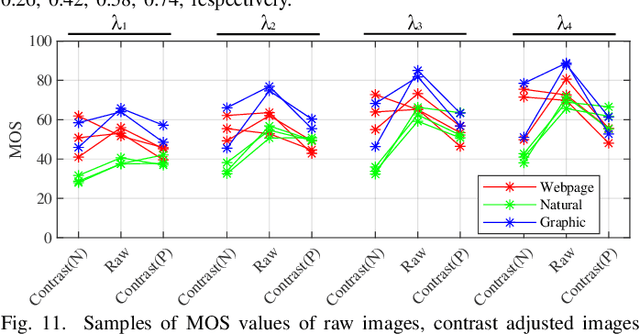

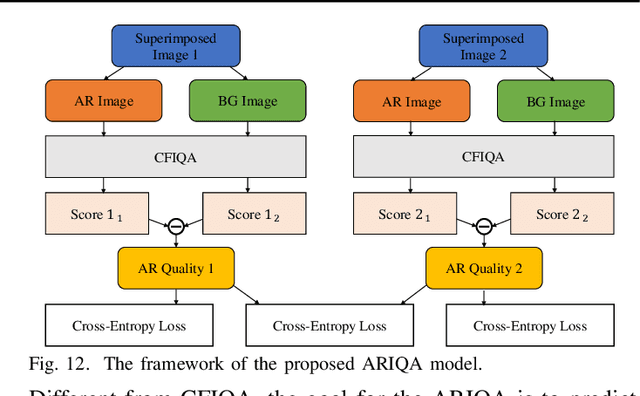

Apr 11, 2022 · Huiyu Duan, Xiongkuo Min, Yucheng Zhu, Guangtao Zhai, Xiaokang Yang, Patrick Le Callet

With the development of multimedia technology, Augmented Reality (AR) has become a promising next-generation mobile platform. The primary value of AR is to promote the fusion of digital content and real-world environments; however, studies on how this fusion influences the Quality of Experience (QoE) of these two components are lacking. To achieve better QoE for AR, whose two layers influence each other, it is important to evaluate its perceptual quality first. In this paper, we consider AR technology as the superimposition of virtual scenes and real scenes, and introduce visual confusion as its basic theory. A more general problem is first proposed, which is evaluating the perceptual quality of superimposed images, i.e., confusing image quality assessment. A ConFusing Image Quality Assessment (CFIQA) database is established, which includes 600 reference images and 300 distorted images generated by mixing reference images in pairs. Then a subjective quality perception study and an objective model evaluation experiment are conducted to gain a better understanding of how humans perceive the confusing images. An objective metric termed CFIQA is also proposed to better evaluate the confusing image quality. Moreover, an extended ARIQA study is further conducted based on the CFIQA study. We establish an ARIQA database to better simulate real AR application scenarios, which contains 20 AR reference images, 20 background (BG) reference images, and 560 distorted images generated from AR and BG references, as well as the correspondingly collected subjective quality ratings. We also design three types of full-reference (FR) IQA metrics to study whether visual confusion should be considered when designing corresponding IQA algorithms. An ARIQA metric is finally proposed for better evaluating the perceptual quality of AR images.
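As a rough illustration of "mixing reference images in pairs", the sketch below blends two equally sized grayscale images with a single weight and shows how 600 references pair up into 300 mixtures. The linear blend, the `alpha` weight, the consecutive pairing, and the function name `superimpose` are assumptions made for illustration; the abstract does not state how the images are paired or mixed.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities in [0, 1]


def superimpose(a: Image, b: Image, alpha: float = 0.5) -> Image:
    """Blend two images pixel by pixel: alpha * a + (1 - alpha) * b."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same shape")
    return [
        [alpha * pa + (1.0 - alpha) * pb for pa, pb in zip(ra, rb)]
        for ra, rb in zip(a, b)
    ]


# Two tiny 1x2 "images" blended with equal weight.
mixed = superimpose([[0.0, 1.0]], [[1.0, 1.0]], alpha=0.5)

# Pairing 600 reference ids two at a time yields 300 mixtures.
reference_ids = list(range(600))
pairs = list(zip(reference_ids[0::2], reference_ids[1::2]))
```

Each element of `pairs` names the two references that would be passed to `superimpose` to produce one superimposed image.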

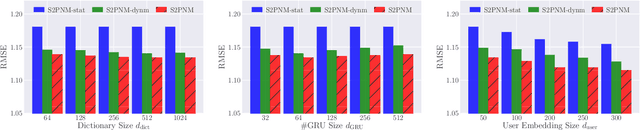

Modeling Dynamic User Preference via Dictionary Learning for Sequential Recommendation

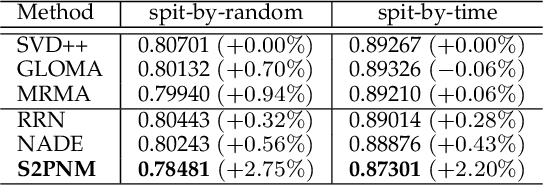

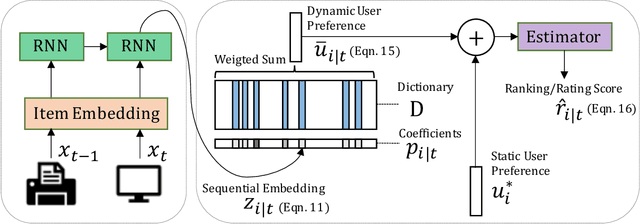

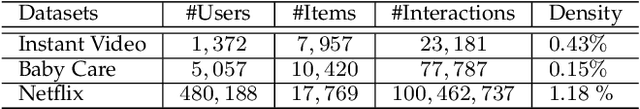

Apr 02, 2022 · Chao Chen, Dongsheng Li, Junchi Yan, Xiaokang Yang

Capturing the dynamics of user preference is crucial for better predicting users' future behaviors, because user preferences often drift over time. Many existing recommendation algorithms -- including both shallow and deep ones -- often model such dynamics independently, i.e., user static and dynamic preferences are not modeled under the same latent space, which makes it difficult to fuse them for recommendation. This paper considers the problem of embedding a user's sequential behavior into the latent space of user preferences, namely translating sequence to preference. To this end, we formulate the sequential recommendation task as a dictionary learning problem, which learns: 1) a shared dictionary matrix, each row of which represents a partial signal of user dynamic preferences shared across users; and 2) a posterior distribution estimator using a deep autoregressive model integrated with a Gated Recurrent Unit (GRU), which can select related rows of the dictionary to represent a user's dynamic preferences conditioned on their past behaviors. Qualitative studies on the Netflix dataset demonstrate that the proposed method can capture user preference drifts over time, and quantitative studies on multiple real-world datasets demonstrate that it achieves higher accuracy than state-of-the-art factorization and neural sequential recommendation methods. The code is available at https://github.com/cchao0116/S2PNM-TKDE2021.
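The dictionary view can be sketched as follows: a user's dynamic preference vector is built from the rows of a shared dictionary, weighted by how strongly each row is selected. The weighted-sum combination, the toy numbers, and the function name `dynamic_preference` are assumptions for illustration; in the paper the selection weights come from the GRU-based posterior estimator, which is not reproduced here.

```python
from typing import List


def dynamic_preference(dictionary: List[List[float]], weights: List[float]) -> List[float]:
    """Combine dictionary rows into one preference vector.

    dictionary: shared matrix, one row per partial preference signal.
    weights: one selection weight per row (assumed to be given).
    """
    if len(dictionary) != len(weights):
        raise ValueError("need exactly one weight per dictionary row")
    dim = len(dictionary[0])
    return [
        sum(w * row[j] for w, row in zip(weights, dictionary))
        for j in range(dim)
    ]


# Three dictionary rows in a 2-dimensional preference space.
dictionary = [
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, 1.0],
]
# The (hypothetical) estimator selects rows 0 and 2 and ignores row 1.
weights = [0.5, 0.0, 0.5]

preference = dynamic_preference(dictionary, weights)
```

Because the dictionary is shared across users, only the weights differ from user to user, which is what places every user's dynamic preference in the same latent space.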
