Suiyi Zhao

NTIRE 2025 Image Shadow Removal Challenge Report

Jun 18, 2025

Abstract: This work examines the findings of the NTIRE 2025 Shadow Removal Challenge. A total of 306 participants registered, with 17 teams successfully submitting their solutions during the final evaluation phase. Following the last two editions, this challenge had two evaluation tracks: one focusing on reconstruction fidelity and the other on visual perception through a user study. Both tracks were evaluated with images from the WSRD+ dataset, simulating interactions between self- and cast-shadows with a large number of diverse objects, textures, and materials.

The Tenth NTIRE 2025 Efficient Super-Resolution Challenge Report

Apr 14, 2025

Abstract: This paper presents a comprehensive review of the NTIRE 2025 Challenge on Single-Image Efficient Super-Resolution (ESR). The challenge aimed to advance the development of deep models that optimize key computational metrics, i.e., runtime, parameters, and FLOPs, while achieving a PSNR of at least 26.90 dB on the DIV2K_LSDIR_valid dataset and 26.99 dB on the DIV2K_LSDIR_test dataset. The challenge drew 244 registered entrants, with 43 teams submitting valid entries. This report analyzes these methods and results, emphasizing advancements in state-of-the-art single-image ESR techniques. The analysis highlights innovative approaches and establishes benchmarks for future research in the field.

MIPI 2024 Challenge on Nighttime Flare Removal: Methods and Results

Apr 30, 2024

Abstract: The increasing demand for computational photography and imaging on mobile platforms has led to the widespread development and integration of advanced image sensors with novel algorithms in camera systems. However, the scarcity of high-quality data for research and the rare opportunity for in-depth exchange of views from industry and academia constrain the development of mobile intelligent photography and imaging (MIPI). Building on the achievements of the previous MIPI Workshops held at ECCV 2022 and CVPR 2023, we introduce our third MIPI challenge including three tracks focusing on novel image sensors and imaging algorithms. In this paper, we summarize and review the Nighttime Flare Removal track on MIPI 2024. In total, 170 participants were successfully registered, and 14 teams submitted results in the final testing phase. The developed solutions in this challenge achieved state-of-the-art performance on Nighttime Flare Removal. More details of this challenge and the link to the dataset can be found at https://mipi-challenge.org/MIPI2024/.

NTIRE 2024 Challenge on Low Light Image Enhancement: Methods and Results

Apr 22, 2024

Abstract: This paper reviews the NTIRE 2024 low light image enhancement challenge, highlighting the proposed solutions and results. The aim of this challenge is to discover an effective network design or solution capable of generating brighter, clearer, and visually appealing results when dealing with a variety of conditions, including ultra-high resolution (4K and beyond), non-uniform illumination, backlighting, extreme darkness, and night scenes. A notable total of 428 participants registered for the challenge, with 22 teams ultimately making valid submissions. This paper meticulously evaluates the state-of-the-art advancements in enhancing low-light images, reflecting the significant progress and creativity in this field.

The Ninth NTIRE 2024 Efficient Super-Resolution Challenge Report

Apr 16, 2024

Abstract: This paper provides a comprehensive review of the NTIRE 2024 challenge, focusing on efficient single-image super-resolution (ESR) solutions and their outcomes. The task of this challenge is to super-resolve an input image with a magnification factor of ×4 based on pairs of low and corresponding high-resolution images. The primary objective is to develop networks that optimize various aspects such as runtime, parameters, and FLOPs, while still maintaining a peak signal-to-noise ratio (PSNR) of approximately 26.90 dB on the DIV2K_LSDIR_valid dataset and 26.99 dB on the DIV2K_LSDIR_test dataset. In addition, this challenge has 4 tracks including the main track (overall performance), sub-track 1 (runtime), sub-track 2 (FLOPs), and sub-track 3 (parameters). In the main track, all three metrics (i.e., runtime, FLOPs, and parameter count) were considered. The ranking of the main track is calculated based on a weighted sum of the scores of the three sub-tracks. In sub-track 1, the practical runtime performance of the submissions was evaluated, and the corresponding score was used to determine the ranking. In sub-track 2, the number of FLOPs was considered, and the score calculated from the FLOPs was used to determine the ranking. In sub-track 3, the number of parameters was considered, and the score calculated from the parameter count was used to determine the ranking. RLFN is set as the baseline for efficiency measurement. The challenge had 262 registered participants, and 34 teams made valid submissions. Together, these submissions gauge the state of the art in efficient single-image super-resolution. To facilitate the reproducibility of the challenge and enable other researchers to build upon these findings, the code and the pre-trained model of validated solutions are made publicly available at https://github.com/Amazingren/NTIRE2024_ESR/.

NTIRE 2024 Challenge on Image Super-Resolution ($\times$4): Methods and Results

Apr 15, 2024

Abstract: This paper reviews the NTIRE 2024 challenge on image super-resolution ($\times$4), highlighting the solutions proposed and the outcomes obtained. The challenge involves generating corresponding high-resolution (HR) images, magnified by a factor of four, from low-resolution (LR) inputs using prior information. The LR images originate from bicubic downsampling degradation. The aim of the challenge is to obtain designs/solutions with the most advanced SR performance, with no constraints on computational resources (e.g., model size and FLOPs) or training data. The track of this challenge assesses performance with the PSNR metric on the DIV2K testing dataset. The competition attracted 199 registrants, with 20 teams submitting valid entries. This collective endeavour not only pushes the boundaries of performance in single-image SR but also offers a comprehensive overview of current trends in this field.
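For readers unfamiliar with the PSNR metric named in the abstract above, the following is a minimal sketch of the standard definition, 10·log10(peak² / MSE). It assumes 8-bit pixel values (peak of 255) and images given as nested lists; the function name `psnr` is illustrative. The challenge's exact evaluation protocol (color space, border cropping) is not stated in the abstract and is not reproduced here.

```python
import math


def psnr(reference, estimate, peak=255.0):
    """Return PSNR in dB between two equally sized images (nested lists of pixel values)."""
    ref_flat = [p for row in reference for p in row]
    est_flat = [p for row in estimate for p in row]
    if len(ref_flat) != len(est_flat):
        raise ValueError("images must contain the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(ref_flat, est_flat)) / len(ref_flat)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(peak ** 2 / mse)
```

A higher value means the estimate is closer to the reference; a threshold such as 26.90 dB corresponds to an upper bound on the mean squared error.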

GT-Rain Single Image Deraining Challenge Report

Mar 18, 2024

Abstract: This report reviews the results of the GT-Rain challenge on single image deraining at the UG2+ workshop at CVPR 2023. The aim of this competition is to study the rainy weather phenomenon in real-world scenarios, provide a novel real-world rainy image dataset, and spark innovative ideas that will further the development of single image deraining methods on real images. Submissions were trained on the GT-Rain dataset and evaluated on an extension of the dataset consisting of 15 additional scenes. Each scene in GT-Rain comprises a real rainy image and a ground-truth image captured moments after the rain had stopped. A total of 275 participants registered for the challenge, and 55 competed in the final testing phase.

NoiSER: Noise is All You Need for Enhancing Low-Light Images Without Task-Related Data

Nov 09, 2022

Abstract: This paper is about an extraordinary phenomenon. If we do not use any low-light images as training data, can we still enhance a low-light image by deep learning? Obviously, current methods cannot do this, since deep neural networks need copious amounts of training data, especially task-related data, to train their many parameters. In this paper, we show that in the context of fundamental deep learning, it is possible to enhance a low-light image without any task-related training data. Technically, we propose a new, magical, effective and efficient method, termed Noise Self-Regression (NoiSER), which learns a gray-world mapping from a Gaussian distribution for low-light image enhancement (LLIE). Specifically, a self-regression model is built as a carrier to learn a gray-world mapping during training, which is performed by simply iteratively feeding random noise. During inference, a low-light image is directly fed into the learned mapping to yield a normal-light one. Extensive experiments show that our NoiSER is highly competitive with current task-related data based LLIE models in terms of quantitative and visual results, while outperforming them in terms of the number of parameters, training time and inference speed. With only about 1K parameters, NoiSER needs about 1 minute for training and 1.2 ms for inference at 600$\times$400 resolution on an RTX 2080 Ti. Besides, NoiSER has an inborn automated exposure suppression capability and can automatically adjust images that are too bright or too dark, without additional manipulations.
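The training scheme described above, repeatedly feeding random Gaussian noise to a model that regresses the noise onto itself, can be illustrated with a toy sketch. The single scalar affine model, initial values, learning rate, and step count below are all illustrative assumptions and are not the NoiSER architecture; the toy shows only the self-regression loop and does not reproduce the enhancement behaviour reported in the abstract.

```python
import random


def train_self_regression(steps=2000, lr=0.05, seed=0):
    """Fit y = w * x + b so that Gaussian noise x is regressed onto itself (toy example)."""
    rng = random.Random(seed)
    w, b = 0.0, 0.5  # hypothetical initial parameters
    for _ in range(steps):
        x = rng.gauss(0.0, 1.0)  # training input is pure noise, no image data
        err = (w * x + b) - x    # self-regression: the target equals the input
        # One SGD step on the squared error 0.5 * err**2.
        w -= lr * err * x
        b -= lr * err
    return w, b


w, b = train_self_regression()
```

In this toy setting the parameters converge towards the identity mapping (w near 1, b near 0), since that is the exact minimizer of the self-regression loss.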

FCL-GAN: A Lightweight and Real-Time Baseline for Unsupervised Blind Image Deblurring

Apr 16, 2022

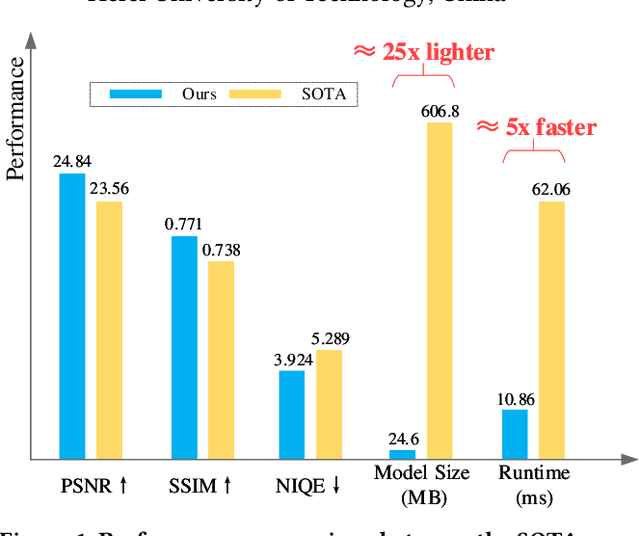

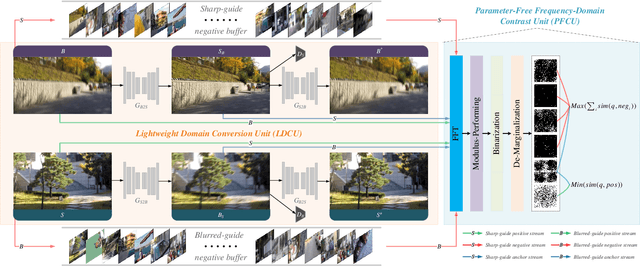

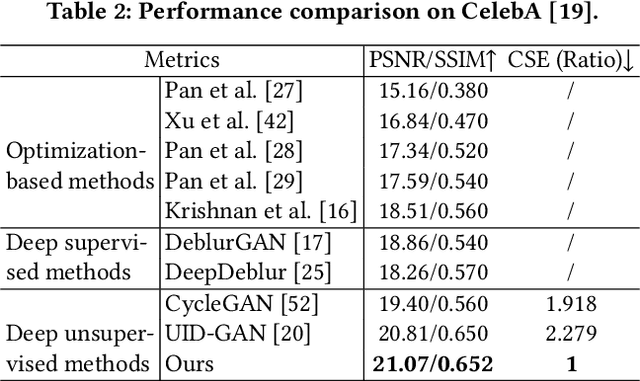

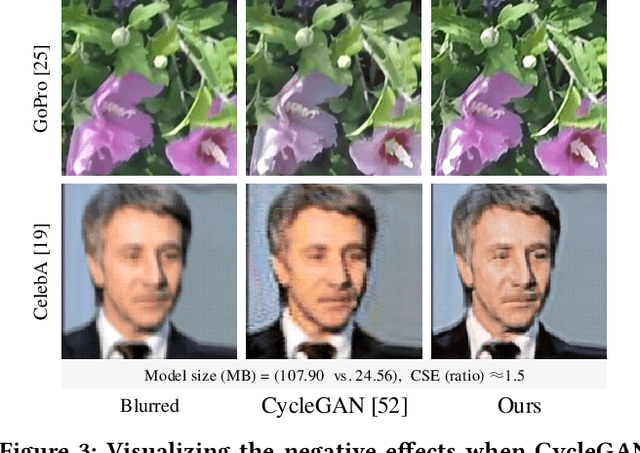

Abstract: Blind image deblurring (BID) remains a challenging and significant task. Benefiting from the strong fitting ability of deep learning, supervised BID methods driven by paired data have made great progress. However, paired data are usually synthesized by hand, and realistic blurs are more complex than synthetic ones, which makes supervised methods inept at modeling realistic blurs and hinders their real-world applications. As such, unsupervised deep BID methods without paired data offer certain advantages, but current methods still suffer from some drawbacks, e.g., bulky model size, long inference time, and strict image resolution and domain requirements. In this paper, we propose a lightweight and real-time unsupervised BID baseline, termed Frequency-domain Contrastive Loss Constrained Lightweight CycleGAN (FCL-GAN for short), with attractive properties, i.e., no image domain limitation, no image resolution limitation, 25x lighter than SOTA, and 5x faster than SOTA. To guarantee the lightweight property and performance superiority, two new collaboration units called the lightweight domain conversion unit (LDCU) and the parameter-free frequency-domain contrastive unit (PFCU) are designed. LDCU mainly implements inter-domain conversion in a lightweight manner. PFCU further explores the similarity measure, external difference and internal connection between blurred-domain and sharp-domain images in the frequency domain, without involving extra parameters. Extensive experiments on several image datasets demonstrate the effectiveness of our FCL-GAN in terms of performance, model size and inference time.