Li Wang

Northeast Normal University

Transfer Learning-based Channel Estimation in Orthogonal Frequency Division Multiplexing Systems Using Data-nulling Superimposed Pilots

May 28, 2022

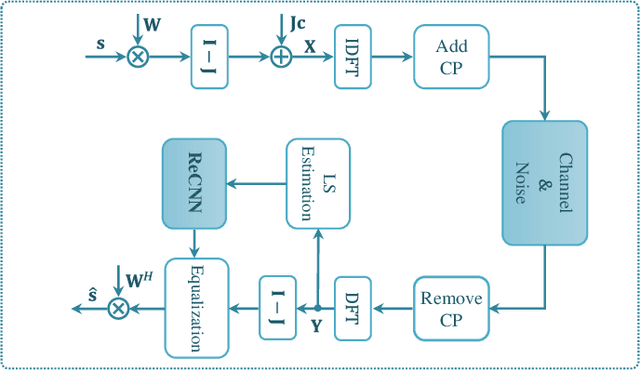

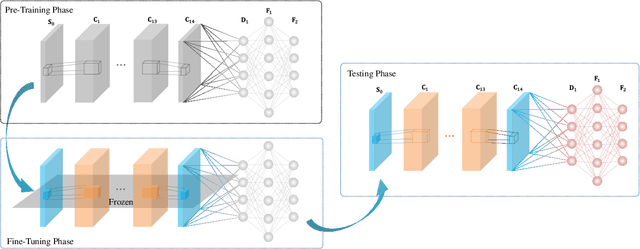

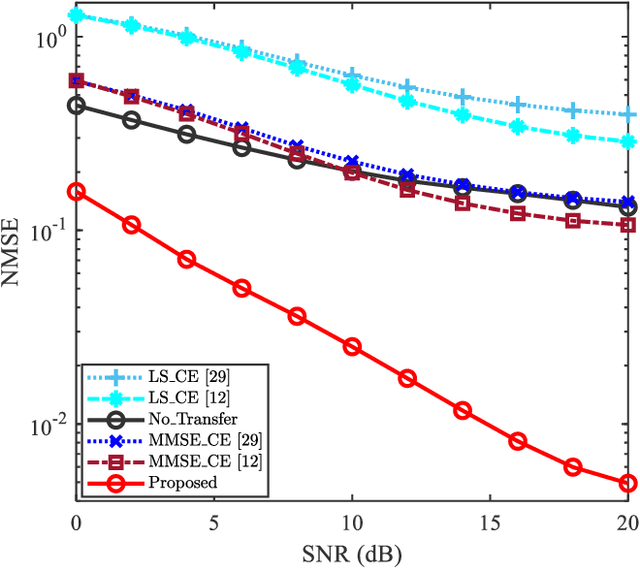

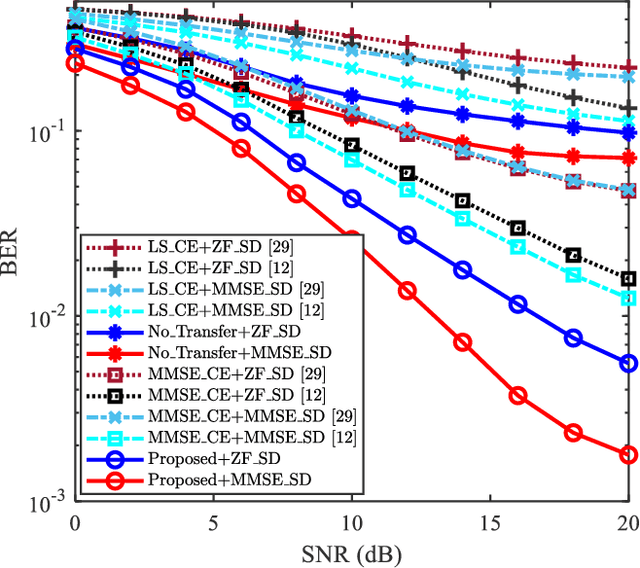

Abstract: Data-nulling superimposed pilot (DNSP) effectively alleviates the superimposed interference of superimposed training (ST)-based channel estimation (CE) in orthogonal frequency division multiplexing (OFDM) systems, but it still faces challenges in estimation accuracy and computational complexity. Building on promising deep learning (DL) solutions for the physical layer of wireless communication, we fuse DNSP and DL to tackle these challenges in this paper. Nevertheless, when the wireless scenario changes, the model mismatch of DL degrades CE performance, and the network must be retrained. To address this issue, a lightweight transfer learning (TL) network is further proposed for the DL-based DNSP scheme, yielding a TL-based CE in OFDM systems. Specifically, based on the linear receiver, least squares estimation is first employed to extract the initial features of CE. With the extracted features, we develop a convolutional neural network (CNN) to fuse the solutions of DL-based CE and the CE of DNSP. Finally, a lightweight TL network is constructed to address the model mismatch. In this way, a novel CE network for the DNSP scheme in OFDM systems is constructed, which improves estimation accuracy and alleviates the model mismatch. The experimental results show that in all signal-to-noise-ratio (SNR) regions, the proposed method achieves lower normalized mean squared error (NMSE) than the existing DNSP schemes with minimum mean square error (MMSE)-based CE. For example, when the SNR is 0 decibels (dB), the proposed scheme achieves an NMSE similar to that of the MMSE-based CE scheme at 20 dB, thereby significantly improving the estimation accuracy of CE. In addition, relative to the existing schemes, the improvement of the proposed scheme is robust to parameter variations.
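
As an illustrative aside on the least squares step and the NMSE metric named in this abstract, the following minimal Python sketch assumes a simple per-subcarrier model `y = h * x + noise` on pilot subcarriers whose data has been nulled. The function names and toy values are hypothetical; the sketch does not reproduce the paper's receiver, CNN, or TL network.

```python
import math

def ls_estimate(received, pilots):
    """Per-subcarrier least squares estimate: h_hat[k] = y[k] / x[k]."""
    return [y / x for y, x in zip(received, pilots)]

def nmse(h_est, h_true):
    """Normalized mean squared error between estimated and true channels."""
    err = sum(abs(e - t) ** 2 for e, t in zip(h_est, h_true))
    ref = sum(abs(t) ** 2 for t in h_true)
    return err / ref

def to_db(value):
    """Convert a linear power ratio to decibels."""
    return 10.0 * math.log10(value)

# Toy values (assumptions for illustration only).
h_true = [1 + 1j, 0.5 - 0.5j]      # true channel on two pilot subcarriers
pilots = [1 + 0j, -1 + 0j]         # known pilot symbols
noise = [0.1 + 0j, 0j]             # additive noise samples
received = [h * x + n for h, x, n in zip(h_true, pilots, noise)]

h_ls = ls_estimate(received, pilots)
print(nmse(h_ls, h_true), to_db(nmse(h_ls, h_true)))
```

In a learning-based scheme of the kind the abstract describes, an estimate like `h_ls` would serve as the initial feature passed to the network rather than as the final result.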

NTIRE 2022 Challenge on Efficient Super-Resolution: Methods and Results

May 11, 2022

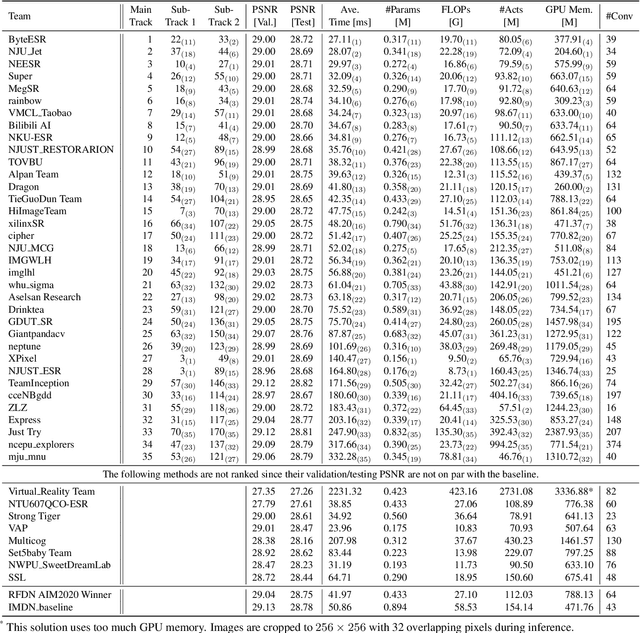

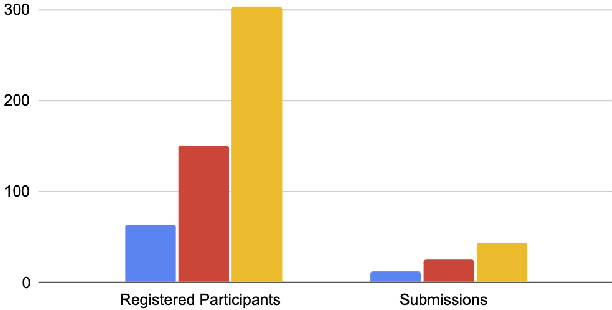

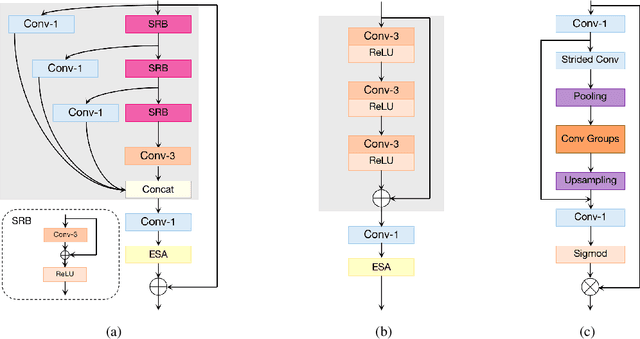

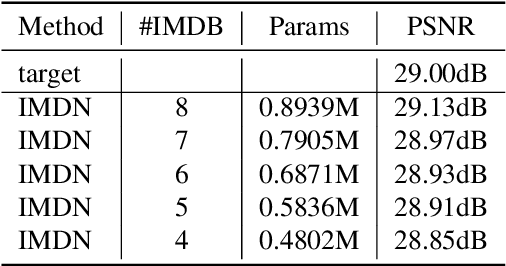

Abstract: This paper reviews the NTIRE 2022 challenge on efficient single image super-resolution with a focus on the proposed solutions and results. The task of the challenge was to super-resolve an input image with a magnification factor of $\times$4 based on pairs of low and corresponding high resolution images. The aim was to design a network for single image super-resolution that improved efficiency, measured according to several metrics including runtime, parameters, FLOPs, activations, and memory consumption, while maintaining a PSNR of at least 29.00 dB on the DIV2K validation set. IMDN is set as the baseline for efficiency measurement. The challenge had 3 tracks: the main track (runtime), sub-track one (model complexity), and sub-track two (overall performance). In the main track, the practical runtime performance of the submissions was evaluated. The ranking of the teams was determined directly by the absolute value of the average runtime on the validation set and test set. In sub-track one, the number of parameters and FLOPs were considered, and the individual rankings of the two metrics were summed to determine a final ranking in this track. In sub-track two, all five metrics mentioned in the description of the challenge, namely runtime, parameter count, FLOPs, activations, and memory consumption, were considered. Similar to sub-track one, the rankings of the five metrics were summed to determine a final ranking. The challenge had 303 registered participants, and 43 teams made valid submissions. These submissions gauge the state-of-the-art in efficient single image super-resolution.
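
The rank-sum rule described for sub-track one can be sketched as follows. This is a minimal illustration under stated assumptions: the team names and metric values are invented, lower values are treated as better, and no tie-breaking rule is modeled because the abstract does not state one.

```python
def rank_sum(teams, metrics):
    """Sum each team's per-metric rank (1 = best, lower value is better)."""
    totals = {name: 0 for name in teams}
    for metric in metrics:
        ordered = sorted(teams, key=lambda name: teams[name][metric])
        for position, name in enumerate(ordered, start=1):
            totals[name] += position
    # Final ranking: smallest summed rank first.
    return sorted(totals.items(), key=lambda item: item[1])

# Hypothetical entries, not actual challenge results.
entries = {
    "team_a": {"params": 0.30, "flops": 20.0},
    "team_b": {"params": 0.25, "flops": 30.0},
    "team_c": {"params": 0.40, "flops": 25.0},
}

final = rank_sum(entries, ["params", "flops"])
print(final)
```

The same function would cover sub-track two by passing all five metric names, provided each entry carries those fields.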

ImpDet: Exploring Implicit Fields for 3D Object Detection

Mar 31, 2022

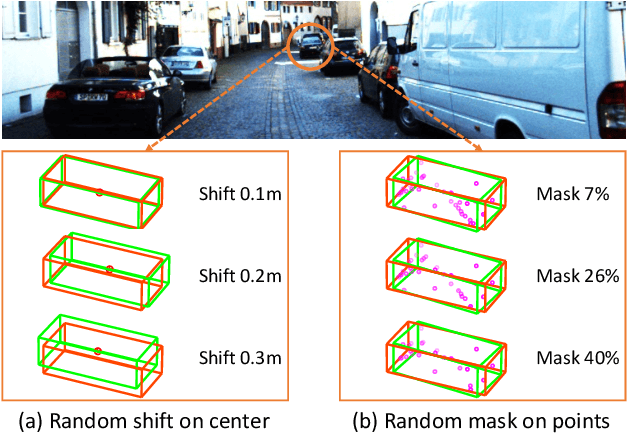

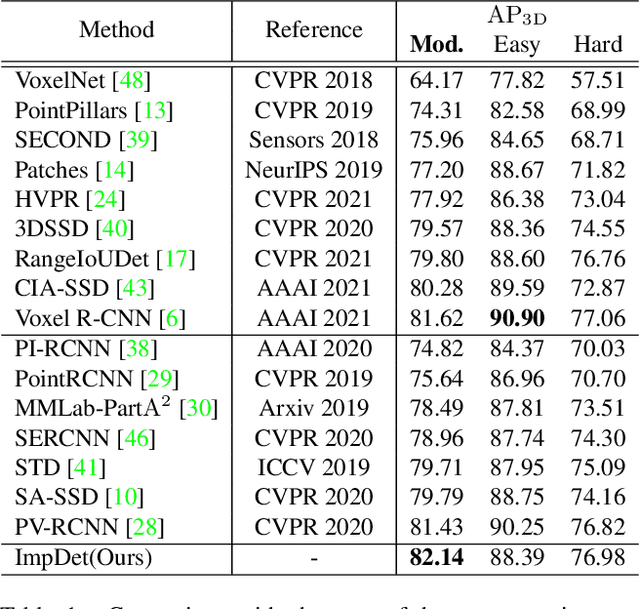

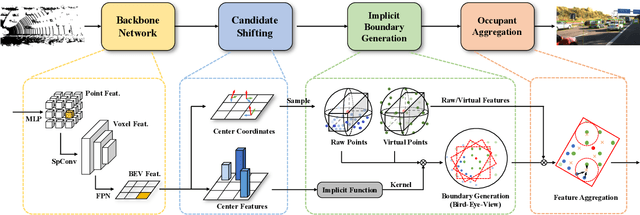

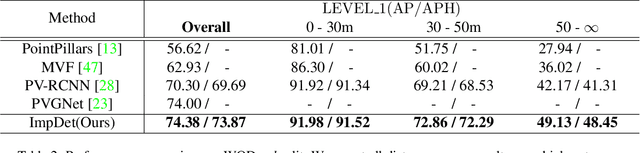

Abstract: Conventional 3D object detection approaches concentrate on bounding box representation learning with several parameters, i.e., localization, dimension, and orientation. Despite its popularity and universality, such a straightforward paradigm is sensitive to slight numerical deviations, especially in localization. By exploiting the property that point clouds are naturally captured on the surface of objects along with accurate location and intensity information, we introduce a new perspective that views bounding box regression as an implicit function. This leads to our proposed framework, termed Implicit Detection or ImpDet, which leverages implicit field learning for 3D object detection. Our ImpDet assigns specific values to points in different local 3D spaces, so that high-quality boundaries can be generated by classifying points inside or outside the boundary. To solve the problem of sparsity on the object surface, we further present a simple yet efficient virtual sampling strategy to not only fill the empty region, but also learn rich semantic features to help refine the boundaries. Extensive experimental results on KITTI and Waymo benchmarks demonstrate the effectiveness and robustness of unifying implicit fields into object detection.
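
To make the idea of classifying points as inside or outside a boundary concrete, here is a minimal Python sketch that labels points against a single yaw-rotated 3D box. The box parameterization (center, length/width/height, yaw about the vertical axis) and the function names are assumptions for illustration; this is a plain geometric test, not ImpDet's learned implicit function.

```python
import math

def inside_box(point, center, dims, yaw):
    """Return True if `point` lies inside a box rotated by `yaw` about z."""
    dx, dy, dz = (p - c for p, c in zip(point, center))
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    # Rotate the offset by -yaw to express it in the box's local frame.
    local_x = cos_y * dx + sin_y * dy
    local_y = -sin_y * dx + cos_y * dy
    length, width, height = dims
    return (abs(local_x) <= length / 2
            and abs(local_y) <= width / 2
            and abs(dz) <= height / 2)

def label_points(points, center, dims, yaw):
    """Assign 1 to points inside the box and 0 to points outside."""
    return [1 if inside_box(p, center, dims, yaw) else 0 for p in points]

# Toy point cloud and box (hypothetical values).
points = [(0, 0, 0), (1.5, 0, 0), (0, 1.5, 0), (0, 0, 5)]
print(label_points(points, (0, 0, 0), (4, 2, 2), 0.0))
print(label_points(points, (0, 0, 0), (4, 2, 2), math.pi / 2))
```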

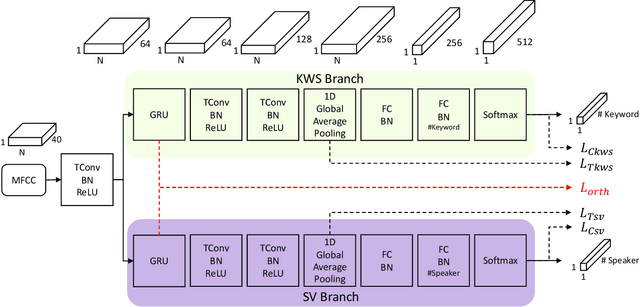

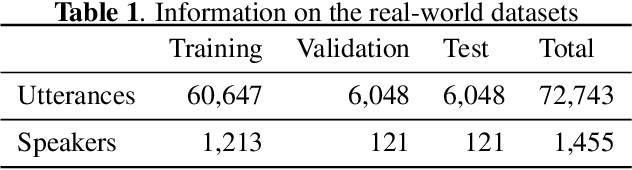

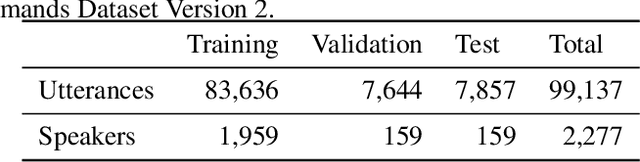

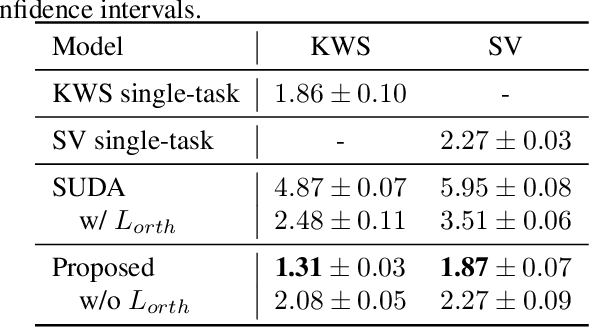

Learning Decoupling Features Through Orthogonality Regularization

Mar 31, 2022

Abstract: Keyword spotting (KWS) and speaker verification (SV) are two important tasks in speech applications. Research shows that the state-of-the-art KWS and SV models are trained independently using different datasets since they are expected to learn distinctive acoustic features. However, humans can distinguish language content and speaker identity simultaneously. Motivated by this, we believe it is important to explore a method that can effectively extract common features while decoupling task-specific features. Bearing this in mind, a two-branch deep network (KWS branch and SV branch) with the same network structure is developed, and a novel decoupling feature learning method is proposed to improve the performance of KWS and SV simultaneously, where speaker-invariant keyword representations and keyword-invariant speaker representations are expected, respectively. Experiments are conducted on the Google Speech Commands Dataset (GSCD). The results demonstrate that the orthogonality regularization helps the network achieve state-of-the-art EER of 1.31% and 1.87% on KWS and SV, respectively.
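
One common way to express an orthogonality penalty between two branches is the mean squared cosine similarity of paired feature vectors, which is zero when every pair is orthogonal. The sketch below uses that form as an assumption; the abstract does not give the paper's exact regularizer, and the function names are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def orthogonality_penalty(kws_feats, sv_feats):
    """Mean squared cosine similarity over paired KWS/SV feature vectors."""
    pairs = list(zip(kws_feats, sv_feats))
    return sum(cosine(k, s) ** 2 for k, s in pairs) / len(pairs)

# Toy features (hypothetical): one batch with orthogonal pairs, one with
# identical pairs.
decoupled = orthogonality_penalty([[1, 0], [0, 2]], [[0, 3], [1, 0]])
entangled = orthogonality_penalty([[1, 2]], [[1, 2]])
print(decoupled, entangled)
```

In training, a term like this would typically be added to the task losses with a weighting coefficient, so that the two branches are pushed toward decoupled representations.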

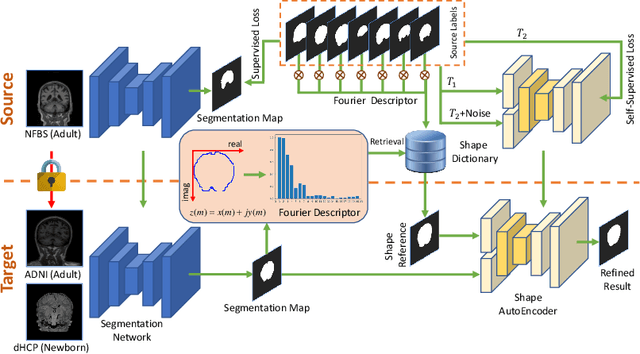

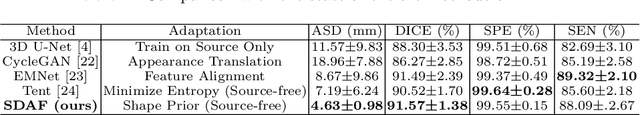

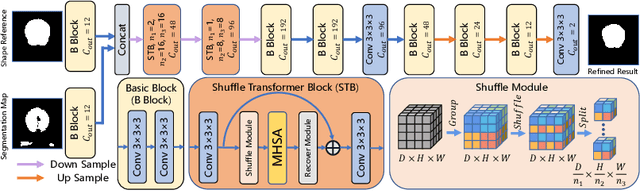

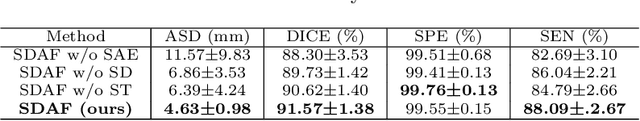

Source-free Domain Adaptation for Multi-site and Lifespan Brain Skull Stripping

Mar 11, 2022

Abstract: Skull stripping is a crucial prerequisite step in the analysis of brain magnetic resonance (MR) images. Although many excellent works or tools have been proposed, they suffer from low generalization capability. For instance, a model trained on a dataset with specific imaging parameters (source domain) cannot be applied well to other datasets with different imaging parameters (target domain). In particular, for lifespan datasets, a model trained on an adult dataset is not applicable to an infant dataset due to the large domain difference. To address this issue, numerous domain adaptation (DA) methods have been proposed to align the extracted features between the source and target domains, requiring concurrent access to the input images of both domains. Unfortunately, sharing the images is problematic due to privacy concerns. In this paper, we design a source-free domain adaptation framework (SDAF) for multi-site and lifespan skull stripping that can accomplish domain adaptation without access to source domain images. Our method only needs to share the source labels as shape dictionaries and the weights trained on the source data, without disclosing private information from source domain subjects. To deal with the domain shift between multi-site lifespan datasets, we take advantage of the brain shape prior, which is invariant to imaging parameters and ages. Experiments demonstrate that our framework can significantly outperform the state-of-the-art methods on multi-site lifespan datasets.

Computer-Aided Road Inspection: Systems and Algorithms

Mar 04, 2022

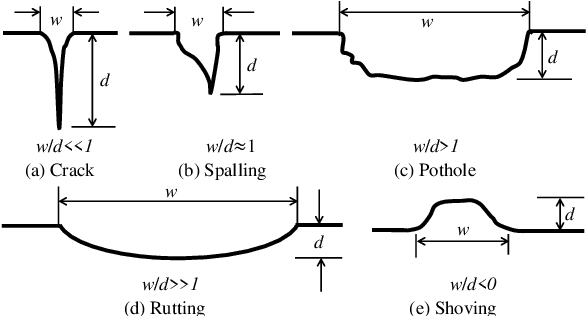

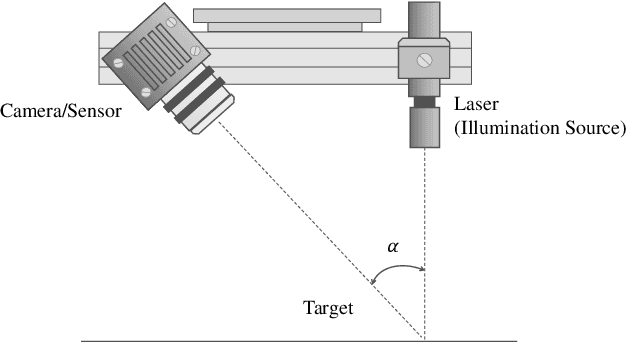

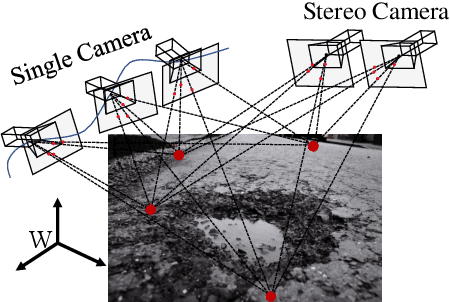

Abstract: Road damage is an inconvenience and a safety hazard, severely affecting vehicle condition, driving comfort, and traffic safety. The traditional manual visual road inspection process is pricey, dangerous, exhausting, and cumbersome. Also, manual road inspection results are qualitative and subjective, as they depend entirely on the inspector's personal experience. Therefore, there is an ever-increasing need for automated road inspection systems. This chapter first compares the five most common road damage types. Then, 2-D/3-D road imaging systems are discussed. Finally, state-of-the-art machine vision and intelligence-based road damage detection algorithms are introduced.

A Framework for Multi-stage Bonus Allocation in meal delivery Platform

Feb 22, 2022

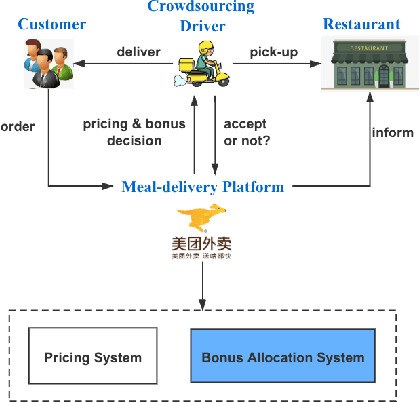

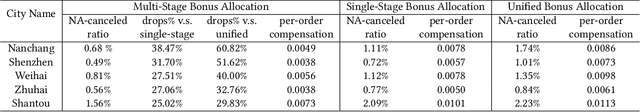

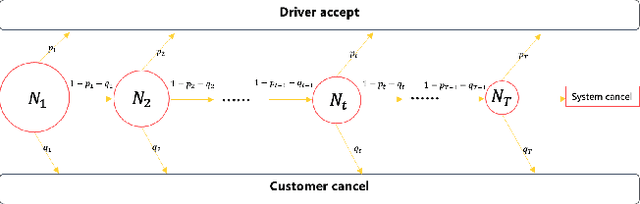

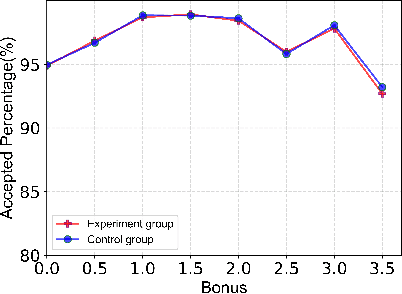

Abstract: Online meal delivery is undergoing explosive growth, as this service is becoming increasingly popular. A meal delivery platform aims to provide excellent and stable services for customers and restaurants. However, in reality, several hundred thousand orders are canceled per day on the Meituan meal delivery platform because they are not accepted by the crowdsourcing drivers. The cancellation of orders is highly detrimental to the customer's repurchase rate and the reputation of the Meituan meal delivery platform. To solve this problem, a certain amount of specific funds is provided by Meituan's business managers to encourage the crowdsourcing drivers to accept more orders. To make better use of the funds, in this work, we propose a framework to deal with the multi-stage bonus allocation problem for a meal delivery platform. The objective of this framework is to maximize the number of accepted orders within a limited bonus budget. This framework consists of a semi-black-box acceptance probability model, a Lagrangian dual-based dynamic programming algorithm, and an online allocation algorithm. The semi-black-box acceptance probability model is employed to forecast the relationship between the bonus allocated to an order and its acceptance probability; the Lagrangian dual-based dynamic programming algorithm calculates the empirical Lagrangian multiplier for each allocation stage offline based on the historical data set; and the online allocation algorithm uses the results obtained in the offline part to calculate a proper delivery bonus for each order. To verify the effectiveness and efficiency of our framework, both offline experiments on a real-world data set and online A/B tests on the Meituan meal delivery platform are conducted. Our results show that, using the proposed framework, total order cancellations can be decreased by more than 25% in practice.
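
The interplay between an acceptance probability model, a Lagrangian multiplier, and per-order bonus selection can be sketched in a simplified single-stage form. Everything below is an assumption for illustration: the logistic acceptance model, its parameters, the discrete bonus candidates, and the bisection search for the multiplier, which stands in for the paper's dynamic programming procedure.

```python
import math

def acceptance_prob(bonus, base, sensitivity):
    """Hypothetical logistic model of acceptance probability vs. bonus."""
    return 1.0 / (1.0 + math.exp(-(base + sensitivity * bonus)))

def best_bonus(order, lam, candidates):
    """Pick the bonus maximizing p(bonus) - lam * bonus for one order."""
    base, sensitivity = order
    return max(candidates,
               key=lambda b: acceptance_prob(b, base, sensitivity) - lam * b)

def allocate(orders, budget, candidates, iters=40):
    """Bisect on the multiplier so that total bonus stays within budget.

    Assumes `candidates` contains 0 and at least one positive bonus.
    """
    lo, hi = 0.0, 1.0 / min(b for b in candidates if b > 0)
    for _ in range(iters):
        mid = (lo + hi) / 2
        spend = sum(best_bonus(o, mid, candidates) for o in orders)
        if spend > budget:
            lo = mid   # multiplier too small: over budget
        else:
            hi = mid   # feasible: try a smaller multiplier
    return hi, [best_bonus(o, hi, candidates) for o in orders]

# Toy orders as (base, sensitivity) pairs; values are invented.
orders = [(-2.0, 0.8), (-1.0, 0.3), (0.5, 0.1)]
candidates = [0, 1, 2, 3, 4]
lam, bonuses = allocate(orders, budget=5, candidates=candidates)
print(lam, bonuses)
```

Once a multiplier has been fixed offline, the online step reduces to calling `best_bonus` per incoming order, which mirrors the offline/online split the abstract describes.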

Seeing is Living? Rethinking the Security of Facial Liveness Verification in the Deepfake Era

Feb 22, 2022

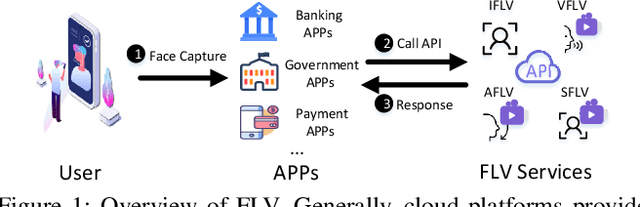

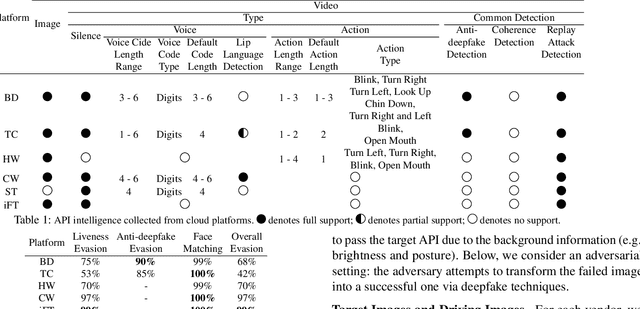

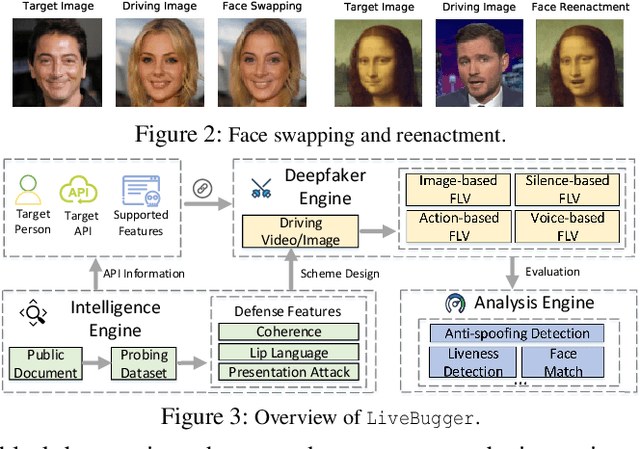

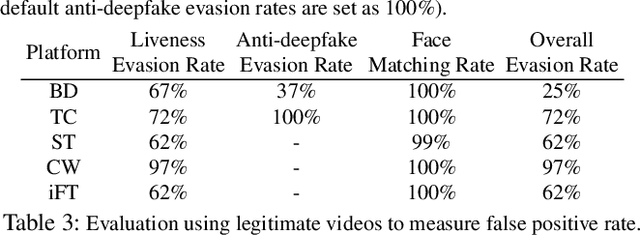

Abstract: Facial Liveness Verification (FLV) is widely used for identity authentication in many security-sensitive domains and offered as Platform-as-a-Service (PaaS) by leading cloud vendors. Yet, with the rapid advances in synthetic media techniques (e.g., deepfake), the security of FLV is facing unprecedented challenges, about which little is known thus far. To bridge this gap, in this paper, we conduct the first systematic study on the security of FLV in real-world settings. Specifically, we present LiveBugger, a new deepfake-powered attack framework that enables customizable, automated security evaluation of FLV. Leveraging LiveBugger, we perform a comprehensive empirical assessment of representative FLV platforms, leading to a set of interesting findings. For instance, most FLV APIs do not use anti-deepfake detection; even for those with such defenses, their effectiveness is concerning (e.g., it may detect high-quality synthesized videos but fail to detect low-quality ones). We then conduct an in-depth analysis of the factors impacting the attack performance of LiveBugger: a) the bias (e.g., gender or race) in FLV can be exploited to select victims; b) adversarial training makes deepfake more effective at bypassing FLV; c) the input quality has a varying influence on the ability of different deepfake techniques to bypass FLV. Based on these findings, we propose a customized, two-stage approach that can boost the attack success rate by up to 70%. Further, we run proof-of-concept attacks on several representative applications of FLV (i.e., the clients of FLV APIs) to illustrate the practical implications: due to the vulnerability of the APIs, many downstream applications are vulnerable to deepfake. Finally, we discuss potential countermeasures to improve the security of FLV. Our findings have been confirmed by the corresponding vendors.

Exploiting Data Sparsity in Secure Cross-Platform Social Recommendation

Feb 15, 2022

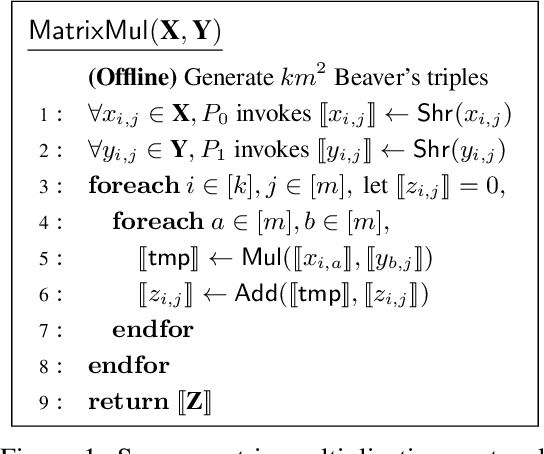

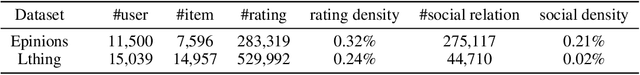

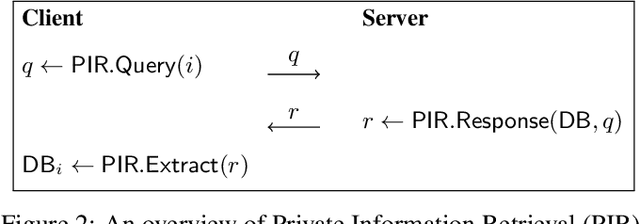

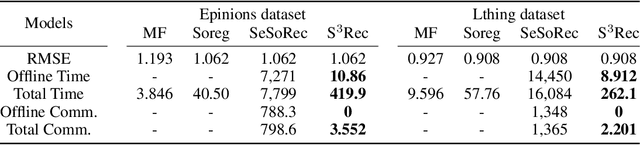

Abstract: Social recommendation has shown promising improvements over traditional systems since it leverages social correlation data as an additional input. Most existing work assumes that all data are available to the recommendation platform. However, in practice, user-item interaction data (e.g., rating) and user-user social data are usually generated by different platforms, and both contain sensitive information. Therefore, "How to perform secure and efficient social recommendation across different platforms, where the data are highly sparse in nature" remains an important challenge. In this work, we bring secure computation techniques into social recommendation, and propose S3Rec, a sparsity-aware secure cross-platform social recommendation framework. As a result, our model can not only improve the recommendation performance of the rating platform by incorporating the sparse social data on the social platform, but also protect the data privacy of both platforms. Moreover, to further improve model training efficiency, we propose two secure sparse matrix multiplication protocols based on homomorphic encryption and private information retrieval. Our experiments on two benchmark datasets demonstrate the effectiveness of S3Rec.

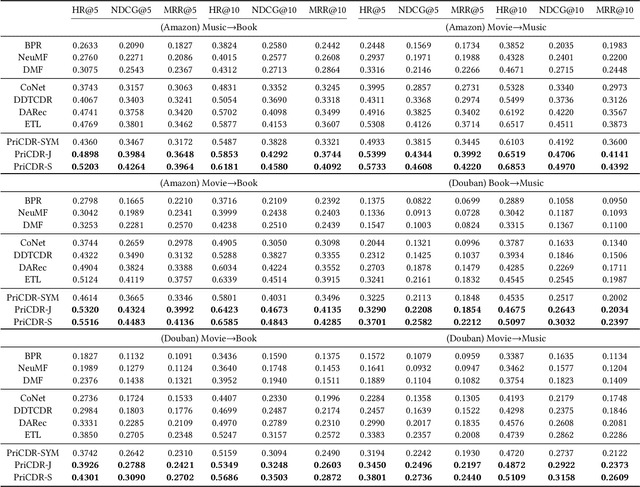

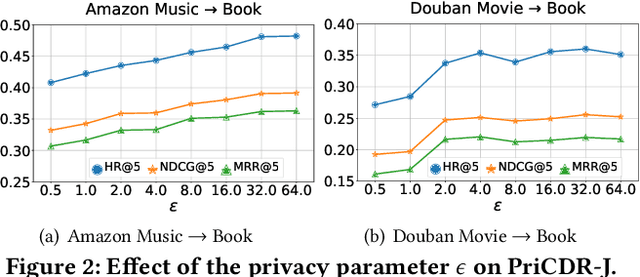

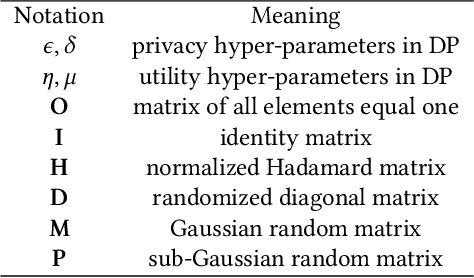

Differential Private Knowledge Transfer for Privacy-Preserving Cross-Domain Recommendation

Feb 10, 2022

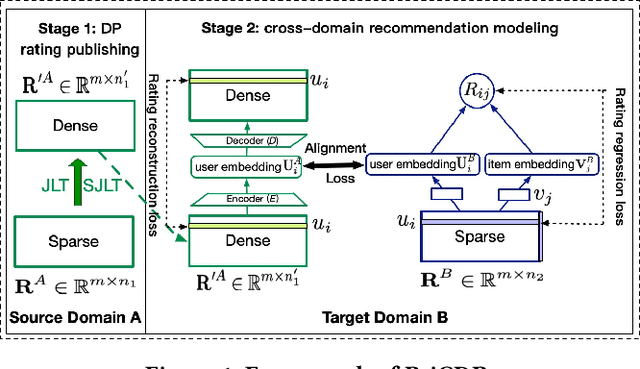

Abstract: Cross Domain Recommendation (CDR) has been widely studied to alleviate the cold-start and data sparsity problems that commonly exist in recommender systems. CDR models can improve the recommendation performance of a target domain by leveraging the data of other source domains. However, most existing CDR models assume information can directly 'transfer across the bridge', ignoring the privacy issues. To address the privacy concern in CDR, in this paper, we propose a novel two-stage privacy-preserving CDR framework (PriCDR). In the first stage, we propose two methods, i.e., Johnson-Lindenstrauss Transform (JLT) based and Sparse-aware JLT (SJLT) based, to publish the rating matrix of the source domain using differential privacy. We theoretically analyze the privacy and utility of our proposed differential privacy based rating publishing methods. In the second stage, we propose a novel heterogeneous CDR model (HeteroCDR), which uses a deep auto-encoder and a deep neural network to model the published source rating matrix and the target rating matrix, respectively. In this way, PriCDR can not only protect the data privacy of the source domain, but also alleviate the data sparsity of the source domain. We conduct experiments on two benchmark datasets, and the results demonstrate the effectiveness of our proposed PriCDR and HeteroCDR.
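
For readers unfamiliar with the Johnson-Lindenstrauss Transform, the sketch below shows only its core operation: multiplying each row of a matrix by a random Gaussian matrix scaled by `1 / sqrt(k)` to reduce it from `d` to `k` dimensions. The function names, seed, and toy rating matrix are hypothetical, and the sketch makes no privacy claim; the conditions under which such a projection satisfies differential privacy are the subject of the paper's analysis and are not reproduced here.

```python
import math
import random

def jl_matrix(d, k, seed=0):
    """Random d x k Gaussian projection matrix scaled by 1 / sqrt(k)."""
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(k)
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(k)] for _ in range(d)]

def project(rows, matrix):
    """Project each length-d row to k dimensions: row @ matrix."""
    k = len(matrix[0])
    return [[sum(row[i] * matrix[i][j] for i in range(len(row)))
             for j in range(k)]
            for row in rows]

# Toy sparse rating matrix (invented values): 3 users x 6 items.
ratings = [
    [5, 0, 0, 3, 0, 0],
    [0, 4, 0, 0, 0, 1],
    [0, 0, 2, 0, 5, 0],
]

transform = jl_matrix(d=6, k=3)
published = project(ratings, transform)
print(published)
```

A sparse-aware variant would replace the dense Gaussian matrix with a sparse random matrix so that the projection cost scales with the number of non-zero ratings.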
