Zhihao Zhang

Steering LLMs via Scalable Interactive Oversight

Feb 04, 2026

Abstract: As Large Language Models increasingly automate complex, long-horizon tasks such as vibe coding, a supervision gap has emerged. While models excel at execution, users often struggle to guide them effectively due to insufficient domain expertise, the difficulty of articulating precise intent, and the inability to reliably validate complex outputs. This presents a critical challenge in scalable oversight: enabling humans to responsibly steer AI systems on tasks that surpass their own ability to specify or verify. To tackle this, we propose Scalable Interactive Oversight, a framework that decomposes complex intent into a recursive tree of manageable decisions to amplify human supervision. Rather than relying on open-ended prompting, our system elicits low-burden feedback at each node and recursively aggregates these signals into precise global guidance. Validated on a web development task, our framework enables non-experts to produce expert-level Product Requirement Documents, achieving a 54% improvement in alignment. Crucially, we demonstrate that this framework can be optimized via Reinforcement Learning using only online user feedback, offering a practical pathway for maintaining human control as AI scales.
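The abstract does not spell out how the decision tree is built or how feedback is aggregated, so the following is only a minimal sketch of the general idea: ask a low-burden multiple-choice question at each leaf and fold the answers upward into one guidance string. The `DecisionNode` class, the `collect_guidance` function, and the `choose` callback are all hypothetical names introduced for illustration, not part of the paper.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class DecisionNode:
    """One node of a hypothetical intent tree."""
    topic: str
    options: List[str] = field(default_factory=list)        # choices shown at a leaf
    children: List["DecisionNode"] = field(default_factory=list)


def collect_guidance(node: DecisionNode,
                     choose: Callable[[str, List[str]], str]) -> str:
    """Recursively gather feedback and merge it into one guidance string.

    `choose` stands in for the user: it receives a topic and its options
    and returns the selected option.
    """
    if not node.children:
        # Leaf: a single low-burden decision.
        return f"{node.topic}: {choose(node.topic, node.options)}"
    # Internal node: aggregate the guidance produced by each subtree.
    parts = [collect_guidance(child, choose) for child in node.children]
    return f"{node.topic} [" + "; ".join(parts) + "]"


tree = DecisionNode("Web app", children=[
    DecisionNode("Auth", options=["email", "OAuth"]),
    DecisionNode("Layout", options=["single page", "multi page"]),
])
# A stand-in user who always picks the first option.
guidance = collect_guidance(tree, lambda topic, options: options[0])
```

In a real system the `choose` callback would be an interactive prompt, and the aggregation step would likely be model-driven rather than simple string joining.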

Aligning Microscopic Vehicle and Macroscopic Traffic Statistics: Reconstructing Driving Behavior from Partial Data

Jan 29, 2026

Abstract: A driving algorithm that aligns with good human driving practices, or at the very least collaborates effectively with human drivers, is crucial for developing safe and efficient autonomous vehicles. In practice, two main approaches are commonly adopted: (i) supervised or imitation learning, which requires comprehensive naturalistic driving data capturing all states that influence a vehicle's decisions and corresponding actions, and (ii) reinforcement learning (RL), where the simulated driving environment either matches or is intentionally more challenging than real-world conditions. Both methods depend on high-quality observations of real-world driving behavior, which are often difficult and costly to obtain. State-of-the-art sensors on individual vehicles can gather microscopic data, but they lack context about the surrounding conditions. Conversely, roadside sensors can capture traffic flow and other macroscopic characteristics, but they cannot associate this information with individual vehicles on a microscopic level. Motivated by this complementarity, we propose a framework that reconstructs unobserved microscopic states from macroscopic observations, using microscopic data to anchor observed vehicle behaviors, and learns a shared policy whose behavior is microscopically consistent with the partially observed trajectories and actions and macroscopically aligned with target traffic statistics when deployed population-wide. Such constrained and regularized policies promote realistic flow patterns and safe coordination with human drivers at scale.

Health-ORSC-Bench: A Benchmark for Measuring Over-Refusal and Safety Completion in Health Context

Jan 25, 2026

Abstract: Safety alignment in Large Language Models is critical for healthcare; however, reliance on binary refusal boundaries often results in over-refusal of benign queries or unsafe compliance with harmful ones. While existing benchmarks measure these extremes, they fail to evaluate Safe Completion: the model's ability to maximise helpfulness on dual-use or borderline queries by providing safe, high-level guidance without crossing into actionable harm. We introduce Health-ORSC-Bench, the first large-scale benchmark designed to systematically measure Over-Refusal and Safe Completion quality in healthcare. Comprising 31,920 benign boundary prompts across seven health categories (e.g., self-harm, medical misinformation), our framework uses an automated pipeline with human validation to test models at varying levels of intent ambiguity. We evaluate 30 state-of-the-art LLMs, including GPT-5 and Claude-4, revealing a significant tension: safety-optimised models frequently refuse up to 80% of "Hard" benign prompts, while domain-specific models often sacrifice safety for utility. Our findings demonstrate that model family and size significantly influence calibration: larger frontier models (e.g., GPT-5, Llama-4) exhibit "safety-pessimism" and higher over-refusal than smaller or MoE-based counterparts (e.g., Qwen-3-Next), highlighting that current LLMs struggle to balance refusal and compliance. Health-ORSC-Bench provides a rigorous standard for calibrating the next generation of medical AI assistants toward nuanced, safe, and helpful completions. The code and data will be released upon acceptance. Warning: Some content may be toxic or undesired.

Locate, Steer, and Improve: A Practical Survey of Actionable Mechanistic Interpretability in Large Language Models

Jan 20, 2026

Abstract: Mechanistic Interpretability (MI) has emerged as a vital approach to demystify the opaque decision-making of Large Language Models (LLMs). However, existing reviews primarily treat MI as an observational science, summarizing analytical insights while lacking a systematic framework for actionable intervention. To bridge this gap, we present a practical survey structured around the pipeline: "Locate, Steer, and Improve." We formally categorize Localizing (diagnosis) and Steering (intervention) methods based on specific Interpretable Objects to establish a rigorous intervention protocol. Furthermore, we demonstrate how this framework enables tangible improvements in Alignment, Capability, and Efficiency, effectively operationalizing MI as an actionable methodology for model optimization. The curated paper list of this work is available at https://github.com/rattlesnakey/Awesome-Actionable-MI-Survey.

Mirage Persistent Kernel: A Compiler and Runtime for Mega-Kernelizing Tensor Programs

Dec 22, 2025

Abstract: We introduce Mirage Persistent Kernel (MPK), the first compiler and runtime system that automatically transforms multi-GPU model inference into a single high-performance megakernel. MPK introduces an SM-level graph representation that captures data dependencies at the granularity of individual streaming multiprocessors (SMs), enabling cross-operator software pipelining, fine-grained kernel overlap, and other previously infeasible GPU optimizations. The MPK compiler lowers tensor programs into highly optimized SM-level task graphs and generates optimized CUDA implementations for all tasks, while the MPK in-kernel parallel runtime executes these tasks within a single megakernel using decentralized scheduling across SMs. Together, these components provide end-to-end kernel fusion with minimal developer effort, while preserving the flexibility of existing programming models. Our evaluation shows that MPK significantly outperforms existing kernel-per-operator LLM serving systems by reducing end-to-end inference latency by up to 1.7x, pushing LLM inference performance close to hardware limits. MPK is publicly available at https://github.com/mirage-project/mirage.

Learning High-Quality Initial Noise for Single-View Synthesis with Diffusion Models

Dec 18, 2025

Abstract: Single-view novel view synthesis (NVS) models based on diffusion models have recently attracted increasing attention, as they can generate a series of novel view images from a single image prompt and camera pose information as conditions. It has been observed that in diffusion models, certain high-quality initial noise patterns lead to better generation results than others. However, there remains a lack of dedicated learning frameworks that enable NVS models to learn such high-quality noise. To obtain high-quality initial noise from random Gaussian noise, we make the following contributions. First, we design a discretized Euler inversion method to inject image semantic information into random noise, thereby constructing paired datasets of random and high-quality noise. Second, we propose a learning framework based on an encoder-decoder network (EDN) that directly transforms random noise into high-quality noise. Experiments demonstrate that the proposed EDN can be seamlessly plugged into various NVS models, such as SV3D and MV-Adapter, achieving significant performance improvements across multiple datasets. Code is available at: https://github.com/zhihao0512/EDN.

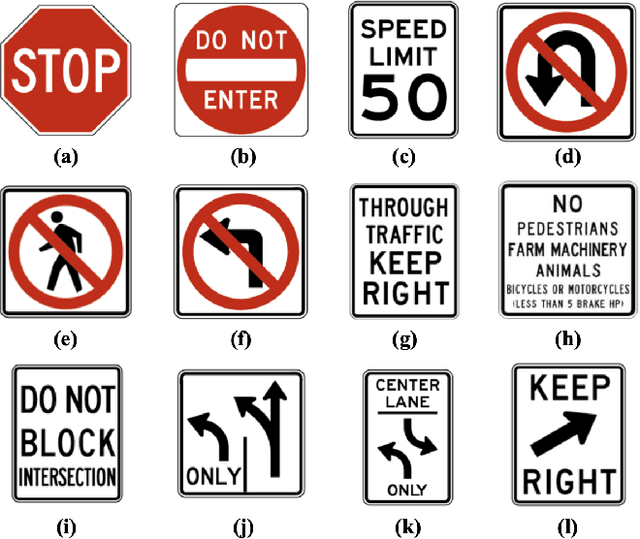

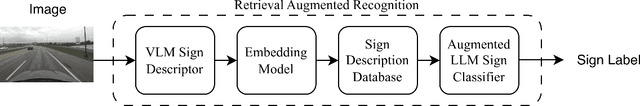

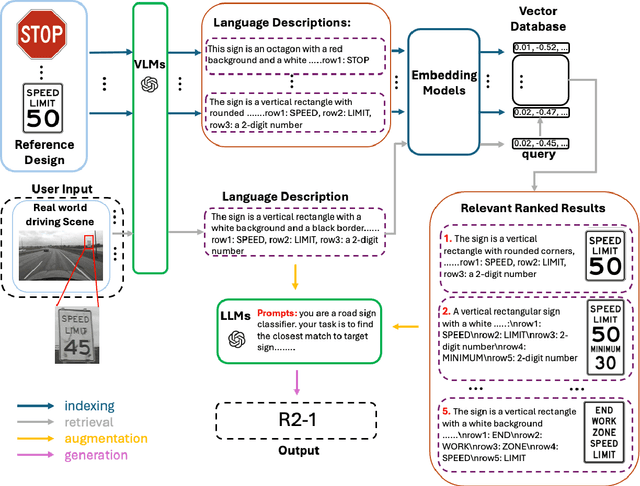

SignRAG: A Retrieval-Augmented System for Scalable Zero-Shot Road Sign Recognition

Dec 14, 2025

Abstract: Automated road sign recognition is a critical task for intelligent transportation systems, but traditional deep learning methods struggle with the sheer number of sign classes and the impracticality of creating exhaustive labeled datasets. This paper introduces a novel zero-shot recognition framework that adapts the Retrieval-Augmented Generation (RAG) paradigm to address this challenge. Our method first uses a Vision Language Model (VLM) to generate a textual description of a sign from an input image. This description is used to retrieve a small set of the most relevant sign candidates from a vector database of reference designs. Subsequently, a Large Language Model (LLM) reasons over the retrieved candidates to make a final, fine-grained recognition. We validate this approach on a comprehensive set of 303 regulatory signs from the Ohio MUTCD. Experimental results demonstrate the framework's effectiveness, achieving 95.58% accuracy on ideal reference images and 82.45% on challenging real-world road data. This work demonstrates the viability of RAG-based architectures for creating scalable and accurate systems for road sign recognition without task-specific training.
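The describe, retrieve, then reason pipeline can be sketched roughly as follows. This is an illustration under stated assumptions, not the paper's implementation: the VLM output is passed in as a plain string, a bag-of-words cosine similarity stands in for the vector database, and the `choose` callback stands in for the LLM reasoning step. The function names, the sign descriptions, and the three-entry reference set are hypothetical.

```python
import math
from collections import Counter
from typing import Callable, Dict, List


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real encoder)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(description: str, reference: Dict[str, str], k: int = 3) -> List[str]:
    """Return the k reference sign IDs most similar to the description."""
    query = embed(description)
    ranked = sorted(reference.items(),
                    key=lambda item: cosine(query, embed(item[1])),
                    reverse=True)
    return [sign_id for sign_id, _ in ranked[:k]]


def recognize(description: str, reference: Dict[str, str],
              choose: Callable[[str, List[str]], str], k: int = 3) -> str:
    """Retrieve candidates, then let `choose` (the LLM stand-in) pick one."""
    return choose(description, retrieve(description, reference, k))


# Illustrative reference entries; the descriptions are made up for this sketch.
reference_db = {
    "R1-1": "red octagon with white text stop",
    "R1-2": "red and white downward triangle yield",
    "R2-1": "white rectangle with black text speed limit",
}
vlm_description = "a red octagon sign with white letters reading stop"
top_candidates = retrieve(vlm_description, reference_db, k=2)
prediction = recognize(vlm_description, reference_db,
                       choose=lambda desc, candidates: candidates[0])
```

Because recognition depends only on the reference descriptions, new sign classes can be supported by adding entries to `reference_db` without retraining, which is the property the abstract highlights.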

AgentPRM: Process Reward Models for LLM Agents via Step-Wise Promise and Progress

Nov 11, 2025

Abstract: Despite rapid development, large language models (LLMs) still encounter challenges in multi-turn decision-making tasks (i.e., agent tasks) like web shopping and browser navigation, which require making a sequence of intelligent decisions based on environmental feedback. Previous work for LLM agents typically relies on elaborate prompt engineering or fine-tuning with expert trajectories to improve performance. In this work, we take a different perspective: we explore constructing process reward models (PRMs) to evaluate each decision and guide the agent's decision-making process. Unlike LLM reasoning, where each step is scored based on correctness, actions in agent tasks do not have clear-cut correctness. Instead, they should be evaluated based on their proximity to the goal and the progress they have made. Building on this insight, we propose a re-defined PRM for agent tasks, named AgentPRM, to capture both the interdependence between sequential decisions and their contribution to the final goal. This enables better progress tracking and exploration-exploitation balance. To scalably obtain labeled data for training AgentPRM, we employ a Temporal Difference-based (TD-based) estimation method combined with Generalized Advantage Estimation (GAE), which proves more sample-efficient than prior methods. Extensive experiments across different agentic tasks show that AgentPRM is over 8× more compute-efficient than baselines, and it demonstrates robust improvement when scaling up test-time compute. Moreover, we perform detailed analyses to show how our method works and offer more insights, e.g., applying AgentPRM to the reinforcement learning of LLM agents.
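For readers unfamiliar with GAE, the standard recursion is sketched below. The abstract does not state how AgentPRM turns these quantities into training labels, so the `step_targets` helper (advantage plus value as a per-step target) is an assumption made for illustration, as are the function names and default hyperparameters.

```python
from typing import List, Sequence


def gae_advantages(rewards: Sequence[float], values: Sequence[float],
                   gamma: float = 0.99, lam: float = 0.95) -> List[float]:
    """Standard Generalized Advantage Estimation.

    `values` holds one estimate per step plus a final bootstrap value,
    so len(values) must equal len(rewards) + 1.
    """
    if len(values) != len(rewards) + 1:
        raise ValueError("values must have exactly one more entry than rewards")
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        # One-step TD error at step t.
        delta = rewards[t] + gamma * values[t + 1] - values[t]
        # Exponentially weighted sum of this and all later TD errors.
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages


def step_targets(rewards: Sequence[float], values: Sequence[float],
                 gamma: float = 0.99, lam: float = 0.95) -> List[float]:
    """Hypothetical per-step labels: advantage plus the current value estimate."""
    advantages = gae_advantages(rewards, values, gamma, lam)
    return [a + v for a, v in zip(advantages, values[:-1])]


# A three-step episode with a sparse terminal reward.
episode_rewards = [0.0, 0.0, 1.0]
value_estimates = [0.5, 0.5, 0.5, 0.0]   # last entry is the bootstrap value
mc_like = step_targets(episode_rewards, value_estimates, gamma=1.0, lam=1.0)
td_like = step_targets(episode_rewards, value_estimates, gamma=1.0, lam=0.0)
```

Setting `lam=1.0` recovers Monte Carlo returns, while `lam=0.0` gives one-step TD targets; intermediate values trade variance against bias.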

Advancing Real-World Parking Slot Detection with Large-Scale Dataset and Semi-Supervised Baseline

Sep 16, 2025

Abstract: As automatic parking systems evolve, the accurate detection of parking slots has become increasingly critical. This study focuses on parking slot detection using surround-view cameras, which offer a comprehensive bird's-eye view of the parking environment. However, the current datasets are limited in scale, and the scenes they contain are seldom disrupted by real-world noise (e.g., lighting, occlusion). Moreover, manual data annotation is prone to errors and omissions due to the complexity of real-world conditions, significantly increasing the cost of annotating large-scale datasets. To address these issues, we first construct a large-scale parking slot detection dataset (named CRPS-D), which includes various lighting distributions, diverse weather conditions, and challenging parking slot variants. Compared with existing datasets, the proposed dataset has the largest data scale and contains a higher density of parking slots, particularly featuring more slanted parking slots. Additionally, we develop a semi-supervised baseline for parking slot detection, termed SS-PSD, to further improve performance by exploiting unlabeled data. To our knowledge, this is the first semi-supervised approach in parking slot detection, which is built on the teacher-student model with confidence-guided mask consistency and adaptive feature perturbation. Experimental results demonstrate the superiority of SS-PSD over the existing state-of-the-art (SoTA) solutions on both the proposed dataset and the existing dataset. Particularly, the more unlabeled data there is, the more significant the gains brought by our semi-supervised scheme. The relevant source code and the dataset have been made publicly available at https://github.com/zzh362/CRPS-D.

An Uncertainty-Weighted Decision Transformer for Navigation in Dense, Complex Driving Scenarios

Sep 16, 2025

Abstract: Autonomous driving in dense, dynamic environments requires decision-making systems that can exploit both spatial structure and long-horizon temporal dependencies while remaining robust to uncertainty. This work presents a novel framework that integrates multi-channel bird's-eye-view occupancy grids with transformer-based sequence modeling for tactical driving in complex roundabout scenarios. To address the imbalance between frequent low-risk states and rare safety-critical decisions, we propose the Uncertainty-Weighted Decision Transformer (UWDT). UWDT employs a frozen teacher transformer to estimate per-token predictive entropy, which is then used as a weight in the student model's loss function. This mechanism amplifies learning from uncertain, high-impact states while maintaining stability across common low-risk transitions. Experiments in a roundabout simulator, across varying traffic densities, show that UWDT consistently outperforms other baselines in terms of reward, collision rate, and behavioral stability. The results demonstrate that uncertainty-aware, spatial-temporal transformers can deliver safer and more efficient decision-making for autonomous driving in complex traffic environments.
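The entropy-weighting idea can be illustrated with a small, dependency-free sketch. The abstract says only that teacher entropy is used as a weight in the student's loss; the specific form `1 + alpha * entropy`, the normalization by total weight, and all function names below are assumptions made for this example.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one token's logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def entropy(logits: Sequence[float]) -> float:
    """Predictive entropy (in nats) of the softmax distribution."""
    return -sum(math.exp(lp) * lp for lp in log_softmax(logits))


def uncertainty_weighted_loss(student_logits: Sequence[Sequence[float]],
                              teacher_logits: Sequence[Sequence[float]],
                              targets: Sequence[int],
                              alpha: float = 1.0) -> float:
    """Cross-entropy averaged with per-token weights from teacher entropy.

    The teacher is treated as frozen: its logits only determine the weights.
    alpha = 0 reduces to the ordinary mean cross-entropy.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for s, t, y in zip(student_logits, teacher_logits, targets):
        weight = 1.0 + alpha * entropy(t)       # assumed weighting form
        cross_entropy = -log_softmax(s)[y]
        weighted_sum += weight * cross_entropy
        weight_total += weight
    return weighted_sum / weight_total


# Token 0: teacher is confident. Token 1: teacher is maximally uncertain.
student = [[2.0, 0.0], [0.0, 0.0]]
teacher = [[10.0, 0.0], [0.0, 0.0]]
actions = [0, 0]
plain_loss = uncertainty_weighted_loss(student, teacher, actions, alpha=0.0)
weighted_loss = uncertainty_weighted_loss(student, teacher, actions, alpha=1.0)
```

Here the second token has both the higher teacher entropy and the higher student loss, so the weighted loss exceeds the plain mean, which is the amplification effect the abstract describes for uncertain states.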
