Yiheng Liu

Prompt Engineering for Healthcare: Methodologies and Applications

Apr 28, 2023

Abstract:This review will introduce the latest advances in prompt engineering in the field of natural language processing (NLP) for the medical domain. First, we will provide a brief overview of the development of prompt engineering and emphasize its significant contributions to healthcare NLP applications such as question-answering systems, text summarization, and machine translation. With the continuous improvement of general large language models, the importance of prompt engineering in the healthcare domain is becoming increasingly prominent. The aim of this article is to provide useful resources and bridges for healthcare NLP researchers to better explore the application of prompt engineering in this field. We hope that this review can provide new ideas and inspire ample possibilities for research and application in medical NLP.

Summary of ChatGPT/GPT-4 Research and Perspective Towards the Future of Large Language Models

Apr 08, 2023

Abstract:This paper presents a comprehensive survey of ChatGPT and GPT-4, state-of-the-art large language models (LLMs) from the GPT series, and their prospective applications across diverse domains. Indeed, key innovations such as large-scale pre-training that captures knowledge across the entire world wide web, instruction fine-tuning and Reinforcement Learning from Human Feedback (RLHF) have played significant roles in enhancing LLMs' adaptability and performance. We performed an in-depth analysis of 194 relevant papers on arXiv, encompassing trend analysis, word cloud representation, and distribution analysis across various application domains. The findings reveal a significant and increasing interest in ChatGPT/GPT-4 research, predominantly centered on direct natural language processing applications, while also demonstrating considerable potential in areas ranging from education and history to mathematics, medicine, and physics. This study endeavors to furnish insights into ChatGPT's capabilities, potential implications, and ethical concerns, and to offer direction for future advancements in this field.

Spatial-Temporal Convolutional Attention for Mapping Functional Brain Networks

Nov 04, 2022

Abstract:Using functional magnetic resonance imaging (fMRI) and deep learning to explore functional brain networks (FBNs) has attracted many researchers. However, most of these studies are still based on the temporal correlation between the sources and voxel signals, and research on the dynamics of brain function is lacking. Due to the widespread local correlations in the volumes, FBNs can be generated directly in the spatial domain in a self-supervised manner by using spatial-wise attention (SA), and the resulting FBNs have a higher spatial similarity with templates compared to the classical method. Therefore, we propose a novel Spatial-Temporal Convolutional Attention (STCA) model to discover the dynamic FBNs by using sliding windows. To validate the performance of the proposed method, we evaluate the approach on the HCP-rest dataset. The results indicate that STCA can be used to discover FBNs in a dynamic way, which provides a novel approach to better understand the human brain.

Discovering Dynamic Functional Brain Networks via Spatial and Channel-wise Attention

May 31, 2022

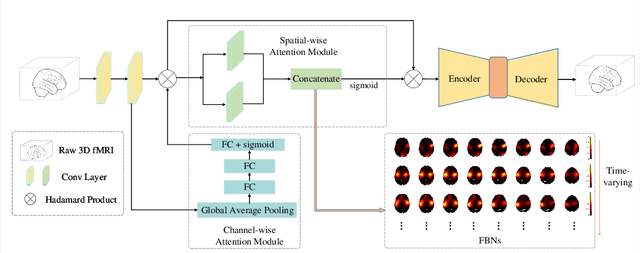

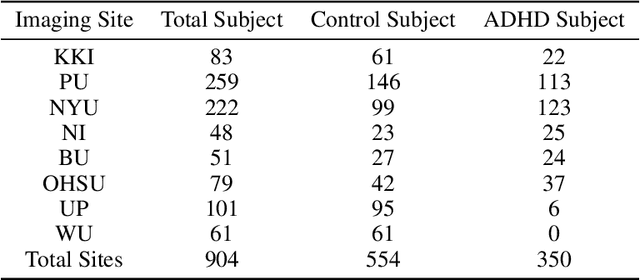

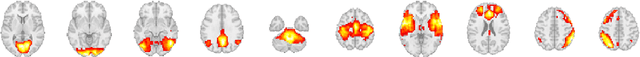

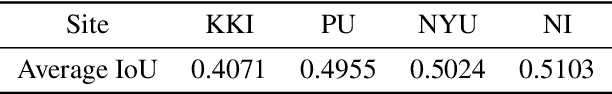

Abstract:Using deep learning models to recognize functional brain networks (FBNs) in functional magnetic resonance imaging (fMRI) has been attracting increasing interest recently. However, most existing work focuses on detecting static FBNs from entire fMRI signals, such as correlation-based functional connectivity. The sliding window is a widely used strategy to capture the dynamics of FBNs, but it is still limited in representing intrinsic functional interactive dynamics at each time step. In addition, the number of FBNs usually needs to be set manually. Moreover, due to the complexity of dynamic interactions in the brain, traditional linear and shallow models are insufficient for identifying complex and spatially overlapped FBNs across each time step. In this paper, we propose a novel Spatial and Channel-wise Attention Autoencoder (SCAAE) for discovering FBNs dynamically. The core idea of SCAAE is to apply an attention mechanism to FBN construction. Specifically, we designed two attention modules: 1) a spatial-wise attention (SA) module to discover FBNs in the spatial domain and 2) a channel-wise attention (CA) module to weigh the channels for selecting the FBNs automatically. We evaluated our approach on the ADHD200 dataset, and our results indicate that the proposed SCAAE method can effectively recover the dynamic changes of the FBNs at each fMRI time step, without using sliding windows. More importantly, our proposed hybrid attention modules (SA and CA) do not enforce assumptions of linearity and independence as previous methods do, and thus provide a novel approach to better understanding dynamic functional brain networks.

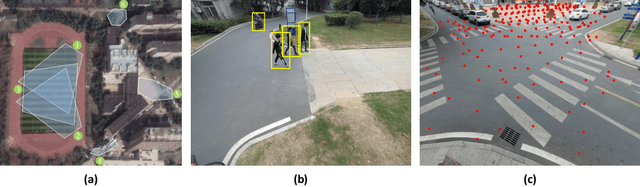

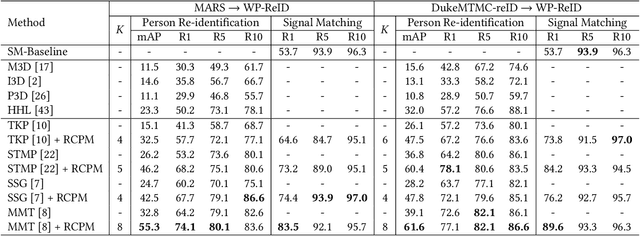

Unsupervised Person Re-Identification with Wireless Positioning under Weak Scene Labeling

Oct 29, 2021

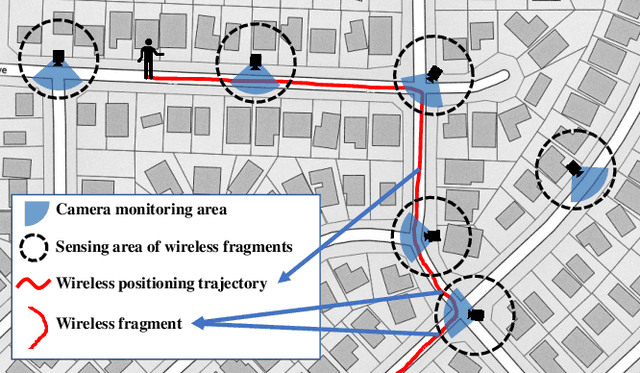

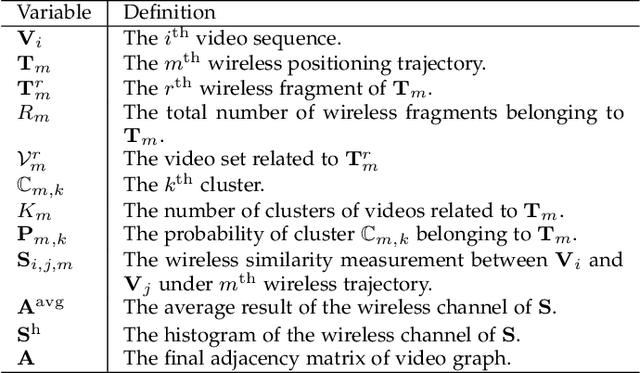

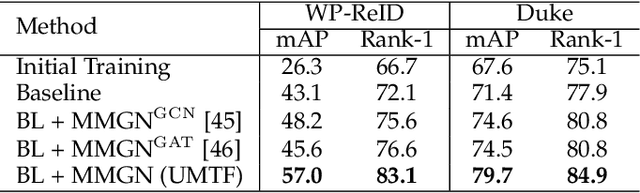

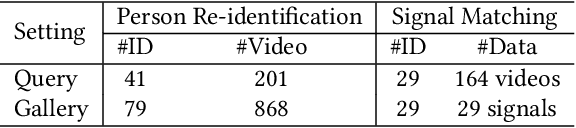

Abstract:Existing unsupervised person re-identification methods rely only on visual clues to match pedestrians under different cameras. Since visual data is inherently susceptible to occlusion, blur, clothing changes, etc., a promising solution is to introduce heterogeneous data to compensate for the shortcomings of visual data. Some works based on full-scene labeling introduce wireless positioning to assist cross-domain person re-identification, but their GPS labeling of entire monitoring scenes is laborious. To this end, we propose to explore unsupervised person re-identification with both visual data and wireless positioning trajectories under weak scene labeling, in which we only need to know the locations of the cameras. Specifically, we propose a novel unsupervised multimodal training framework (UMTF), which models the complementarity of visual data and wireless information. Our UMTF contains a multimodal data association strategy (MMDA) and a multimodal graph neural network (MMGN). MMDA explores potential data associations in unlabeled multimodal data, while MMGN propagates multimodal messages in the video graph based on the adjacency matrix learned from histogram statistics of wireless data. Thanks to the robustness of the wireless data to visual noise and the collaboration of various modules, UMTF is capable of learning a model without human-labeled data. Extensive experimental results on two challenging datasets, i.e., WP-ReID and DukeMTMC-VideoReID, demonstrate the effectiveness of the proposed method.

Learning Linear Non-Gaussian Graphical Models with Multidirected Edges

Oct 11, 2020

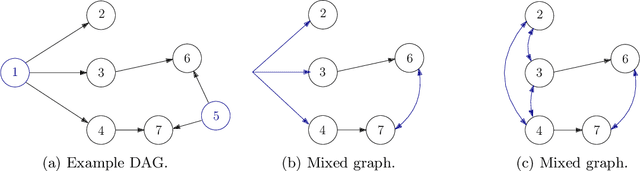

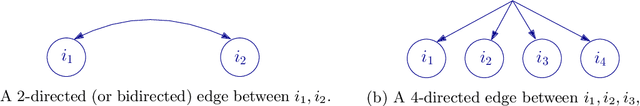

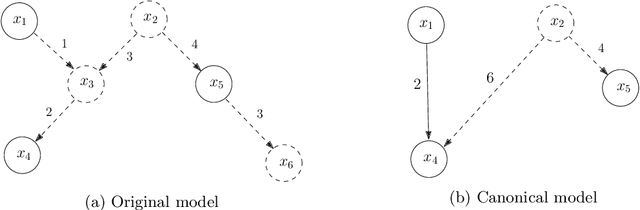

Abstract:In this paper we propose a new method to learn the underlying acyclic mixed graph of a linear non-Gaussian structural equation model given observational data. We build on an algorithm proposed by Wang and Drton, and we show that one can augment the hidden variable structure of the recovered model by learning *multidirected edges* rather than only directed and bidirected ones. Multidirected edges appear when more than two of the observed variables have a hidden common cause. We detect the presence of such hidden causes by looking at higher order cumulants and exploiting the multi-trek rule. Our method recovers the correct structure when the underlying graph is a bow-free acyclic mixed graph with potential multidirected edges.

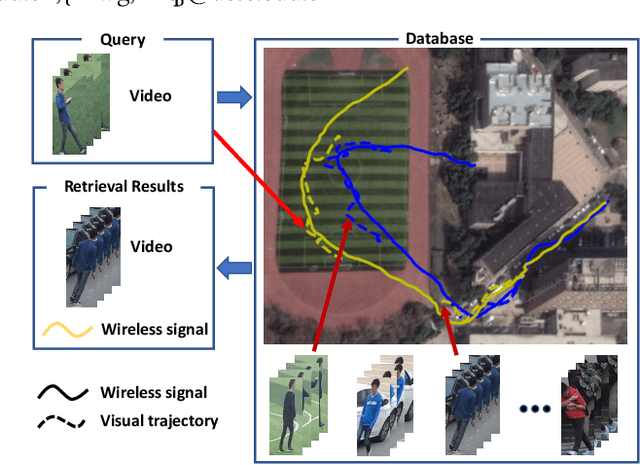

Vision Meets Wireless Positioning: Effective Person Re-identification with Recurrent Context Propagation

Sep 04, 2020

Abstract:Existing person re-identification methods rely on the visual sensor to capture pedestrians. The image or video data from a visual sensor inevitably suffers from occlusion and dramatic variations in pedestrian posture, which degrades re-identification performance and further limits its application in open environments. On the other hand, for most people, one of the most important carry-on items is the mobile phone, which can be sensed by WiFi and cellular networks in the form of a wireless positioning signal. Such a signal is robust to pedestrian occlusion and visual appearance change, but suffers from some positioning error. In this work, we approach person re-identification with the sensing data from both vision and wireless positioning. To take advantage of such cross-modality cues, we propose a novel recurrent context propagation module that enables information to propagate between visual data and wireless positioning data and ultimately improves the matching accuracy. To evaluate our approach, we contribute a new Wireless Positioning Person Re-identification (WP-ReID) dataset. Extensive experiments are conducted and demonstrate the effectiveness of the proposed algorithm. Code will be released at https://github.com/yolomax/WP-ReID.

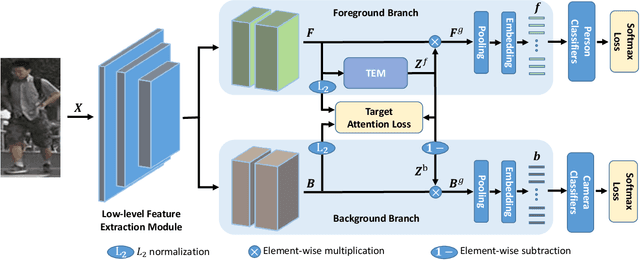

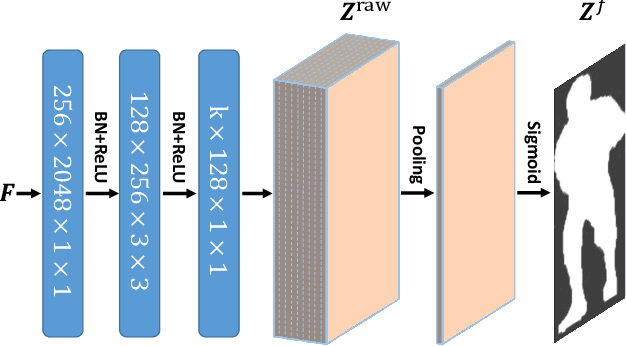

An End-to-End Foreground-Aware Network for Person Re-Identification

Oct 25, 2019

Abstract:Person re-identification is a crucial task of identifying pedestrians of interest across multiple surveillance camera views. In person re-identification, a pedestrian is usually represented with features extracted from a rectangular image region that inevitably contains the scene background, which makes it harder to distinguish different pedestrians and degrades accuracy. To this end, we propose an end-to-end foreground-aware network to discriminate foreground from background by learning a soft mask for person re-identification. In our method, in addition to the pedestrian ID as supervision for the foreground, we introduce the camera ID of each pedestrian image for background modeling. The foreground branch and the background branch are optimized collaboratively. By presenting a target attention loss, the pedestrian features extracted from the foreground branch become less sensitive to the backgrounds, which greatly reduces the negative impact of changing backgrounds on matching the same identity across different camera views. Notably, in contrast to existing methods, our approach does not require any additional dataset to train a human landmark detector or a segmentation model for locating the background regions. The experimental results on three challenging datasets, i.e., Market-1501, DukeMTMC-reID, and MSMT17, demonstrate the effectiveness of our approach.

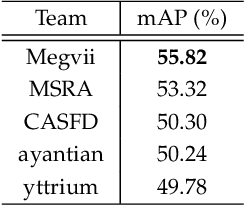

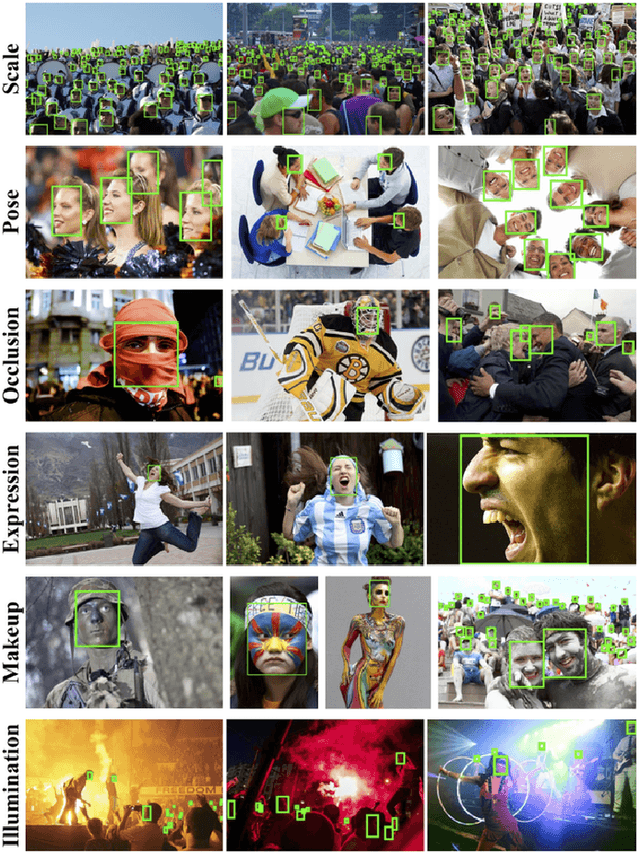

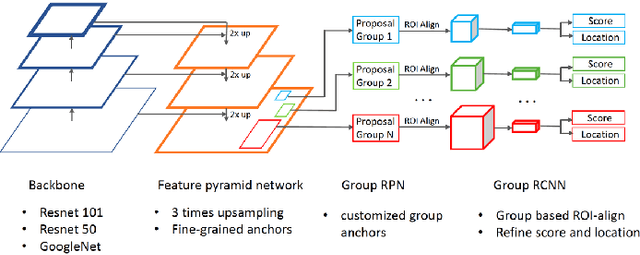

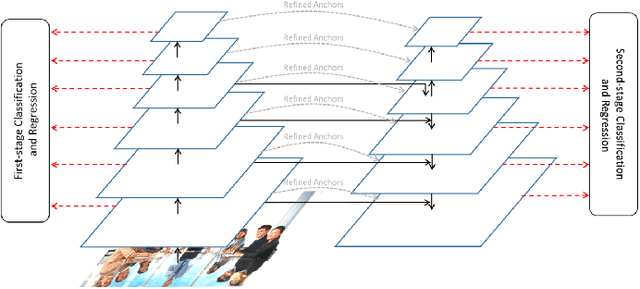

WIDER Face and Pedestrian Challenge 2018: Methods and Results

Feb 19, 2019

Abstract:This paper presents a review of the 2018 WIDER Challenge on Face and Pedestrian. The challenge focuses on the problem of precise localization of human faces and bodies, and accurate association of identities. It comprises three tracks: (i) WIDER Face, which aims at soliciting new approaches to advance the state-of-the-art in face detection, (ii) WIDER Pedestrian, which aims to find effective and efficient approaches to address the problem of pedestrian detection in unconstrained environments, and (iii) WIDER Person Search, which presents an exciting challenge of searching persons across 192 movies. In total, 73 teams made valid submissions to the challenge tracks. We summarize the winning solutions for all three tracks and present discussions on open problems and potential research directions in these topics.

Spatial and Temporal Mutual Promotion for Video-based Person Re-identification

Dec 26, 2018

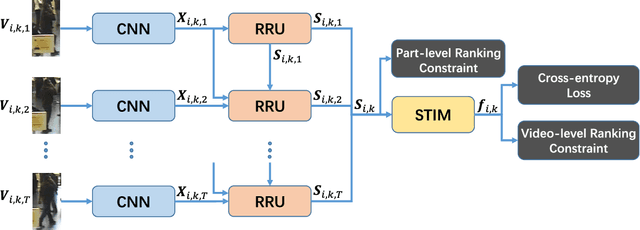

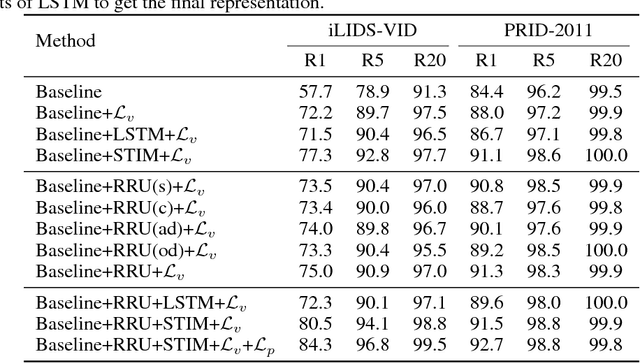

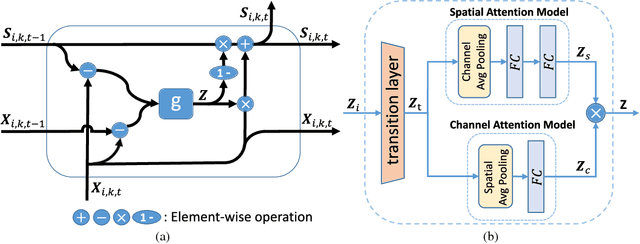

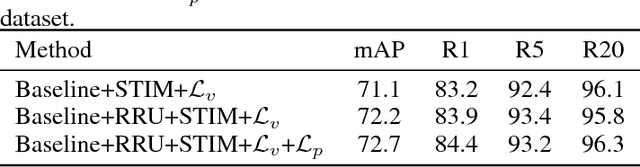

Abstract:Video-based person re-identification is a crucial task of matching video sequences of a person across multiple camera views. Generally, features directly extracted from a single frame suffer from occlusion, blur, illumination and posture changes. This leads to false activation or missing activation in some regions, which corrupts the appearance and motion representation. How to explore the abundant spatial-temporal information in video sequences is the key to solving this problem. To this end, we propose a Refining Recurrent Unit (RRU) that recovers the missing parts and suppresses noisy parts of the current frame's features by referring to historical frames. With RRU, the quality of each frame's appearance representation is improved. Then we use the Spatial-Temporal clues Integration Module (STIM) to mine the spatial-temporal information from those upgraded features. Meanwhile, a multi-level training objective is used to enhance the capability of RRU and STIM. Through the cooperation of those modules, the spatial and temporal features mutually promote each other and the final spatial-temporal feature representation is more discriminative and robust. Extensive experiments are conducted on three challenging datasets, i.e., iLIDS-VID, PRID-2011 and MARS. The experimental results demonstrate that our approach outperforms existing state-of-the-art methods of video-based person re-identification on iLIDS-VID and MARS and achieves favorable results on PRID-2011.
