Junting Chen

Physics-Aware Tensor Reconstruction for Radio Maps in Pixel-Based Fluid Antenna Systems

Feb 05, 2026

Abstract: The deployment of pixel-based antennas and fluid antenna systems (FAS) is hindered by prohibitive channel state information (CSI) acquisition overhead. While radio maps enable proactive mode selection, reconstructing high-fidelity maps from sparse measurements is challenging. Existing physics-agnostic or data-driven methods often fail to recover fine-grained shadowing details under extreme sparsity. We propose a Physics-Regularized Low-Rank Tensor Completion (PR-LRTC) framework for radio map reconstruction. By modeling the signal field as a three-way tensor, we integrate environmental low-rankness with deterministic antenna physics. Specifically, we leverage Effective Aerial Degrees-of-Freedom (EADoF) theory to derive a differential gain topology map as a physical prior for regularization. The resulting optimization problem is solved via an efficient Alternating Direction Method of Multipliers (ADMM)-based algorithm. Simulations show that PR-LRTC achieves a 4 dB gain over baselines at a 10% sampling ratio. It effectively preserves sharp shadowing edges, providing a robust, physics-compliant solution for low-overhead beam management.
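To make the low-rank completion idea concrete, here is a minimal sketch that fills a missing entry of a tiny matrix by alternating least squares on a rank-1 model. It is a deliberately simplified stand-in, not the PR-LRTC algorithm: there is no three-way tensor, no physics prior, and no ADMM. The function name `complete_rank1` and the toy data are assumptions made for illustration.

```python
def complete_rank1(matrix, n_iter=200):
    """Fill None entries of `matrix` with a rank-1 fit u[i] * v[j] (illustrative only)."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    u = [1.0] * n_rows
    v = [1.0] * n_cols
    for _ in range(n_iter):
        # Least-squares update of each row factor, using observed entries only.
        for i in range(n_rows):
            obs = [j for j in range(n_cols) if matrix[i][j] is not None]
            den = sum(v[j] ** 2 for j in obs)
            if den > 0:
                u[i] = sum(matrix[i][j] * v[j] for j in obs) / den
        # Same update for each column factor, with the row factors held fixed.
        for j in range(n_cols):
            obs = [i for i in range(n_rows) if matrix[i][j] is not None]
            den = sum(u[i] ** 2 for i in obs)
            if den > 0:
                v[j] = sum(matrix[i][j] * u[i] for i in obs) / den
    # Keep measured values; fill the gaps with the low-rank prediction.
    return [[matrix[i][j] if matrix[i][j] is not None else u[i] * v[j]
             for j in range(n_cols)] for i in range(n_rows)]


# Toy 2x2 "radio map" in linear scale; the hidden field is the outer product
# of [1, 3] and [1, 2], so the missing entry should come out close to 6.
sparse_map = [[1.0, 2.0],
              [3.0, None]]
completed = complete_rank1(sparse_map)
```

The point of the sketch is only that a low-rank assumption ties unobserved entries to observed ones; a physics-derived regularizer, as described in the abstract, would add a further term to the objective.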

MIMO Beam Map Reconstruction via Toeplitz-Structured Matrix-Vector Tensor Decomposition

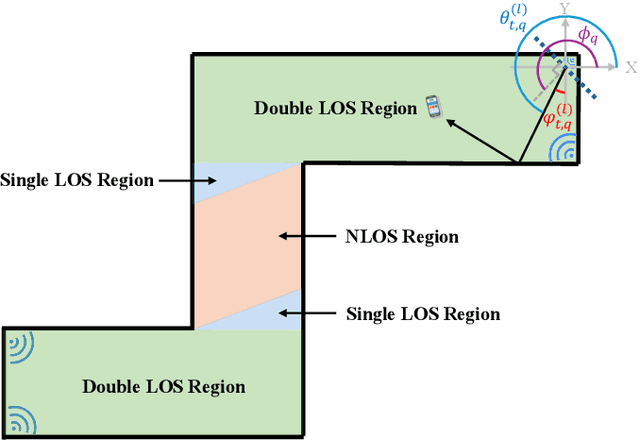

Jan 08, 2026

Abstract: As wireless networks progress toward sixth-generation (6G), understanding the spatial distribution of directional beam coverage becomes increasingly important for beam management and link optimization. A multiple-input multiple-output (MIMO) beam map provides such spatial awareness, yet accurate construction under sparse measurements remains difficult due to incomplete spatial coverage and strong angular variations. This paper presents a tensor decomposition approach for reconstructing MIMO beam maps from limited measurements. By transforming measurements from a Cartesian coordinate system into a polar coordinate system, we uncover a matrix-vector outer-product structure associated with different propagation conditions. Specifically, we mathematically demonstrate that the matrix factor, representing beam-space gain, exhibits an intrinsic Toeplitz structure due to the shift-invariant nature of array responses, and the vector factor captures distance-dependent attenuation. Leveraging these structural priors, we formulate a regularized tensor decomposition problem to jointly reconstruct line-of-sight (LOS), reflection, and obstruction propagation conditions. Simulation results confirm that the proposed method significantly enhances data efficiency, achieving a normalized mean square error (NMSE) reduction of over 20% compared to state-of-the-art baselines, even under sparse sampling regimes.
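The matrix-vector outer-product structure can be sketched as follows. The example builds one term of such a decomposition: a Toeplitz matrix standing in for beam-space gain, multiplied by a vector standing in for distance attenuation. The axis interpretation (angle bin by beam index by range bin), the numeric values, and the helper names `toeplitz` and `outer_matrix_vector` are all assumptions for illustration; the abstract does not specify them.

```python
def toeplitz(first_col, first_row):
    """Build a Toeplitz matrix: entry (i, j) depends only on the offset i - j."""
    if first_col[0] != first_row[0]:
        raise ValueError("first_col[0] and first_row[0] must agree")
    n, m = len(first_col), len(first_row)
    return [[first_col[i - j] if i >= j else first_row[j - i]
             for j in range(m)] for i in range(n)]


def outer_matrix_vector(mat, vec):
    """Three-way tensor with entries T[i][j][k] = mat[i][j] * vec[k]."""
    return [[[a * b for b in vec] for a in row] for row in mat]


# Hypothetical beam-space gain factor (4 angle bins x 3 beams).
gain = toeplitz([1.0, 0.5, 0.2, 0.05], [1.0, 0.5, 0.2])
# Hypothetical distance attenuation d^-2 on 5 range bins.
attenuation = [d ** -2.0 for d in (1.0, 2.0, 4.0, 8.0, 16.0)]
beam_map_term = outer_matrix_vector(gain, attenuation)
```

A Toeplitz matrix of size n by m has only n + m - 1 free parameters rather than n times m, which is one reason such a structural prior can help when measurements are sparse.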

LISN: Language-Instructed Social Navigation with VLM-based Controller Modulating

Dec 10, 2025

Abstract: Towards human-robot coexistence, socially aware navigation is significant for mobile robots. Yet existing studies in this area focus mainly on path efficiency and pedestrian collision avoidance, which are essential but represent only a fraction of social navigation. Beyond these basics, robots must also comply with user instructions, aligning their actions to task goals and social norms expressed by humans. In this work, we present LISN-Bench, the first simulation-based benchmark for language-instructed social navigation. Built on Rosnav-Arena 3.0, it is the first standardized social navigation benchmark to incorporate instruction following and scene understanding across diverse contexts. To address this task, we further propose Social-Nav-Modulator, a fast-slow hierarchical system where a VLM agent modulates costmaps and controller parameters. Decoupling low-level action generation from the slower VLM loop reduces reliance on high-frequency VLM inference while improving dynamic avoidance and perception adaptability. Our method achieves an average success rate of 91.3%, which is more than 63% higher than that of the most competitive baseline, with most of the improvements observed in challenging tasks such as following a person in a crowd and navigating while strictly avoiding instruction-forbidden regions. The project website is at: https://social-nav.github.io/LISN-project/

Geometry-Aligned Differential Privacy for Location-Safe Federated Radio Map Construction

Dec 09, 2025

Abstract: Radio maps that describe spatial variations in wireless signal strength are widely used to optimize networks and support aerial platforms. Their construction requires location-labeled signal measurements from distributed users, raising fundamental concerns about location privacy. Even when raw data are kept local, the shared model updates can reveal user locations through their spatial structure, while naive noise injection either fails to hide this leakage or degrades model accuracy. This work analyzes how location leakage arises from gradients in a virtual-environment radio map model and proposes a geometry-aligned differential privacy mechanism with heterogeneous noise tailored to both confuse localization and cover gradient spatial patterns. The approach is theoretically supported with a convergence guarantee linking privacy strength to learning accuracy. Numerical experiments show the approach increases attacker localization error from 30 m to over 180 m, with only a 0.2 dB increase in radio map construction error compared to a uniform-noise baseline.
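As a rough illustration of what heterogeneous noise on a shared update can look like, the sketch below clips a gradient to a fixed L2 norm and then adds Gaussian noise with a different standard deviation per coordinate. This is the generic clip-and-perturb pattern, not the paper's geometry-aligned mechanism: the function name `privatize_gradient`, the choice of which coordinates get more noise, and all numeric values are assumptions, and calibrating the noise to a formal privacy budget is not shown.

```python
import math
import random


def privatize_gradient(grad, clip_norm, noise_std, rng):
    """Clip `grad` to L2 norm `clip_norm`, then add per-coordinate Gaussian noise."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = [g * scale for g in grad]
    # noise_std[k] is the noise standard deviation for coordinate k (heterogeneous).
    return [g + rng.gauss(0.0, s) for g, s in zip(clipped, noise_std)]


rng = random.Random(0)  # fixed seed so the example is repeatable
local_grad = [3.0, 4.0, 0.0]
# Assumption for illustration: the first two coordinates are treated as
# location-revealing and receive more noise than the last one.
noisy_grad = privatize_gradient(local_grad, clip_norm=1.0,
                                noise_std=[0.5, 0.5, 0.05], rng=rng)
```

Clipping bounds how much any single user's update can move the model, which is what makes the added noise meaningful; how the per-coordinate scales should follow the spatial structure of the gradient is the question the paper addresses.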

AdaptPNP: Integrating Prehensile and Non-Prehensile Skills for Adaptive Robotic Manipulation

Nov 14, 2025

Abstract: Non-prehensile (NP) manipulation, in which robots alter object states without forming stable grasps (for example, pushing, poking, or sliding), significantly broadens robotic manipulation capabilities when grasping is infeasible or insufficient. However, enabling a unified framework that generalizes across different tasks, objects, and environments while seamlessly integrating non-prehensile and prehensile (P) actions remains challenging: robots must determine when to invoke NP skills, select the appropriate primitive for each context, and compose P and NP strategies into robust, multi-step plans. We introduce AdaptPNP, a vision-language model (VLM)-empowered task and motion planning framework that systematically selects and combines P and NP skills to accomplish diverse manipulation objectives. Our approach leverages a VLM to interpret visual scene observations and textual task descriptions, generating a high-level plan skeleton that prescribes the sequence and coordination of P and NP actions. A digital-twin based object-centric intermediate layer predicts desired object poses, enabling proactive mental rehearsal of manipulation sequences. Finally, a control module synthesizes low-level robot commands, with continuous execution feedback enabling online task plan refinement and adaptive replanning through the VLM. We evaluate AdaptPNP across representative P&NP hybrid manipulation tasks in both simulation and real-world environments. These results underscore the potential of hybrid P&NP manipulation as a crucial step toward general-purpose, human-level robotic manipulation capabilities. Project Website: https://sites.google.com/view/adaptpnp/home

Structure-Aware Near-Field Radio Map Recovery via RBF-Assisted Matrix Completion

Nov 10, 2025

Abstract: This paper proposes a novel structure-aware matrix completion framework assisted by radial basis function (RBF) interpolation for near-field radio map construction in extremely large multiple-input multiple-output (XL-MIMO) systems. Unlike the far-field scenario, near-field wavefronts exhibit strong dependencies on both angle and distance due to spherical wave propagation, leading to complicated variations in received signal strength (RSS). To effectively capture the intricate spatial variation structure inherent in near-field environments, a regularized RBF interpolation method is developed to enhance radio map reconstruction accuracy. Leveraging theoretical insights from interpolation error analysis of RBF, an inverse μ-law-inspired nonuniform sampling strategy is introduced to allocate measurements adaptively, emphasizing regions with rapid RSS variations near the transmitter. To further exploit the global low-rank structure in the near-field radio map, we integrate RBF interpolation with nuclear norm minimization (NNM)-based matrix completion. A robust Huberized leave-one-out cross-validation (LOOCV) scheme is then proposed for adaptive selection of the tolerance parameter, facilitating optimal fusion between RBF interpolation and matrix completion. The integration of local variation structure modeling via RBF interpolation and global low-rank structure exploitation via matrix completion yields a structure-aware framework that substantially improves the accuracy of near-field radio map reconstruction. Extensive simulations demonstrate that the proposed approach achieves over 10% improvement in normalized mean squared error (NMSE) compared to standard interpolation and matrix completion methods under varying sampling densities and shadowing conditions.
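One way to picture a nonuniform sampling rule that concentrates measurements near the transmitter is to push a uniform grid through the inverse of the standard μ-law companding curve. The sketch below does exactly that for radial distances. It is only an illustration of the general idea: the abstract does not give the paper's actual rule, and the names `mu_law_expand` and `sample_radii`, the value μ = 255, and the 100 m range are assumptions.

```python
def mu_law_expand(y, mu=255.0):
    """Inverse of mu-law compression y = ln(1 + mu*x) / ln(1 + mu), for x, y in [0, 1]."""
    return ((1.0 + mu) ** y - 1.0) / mu


def sample_radii(n_samples, r_max, mu=255.0):
    """Radial sample distances in [0, r_max], packed densely near r = 0."""
    if n_samples < 2:
        raise ValueError("need at least two samples")
    # Uniform steps in the compressed domain map to growing steps in distance.
    return [r_max * mu_law_expand(k / (n_samples - 1), mu)
            for k in range(n_samples)]


# Nine sample distances out to a hypothetical 100 m range.
radii = sample_radii(9, r_max=100.0)
```

With these settings, the middle sample of the list falls at about 5.9 m, so roughly half of the samples sit within the first 6% of the range, where RSS changes fastest with distance.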

Unsupervised Radio Map Construction in Mixed LoS/NLoS Indoor Environments

Oct 09, 2025

Abstract: Radio maps are essential for enhancing wireless communications and localization. However, existing methods for constructing radio maps typically require costly calibration processes to collect location-labeled channel state information (CSI) datasets. This paper aims to recover the data collection trajectory directly from the channel propagation sequence, eliminating the need for location calibration. The key idea is to employ a hidden Markov model (HMM)-based framework to conditionally model the channel propagation matrix, while simultaneously modeling the location correlation in the trajectory. The primary challenges involve modeling the complex relationship between channel propagation in multiple-input multiple-output (MIMO) networks and geographical locations, and addressing both line-of-sight (LOS) and non-line-of-sight (NLOS) indoor conditions. In this paper, we propose an HMM-based framework that jointly characterizes the conditional propagation model and the evolution of the user trajectory. Specifically, the channel propagation in MIMO networks is modeled separately in terms of power, delay, and angle, with distinct models for LOS and NLOS conditions. The user trajectory is modeled using a Gaussian-Markov model. The parameters for channel propagation, the mobility model, and LOS/NLOS classification are optimized simultaneously. Experimental validation using simulated MIMO-Orthogonal Frequency-Division Multiplexing (OFDM) networks with a multi-antenna uniform linear array (ULA) configuration demonstrates that the proposed method achieves an average localization accuracy of 0.65 meters in an indoor environment, covering both LOS and NLOS regions. Moreover, the constructed radio map enables localization with a reduced error compared to conventional supervised methods, such as k-nearest neighbors (KNN), support vector machine (SVM), and deep neural network (DNN).

Blind Construction of Angular Power Maps in Massive MIMO Networks

Oct 08, 2025

Abstract: Channel state information (CSI) acquisition is a challenging problem in massive multiple-input multiple-output (MIMO) networks. Radio maps provide a promising solution for radio resource management by reducing online CSI acquisition. However, conventional approaches for radio map construction require location-labeled CSI data, which is challenging to obtain in practice. This paper investigates unsupervised angular power map construction based on large timescale CSI data collected in a massive MIMO network without location labels. A hidden Markov model (HMM) is built to connect the hidden trajectory of a mobile with the CSI evolution of a massive MIMO channel. As a result, the mobile location can be estimated, enabling the construction of an angular power map. We show that under uniform rectilinear mobility with Poisson-distributed base stations (BSs), the Cramér-Rao Lower Bound (CRLB) for localization error can vanish at any signal-to-noise ratio (SNR), whereas when BSs are confined to a limited region, the error remains nonzero even with infinite independent measurements. Based on reference signal received power (RSRP) data collected in a real multi-cell massive MIMO network, an average localization error of 18 meters can be achieved although measurements are mainly obtained from a single serving cell.
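The idea of recovering a hidden trajectory from an unlabeled signal sequence with an HMM can be sketched with a small Viterbi decoder. Everything specific here is an assumption chosen to keep the example short: a one-dimensional grid of cells, a single base station at cell 0, a log-distance path-loss emission model (`predicted_rss_db`), Gaussian measurement noise, and a random-walk mobility prior with `0 < stay_prob < 1`. The paper's model works on massive MIMO CSI and is considerably richer.

```python
import math


def predicted_rss_db(cell, exponent=3.0):
    """Hypothetical log-distance model: RSS in dB at grid cell `cell`, BS at cell 0."""
    return -10.0 * exponent * math.log10(cell + 1.0)


def viterbi_trajectory(rss_seq, n_cells, sigma_db=2.0, stay_prob=0.5):
    """Most likely cell sequence under a nearest-neighbour random-walk prior."""
    move_prob = (1.0 - stay_prob) / 2.0

    def log_trans(i, j):
        if i == j:
            return math.log(stay_prob)
        if abs(i - j) == 1:
            return math.log(move_prob)
        return -math.inf  # jumps of more than one cell are forbidden

    def log_emit(cell, rss):
        err = rss - predicted_rss_db(cell)
        return -0.5 * (err / sigma_db) ** 2  # Gaussian log-likelihood up to a constant

    # Uniform prior over the starting cell.
    score = [log_emit(c, rss_seq[0]) for c in range(n_cells)]
    backptrs = []
    for rss in rss_seq[1:]:
        new_score, ptr = [], []
        for j in range(n_cells):
            best_i = max(range(n_cells), key=lambda i: score[i] + log_trans(i, j))
            ptr.append(best_i)
            new_score.append(score[best_i] + log_trans(best_i, j) + log_emit(j, rss))
        score = new_score
        backptrs.append(ptr)

    # Backtrack from the best final cell.
    cell = max(range(n_cells), key=lambda c: score[c])
    path = [cell]
    for ptr in reversed(backptrs):
        cell = ptr[cell]
        path.append(cell)
    return path[::-1]


# Noise-free RSS generated from a known walk, decoded without location labels.
true_cells = [2, 3, 4, 4, 5, 6]
rss_seq = [predicted_rss_db(c) for c in true_cells]
decoded = viterbi_trajectory(rss_seq, n_cells=10, sigma_db=0.5)
```

Once a trajectory estimate like `decoded` is available, each measurement can be attached to its estimated location, which is the step that turns unlabeled data into a map. In an unsupervised setting the propagation parameters would also have to be learned rather than fixed as they are here.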

RadioDiff-3D: A 3D$\times$3D Radio Map Dataset and Generative Diffusion Based Benchmark for 6G Environment-Aware Communication

Jul 16, 2025

Abstract: Radio maps (RMs) serve as a critical foundation for enabling environment-aware wireless communication, as they provide the spatial distribution of wireless channel characteristics. Despite recent progress in RM construction using data-driven approaches, most existing methods focus solely on pathloss prediction in a fixed 2D plane, neglecting key parameters such as direction of arrival (DoA), time of arrival (ToA), and vertical spatial variations. Such a limitation is primarily due to the reliance on static learning paradigms, which hinder generalization beyond the training data distribution. To address these challenges, we propose UrbanRadio3D, a large-scale, high-resolution 3D RM dataset constructed via ray tracing in realistic urban environments. UrbanRadio3D is over 37$\times$ larger than previous datasets across a 3D space with three metrics (pathloss, DoA, and ToA), forming a novel 3D$\times$3D dataset with 7$\times$ more height layers than the prior state-of-the-art (SOTA) dataset. To benchmark 3D RM construction, a UNet with 3D convolutional operators is proposed. Moreover, we further introduce RadioDiff-3D, a diffusion-model-based generative framework utilizing the 3D convolutional architecture. RadioDiff-3D supports both radiation-aware scenarios with known transmitter locations and radiation-unaware settings based on sparse spatial observations. Extensive evaluations on UrbanRadio3D validate that RadioDiff-3D achieves superior performance in constructing rich, high-dimensional radio maps under diverse environmental dynamics. This work provides a foundational dataset and benchmark for future research in 3D environment-aware communication. The dataset is available at https://github.com/UNIC-Lab/UrbanRadio3D.

ArtGS: 3D Gaussian Splatting for Interactive Visual-Physical Modeling and Manipulation of Articulated Objects

Jul 03, 2025

Abstract: Articulated object manipulation remains a critical challenge in robotics due to the complex kinematic constraints and the limited physical reasoning of existing methods. In this work, we introduce ArtGS, a novel framework that extends 3D Gaussian Splatting (3DGS) by integrating visual-physical modeling for articulated object understanding and interaction. ArtGS begins with multi-view RGB-D reconstruction, followed by reasoning with a vision-language model (VLM) to extract semantic and structural information, particularly the articulated bones. Through dynamic, differentiable 3DGS-based rendering, ArtGS optimizes the parameters of the articulated bones, ensuring physically consistent motion constraints and enhancing the manipulation policy. By leveraging dynamic Gaussian splatting, cross-embodiment adaptability, and closed-loop optimization, ArtGS establishes a new framework for efficient, scalable, and generalizable articulated object modeling and manipulation. Experiments conducted in both simulation and real-world environments demonstrate that ArtGS significantly outperforms previous methods in joint estimation accuracy and manipulation success rates across a variety of articulated objects. Additional images and videos are available on the project website: https://sites.google.com/view/artgs/home
