Ali-akbar Agha-mohammadi

Jet Propulsion Lab., California Institute of Technology and

Belief Space Planning: A Covariance Steering Approach

May 24, 2021

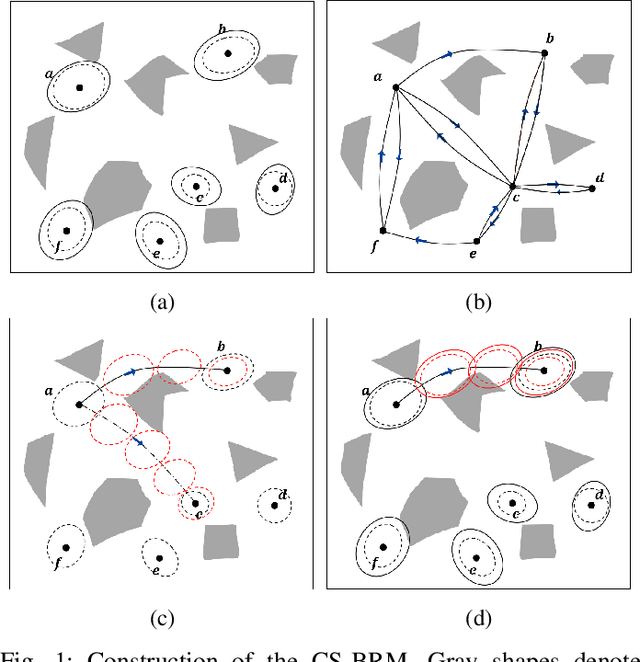

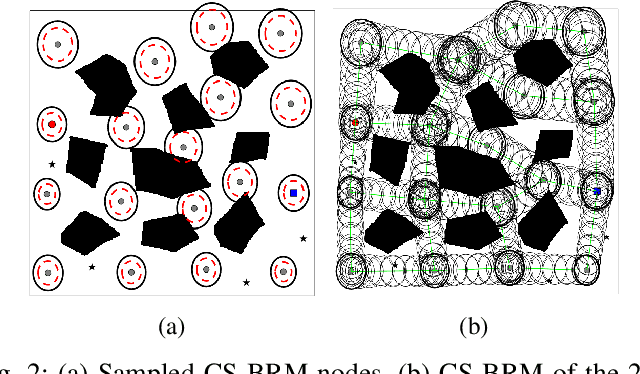

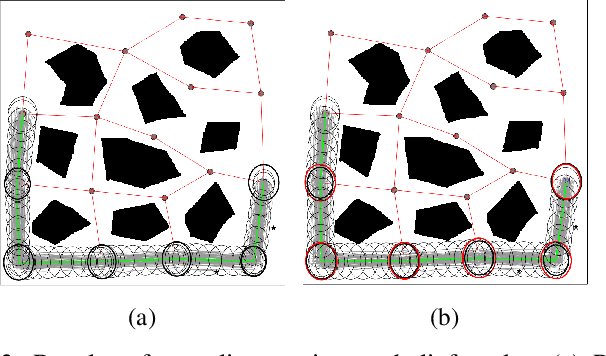

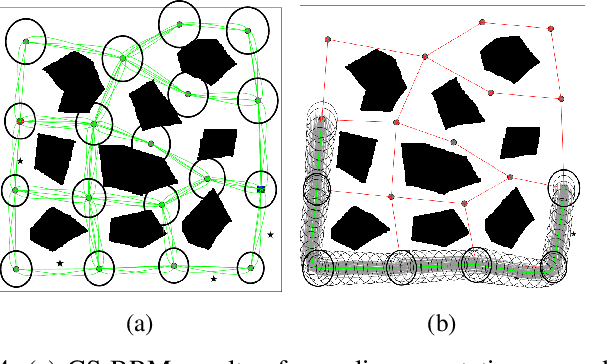

Abstract:A new belief space planning algorithm, called covariance steering Belief RoadMap (CS-BRM), is introduced, which is a multi-query algorithm for motion planning of dynamical systems under simultaneous motion and observation uncertainties. CS-BRM extends the probabilistic roadmap (PRM) approach to belief spaces and is based on the recently developed theory of covariance steering (CS) that enables guaranteed satisfaction of terminal belief constraints in finite-time. The nodes in the CS-BRM are sampled in belief space and represent distributions of the system states. A covariance steering controller steers the system from one BRM node to another, thus acting as an edge controller of the corresponding belief graph that ensures belief constraint satisfaction. After the edge controller is computed, a specific edge cost is assigned to that edge. The CS-BRM algorithm allows the sampling of non-stationary belief nodes, and thus is able to explore the velocity space and find efficient motion plans. The performance of CS-BRM is evaluated and compared to a previous belief space planning method, demonstrating the benefits of the proposed approach.

Exploration-RRT: A multi-objective Path Planning and Exploration Framework for Unknown and Unstructured Environments

Apr 08, 2021

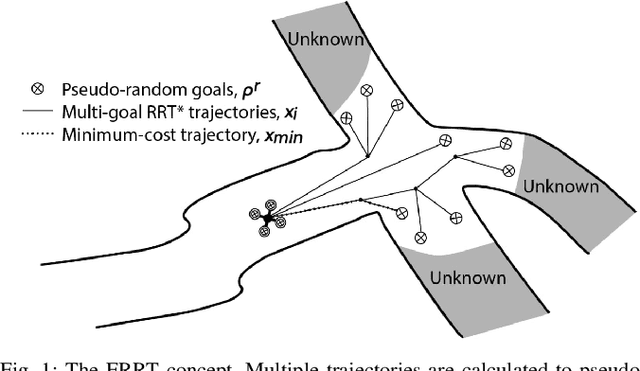

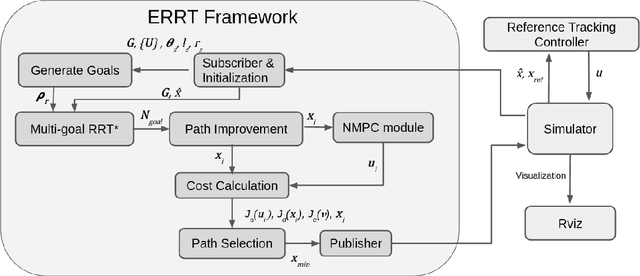

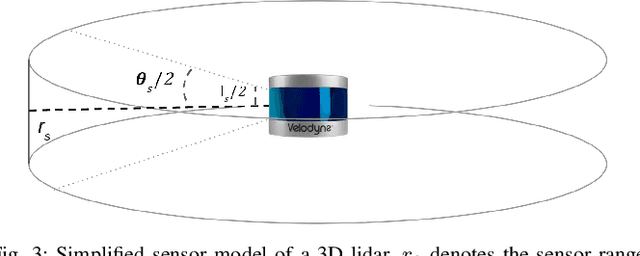

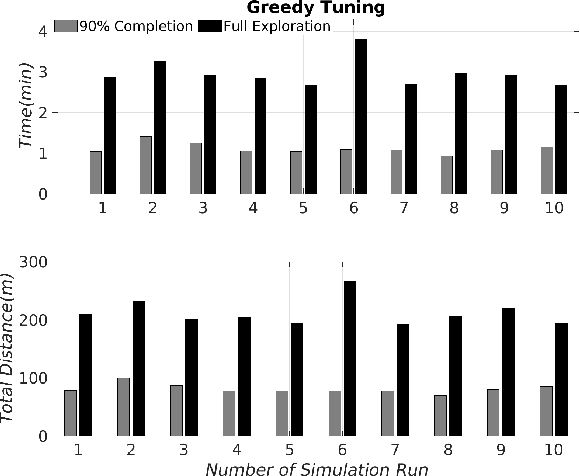

Abstract:This article establishes the Exploration-RRT algorithm: A novel general-purpose combined exploration and pathplanning algorithm, based on a multi-goal Rapidly-Exploring Random Trees (RRT) framework. Exploration-RRT (ERRT) has been specifically designed for utilization in 3D exploration missions, with partially or completely unknown and unstructured environments. The novel proposed ERRT is based on a multi-objective optimization framework and it is able to take under consideration the potential information gain, the distance travelled, and the actuation costs, along trajectories to pseudo-random goals, generated from considering the on-board sensor model and the non-linear model of the utilized platform. In this article, the algorithmic pipeline of the ERRT will be established and the overall applicability and efficiency of the proposed scheme will be presented on an application with an Unmanned Aerial Vehicle (UAV) model, equipped with a 3D lidar, in a simulated operating environment, with the goal of exploring a completely unknown area as efficiently and quickly as possible

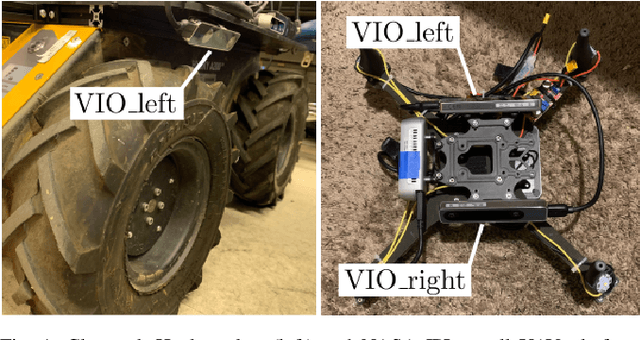

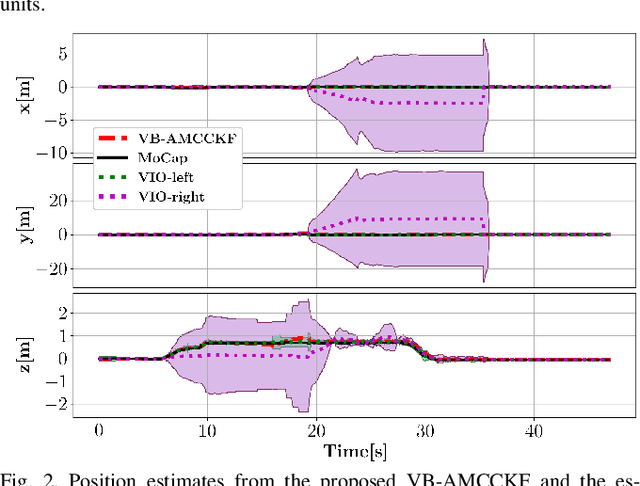

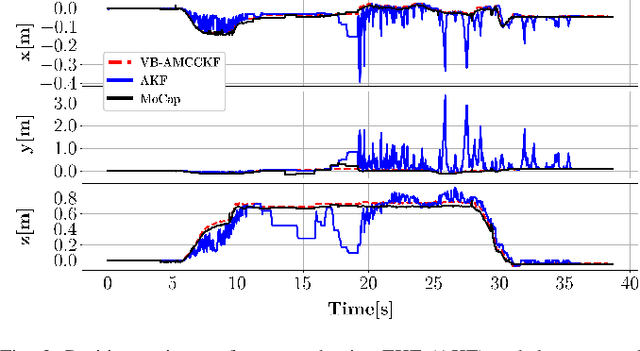

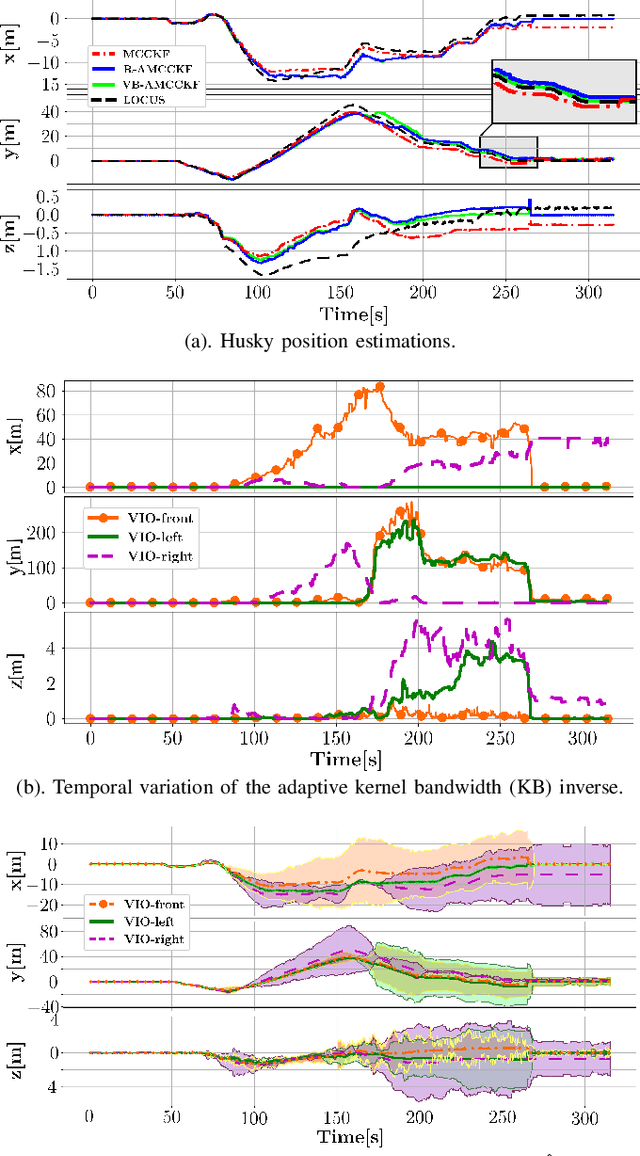

Towards Robust State Estimation by Boosting the Maximum Correntropy Criterion Kalman Filter with Adaptive Behaviors

Mar 29, 2021

Abstract:This work proposes a resilient and adaptive state estimation framework for robots operating in perceptually-degraded environments. The approach, called Adaptive Maximum Correntropy Criterion Kalman Filtering (AMCCKF), is inherently robust to corrupted measurements, such as those containing jumps or general non-Gaussian noise, and is able to modify filter parameters online to improve performance. Two separate methods are developed -- the Variational Bayesian AMCCKF (VB-AMCCKF) and Residual AMCCKF (R-AMCCKF) -- that modify the process and measurement noise models in addition to the bandwidth of the kernel function used in MCCKF based on the quality of measurements received. The two approaches differ in computational complexity and overall performance which is experimentally analyzed. The method is demonstrated in real experiments on both aerial and ground robots and is part of the solution used by the COSTAR team participating at the DARPA Subterranean Challenge.

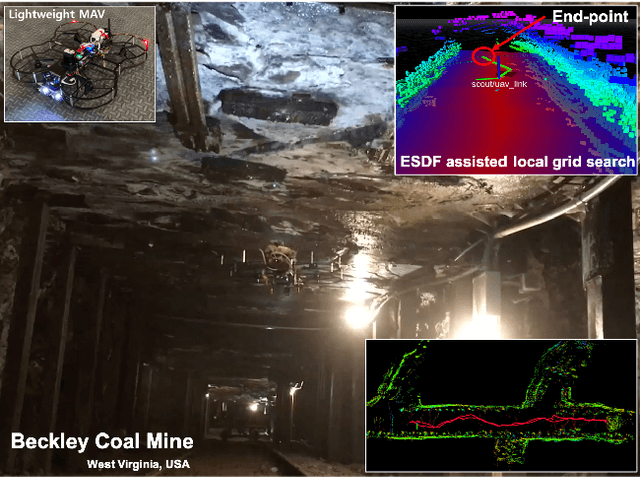

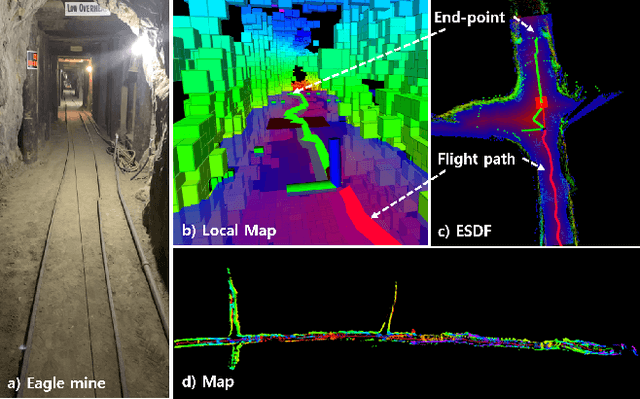

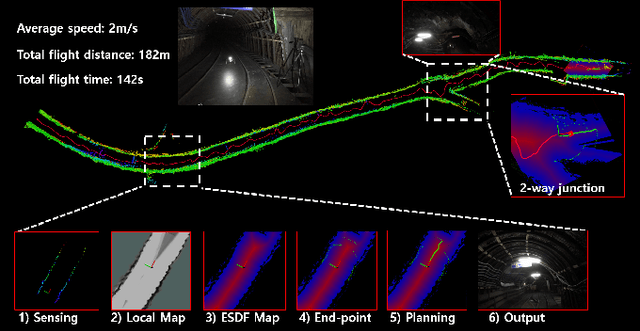

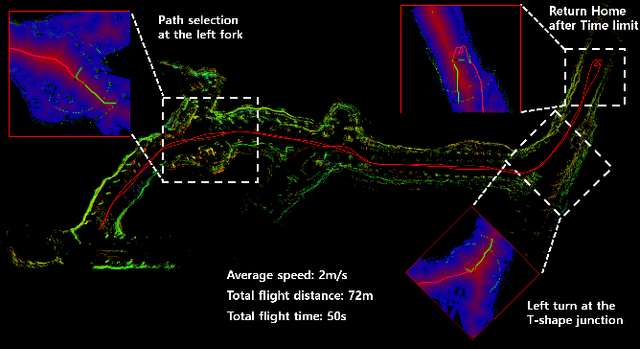

Robust Collision-free Lightweight Aerial Autonomy for Unknown Area Exploration

Mar 16, 2021

Abstract:Collision-free path planning is an essential requirement for autonomous exploration in unknown environments, especially when operating in confined spaces or near obstacles. This study presents an autonomous exploration technique using a small drone. A local end-point selection method is designed using LiDAR range measurement and then generates the path from the current position to the selected end-point. The generated path shows the consistent collision-free path in real-time by adopting the Euclidean signed distance field-based grid-search method. The simulation results consistently showed the safety, and reliability of the proposed path-planning method. Real-world experiments are conducted in three different mines, demonstrating successful autonomous exploration flight in environments with various structural conditions. The results showed the high capability of the proposed flight autonomy framework for lightweight aerial-robot systems. Besides, our drone performs an autonomous mission during our entry at the Tunnel Circuit competition (Phase 1) of the DARPA Subterranean Challenge.

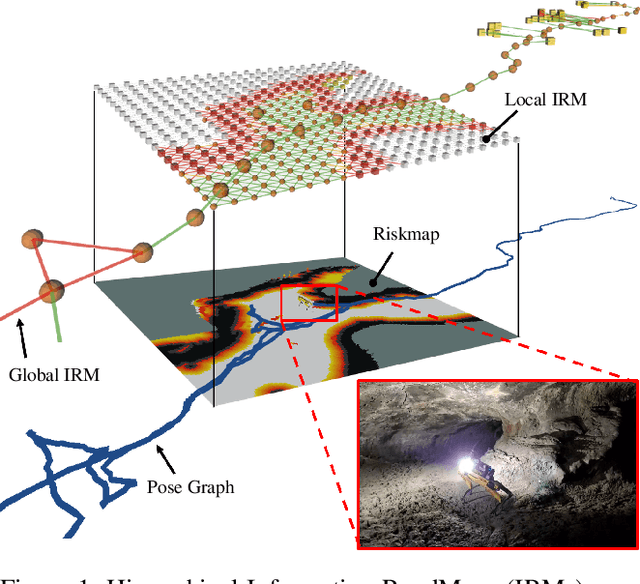

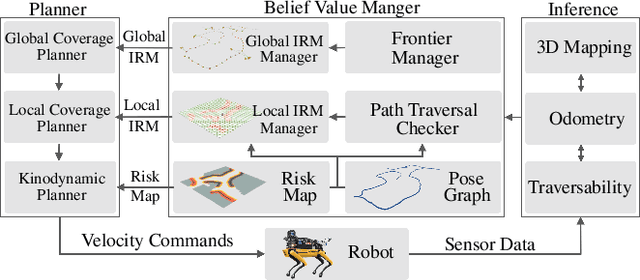

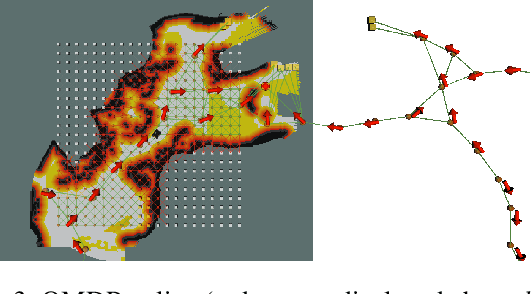

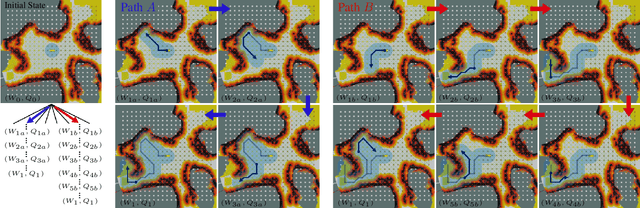

PLGRIM: Hierarchical Value Learning for Large-scale Exploration in Unknown Environments

Feb 10, 2021

Abstract:In order for a robot to explore an unknown environment autonomously, it must account for uncertainty in sensor measurements, hazard assessment, localization, and motion execution. Making decisions for maximal reward in a stochastic setting requires learning values and constructing policies over a belief space, i.e., probability distribution of the robot-world state. Value learning over belief spaces suffer from computational challenges in high-dimensional spaces, such as large spatial environments and long temporal horizons for exploration. At the same time, it should be adaptive and resilient to disturbances at run time in order to ensure the robot's safety, as required in many real-world applications. This work proposes a scalable value learning framework, PLGRIM (Probabilistic Local and Global Reasoning on Information roadMaps), that bridges the gap between (i) local, risk-aware resiliency and (ii) global, reward-seeking mission objectives. By leveraging hierarchical belief space planners with information-rich graph structures, PLGRIM can address large-scale exploration problems while providing locally near-optimal coverage plans. PLGRIM is a step toward enabling belief space planners on physical robots operating in unknown and complex environments. We validate our proposed framework with a high-fidelity dynamic simulation in diverse environments and with physical hardware, Boston Dynamics' Spot robot, in a lava tube.

DARE-SLAM: Degeneracy-Aware and Resilient Loop Closing in Perceptually-Degraded Environments

Feb 09, 2021

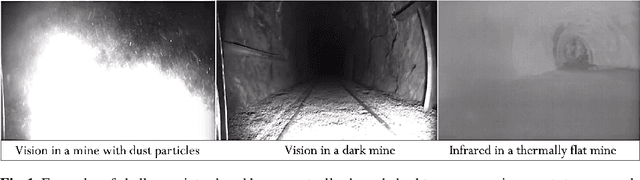

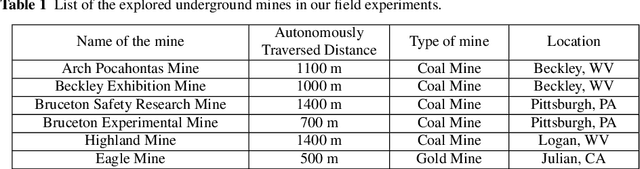

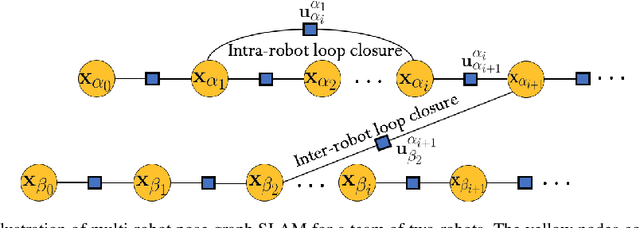

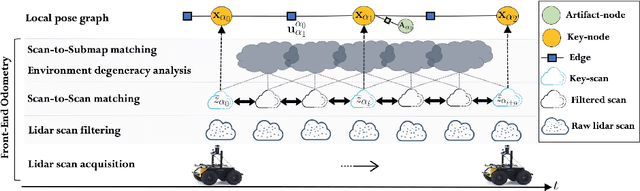

Abstract:Enabling fully autonomous robots capable of navigating and exploring large-scale, unknown and complex environments has been at the core of robotics research for several decades. A key requirement in autonomous exploration is building accurate and consistent maps of the unknown environment that can be used for reliable navigation. Loop closure detection, the ability to assert that a robot has returned to a previously visited location, is crucial for consistent mapping as it reduces the drift caused by error accumulation in the estimated robot trajectory. Moreover, in multi-robot systems, loop closures enable merging local maps obtained by a team of robots into a consistent global map of the environment. In this paper, we present a degeneracy-aware and drift-resilient loop closing method to improve place recognition and resolve 3D location ambiguities for simultaneous localization and mapping (SLAM) in GPS-denied, large-scale and perceptually-degraded environments. More specifically, we focus on SLAM in subterranean environments (e.g., lava tubes, caves, and mines) that represent examples of complex and ambiguous environments where current methods have inadequate performance.

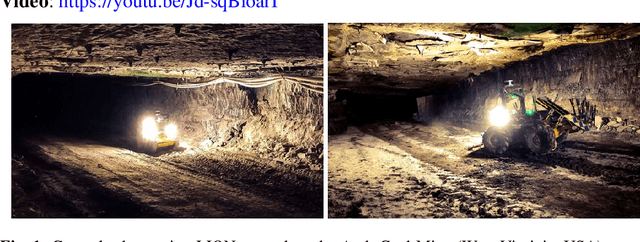

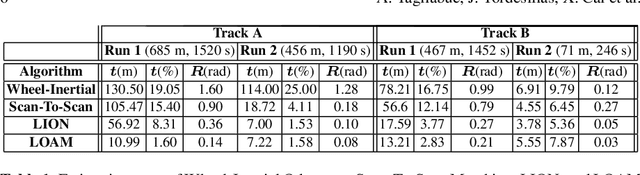

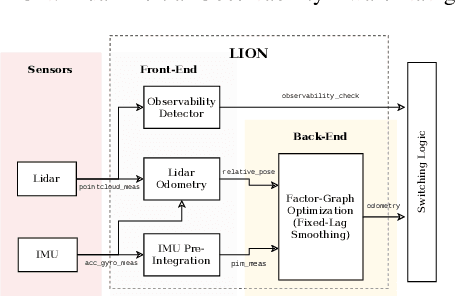

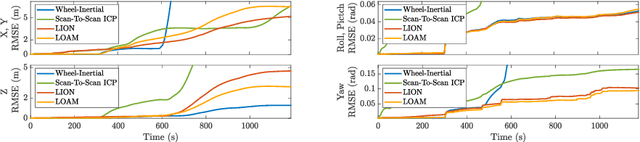

LION: Lidar-Inertial Observability-Aware Navigator for Vision-Denied Environments

Feb 05, 2021

Abstract:State estimation for robots navigating in GPS-denied and perceptually-degraded environments, such as underground tunnels, mines and planetary subsurface voids, remains challenging in robotics. Towards this goal, we present LION (Lidar-Inertial Observability-Aware Navigator), which is part of the state estimation framework developed by the team CoSTAR for the DARPA Subterranean Challenge, where the team achieved second and first places in the Tunnel and Urban circuits in August 2019 and February 2020, respectively. LION provides high-rate odometry estimates by fusing high-frequency inertial data from an IMU and low-rate relative pose estimates from a lidar via a fixed-lag sliding window smoother. LION does not require knowledge of relative positioning between lidar and IMU, as the extrinsic calibration is estimated online. In addition, LION is able to self-assess its performance using an observability metric that evaluates whether the pose estimate is geometrically ill-constrained. Odometry and confidence estimates are used by HeRO, a supervisory algorithm that provides robust estimates by switching between different odometry sources. In this paper we benchmark the performance of LION in perceptually-degraded subterranean environments, demonstrating its high technology readiness level for deployment in the field.

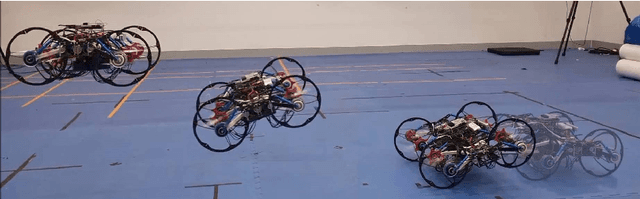

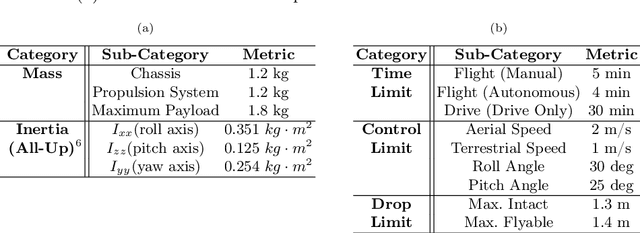

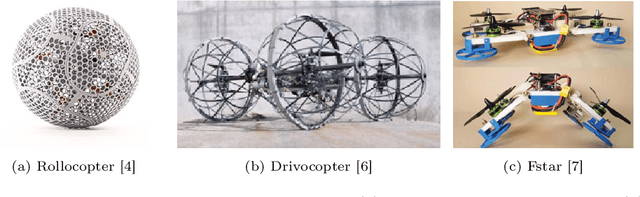

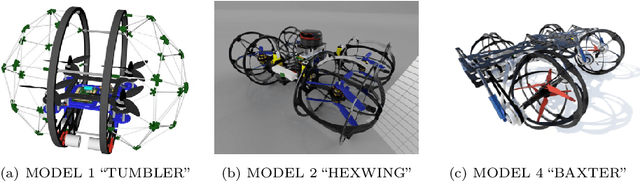

BAXTER: Bi-modal Aerial-Terrestrial Hybrid Vehicle for Long-endurance Versatile Mobility: Preprint Version

Feb 05, 2021

Abstract:Unmanned aerial vehicles are rapidly evolving within the field of robotics. However, their performance is often limited by payload capacity, operational time, and robustness to impact and collision. These limitations of aerial vehicles become more acute for missions in challenging environments such as subterranean structures which may require extended autonomous operation in confined spaces. While software solutions for aerial robots are developing rapidly, improvements to hardware are critical to applying advanced planners and algorithms in large and dangerous environments where the short range and high susceptibility to collisions of most modern aerial robots make applications in realistic subterranean missions infeasible. To provide such hardware capabilities, one needs to design and implement a hardware solution that takes into the account the Size, Weight, and Power (SWaP) constraints. This work focuses on providing a robust and versatile hybrid platform that improves payload capacity, operation time, endurance, and versatility. The Bi-modal Aerial and Terrestrial hybrid vehicle (BAXTER) is a solution that provides two modes of operation, aerial and terrestrial. BAXTER employs two novel hardware mechanisms: the M-Suspension and the Decoupled Transmission which together provide resilience during landing and crashes and efficient terrestrial operation. Extensive flight tests were conducted to characterize the vehicle's capabilities, including robustness and endurance. Additionally, we propose Agile Mode Transfer (AMT), a transition from aerial to terrestrial operation that seeks to minimize impulses during impact to the ground which is a quick and simple transition process that exploits BAXTER's resilience to impact.

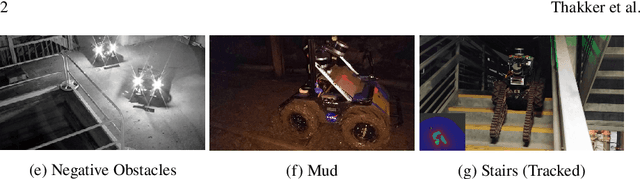

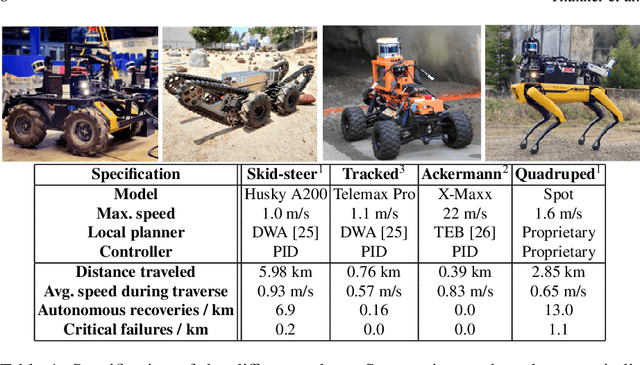

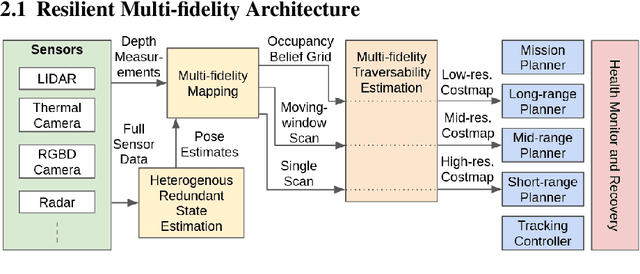

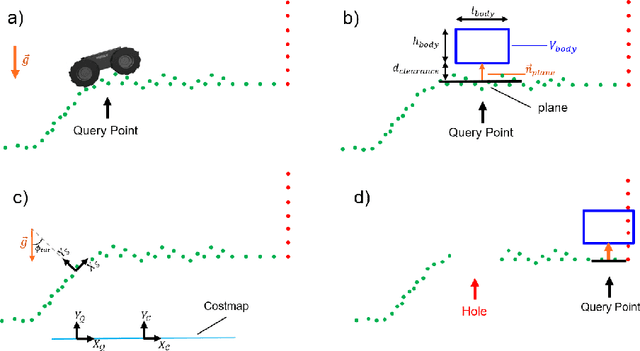

Autonomous Off-road Navigation over Extreme Terrains with Perceptually-challenging Conditions

Jan 26, 2021

Abstract:We propose a framework for resilient autonomous navigation in perceptually challenging unknown environments with mobility-stressing elements such as uneven surfaces with rocks and boulders, steep slopes, negative obstacles like cliffs and holes, and narrow passages. Environments are GPS-denied and perceptually-degraded with variable lighting from dark to lit and obscurants (dust, fog, smoke). Lack of prior maps and degraded communication eliminates the possibility of prior or off-board computation or operator intervention. This necessitates real-time on-board computation using noisy sensor data. To address these challenges, we propose a resilient architecture that exploits redundancy and heterogeneity in sensing modalities. Further resilience is achieved by triggering recovery behaviors upon failure. We propose a fast settling algorithm to generate robust multi-fidelity traversability estimates in real-time. The proposed approach was deployed on multiple physical systems including skid-steer and tracked robots, a high-speed RC car and legged robots, as a part of Team CoSTAR's effort to the DARPA Subterranean Challenge, where the team won 2nd and 1st place in the Tunnel and Urban Circuits, respectively.

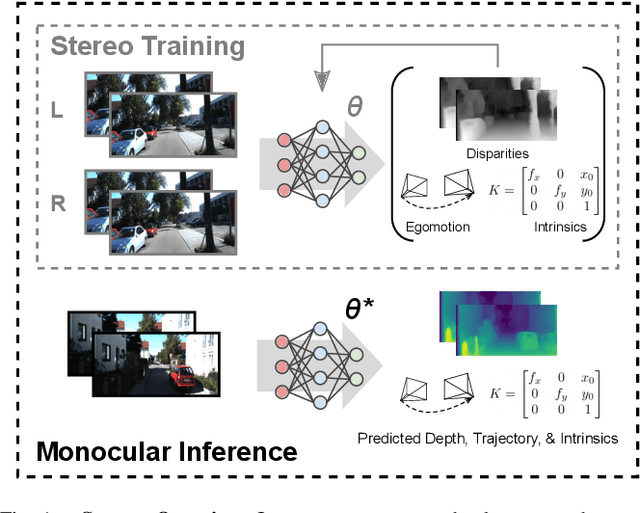

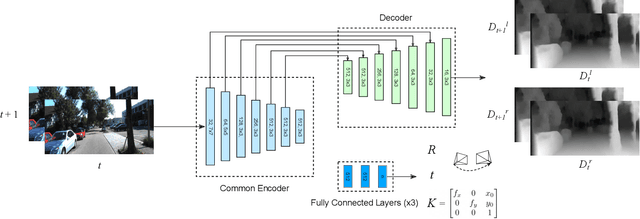

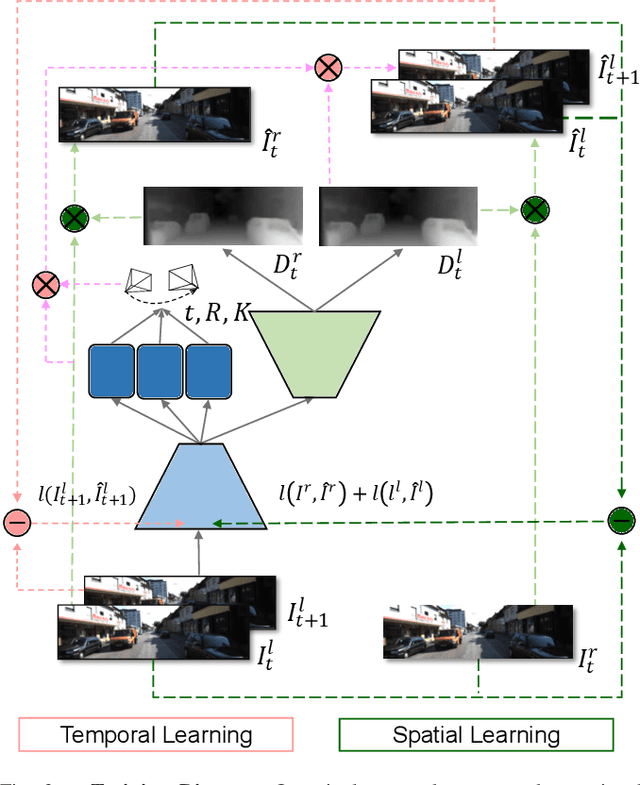

Unsupervised Monocular Depth Learning with Integrated Intrinsics and Spatio-Temporal Constraints

Nov 02, 2020

Abstract:Monocular depth inference has gained tremendous attention from researchers in recent years and remains as a promising replacement for expensive time-of-flight sensors, but issues with scale acquisition and implementation overhead still plague these systems. To this end, this work presents an unsupervised learning framework that is able to predict at-scale depth maps and egomotion, in addition to camera intrinsics, from a sequence of monocular images via a single network. Our method incorporates both spatial and temporal geometric constraints to resolve depth and pose scale factors, which are enforced within the supervisory reconstruction loss functions at training time. Only unlabeled stereo sequences are required for training the weights of our single-network architecture, which reduces overall implementation overhead as compared to previous methods. Our results demonstrate strong performance when compared to the current state-of-the-art on multiple sequences of the KITTI driving dataset.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge