Kamak Ebadi

An Addendum to NeBula: Towards Extending TEAM CoSTAR's Solution to Larger Scale Environments

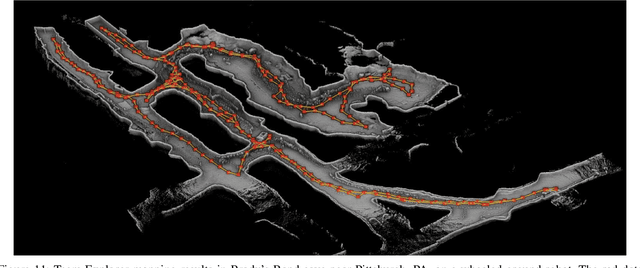

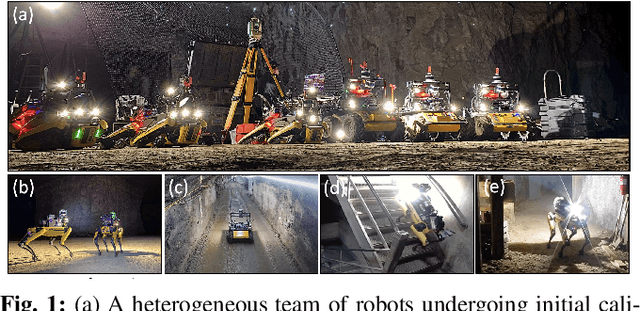

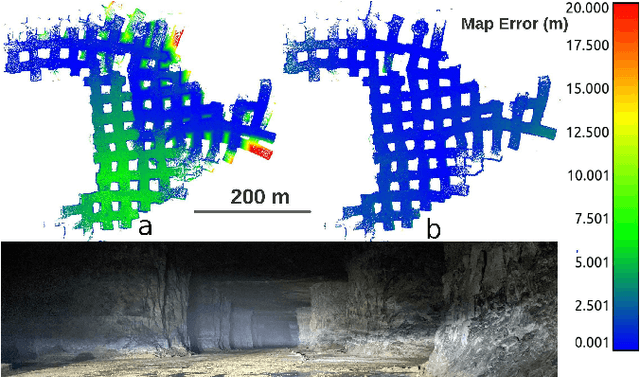

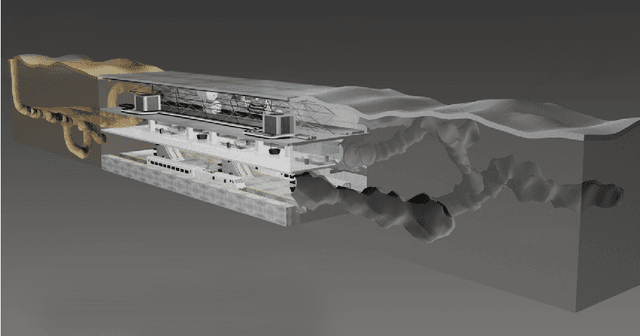

Apr 18, 2025Abstract:This paper presents an appendix to the original NeBula autonomy solution developed by the TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), participating in the DARPA Subterranean Challenge. Specifically, this paper presents extensions to NeBula's hardware, software, and algorithmic components that focus on increasing the range and scale of the exploration environment. From the algorithmic perspective, we discuss the following extensions to the original NeBula framework: (i) large-scale geometric and semantic environment mapping; (ii) an adaptive positioning system; (iii) probabilistic traversability analysis and local planning; (iv) large-scale POMDP-based global motion planning and exploration behavior; (v) large-scale networking and decentralized reasoning; (vi) communication-aware mission planning; and (vii) multi-modal ground-aerial exploration solutions. We demonstrate the application and deployment of the presented systems and solutions in various large-scale underground environments, including limestone mine exploration scenarios as well as deployment in the DARPA Subterranean challenge.

Present and Future of SLAM in Extreme Underground Environments

Aug 02, 2022

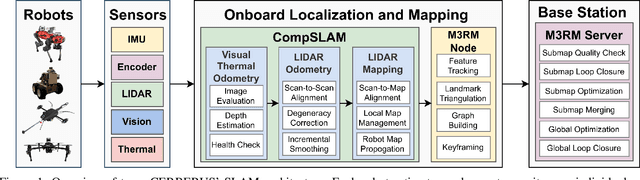

Abstract:This paper reports on the state of the art in underground SLAM by discussing different SLAM strategies and results across six teams that participated in the three-year-long SubT competition. In particular, the paper has four main goals. First, we review the algorithms, architectures, and systems adopted by the teams; particular emphasis is put on lidar-centric SLAM solutions (the go-to approach for virtually all teams in the competition), heterogeneous multi-robot operation (including both aerial and ground robots), and real-world underground operation (from the presence of obscurants to the need to handle tight computational constraints). We do not shy away from discussing the dirty details behind the different SubT SLAM systems, which are often omitted from technical papers. Second, we discuss the maturity of the field by highlighting what is possible with the current SLAM systems and what we believe is within reach with some good systems engineering. Third, we outline what we believe are fundamental open problems, that are likely to require further research to break through. Finally, we provide a list of open-source SLAM implementations and datasets that have been produced during the SubT challenge and related efforts, and constitute a useful resource for researchers and practitioners.

LAMP 2.0: A Robust Multi-Robot SLAM System for Operation in Challenging Large-Scale Underground Environments

May 31, 2022

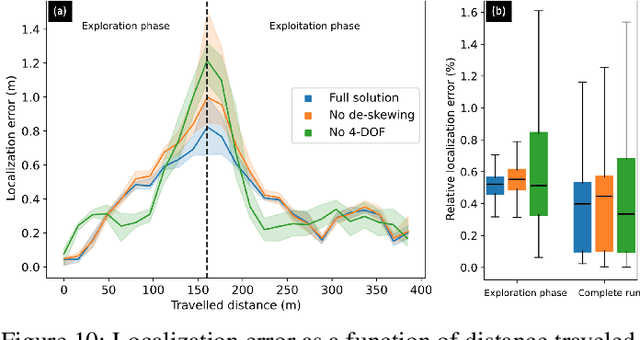

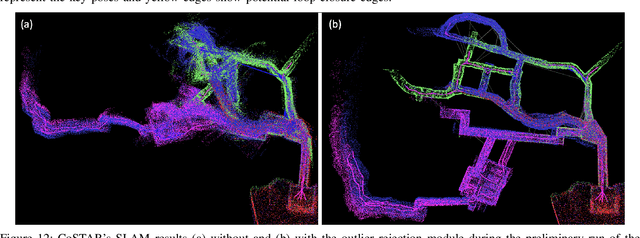

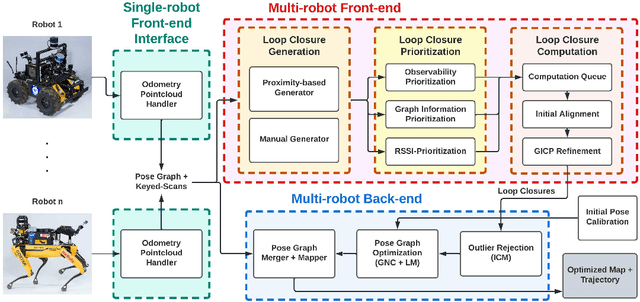

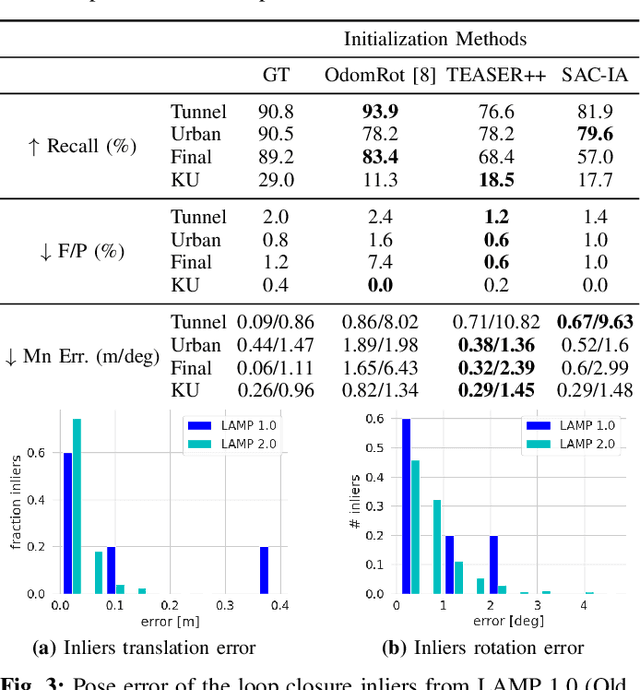

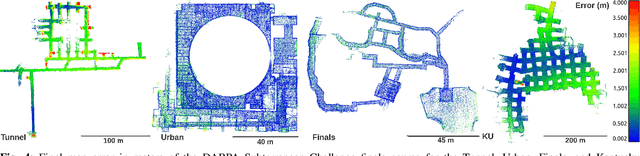

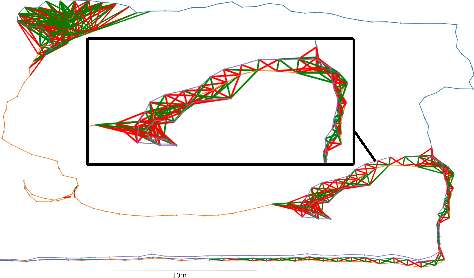

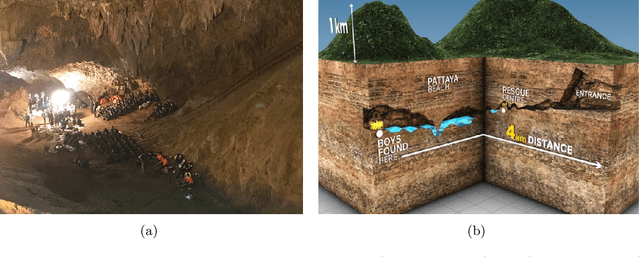

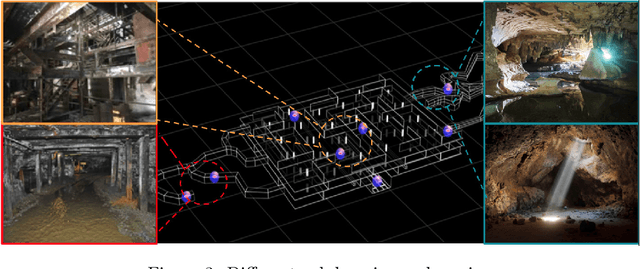

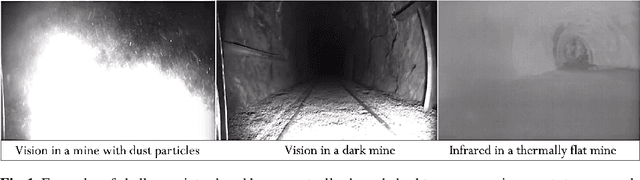

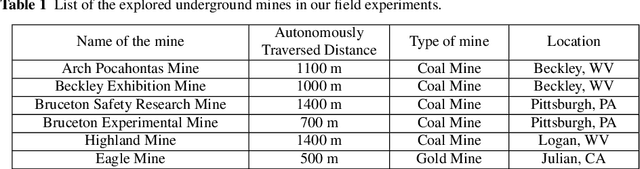

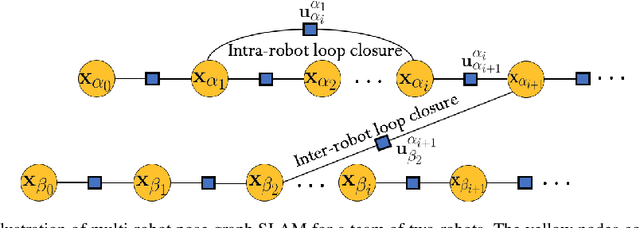

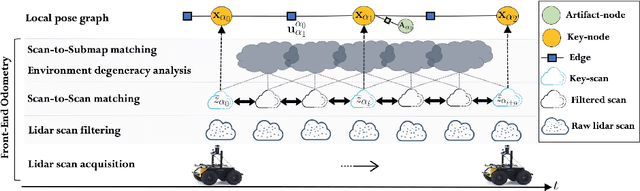

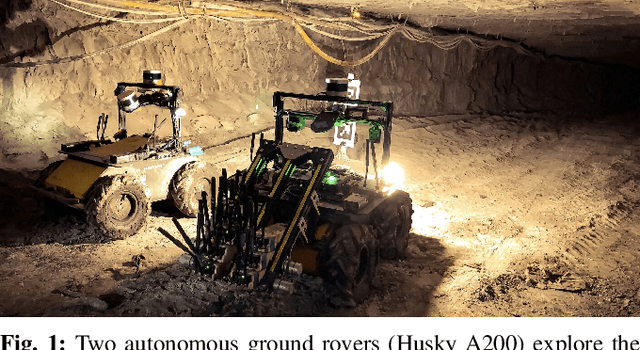

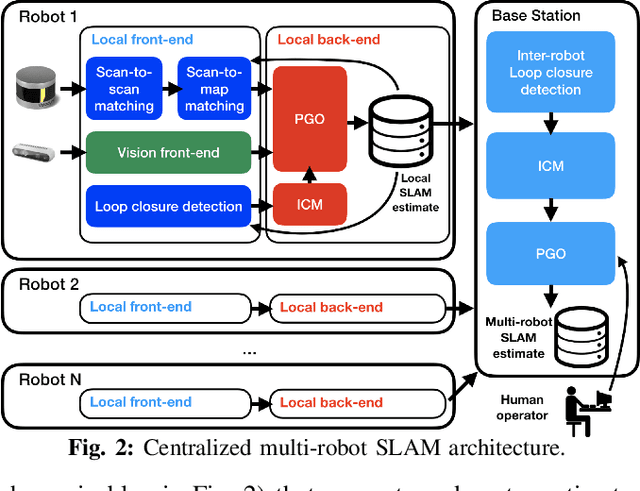

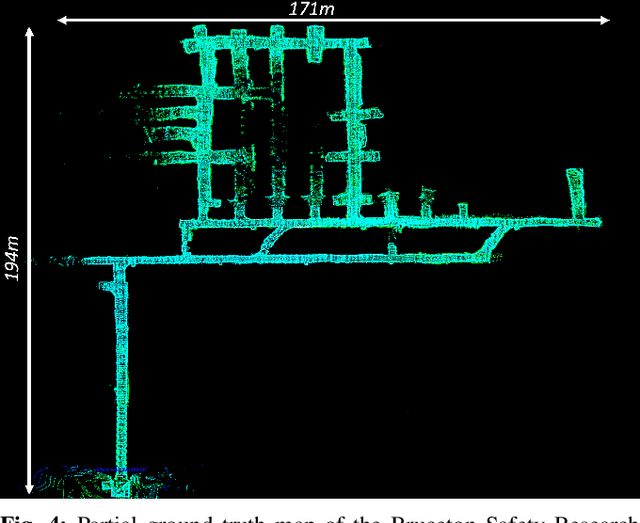

Abstract:Search and rescue with a team of heterogeneous mobile robots in unknown and large-scale underground environments requires high-precision localization and mapping. This crucial requirement is faced with many challenges in complex and perceptually-degraded subterranean environments, as the onboard perception system is required to operate in off-nominal conditions (poor visibility due to darkness and dust, rugged and muddy terrain, and the presence of self-similar and ambiguous scenes). In a disaster response scenario and in the absence of prior information about the environment, robots must rely on noisy sensor data and perform Simultaneous Localization and Mapping (SLAM) to build a 3D map of the environment and localize themselves and potential survivors. To that end, this paper reports on a multi-robot SLAM system developed by team CoSTAR in the context of the DARPA Subterranean Challenge. We extend our previous work, LAMP, by incorporating a single-robot front-end interface that is adaptable to different odometry sources and lidar configurations, a scalable multi-robot front-end to support inter- and intra-robot loop closure detection for large scale environments and multi-robot teams, and a robust back-end equipped with an outlier-resilient pose graph optimization based on Graduated Non-Convexity. We provide a detailed ablation study on the multi-robot front-end and back-end, and assess the overall system performance in challenging real-world datasets collected across mines, power plants, and caves in the United States. We also release our multi-robot back-end datasets (and the corresponding ground truth), which can serve as challenging benchmarks for large-scale underground SLAM.

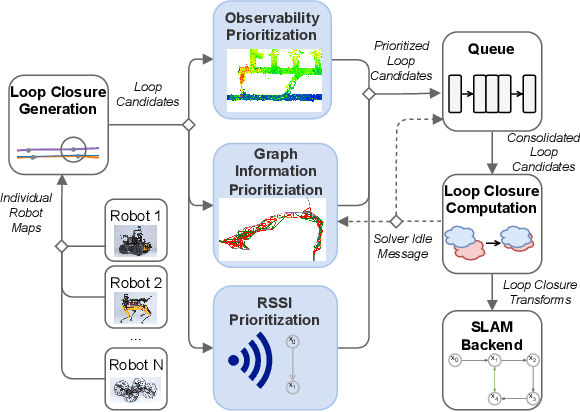

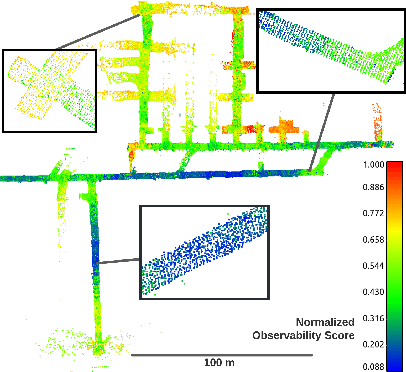

Loop Closure Prioritization for Efficient and Scalable Multi-Robot SLAM

May 24, 2022

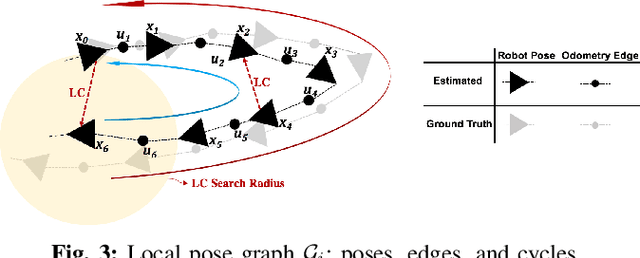

Abstract:Multi-robot SLAM systems in GPS-denied environments require loop closures to maintain a drift-free centralized map. With an increasing number of robots and size of the environment, checking and computing the transformation for all the loop closure candidates becomes computationally infeasible. In this work, we describe a loop closure module that is able to prioritize which loop closures to compute based on the underlying pose graph, the proximity to known beacons, and the characteristics of the point clouds. We validate this system in the context of the DARPA Subterranean Challenge and on numerous challenging underground datasets and demonstrate the ability of this system to generate and maintain a map with low error. We find that our proposed techniques are able to select effective loop closures which results in 51% mean reduction in median error when compared to an odometric solution and 75% mean reduction in median error when compared to a baseline version of this system with no prioritization. We also find our proposed system is able to find a lower error in the mission time of one hour when compared to a system that processes every possible loop closure in four and a half hours.

LOCUS 2.0: Robust and Computationally Efficient Lidar Odometry for Real-Time Underground 3D Mapping

May 24, 2022

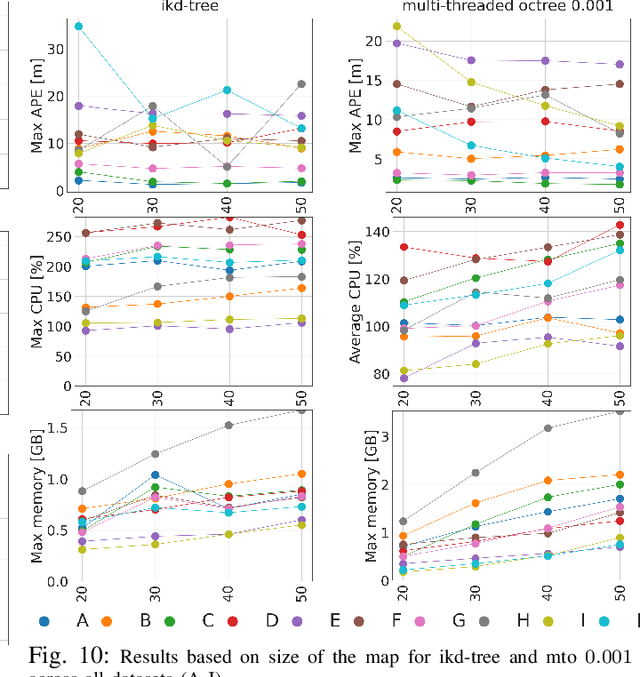

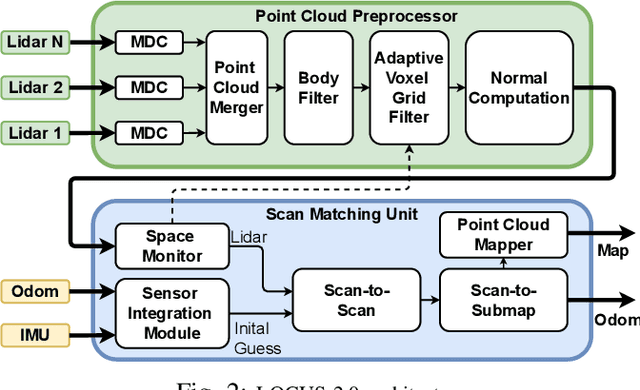

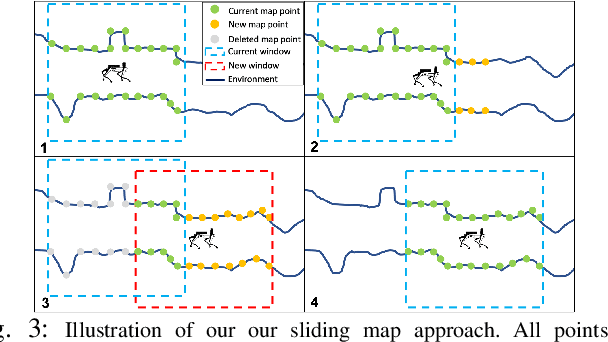

Abstract:Lidar odometry has attracted considerable attention as a robust localization method for autonomous robots operating in complex GNSS-denied environments. However, achieving reliable and efficient performance on heterogeneous platforms in large-scale environments remains an open challenge due to the limitations of onboard computation and memory resources needed for autonomous operation. In this work, we present LOCUS 2.0, a robust and computationally-efficient \lidar odometry system for real-time underground 3D mapping. LOCUS 2.0 includes a novel normals-based \morrell{Generalized Iterative Closest Point (GICP)} formulation that reduces the computation time of point cloud alignment, an adaptive voxel grid filter that maintains the desired computation load regardless of the environment's geometry, and a sliding-window map approach that bounds the memory consumption. The proposed approach is shown to be suitable to be deployed on heterogeneous robotic platforms involved in large-scale explorations under severe computation and memory constraints. We demonstrate LOCUS 2.0, a key element of the CoSTAR team's entry in the DARPA Subterranean Challenge, across various underground scenarios. We release LOCUS 2.0 as an open-source library and also release a \lidar-based odometry dataset in challenging and large-scale underground environments. The dataset features legged and wheeled platforms in multiple environments including fog, dust, darkness, and geometrically degenerate surroundings with a total of $11~h$ of operations and $16~km$ of distance traveled.

NeBula: Quest for Robotic Autonomy in Challenging Environments; TEAM CoSTAR at the DARPA Subterranean Challenge

Mar 28, 2021

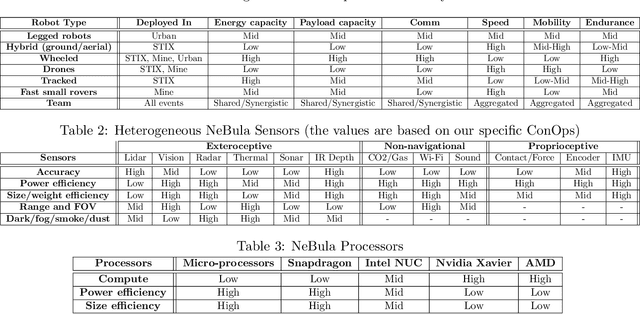

Abstract:This paper presents and discusses algorithms, hardware, and software architecture developed by the TEAM CoSTAR (Collaborative SubTerranean Autonomous Robots), competing in the DARPA Subterranean Challenge. Specifically, it presents the techniques utilized within the Tunnel (2019) and Urban (2020) competitions, where CoSTAR achieved 2nd and 1st place, respectively. We also discuss CoSTAR's demonstrations in Martian-analog surface and subsurface (lava tubes) exploration. The paper introduces our autonomy solution, referred to as NeBula (Networked Belief-aware Perceptual Autonomy). NeBula is an uncertainty-aware framework that aims at enabling resilient and modular autonomy solutions by performing reasoning and decision making in the belief space (space of probability distributions over the robot and world states). We discuss various components of the NeBula framework, including: (i) geometric and semantic environment mapping; (ii) a multi-modal positioning system; (iii) traversability analysis and local planning; (iv) global motion planning and exploration behavior; (i) risk-aware mission planning; (vi) networking and decentralized reasoning; and (vii) learning-enabled adaptation. We discuss the performance of NeBula on several robot types (e.g. wheeled, legged, flying), in various environments. We discuss the specific results and lessons learned from fielding this solution in the challenging courses of the DARPA Subterranean Challenge competition.

DARE-SLAM: Degeneracy-Aware and Resilient Loop Closing in Perceptually-Degraded Environments

Feb 09, 2021

Abstract:Enabling fully autonomous robots capable of navigating and exploring large-scale, unknown and complex environments has been at the core of robotics research for several decades. A key requirement in autonomous exploration is building accurate and consistent maps of the unknown environment that can be used for reliable navigation. Loop closure detection, the ability to assert that a robot has returned to a previously visited location, is crucial for consistent mapping as it reduces the drift caused by error accumulation in the estimated robot trajectory. Moreover, in multi-robot systems, loop closures enable merging local maps obtained by a team of robots into a consistent global map of the environment. In this paper, we present a degeneracy-aware and drift-resilient loop closing method to improve place recognition and resolve 3D location ambiguities for simultaneous localization and mapping (SLAM) in GPS-denied, large-scale and perceptually-degraded environments. More specifically, we focus on SLAM in subterranean environments (e.g., lava tubes, caves, and mines) that represent examples of complex and ambiguous environments where current methods have inadequate performance.

LAMP: Large-Scale Autonomous Mapping and Positioning for Exploration of Perceptually-Degraded Subterranean Environments

Mar 05, 2020

Abstract:Simultaneous Localization and Mapping (SLAM) in large-scale, unknown, and complex subterranean environments is a challenging problem. Sensors must operate in off-nominal conditions; uneven and slippery terrains make wheel odometry inaccurate, while long corridors without salient features make exteroceptive sensing ambiguous and prone to drift; finally, spurious loop closures that are frequent in environments with repetitive appearance, such as tunnels and mines, could result in a significant distortion of the entire map. These challenges are in stark contrast with the need to build highly-accurate 3D maps to support a wide variety of applications, ranging from disaster response to the exploration of underground extraterrestrial worlds. This paper reports on the implementation and testing of a lidar-based multi-robot SLAM system developed in the context of the DARPA Subterranean Challenge. We present a system architecture to enhance subterranean operation, including an accurate lidar-based front-end, and a flexible and robust back-end that automatically rejects outlying loop closures. We present an extensive evaluation in large-scale, challenging subterranean environments, including the results obtained in the Tunnel Circuit of the DARPA Subterranean Challenge. Finally, we discuss potential improvements, limitations of the state of the art, and future research directions.

Smoke Sky -- Exploring New Frontiers of Unmanned Aerial Systems for Wildland Fire Science and Applications

Nov 12, 2019

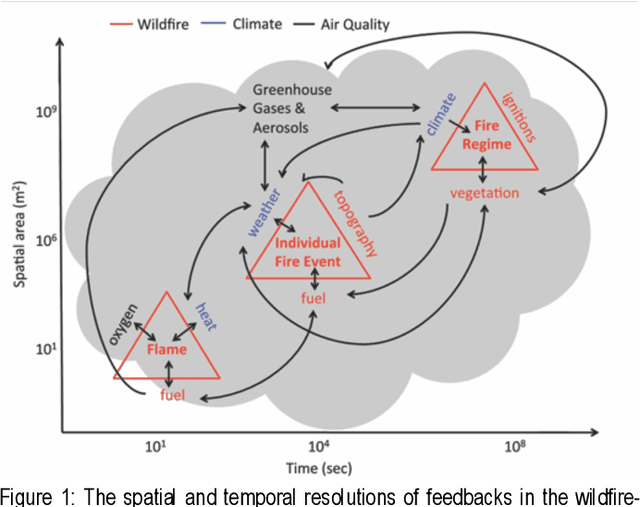

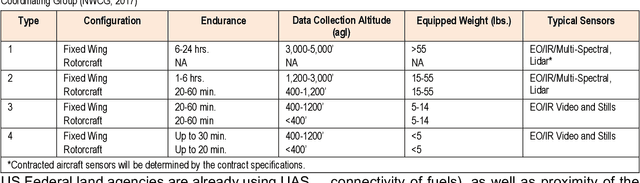

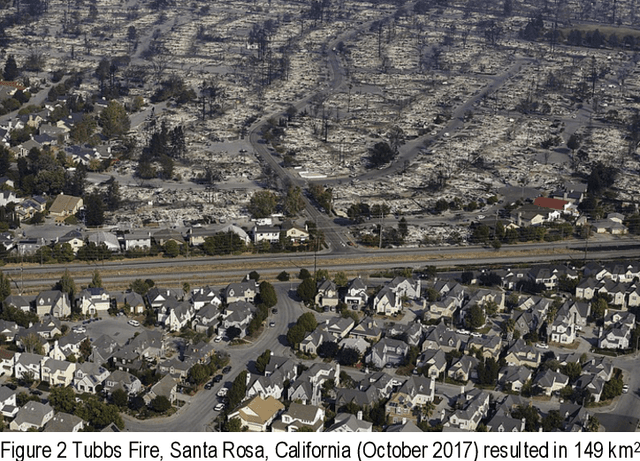

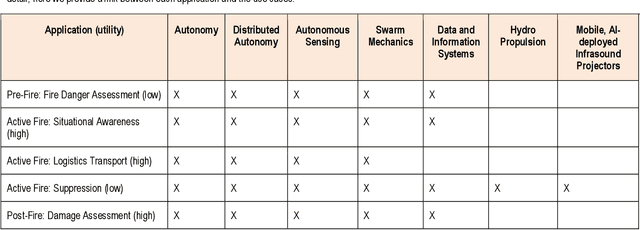

Abstract:Wildfire has had increasing impacts on society as the climate changes and the wildland urban interface grows. As such, there is a demand for innovative solutions to help manage fire. Managing wildfire can include proactive fire management such as prescribed burning within constrained areas or advancements for reactive fire management (e.g., fire suppression). Because of the growing societal impact, the JPL BlueSky program sought to assess the current state of fire management and technology and determine areas with high return on investment. To accomplish this, we met with the national interagency Unmanned Aerial System (UAS) Advisory Group (UASAG) and with leading technology transfer experts for fire science and management applications. We provide an overview of the current state as well as an analysis of the impact, maturity and feasibility of integrating different technologies that can be developed by JPL. Based on the findings, the highest return on investment technologies for fire management are first to develop single micro-aerial vehicle (MAV) autonomy, autonomous sensing over fire, and the associated data and information system for active fire local environment mapping. Once this is completed for a single MAV, expanding the work to include many in a swarm would require further investment of distributed MAV autonomy and MAV swarm mechanics, but could greatly expand the breadth of application over large fires. Important to investing in these technologies will be in developing collaborations with the key influencers and champions for using UAS technology in fire management.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge