Jonathan P. How

MIT

Controlled Flight of an Insect-Scale Flapping-Wing Robot via Integrated Onboard Sensing and Computation

Feb 09, 2026

Abstract:Aerial insects can effortlessly navigate dense vegetation, whereas similarly sized aerial robots typically depend on offboard sensors and computation to maintain stable flight. This disparity restricts insect-scale robots to operation within motion capture environments, substantially limiting their applicability to tasks such as search-and-rescue and precision agriculture. In this work, we present a 1.29-gram aerial robot capable of hovering and tracking trajectories with solely onboard sensing and computation. The combination of a sensor suite, estimators, and a low-level controller achieved centimeter-scale positional flight accuracy. Additionally, we developed a hierarchical controller in which a human operator provides high-level commands to direct the robot's motion. In a 30-second flight experiment conducted outside a motion capture system, the robot avoided obstacles and ultimately landed on a sunflower. This level of sensing and computational autonomy represents a significant advancement for the aerial microrobotics community, further opening opportunities to explore onboard planning and power autonomy.

Navigation Around Unknown Space Objects Using Visible-Thermal Image Fusion

Dec 13, 2025

Abstract:As the popularity of on-orbit operations grows, so does the need for precise navigation around unknown resident space objects (RSOs) such as other spacecraft, orbital debris, and asteroids. The use of Simultaneous Localization and Mapping (SLAM) algorithms is often studied as a method to map out the surface of an RSO and find the inspector's relative pose using a lidar or conventional camera. However, conventional cameras struggle during eclipse or shadowed periods, and lidar, though robust to lighting conditions, tends to be heavier, bulkier, and more power-intensive. Thermal-infrared cameras can track the target RSO throughout difficult illumination conditions without these limitations. While useful, thermal-infrared imagery lacks the resolution and feature-richness of visible cameras. In this work, images of a target satellite in low Earth orbit are photo-realistically simulated in both visible and thermal-infrared bands. Pixel-level fusion methods are used to create visible/thermal-infrared composites that leverage the best aspects of each camera. Navigation errors from a monocular SLAM algorithm are compared between visible, thermal-infrared, and fused imagery in various lighting and trajectories. Fused imagery yields substantially improved navigation performance over visible-only and thermal-only methods.
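
As a rough illustration of what pixel-level fusion means here, the sketch below blends two co-registered grayscale images (visible and thermal-infrared) with a per-pixel weighted average. The abstract does not say which fusion methods were used, so the weighted-average rule, the function name `fuse_weighted`, and the `alpha` weight are all assumptions chosen for simplicity.

```python
def fuse_weighted(visible, thermal, alpha=0.5):
    """Blend two same-sized grayscale images pixel by pixel.

    visible, thermal: lists of rows, each row a list of floats in [0, 1].
    alpha: hypothetical weight on the visible image (1 - alpha on thermal).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(visible) != len(thermal) or any(
        len(rv) != len(rt) for rv, rt in zip(visible, thermal)
    ):
        raise ValueError("images must have identical dimensions")
    # Each output pixel is a convex combination of the two input pixels.
    return [
        [alpha * v + (1.0 - alpha) * t for v, t in zip(rv, rt)]
        for rv, rt in zip(visible, thermal)
    ]


if __name__ == "__main__":
    visible = [[0.0, 1.0]]   # toy 1x2 visible image
    thermal = [[1.0, 1.0]]   # toy 1x2 thermal image
    print(fuse_weighted(visible, thermal, alpha=0.25))
```

A fixed global weight is the simplest possible choice. Practical fusion schemes often vary the weight per pixel or per frequency band, for example to favor thermal data in shadowed regions.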

MIGHTY: Hermite Spline-based Efficient Trajectory Planning

Nov 13, 2025

Abstract:Hard-constraint trajectory planners often rely on commercial solvers and demand substantial computational resources. Existing soft-constraint methods achieve faster computation, but either (1) decouple spatial and temporal optimization or (2) restrict the search space. To overcome these limitations, we introduce MIGHTY, a Hermite spline-based planner that performs spatiotemporal optimization while fully leveraging the continuous search space of a spline. In simulation, MIGHTY achieves a 9.3% reduction in computation time and a 13.1% reduction in travel time over state-of-the-art baselines, with a 100% success rate. In hardware, MIGHTY completes multiple high-speed flights up to 6.7 m/s in a cluttered static environment and long-duration flights with dynamically added obstacles.
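
To make the Hermite spline idea concrete, here is a minimal sketch of evaluating one cubic Hermite segment defined by its endpoint positions, endpoint velocities, and duration. The abstract does not state the spline degree or parameterization MIGHTY uses, so the cubic form and the name `hermite_segment` are assumptions for illustration only.

```python
def hermite_segment(p0, v0, p1, v1, T, t):
    """Evaluate a 1-D cubic Hermite segment at time t in [0, T].

    p0, p1: positions at the start and end of the segment.
    v0, v1: velocities at the start and end of the segment.
    T: segment duration (must be positive).
    """
    if T <= 0:
        raise ValueError("segment duration T must be positive")
    s = t / T  # normalized time in [0, 1]
    # Standard cubic Hermite basis functions.
    h00 = 2 * s**3 - 3 * s**2 + 1
    h10 = s**3 - 2 * s**2 + s
    h01 = -2 * s**3 + 3 * s**2
    h11 = s**3 - s**2
    # Velocities are scaled by T because the basis is defined on unit time.
    return h00 * p0 + h10 * T * v0 + h01 * p1 + h11 * T * v1


if __name__ == "__main__":
    # Sample a segment from position 0 (velocity 1) to position 2 (velocity 0).
    for k in range(5):
        t = 2.0 * k / 4
        print(t, hermite_segment(0.0, 1.0, 2.0, 0.0, T=2.0, t=t))
```

Because the duration `T` appears directly in the expression alongside the endpoint states, an optimizer can in principle adjust positions, velocities, and timing together, which is the general sense in which a spatial and temporal search can be coupled.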

Efficient Probabilistic Planning with Maximum-Coverage Distributionally Robust Backward Reachable Trees

Oct 06, 2025

Abstract:This paper presents a new multi-query motion planning algorithm for linear Gaussian systems with the goal of reaching a Euclidean ball with high probability. We develop a new formulation for ball-shaped ambiguity sets of Gaussian distributions and leverage it to develop a distributionally robust belief roadmap construction algorithm. This algorithm synthesizes robust controllers which are certified to be safe for maximal size ball-shaped ambiguity sets of Gaussian distributions. Our algorithm achieves better coverage than the maximal coverage algorithm for planning over Gaussian distributions [1], and we identify mild conditions under which our algorithm achieves strictly better coverage. For the special case of no process noise or state constraints, we formally prove that our algorithm achieves maximal coverage. In addition, we present a second multi-query motion planning algorithm for linear Gaussian systems with the goal of reaching a region parameterized by the Minkowski sum of an ellipsoid and a Euclidean ball with high probability. This algorithm plans over ellipsoidal sets of maximal size ball-shaped ambiguity sets of Gaussian distributions, and provably achieves equal or better coverage than the best-known algorithm for planning over ellipsoidal ambiguity sets of Gaussian distributions [2]. We demonstrate the efficacy of both methods in a wide range of conditions via extensive simulation experiments.

Uncertainty-Aware Multi-Robot Task Allocation With Strongly Coupled Inter-Robot Rewards

Sep 26, 2025

Abstract:This paper proposes a task allocation algorithm for teams of heterogeneous robots in environments with uncertain task requirements. We model these requirements as probability distributions over capabilities and use this model to allocate tasks such that robots with complementary skills naturally position near uncertain tasks, proactively mitigating task failures without wasting resources. We introduce a market-based approach that optimizes the joint team objective while explicitly capturing coupled rewards between robots, offering a polynomial-time solution in decentralized settings with strict communication assumptions. Comparative experiments against benchmark algorithms demonstrate the effectiveness of our approach and highlight the challenges of incorporating coupled rewards in a decentralized formulation.

Distribution Estimation for Global Data Association via Approximate Bayesian Inference

Sep 19, 2025

Abstract:Global data association is an essential prerequisite for robot operation in environments seen at different times or by different robots. Repetitive or symmetric data creates significant challenges for existing methods, which typically rely on maximum likelihood estimation or maximum consensus to produce a single set of associations. However, in ambiguous scenarios, the distribution of solutions to global data association problems is often highly multimodal, and such single-solution approaches frequently fail. In this work, we introduce a data association framework that leverages approximate Bayesian inference to capture multiple solution modes to the data association problem, thereby avoiding premature commitment to a single solution under ambiguity. Our approach represents hypothetical solutions as particles that evolve according to a deterministic or randomized update rule to cover the modes of the underlying solution distribution. Furthermore, we show that our method can incorporate optimization constraints imposed by the data association formulation and directly benefit from GPU-parallelized optimization. Extensive simulated and real-world experiments with highly ambiguous data show that our method correctly estimates the distribution over transformations when registering point clouds or object maps.

GRAND-SLAM: Local Optimization for Globally Consistent Large-Scale Multi-Agent Gaussian SLAM

Jun 23, 2025

Abstract:3D Gaussian splatting has emerged as an expressive scene representation for RGB-D visual SLAM, but its application to large-scale, multi-agent outdoor environments remains unexplored. Multi-agent Gaussian SLAM is a promising approach to rapid exploration and reconstruction of environments, offering scalable environment representations, but existing approaches are limited to small-scale, indoor environments. To that end, we propose Gaussian Reconstruction via Multi-Agent Dense SLAM, or GRAND-SLAM, a collaborative Gaussian splatting SLAM method that integrates i) an implicit tracking module based on local optimization over submaps and ii) an approach to inter- and intra-robot loop closure integrated into a pose-graph optimization framework. Experiments show that GRAND-SLAM provides state-of-the-art tracking performance and 28% higher PSNR than existing methods on the Replica indoor dataset, as well as 91% lower multi-agent tracking error and improved rendering over existing multi-agent methods on the large-scale, outdoor Kimera-Multi dataset.
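
For readers unfamiliar with the rendering metric cited above, PSNR (peak signal-to-noise ratio) is defined as 10·log10(MAX² / MSE), where MSE is the mean squared error between a rendered image and its reference. The sketch below computes it for flat lists of pixel intensities; the function name `psnr` and the toy inputs are illustrative assumptions and are not taken from the paper's evaluation code.

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(rendered) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value**2 / mse)


if __name__ == "__main__":
    reference = [0.0, 1.0]
    rendered = [0.1, 0.9]
    print(f"PSNR: {psnr(reference, rendered):.2f} dB")
```

Higher PSNR means the rendering is closer to the reference. Since the scale is logarithmic, a percentage gain in PSNR does not correspond to the same percentage reduction in pixel error.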

Language-Grounded Hierarchical Planning and Execution with Multi-Robot 3D Scene Graphs

Jun 09, 2025

Abstract:In this paper, we introduce a multi-robot system that integrates mapping, localization, and task and motion planning (TAMP) enabled by 3D scene graphs to execute complex instructions expressed in natural language. Our system builds a shared 3D scene graph incorporating an open-set object-based map, which is leveraged for multi-robot 3D scene graph fusion. This representation supports real-time, view-invariant relocalization (via the object-based map) and planning (via the 3D scene graph), allowing a team of robots to reason about their surroundings and execute complex tasks. Additionally, we introduce a planning approach that translates operator intent into Planning Domain Definition Language (PDDL) goals using a Large Language Model (LLM) by leveraging context from the shared 3D scene graph and robot capabilities. We provide an experimental assessment of the performance of our system on real-world tasks in large-scale, outdoor environments.

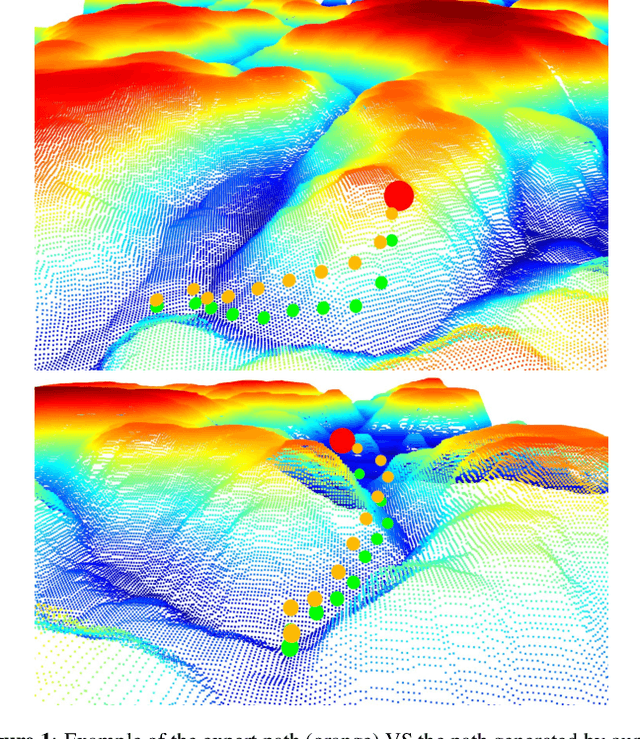

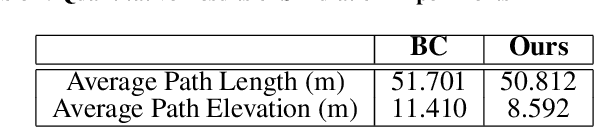

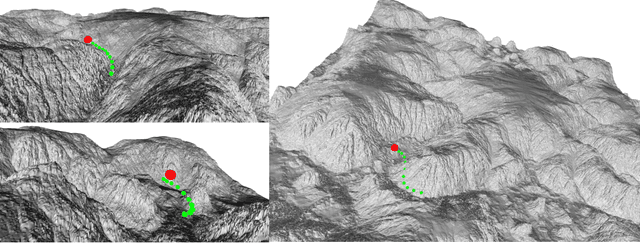

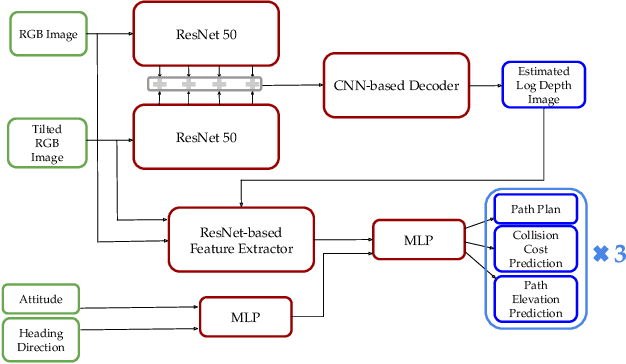

Terrain-aware Low Altitude Path Planning

May 11, 2025

Abstract:In this paper, we study the problem of generating low altitude path plans for nap-of-the-earth (NOE) flight in real time with only RGB images from onboard cameras and the vehicle pose. We propose a novel training method that combines behavior cloning and self-supervised learning, enabling the learned policy to outperform a policy trained with the standard behavior cloning approach on this task. Simulation studies are performed on a custom canyon terrain.

DYNUS: Uncertainty-aware Trajectory Planner in Dynamic Unknown Environments

Apr 24, 2025

Abstract:This paper introduces DYNUS, an uncertainty-aware trajectory planner designed for dynamic unknown environments. Operating in such settings presents many challenges -- most notably, because the agent cannot predict the ground-truth future paths of obstacles, a previously planned trajectory can become unsafe at any moment, requiring rapid replanning to avoid collisions. Recently developed planners have used soft-constraint approaches to achieve the necessary fast computation times; however, these methods do not guarantee collision-free paths even with static obstacles. In contrast, hard-constraint methods ensure collision-free safety, but typically have longer computation times. To address these issues, we propose three key contributions. First, the DYNUS Global Planner (DGP) and Temporal Safe Corridor Generation operate in spatio-temporal space and handle both static and dynamic obstacles in the 3D environment. Second, the Safe Planning Framework leverages a combination of exploratory, safe, and contingency trajectories to flexibly re-route when potential future collisions with dynamic obstacles are detected. Finally, the Fast Hard-Constraint Local Trajectory Formulation uses a variable elimination approach to reduce the problem size and enable faster computation by pre-computing dependencies between free and dependent variables while still ensuring collision-free trajectories. We evaluated DYNUS in a variety of simulations, including dense forests, confined office spaces, cave systems, and dynamic environments. Our experiments show that DYNUS achieves a success rate of 100% and travel times that are approximately 25.0% faster than state-of-the-art methods. We also evaluated DYNUS on multiple platforms -- a quadrotor, a wheeled robot, and a quadruped -- in both simulation and hardware experiments.
