AirSim

Papers and Code

Visual Marker Search for Autonomous Drone Landing in Diverse Urban Environments

Jan 16, 2026

Marker-based landing is widely used in drone delivery and return-to-base systems for its simplicity and reliability. However, most approaches assume idealized landing site visibility and sensor performance, limiting robustness in complex urban settings. We present a simulation-based evaluation suite on the AirSim platform with systematically varied urban layouts, lighting, and weather to replicate realistic operational diversity. Using onboard camera sensors (RGB for marker detection and depth for obstacle avoidance), we benchmark two heuristic coverage patterns and a reinforcement learning-based agent, analyzing how exploration strategy and scene complexity affect success rate, path efficiency, and robustness. Results underscore the need to evaluate marker-based autonomous landing under diverse, sensor-relevant conditions to guide the development of reliable aerial navigation systems.
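The abstract above does not say which two heuristic coverage patterns are benchmarked. As an illustration only, the sketch below generates waypoints for a lawnmower (boustrophedon) sweep, one common coverage heuristic for this kind of search. The function name, the rectangular search area, and all parameters are hypothetical and are not taken from the paper.

```python
import math
from typing import List, Tuple

Waypoint = Tuple[float, float, float]  # (x, y, z) in metres


def lawnmower_waypoints(width: float, depth: float,
                        spacing: float, altitude: float) -> List[Waypoint]:
    """Return waypoints for a back-and-forth sweep over a rectangle.

    Hypothetical helper: the rectangle spans x in [0, width] and
    y in [0, depth]. Rows are `spacing` apart, so the last row may
    fall short of the far edge if depth is not a multiple of spacing.
    """
    if width <= 0 or depth <= 0 or spacing <= 0:
        raise ValueError("width, depth and spacing must be positive")

    # Number of sweep rows that fit inside the rectangle.
    rows = math.floor(depth / spacing + 1e-9) + 1

    waypoints: List[Waypoint] = []
    for i in range(rows):
        y = i * spacing
        # Alternate sweep direction on every row.
        xs = (0.0, width) if i % 2 == 0 else (width, 0.0)
        for x in xs:
            waypoints.append((x, y, altitude))
    return waypoints


if __name__ == "__main__":
    # Assumption: a NED-style frame, where a negative z is above ground.
    for wp in lawnmower_waypoints(10.0, 4.0, 2.0, -5.0):
        print(wp)
```

In a sweep like this, the row spacing would normally be chosen from the camera footprint at the search altitude, so that adjacent rows overlap enough for the marker detector to see every ground point at least once.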

Large Language Models to Enhance Multi-task Drone Operations in Simulated Environments

Jan 13, 2026

Benefiting from the rapid advancements in large language models (LLMs), human-drone interaction has reached unprecedented opportunities. In this paper, we propose a method that integrates a fine-tuned CodeT5 model with the Unreal Engine-based AirSim drone simulator to efficiently execute multi-task operations using natural language commands. This approach enables users to interact with simulated drones through prompts or command descriptions, allowing them to easily access and control the drone's status, significantly lowering the operational threshold. In the AirSim simulator, we can flexibly construct visually realistic dynamic environments to simulate drone applications in complex scenarios. By combining a large dataset of (natural language, program code) command-execution pairs generated by ChatGPT with developer-written drone code as training data, we fine-tune CodeT5 to achieve automated translation from natural language to executable code for drone tasks. Experimental results demonstrate that the proposed method exhibits superior task execution efficiency and command understanding capabilities in simulated environments. In the future, we plan to extend the model functionality in a modular manner, enhancing its adaptability to complex scenarios and driving the application of drone technologies in real-world environments.

SynthSoM-Twin: A Multi-Modal Sensing-Communication Digital-Twin Dataset for Sim2Real Transfer via Synesthesia of Machines

Nov 14, 2025

This paper constructs a novel multi-modal sensing-communication digital-twin dataset, named SynthSoM-Twin, which is spatio-temporally consistent with the real world, for Sim2Real transfer via Synesthesia of Machines (SoM). To construct the SynthSoM-Twin dataset, we propose a new framework that can extend the quantity and fill in the missing modalities of existing real-world multi-modal sensing-communication datasets. Specifically, we exploit multi-modal sensing-assisted object detection and tracking algorithms to ensure spatio-temporal consistency of static objects and dynamic objects across real-world and simulation environments. The constructed scenario is imported into three high-fidelity simulators, i.e., AirSim, WaveFarer, and Sionna RT. The SynthSoM-Twin dataset contains spatio-temporally consistent data with the real world, including 66,868 snapshots of synthetic RGB images, depth maps, light detection and ranging (LiDAR) point clouds, millimeter wave (mmWave) radar point clouds, and large-scale and small-scale channel fading data. To validate the utility of the SynthSoM-Twin dataset, we conduct a Sim2Real transfer investigation by implementing two cross-modal downstream tasks via cross-modal generative models (CMGMs), i.e., a cross-modal channel generation model and a multi-modal sensing-assisted beam generation model. Based on the downstream tasks, we explore the threshold of real-world data injection that can achieve a decent trade-off between real-world data usage and models' practical performance. Experimental results show that a model trained on the SynthSoM-Twin dataset achieves decent practical performance, and the injection of real-world data further facilitates Sim2Real transferability. Based on the SynthSoM-Twin dataset, injecting less than 15% of real-world data can achieve similar and even better performance compared to a model trained on all the real-world data only.

SkyVLN: Vision-and-Language Navigation and NMPC Control for UAVs in Urban Environments

Jul 09, 2025

Unmanned Aerial Vehicles (UAVs) have emerged as versatile tools across various sectors, driven by their mobility and adaptability. This paper introduces SkyVLN, a novel framework integrating vision-and-language navigation (VLN) with Nonlinear Model Predictive Control (NMPC) to enhance UAV autonomy in complex urban environments. Unlike traditional navigation methods, SkyVLN leverages Large Language Models (LLMs) to interpret natural language instructions and visual observations, enabling UAVs to navigate through dynamic 3D spaces with improved accuracy and robustness. We present a multimodal navigation agent equipped with a fine-grained spatial verbalizer and a history path memory mechanism. These components allow the UAV to disambiguate spatial contexts, handle ambiguous instructions, and backtrack when necessary. The framework also incorporates an NMPC module for dynamic obstacle avoidance, ensuring precise trajectory tracking and collision prevention. To validate our approach, we developed a high-fidelity 3D urban simulation environment using AirSim, featuring realistic imagery and dynamic urban elements. Extensive experiments demonstrate that SkyVLN significantly improves navigation success rates and efficiency, particularly in new and unseen environments.

Predictive Control over LAWN: Joint Trajectory Design and Resource Allocation

Jul 03, 2025

Low-altitude wireless networks (LAWNs) have been envisioned as flexible and transformative platforms for enabling delay-sensitive control applications in Internet of Things (IoT) systems. In this work, we investigate the real-time wireless control over a LAWN system, where an aerial drone is employed to serve multiple mobile automated guided vehicles (AGVs) via finite blocklength (FBL) transmission. Toward this end, we adopt the model predictive control (MPC) to ensure accurate trajectory tracking, while we analyze the communication reliability using the outage probability. Subsequently, we formulate an optimization problem to jointly determine control policy, transmit power allocation, and drone trajectory by accounting for the maximum travel distance and control input constraints. To address the resultant non-convex optimization problem, we first derive the closed-form expression of the outage probability under FBL transmission. Based on this, we reformulate the original problem as a quadratic programming (QP) problem, followed by developing an alternating optimization (AO) framework. Specifically, we employ the projected gradient descent (PGD) method and the successive convex approximation (SCA) technique to achieve computationally efficient sub-optimal solutions. Furthermore, we thoroughly analyze the convergence and computational complexity of the proposed algorithm. Extensive simulations and AirSim-based experiments are conducted to validate the superiority of our proposed approach compared to the baseline schemes in terms of control performance.

UAV Control with Vision-based Hand Gesture Recognition over Edge-Computing

May 22, 2025

Gesture recognition presents a promising avenue for interfacing with unmanned aerial vehicles (UAVs) due to its intuitive nature and potential for precise interaction. This research conducts a comprehensive comparative analysis of vision-based hand gesture detection methodologies tailored for UAV control. Existing gesture recognition approaches involving cropping, zooming, and color-based segmentation do not work well for this kind of application in dynamic conditions, and their performance suffers with increasing distance and environmental noise. We propose a novel approach that leverages hand landmark drawing and classification for gesture-recognition-based UAV control. With experimental results we show that our proposed method outperforms the other existing methods in terms of accuracy, noise resilience, and efficacy across varying distances, thus providing robust control decisions. However, implementing deep-learning-based, compute-intensive gesture recognition algorithms on the UAV's onboard computer is significantly challenging in terms of performance. Hence, we propose an edge-computing-based framework to offload the heavier computing tasks, thus achieving closed-loop real-time performance. With implementations on the AirSim simulator as well as on a real-world UAV, we showcase the advantage of our end-to-end gesture-recognition-based UAV control system.

A Deep Single Image Rectification Approach for Pan-Tilt-Zoom Cameras

Apr 09, 2025

Pan-Tilt-Zoom (PTZ) cameras with wide-angle lenses are widely used in surveillance but often require image rectification due to their inherent nonlinear distortions. Current deep learning approaches typically struggle to maintain fine-grained geometric details, resulting in inaccurate rectification. This paper presents a Forward Distortion and Backward Warping Network (FDBW-Net), a novel framework for wide-angle image rectification. It begins by using a forward distortion model to synthesize barrel-distorted images, reducing pixel redundancy and preventing blur. The network employs a pyramid context encoder with attention mechanisms to generate backward warping flows containing geometric details. Then, a multi-scale decoder is used to restore distorted features and output rectified images. FDBW-Net's performance is validated on diverse datasets: public benchmarks, AirSim-rendered PTZ camera imagery, and real-scene PTZ camera datasets. It demonstrates that FDBW-Net achieves SOTA performance in distortion rectification, boosting the adaptability of PTZ cameras for practical visual applications.
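The abstract mentions a forward distortion model for synthesizing barrel-distorted images but does not give its form. As a rough illustration, the sketch below uses a one-parameter polynomial radial model, a common textbook choice, in which a negative coefficient pulls points toward the image centre and produces barrel distortion. The function name and the coefficient `k1` are assumptions for this example, not the paper's formulation.

```python
from typing import Tuple


def distort_point(x: float, y: float, k1: float) -> Tuple[float, float]:
    """Map an undistorted point to its radially distorted position.

    Assumed model (not from the paper): coordinates are normalised so
    the image centre is (0, 0), and the distorted radius is
    r_d = r_u * (1 + k1 * r_u**2). A negative k1 gives barrel distortion.
    """
    r2 = x * x + y * y          # squared undistorted radius
    scale = 1.0 + k1 * r2       # radial scaling factor
    return x * scale, y * scale


if __name__ == "__main__":
    k1 = -0.2  # hypothetical barrel-distortion strength
    for point in [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (1.0, 1.0)]:
        print(point, "->", distort_point(*point, k1))
```

Under this model the centre is left unchanged and points farther from it are compressed more strongly, which is the characteristic bulging look of wide-angle lenses.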

Griffin: Aerial-Ground Cooperative Detection and Tracking Dataset and Benchmark

Mar 10, 2025

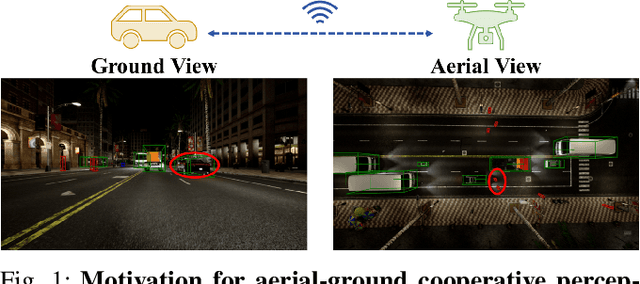

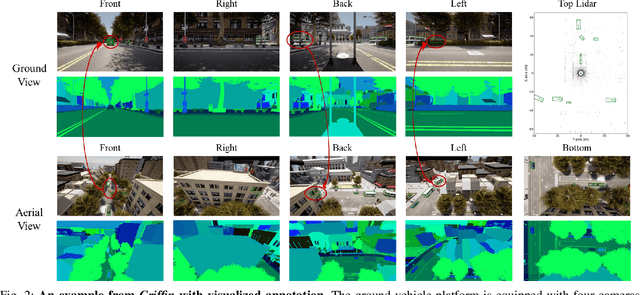

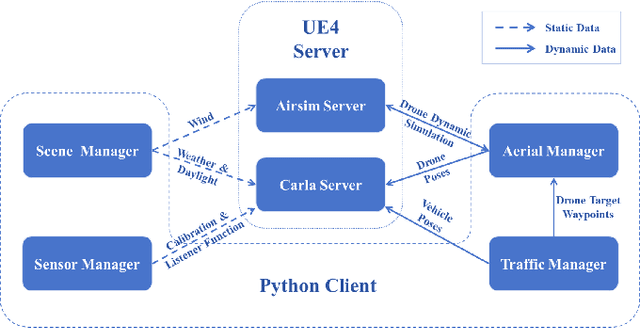

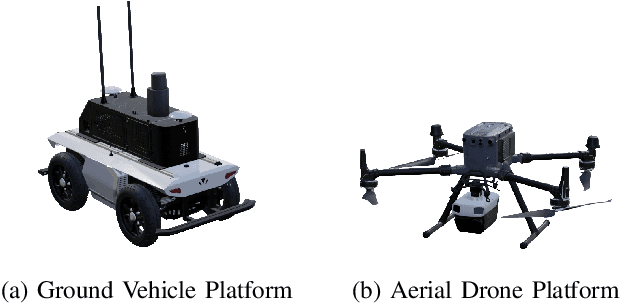

Despite significant advancements, autonomous driving systems continue to struggle with occluded objects and long-range detection due to the inherent limitations of single-perspective sensing. Aerial-ground cooperation offers a promising solution by integrating UAVs' aerial views with ground vehicles' local observations. However, progress in this emerging field has been hindered by the absence of public datasets and standardized evaluation benchmarks. To address this gap, this paper presents a comprehensive solution for aerial-ground cooperative 3D perception through three key contributions: (1) Griffin, a large-scale multi-modal dataset featuring over 200 dynamic scenes (30k+ frames) with varied UAV altitudes (20-60m), diverse weather conditions, and occlusion-aware 3D annotations, enhanced by CARLA-AirSim co-simulation for realistic UAV dynamics; (2) A unified benchmarking framework for aerial-ground cooperative detection and tracking tasks, including protocols for evaluating communication efficiency, latency tolerance, and altitude adaptability; (3) AGILE, an instance-level intermediate fusion baseline that dynamically aligns cross-view features through query-based interaction, achieving an advantageous balance between communication overhead and perception accuracy. Extensive experiments prove the effectiveness of aerial-ground cooperative perception and demonstrate the direction of further research. The dataset and codes are available at https://github.com/wang-jh18-SVM/Griffin.

SynthSoM: A synthetic intelligent multi-modal sensing-communication dataset for Synesthesia of Machines (SoM)

Jan 13, 2025

Given the importance of datasets for sensing-communication integration research, a novel simulation platform for constructing communication and multi-modal sensory datasets is developed. The developed platform integrates three high-precision software tools, i.e., AirSim, WaveFarer, and Wireless InSite, and further achieves in-depth integration and precise alignment of them. Based on the developed platform, a new synthetic intelligent multi-modal sensing-communication dataset for Synesthesia of Machines (SoM), named SynthSoM, is proposed. The SynthSoM dataset contains various air-ground multi-link cooperative scenarios with comprehensive conditions, including multiple weather conditions, times of the day, intelligent agent densities, frequency bands, and antenna types. The SynthSoM dataset encompasses multiple data modalities, including radio-frequency (RF) channel large-scale and small-scale fading data, RF millimeter wave (mmWave) radar sensory data, and non-RF sensory data, e.g., RGB images, depth maps, and light detection and ranging (LiDAR) point clouds. The quality of the SynthSoM dataset is validated via statistics-based qualitative inspection and evaluation metrics through machine learning (ML) via real-world measurements. The SynthSoM dataset is open-sourced and provides consistent data for cross-comparing SoM-related algorithms.

Towards Selection and Transition Between Behavior-Based Neural Networks for Automated Driving

Dec 21, 2024

Autonomous driving technology is progressing rapidly, largely due to complex end-to-end systems based on deep neural networks. While these systems are effective, their complexity can make it difficult to understand their behavior, raising safety concerns. This paper presents a new solution, a Behavior Selector, that uses multiple smaller artificial neural networks (ANNs) to manage different driving tasks, such as lane following and turning. Rather than relying on a single large network, which can be burdensome, requires extensive training data, and is hard to understand, the developed approach allows the system to dynamically select the appropriate neural network for each specific behavior (e.g., turns) in real time. We focus on ensuring smooth transitions between behaviors while considering the vehicle's current speed and orientation to improve stability and safety. The proposed system has been tested using the AirSim simulation environment, demonstrating its effectiveness.
