Sabur Baidya

HEART-VIT: Hessian-Guided Efficient Dynamic Attention and Token Pruning in Vision Transformer

Dec 23, 2025

Abstract:Vision Transformers (ViTs) deliver state-of-the-art accuracy, but their quadratic attention cost and redundant computations severely hinder deployment on latency- and resource-constrained platforms. Existing pruning approaches treat either tokens or heads in isolation, relying on heuristics or first-order signals, which often sacrifice accuracy or fail to generalize across inputs. We introduce HEART-ViT, a Hessian-guided efficient dynamic attention and token pruning framework for vision transformers, which to the best of our knowledge is the first unified, second-order, input-adaptive framework for ViT optimization. HEART-ViT estimates curvature-weighted sensitivities of both tokens and attention heads using efficient Hessian-vector products, enabling principled pruning decisions under explicit loss budgets. This dual-view sensitivity reveals an important structural insight: token pruning dominates computational savings, while head pruning provides fine-grained redundancy removal, and their combination achieves a superior trade-off. On ImageNet-100 and ImageNet-1K with ViT-B/16 and DeiT-B/16, HEART-ViT achieves up to 49.4 percent FLOPs reduction, 36 percent lower latency, and 46 percent higher throughput, while consistently matching or even surpassing baseline accuracy after fine-tuning, for example 4.7 percent recovery at 40 percent token pruning. Beyond theoretical benchmarks, we deploy HEART-ViT on different edge devices such as AGX Orin, demonstrating that our reductions in FLOPs and latency translate directly into real-world gains in inference speed and energy efficiency. HEART-ViT bridges the gap between theory and practice, delivering the first unified, curvature-driven pruning framework that is both accuracy-preserving and edge-efficient.

CAMP-HiVe: Cyclic Pair Merging based Efficient DNN Pruning with Hessian-Vector Approximation for Resource-Constrained Systems

Nov 09, 2025

Abstract:Deep learning algorithms are becoming an essential component of many artificial intelligence (AI) driven applications, many of which run on resource-constrained and energy-constrained systems. Although different techniques for compressing neural network models have been proposed for efficient deployment of these algorithms, neural pruning is one of the fastest and most effective methods, providing a high compression gain at minimal cost. To harness enhanced performance gain with respect to model complexity, we propose a novel neural network pruning approach utilizing Hessian-vector products that approximate crucial curvature information in the loss function, which significantly reduces the computation demands. By employing a power iteration method, our algorithm effectively identifies and preserves the essential information, ensuring a balanced trade-off between model accuracy and computational efficiency. Herein, we introduce CAMP-HiVe, a cyclic pair merging-based pruning method with Hessian-vector approximation that iteratively consolidates weight pairs, combining significant and less significant weights, thus effectively streamlining the model while preserving its performance. This dynamic, adaptive framework allows for real-time adjustment of weight significance, ensuring that only the most critical parameters are retained. Our experimental results demonstrate that our proposed method achieves significant reductions in computational requirements while maintaining high performance across different neural network architectures, e.g., ResNet18, ResNet56, and MobileNetV2, on standard benchmark datasets, e.g., CIFAR-10, CIFAR-100, and ImageNet, and it outperforms the existing state-of-the-art neural pruning methods.
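
As a rough illustration of the idea named in the abstract above (power iteration driven by Hessian-vector products), the sketch below estimates the largest curvature of a toy quadratic loss without ever forming the Hessian. It is not the CAMP-HiVe algorithm: the function names, the toy matrix `A`, and the finite-difference Hessian-vector product are assumptions made for illustration (a deep learning framework would normally obtain the product through automatic differentiation).

```python
import math

def grad(w, A):
    """Gradient of the toy quadratic loss f(w) = 0.5 * w^T A w, which is A w."""
    return [sum(a_ij * w_j for a_ij, w_j in zip(row, w)) for row in A]

def hvp(grad_fn, w, v, eps=1e-4):
    """Central-difference Hessian-vector product: (g(w + eps*v) - g(w - eps*v)) / (2*eps)."""
    g_plus = grad_fn([wi + eps * vi for wi, vi in zip(w, v)])
    g_minus = grad_fn([wi - eps * vi for wi, vi in zip(w, v)])
    return [(gp - gm) / (2 * eps) for gp, gm in zip(g_plus, g_minus)]

def top_curvature(grad_fn, w, iters=100):
    """Power iteration that only uses Hessian-vector products.

    Returns the Rayleigh-quotient estimate of the dominant eigenvalue
    and the corresponding unit direction.
    """
    n = len(w)
    v = [1.0 / math.sqrt(n)] * n  # arbitrary unit-length starting direction
    eig = 0.0
    for _ in range(iters):
        hv = hvp(grad_fn, w, v)
        eig = sum(vi * hi for vi, hi in zip(v, hv))  # Rayleigh quotient v^T H v
        norm = math.sqrt(sum(h * h for h in hv))
        if norm == 0.0:
            break  # v lies in the null space of the Hessian; nothing to normalise
        v = [h / norm for h in hv]
    return eig, v

# Hypothetical toy Hessian (symmetric, positive definite) and evaluation point.
A = [[4.0, 1.0, 0.0],
     [1.0, 3.0, 0.0],
     [0.0, 0.0, 1.0]]
w0 = [0.5, -1.0, 2.0]
eig, direction = top_curvature(lambda w: grad(w, A), w0)
```

Each iteration costs two gradient evaluations, which is what makes this kind of curvature estimate affordable compared with building the full Hessian.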

ACCESS-AV: Adaptive Communication-Computation Codesign for Sustainable Autonomous Vehicle Localization in Smart Factories

Jul 27, 2025

Abstract:Autonomous Delivery Vehicles (ADVs) are increasingly used for transporting goods in 5G network-enabled smart factories, with the compute-intensive localization module presenting a significant opportunity for optimization. We propose ACCESS-AV, an energy-efficient Vehicle-to-Infrastructure (V2I) localization framework that leverages existing 5G infrastructure in smart factory environments. By opportunistically accessing the periodically broadcast 5G Synchronization Signal Blocks (SSBs) for localization, ACCESS-AV obviates the need for dedicated Roadside Units (RSUs) or additional onboard sensors to achieve energy efficiency as well as cost reduction. We implement an Angle-of-Arrival (AoA)-based estimation method using the Multiple Signal Classification (MUSIC) algorithm, optimized for resource-constrained ADV platforms through an adaptive communication-computation strategy that dynamically balances energy consumption with localization accuracy based on environmental conditions such as Signal-to-Noise Ratio (SNR) and vehicle velocity. Experimental results demonstrate that ACCESS-AV achieves an average energy reduction of 43.09% compared to non-adaptive systems employing AoA algorithms such as vanilla MUSIC, ESPRIT, and Root-MUSIC. It maintains sub-30 cm localization accuracy while also delivering substantial reductions in infrastructure and operational costs, establishing its viability for sustainable smart factory environments.

Predicting Human Depression with Hybrid Data Acquisition utilizing Physical Activity Sensing and Social Media Feeds

May 28, 2025

Abstract:Mental disorders including depression, anxiety, and other neurological disorders pose a significant global challenge, particularly among individuals exhibiting social avoidance tendencies. This study proposes a hybrid approach by leveraging smartphone sensor data measuring daily physical activities and analyzing their social media (Twitter) interactions for evaluating an individual's depression level. Using CNN-based deep learning models and Naive Bayes classification, we identify human physical activities accurately and also classify the user sentiments. A total of 33 participants were recruited for data acquisition, and nine relevant features were extracted from the physical activities and analyzed with their weekly depression scores, evaluated using the Geriatric Depression Scale (GDS) questionnaire. Of the nine features, six are derived from physical activities, achieving an activity recognition accuracy of 95%, while three features stem from sentiment analysis of Twitter activities, yielding a sentiment analysis accuracy of 95.6%. Notably, several physical activity features exhibited significant correlations with the severity of depression symptoms. For classifying the depression severity, a support vector machine (SVM)-based algorithm is employed that demonstrated a very high accuracy of 94%, outperforming alternative models, e.g., the multilayer perceptron (MLP) and k-nearest neighbor. It is a simple approach yet highly effective in the long run for monitoring depression without breaching personal privacy.

UAV Control with Vision-based Hand Gesture Recognition over Edge-Computing

May 22, 2025

Abstract:Gesture recognition presents a promising avenue for interfacing with unmanned aerial vehicles (UAVs) due to its intuitive nature and potential for precise interaction. This research conducts a comprehensive comparative analysis of vision-based hand gesture detection methodologies tailored for UAV control. Existing gesture recognition approaches involving cropping, zooming, and color-based segmentation do not work well for this kind of application in dynamic conditions, and their performance degrades with increasing distance and environmental noise. We propose a novel approach that leverages hand landmark drawing and classification for gesture recognition-based UAV control. Our experimental results show that the proposed method outperforms the existing methods in terms of accuracy, noise resilience, and efficacy across varying distances, thus providing robust control decisions. However, running deep learning-based, compute-intensive gesture recognition algorithms on the UAV's onboard computer is significantly challenging in terms of performance. Hence, we propose an edge-computing-based framework to offload the heavier computing tasks, thus achieving closed-loop real-time performance. With an implementation on the AirSim simulator as well as on a real-world UAV, we showcase the advantage of our end-to-end gesture recognition-based UAV control system.

A Hierarchical Optimization Framework Using Deep Reinforcement Learning for Task-Driven Bandwidth Allocation in 5G Teleoperation

May 21, 2025

Abstract:The evolution of 5G wireless technology has revolutionized connectivity, enabling a diverse range of applications. Among these are critical use cases such as real-time teleoperation, which demands ultra-reliable low-latency communications (URLLC) to ensure precise and uninterrupted control, and enhanced mobile broadband (eMBB) services, which cater to data-intensive applications requiring high throughput and bandwidth. In our scenario, there are two queues, one for eMBB users and one for URLLC users. In teleoperation tasks, control commands are received in the URLLC queue, where communication delays occur. The dynamic index (DI) controls the service rate, affecting the telerobotic (URLLC) queue. A separate queue models eMBB data traffic. Both queues are managed through network slicing and application delay constraints, leading to a unified Lagrangian-based Lyapunov optimization for efficient resource allocation. We propose a DRL-based hierarchical optimization framework that consists of two levels. At the first level, network optimization dynamically allocates resources for eMBB and URLLC users using a Lagrangian functional and an actor-critic network to balance competing objectives. At the second level, control optimization fine-tunes the best gains for robots, ensuring stability and responsiveness in network conditions. This hierarchical approach enhances both communication and control processes, ensuring efficient resource utilization and optimized performance across the network.

Large Language Models on Small Resource-Constrained Systems: Performance Characterization, Analysis and Trade-offs

Dec 19, 2024

Abstract:Generative AI, such as Large Language Models (LLMs), has become more available to the general consumer in recent years. Publicly available services, e.g., ChatGPT, perform token generation on networked cloud server hardware, effectively removing the hardware entry cost for end users. However, the reliance on network access for these services, the privacy and security risks involved, and sometimes the needs of the application make it necessary to run LLMs locally on edge devices. A significant amount of research has been done on optimization of LLMs and other transformer-based models on non-networked, resource-constrained devices, but it typically targets older hardware. Our research intends to provide a 'baseline' characterization of more recent commercially available embedded hardware for LLMs, and to provide a simple utility to facilitate batch testing LLMs on recent Jetson hardware. We focus on the latest line of NVIDIA Jetson devices (Jetson Orin), and a set of publicly available LLMs (Pythia) ranging between 70 million and 1.4 billion parameters. Through detailed experimental evaluation with varying software and hardware parameters, we showcase trade-off spaces and optimization choices. Additionally, we design our testing structure to facilitate further research that involves performing batch LLM testing on Jetson hardware.

Control Barrier Function Based UAV Safety Controller in Autonomous Airborne Tracking and Following Systems

Dec 28, 2023

Abstract:Safe operations of UAVs are of paramount importance for various mission-critical and safety-critical UAV applications. In the context of airborne target tracking and following, UAVs need to track a flying target while avoiding collision and also closely following its trajectory. The safety situation becomes critical and more complex when the flying target is non-cooperative and has erratic movements. This paper proposes a method for collision avoidance in an autonomous, fast-moving quadrotor UAV tracking and following another target UAV. This is achieved by designing a safety controller that minimally modifies the control input from a trajectory tracking controller and guarantees safety. This method enables pairing our proposed safety controller with already existing flight controllers. Our safety controller uses a control barrier function based quadratic program (CBF-QP) to produce an optimal control input that enables safe operation while also following the trajectory of the target closely. We implement our solution on the AirSim simulator with the PX4 flight controller and validate our approach with numerical results from several simulation experiments covering multiple scenarios and trajectories.
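
To make the "minimally modifies the control input" idea concrete, here is a minimal sketch of a CBF-QP safety filter under strong simplifying assumptions that are not taken from the paper: single-integrator dynamics (velocity is the control input), a single static spherical keep-out region, and the barrier h(x) = ||x - x_obs||^2 - radius^2. With only one linear constraint, the quadratic program has a closed-form solution, so no solver is needed. The function name and parameters are hypothetical.

```python
def cbf_safety_filter(u_nom, x, x_obs, radius, alpha=1.0):
    """Return the control closest to u_nom that satisfies one CBF constraint.

    Assumed model (illustrative only): x_dot = u, barrier
    h(x) = ||x - x_obs||^2 - radius^2, and the QP
        minimise ||u - u_nom||^2  subject to  grad_h(x) . u >= -alpha * h(x).
    """
    diff = [xi - oi for xi, oi in zip(x, x_obs)]
    h = sum(d * d for d in diff) - radius ** 2       # barrier value (>= 0 means safe)
    a = [2.0 * d for d in diff]                      # gradient of h with respect to x
    b = -alpha * h                                   # right-hand side of the constraint
    a_dot_u = sum(ai * ui for ai, ui in zip(a, u_nom))
    a_sq = sum(ai * ai for ai in a)
    if a_dot_u >= b or a_sq == 0.0:
        # Nominal input already satisfies the constraint (or the gradient
        # vanishes and no correction direction exists): leave it unchanged.
        return list(u_nom)
    lam = (b - a_dot_u) / a_sq                       # smallest correction along grad_h
    return [ui + lam * ai for ui, ai in zip(u_nom, a)]

# Hypothetical scenario: vehicle at (2, 0) commanded straight at an obstacle at the origin.
u_safe = cbf_safety_filter(u_nom=[-5.0, 0.0], x=[2.0, 0.0], x_obs=[0.0, 0.0], radius=1.0)
# A command pointing away from the obstacle passes through untouched.
u_free = cbf_safety_filter(u_nom=[1.0, 0.0], x=[2.0, 0.0], x_obs=[0.0, 0.0], radius=1.0)
```

In the first call the approach speed is reduced just enough to meet the constraint with equality; a moving target or quadrotor dynamics, as in the abstract above, would add further terms to the constraint that this sketch omits.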

3DS-SLAM: A 3D Object Detection based Semantic SLAM towards Dynamic Indoor Environments

Oct 10, 2023

Abstract:The existence of variable factors within the environment can cause a decline in camera localization accuracy, as it violates the fundamental assumption of a static environment in Simultaneous Localization and Mapping (SLAM) algorithms. Recent semantic SLAM systems towards dynamic environments either rely solely on 2D semantic information, or solely on geometric information, or combine their results in a loosely integrated manner. In this research paper, we introduce 3DS-SLAM, 3D Semantic SLAM, tailored for dynamic scenes with visual 3D object detection. The 3DS-SLAM is a tightly-coupled algorithm resolving both semantic and geometric constraints sequentially. We designed a 3D part-aware hybrid transformer for point cloud-based object detection to identify dynamic objects. Subsequently, we propose a dynamic feature filter based on HDBSCAN clustering to extract objects with significant absolute depth differences. When compared against ORB-SLAM2, 3DS-SLAM exhibits an average improvement of 98.01% across the dynamic sequences of the TUM RGB-D dataset. Furthermore, it surpasses the performance of the other four leading SLAM systems designed for dynamic environments.

Digital Twin in Safety-Critical Robotics Applications: Opportunities and Challenges

Sep 26, 2022

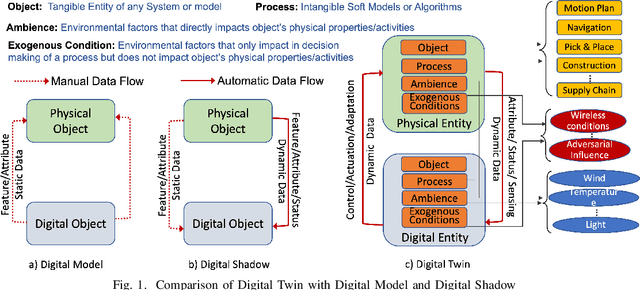

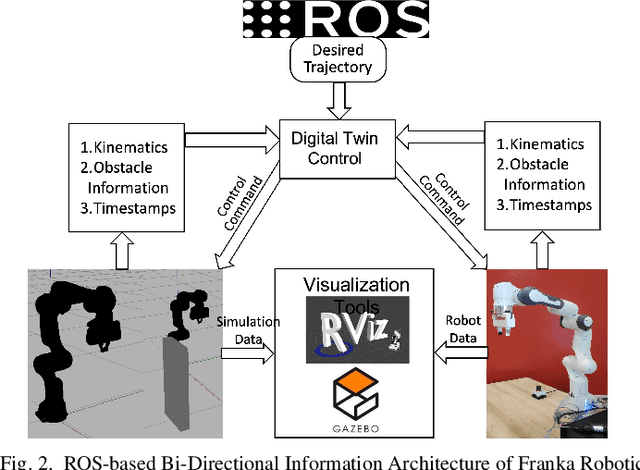

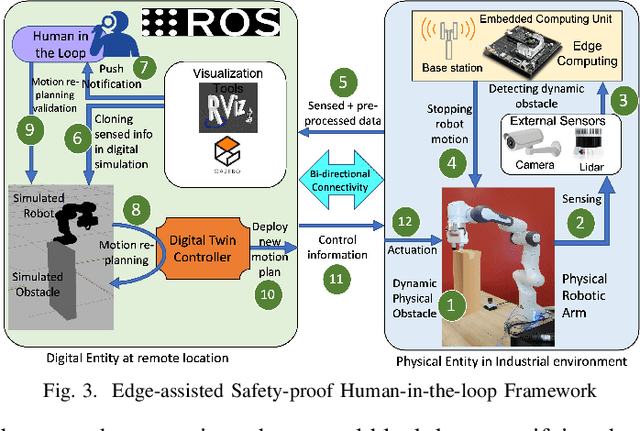

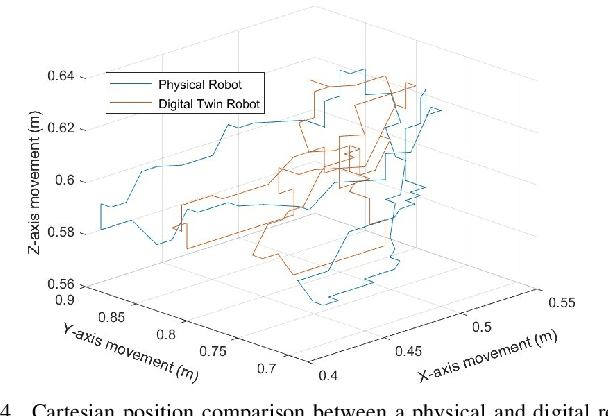

Abstract:Digital Twin technology is envisioned to be an integral part of the industrial evolution of the modern generation. With the rapid advancement of Internet-of-Things (IoT) technology and the increasing trend of automation, integration between the virtual and the physical world is now realizable to produce practical digital twins. However, the existing definitions of digital twin are incomplete and sometimes ambiguous. Herein, we conduct a historical review and analyze the modern generic view of digital twin to create a new, extended definition. We also review and discuss the existing work on digital twins in safety-critical robotics applications. In particular, the use of digital twins in industrial applications necessitates autonomous and remote operations due to environmental challenges. However, the uncertainties in the environment may require close monitoring and quick adaptation of the robots, which need to be safety-proof and cost-effective. We demonstrate a case study on developing a framework for safety-critical robotic arm applications, present the system performance to show its advantages, and discuss the challenges and scope ahead.
