Zixuan Fu

CPMobius: Iterative Coach-Player Reasoning for Data-Free Reinforcement Learning

Feb 03, 2026

Abstract:Large Language Models (LLMs) have demonstrated strong potential in complex reasoning, yet their progress remains fundamentally constrained by reliance on massive high-quality human-curated tasks and labels, either through supervised fine-tuning (SFT) or reinforcement learning (RL) on reasoning-specific data. This dependence renders supervision-heavy training paradigms increasingly unsustainable, with signs of diminishing scalability already evident in practice. To overcome this limitation, we introduce CPMöbius (CPMobius), a collaborative Coach-Player paradigm for data-free reinforcement learning of reasoning models. Unlike traditional adversarial self-play, CPMöbius, inspired by real-world human sports collaboration and multi-agent collaboration, treats the Coach and Player as independent but cooperative roles. The Coach proposes instructions targeted at the Player's capability and receives rewards based on changes in the Player's performance, while the Player is rewarded for solving the increasingly instructive tasks generated by the Coach. This cooperative optimization loop is designed to directly enhance the Player's mathematical reasoning ability. Remarkably, CPMöbius achieves substantial improvement without relying on any external training data, outperforming existing unsupervised approaches. For example, on Qwen2.5-Math-7B-Instruct, our method improves accuracy by an overall average of +4.9 and an out-of-distribution average of +5.4, exceeding RENT by +1.5 on overall accuracy and R-zero by +4.2 on OOD accuracy.

Improving Flexible Image Tokenizers for Autoregressive Image Generation

Jan 04, 2026

Abstract:Flexible image tokenizers aim to represent an image using an ordered 1D variable-length token sequence. This flexible tokenization is typically achieved through nested dropout, where a portion of trailing tokens is randomly truncated during training, and the image is reconstructed using the remaining preceding sequence. However, this tail-truncation strategy inherently concentrates the image information in the early tokens, limiting the effectiveness of downstream AutoRegressive (AR) image generation as the token length increases. To overcome these limitations, we propose ReTok, a flexible tokenizer with Redundant Token Padding and Hierarchical Semantic Regularization, designed to fully exploit all tokens for enhanced latent modeling. Specifically, we introduce Redundant Token Padding to activate tail tokens more frequently, thereby alleviating information over-concentration in the early tokens. In addition, we apply Hierarchical Semantic Regularization to align the decoding features of earlier tokens with those from a pre-trained vision foundation model, while progressively reducing the regularization strength toward the tail to allow finer low-level detail reconstruction. Extensive experiments demonstrate the effectiveness of ReTok: on ImageNet 256×256, our method achieves superior generation performance compared with both flexible and fixed-length tokenizers. Code will be available at: https://github.com/zfu006/ReTok
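The tail-truncation behaviour described in the abstract above can be illustrated with a small sketch. This is not the ReTok implementation: the function names (`sample_keep_length`, `tail_truncate`, `usage_counts`) are hypothetical, and the uniform choice of the kept length is an assumption made only for illustration. The sketch counts how often each token position survives truncation, which shows why early positions see far more training signal than tail positions.

```python
import random


def sample_keep_length(max_len, rng):
    """Pick how many leading tokens survive (uniform over 1..max_len, an assumption)."""
    return rng.randint(1, max_len)


def tail_truncate(tokens, keep_len):
    """Nested-dropout style truncation: keep the first keep_len tokens, drop the tail."""
    if not 1 <= keep_len <= len(tokens):
        raise ValueError("keep_len must be in 1..len(tokens)")
    return tokens[:keep_len]


def usage_counts(max_len, steps, seed=0):
    """Count how often each token position is kept across simulated training steps."""
    rng = random.Random(seed)
    counts = [0] * max_len
    for _ in range(steps):
        keep_len = sample_keep_length(max_len, rng)
        for position in range(keep_len):
            counts[position] += 1
    return counts


counts = usage_counts(max_len=8, steps=1000)
print(counts)  # position 0 is kept at every step; later positions are kept less often
```

Under this assumed sampling scheme, the first position is kept at every step while the last is kept only when the full length is drawn, which is the imbalance the abstract attributes to tail truncation.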

FADE: Adversarial Concept Erasure in Flow Models

Jul 16, 2025

Abstract:Diffusion models have demonstrated remarkable image generation capabilities, but also pose risks in privacy and fairness by memorizing sensitive concepts or perpetuating biases. We propose a novel concept erasure method for text-to-image diffusion models, designed to remove specified concepts (e.g., a private individual or a harmful stereotype) from the model's generative repertoire. Our method, termed FADE (Fair Adversarial Diffusion Erasure), combines a trajectory-aware fine-tuning strategy with an adversarial objective to ensure the concept is reliably removed while preserving overall model fidelity. Theoretically, we prove a formal guarantee that our approach minimizes the mutual information between the erased concept and the model's outputs, ensuring privacy and fairness. Empirically, we evaluate FADE on Stable Diffusion and FLUX, using benchmarks from prior work (e.g., object, celebrity, explicit content, and style erasure tasks from MACE). FADE achieves state-of-the-art concept removal performance, surpassing recent baselines like ESD, UCE, MACE, and ANT in terms of removal efficacy and image quality. Notably, FADE improves the harmonic mean of concept removal and fidelity by 5-10% over the best prior method. We also conduct an ablation study to validate each component of FADE, confirming that our adversarial and trajectory-preserving objectives each contribute to its superior performance. Our work sets a new standard for safe and fair generative modeling by unlearning specified concepts without retraining from scratch.
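The abstract above reports gains in the harmonic mean of concept removal and fidelity. As a reminder of how that summary behaves, here is a minimal sketch of the standard two-value harmonic mean. The function name and the example scores are hypothetical, and the abstract does not specify how removal and fidelity are measured or scaled, so both inputs are assumed to be non-negative scores on a common scale.

```python
def harmonic_mean(removal, fidelity):
    """Standard harmonic mean of two non-negative scores (assumed to share a scale)."""
    if removal < 0 or fidelity < 0:
        raise ValueError("scores must be non-negative")
    if removal + fidelity == 0:
        return 0.0  # both scores are zero, so the combined score is zero
    return 2 * removal * fidelity / (removal + fidelity)


# Hypothetical scores, not results from the paper.
balanced = harmonic_mean(0.75, 0.75)
lopsided = harmonic_mean(0.9, 0.6)
print(balanced, lopsided)
```

Both hypothetical pairs have the same arithmetic mean, but the lopsided pair scores lower, so a method cannot raise this metric by trading fidelity away for removal.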

MiniCPM4: Ultra-Efficient LLMs on End Devices

Jun 09, 2025

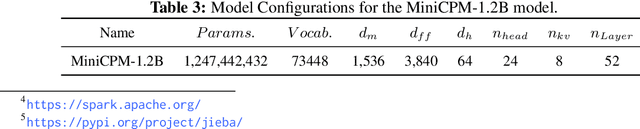

Abstract:This paper introduces MiniCPM4, a highly efficient large language model (LLM) designed explicitly for end-side devices. We achieve this efficiency through systematic innovation in four key dimensions: model architecture, training data, training algorithms, and inference systems. Specifically, in terms of model architecture, we propose InfLLM v2, a trainable sparse attention mechanism that accelerates both prefilling and decoding phases for long-context processing. Regarding training data, we propose UltraClean, an efficient and accurate pre-training data filtering and generation strategy, and UltraChat v2, a comprehensive supervised fine-tuning dataset. These datasets enable satisfactory model performance to be achieved using just 8 trillion training tokens. Regarding training algorithms, we propose ModelTunnel v2 for efficient pre-training strategy search, and improve existing post-training methods by introducing chunk-wise rollout for load-balanced reinforcement learning and the data-efficient ternary LLM BitCPM. Regarding inference systems, we propose CPM.cu, which integrates sparse attention, model quantization, and speculative sampling to achieve efficient prefilling and decoding. To meet diverse on-device requirements, MiniCPM4 is available in two versions, with 0.5B and 8B parameters, respectively. Extensive evaluation results show that MiniCPM4 outperforms open-source models of similar size across multiple benchmarks, highlighting both its efficiency and effectiveness. Notably, MiniCPM4-8B demonstrates significant speed improvements over Qwen3-8B when processing long sequences. Through further adaptation, MiniCPM4 successfully powers diverse applications, including trustworthy survey generation and tool use with model context protocol, clearly showcasing its broad usability.

Ultra-FineWeb: Efficient Data Filtering and Verification for High-Quality LLM Training Data

May 08, 2025

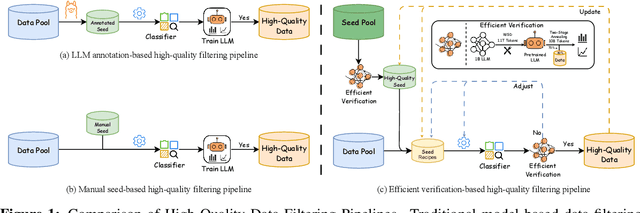

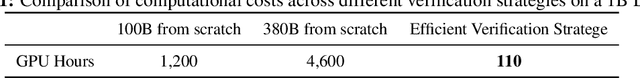

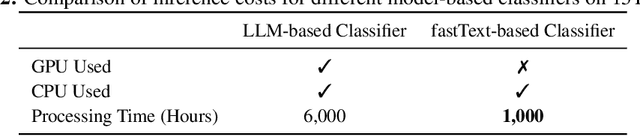

Abstract:Data quality has become a key factor in enhancing model performance with the rapid development of large language models (LLMs). Model-driven data filtering has increasingly become a primary approach for acquiring high-quality data. However, it still faces two main challenges: (1) the lack of an efficient data verification strategy makes it difficult to provide timely feedback on data quality; and (2) the selection of seed data for training classifiers lacks clear criteria and relies heavily on human expertise, introducing a degree of subjectivity. To address the first challenge, we introduce an efficient verification strategy that enables rapid evaluation of the impact of data on LLM training with minimal computational cost. To tackle the second challenge, we build upon the assumption that high-quality seed data is beneficial for LLM training, and by integrating the proposed verification strategy, we optimize the selection of positive and negative samples and propose an efficient data filtering pipeline. This pipeline not only improves filtering efficiency, classifier quality, and robustness, but also significantly reduces experimental and inference costs. In addition, to efficiently filter high-quality data, we employ a lightweight classifier based on fastText, and successfully apply the filtering pipeline to two widely-used pre-training corpora, FineWeb and Chinese FineWeb datasets, resulting in the creation of the higher-quality Ultra-FineWeb dataset. Ultra-FineWeb contains approximately 1 trillion English tokens and 120 billion Chinese tokens. Empirical results demonstrate that the LLMs trained on Ultra-FineWeb exhibit significant performance improvements across multiple benchmark tasks, validating the effectiveness of our pipeline in enhancing both data quality and training efficiency.

AIR: A Systematic Analysis of Annotations, Instructions, and Response Pairs in Preference Dataset

Apr 04, 2025

Abstract:Preference learning is critical for aligning large language models (LLMs) with human values, yet its success hinges on high-quality datasets comprising three core components: Preference Annotations, Instructions, and Response Pairs. Current approaches conflate these components, obscuring their individual impacts and hindering systematic optimization. In this work, we propose AIR, a component-wise analysis framework that systematically isolates and optimizes each component while evaluating their synergistic effects. Through rigorous experimentation, AIR reveals actionable principles: annotation simplicity (point-wise generative scoring), instruction inference stability (variance-based filtering across LLMs), and response pair quality (moderate margins + high absolute scores). When combined, these principles yield +5.3 average gains over the baseline method, even with only 14k high-quality pairs. Our work shifts preference dataset design from ad hoc scaling to component-aware optimization, offering a blueprint for efficient, reproducible alignment.
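Two of the principles named in the abstract above, variance-based instruction filtering and response-pair selection by margin and absolute score, can be sketched as simple filters. This is an illustration under stated assumptions, not the AIR pipeline: the function names, thresholds, and scores are hypothetical; "stability" is assumed to mean keeping instructions whose scores vary little across LLMs; and "high absolute scores" is assumed to apply to the chosen response.

```python
from statistics import pvariance


def filter_stable_instructions(scores_by_instruction, max_variance):
    """Keep instructions whose per-LLM scores have low variance (assumed direction)."""
    return [
        instruction
        for instruction, scores in scores_by_instruction.items()
        if pvariance(scores) <= max_variance
    ]


def select_pairs(candidates, min_margin, max_margin, min_score):
    """Keep (chosen, rejected) score pairs with a moderate margin and a high chosen score."""
    kept = []
    for chosen, rejected in candidates:
        margin = chosen - rejected
        if min_margin <= margin <= max_margin and chosen >= min_score:
            kept.append((chosen, rejected))
    return kept


# Hypothetical scores: each instruction is scored on responses from three LLMs.
scores = {"a": [7, 7, 8], "b": [2, 9, 5]}
stable = filter_stable_instructions(scores, max_variance=1.0)

# Hypothetical (chosen_score, rejected_score) pairs.
pairs = select_pairs([(9, 7), (9, 2), (5, 3)], min_margin=1, max_margin=3, min_score=8)
print(stable, pairs)
```

In this toy data, instruction "b" is dropped for its spread of scores, the pair (9, 2) is dropped for an overly large margin, and (5, 3) is dropped for a low chosen score.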

Reconciling Stochastic and Deterministic Strategies for Zero-shot Image Restoration using Diffusion Model in Dual

Mar 03, 2025

Abstract:Plug-and-play (PnP) methods offer an iterative strategy for solving image restoration (IR) problems in a zero-shot manner, using a learned discriminative denoiser as the implicit prior. More recently, a sampling-based variant of this approach, which utilizes a pre-trained generative diffusion model, has gained great popularity for solving IR problems through stochastic sampling. The IR results using PnP with a pre-trained diffusion model demonstrate distinct advantages compared to those using discriminative denoisers, i.e., improved perceptual quality while sacrificing data fidelity. The unsatisfactory results are due to the lack of integration of these strategies in the IR tasks. In this work, we propose a novel zero-shot IR scheme, dubbed Reconciling Diffusion Model in Dual (RDMD), which leverages only a single pre-trained diffusion model to construct two complementary regularizers. Specifically, the diffusion model in RDMD will iteratively perform deterministic denoising and stochastic sampling, aiming to achieve high-fidelity image restoration with appealing perceptual quality. RDMD also allows users to customize the distortion-perception tradeoff with a single hyperparameter, enhancing the adaptability of the restoration process in different practical scenarios. Extensive experiments on several IR tasks demonstrate that our proposed method could achieve superior results compared to existing approaches on both the FFHQ and ImageNet datasets.

Temporal As a Plugin: Unsupervised Video Denoising with Pre-Trained Image Denoisers

Sep 17, 2024

Abstract:Recent advancements in deep learning have shown impressive results in image and video denoising, leveraging extensive pairs of noisy and noise-free data for supervision. However, the challenge of acquiring paired videos for dynamic scenes hampers the practical deployment of deep video denoising techniques. In contrast, this obstacle is less pronounced in image denoising, where paired data is more readily available. Thus, a well-trained image denoiser could serve as a reliable spatial prior for video denoising. In this paper, we propose a novel unsupervised video denoising framework, named "Temporal As a Plugin" (TAP), which integrates tunable temporal modules into a pre-trained image denoiser. By incorporating temporal modules, our method can harness temporal information across noisy frames, complementing its power of spatial denoising. Furthermore, we introduce a progressive fine-tuning strategy that refines each temporal module using the generated pseudo clean video frames, progressively enhancing the network's denoising performance. Compared to other unsupervised video denoising methods, our framework demonstrates superior performance on both sRGB and raw video denoising datasets.
