Xudong Wang

GHS-TDA: A Synergistic Reasoning Framework Integrating Global Hypothesis Space with Topological Data Analysis

Feb 10, 2026

Abstract:Chain-of-Thought (CoT) has been shown to significantly improve the reasoning accuracy of large language models (LLMs) on complex tasks. However, due to the autoregressive, step-by-step generation paradigm, existing CoT methods suffer from two fundamental limitations. First, the reasoning process is highly sensitive to early decisions: once an initial error is introduced, it tends to propagate and amplify through subsequent steps, while the lack of a global coordination and revision mechanism makes such errors difficult to correct, ultimately leading to distorted reasoning chains. Second, current CoT approaches lack structured analysis techniques for filtering redundant reasoning and extracting key reasoning features, resulting in unstable reasoning processes and limited interpretability. To address these issues, we propose GHS-TDA. GHS-TDA first constructs a semantically enriched global hypothesis graph to aggregate, align, and coordinate multiple candidate reasoning paths, thereby providing alternative global correction routes when local reasoning fails. It then applies topological data analysis based on persistent homology to capture stable multi-scale structures, remove redundancy and inconsistencies, and extract a more reliable reasoning skeleton. By jointly leveraging reasoning diversity and topological stability, GHS-TDA achieves self-adaptive convergence, produces high-confidence and interpretable reasoning paths, and consistently outperforms strong baselines in terms of both accuracy and robustness across multiple reasoning benchmarks.

GRASP: Guided Region-Aware Sparse Prompting for Adapting MLLMs to Remote Sensing

Jan 23, 2026

Abstract:In recent years, Multimodal Large Language Models (MLLMs) have made significant progress in visual question answering tasks. However, directly applying existing fine-tuning methods to remote sensing (RS) images often leads to issues such as overfitting on background noise or neglecting target details. This is primarily due to the large-scale variations, sparse target distributions, and complex regional semantic features inherent in RS images. These challenges limit the effectiveness of MLLMs in RS tasks. To address these challenges, we propose a parameter-efficient fine-tuning (PEFT) strategy called Guided Region-Aware Sparse Prompting (GRASP). GRASP introduces spatially structured soft prompts associated with spatial blocks extracted from a frozen visual token grid. Through a question-guided sparse fusion mechanism, GRASP dynamically aggregates task-specific context into a compact global prompt, enabling the model to focus on relevant regions while filtering out background noise. Extensive experiments on multiple RSVQA benchmarks show that GRASP achieves competitive performance compared to existing fine-tuning and prompt-based methods while maintaining high parameter efficiency.

SeqWalker: Sequential-Horizon Vision-and-Language Navigation with Hierarchical Planning

Jan 08, 2026

Abstract:Sequential-Horizon Vision-and-Language Navigation (SH-VLN) presents a challenging scenario in which agents must sequentially execute multi-task navigation guided by complex, long-horizon language instructions. Current vision-and-language navigation models exhibit significant performance degradation with such multi-task instructions, as information overload impairs the agent's ability to attend to observationally relevant details. To address this problem, we propose SeqWalker, a navigation model built on a hierarchical planning framework. SeqWalker features: i) a High-Level Planner that dynamically selects contextually relevant sub-instructions from the global instructions based on the agent's current visual observations, thus reducing cognitive load; ii) a Low-Level Planner incorporating an Exploration-Verification strategy that leverages the inherent logical structure of instructions for trajectory error correction. To evaluate SH-VLN performance, we also extend the IVLN dataset and establish a new benchmark. Extensive experiments demonstrate the superiority of the proposed SeqWalker.

Fast SAM2 with Text-Driven Token Pruning

Dec 24, 2025

Abstract:Segment Anything Model 2 (SAM2), a vision foundation model, has significantly advanced prompt-driven video object segmentation, yet its practical deployment remains limited by the high computational and memory cost of processing dense visual tokens across time. SAM2 pipelines typically propagate all visual tokens produced by the image encoder through downstream temporal reasoning modules, regardless of their relevance to the target object, resulting in reduced scalability due to quadratic memory-attention overhead. In this work, we introduce a text-guided token pruning framework that improves inference efficiency by selectively reducing token density prior to temporal propagation, without modifying the underlying segmentation architecture. Operating after visual encoding and before memory-based propagation, our method ranks tokens using a lightweight routing mechanism that integrates local visual context, semantic relevance derived from object-centric textual descriptions (either user-provided or automatically generated), and uncertainty cues that help preserve ambiguous or boundary-critical regions. By retaining only the most informative tokens for downstream processing, the proposed approach reduces redundant computation while maintaining segmentation fidelity. Extensive experiments across multiple challenging video segmentation benchmarks demonstrate that post-encoder token pruning provides a practical and effective pathway to efficient, prompt-aware video segmentation, achieving up to 42.50 percent faster inference and 37.41 percent lower GPU memory usage compared to the unpruned baseline SAM2, while preserving competitive J and F performance. These results highlight the potential of early token selection to improve the scalability of transformer-based video segmentation systems for real-time and resource-constrained applications.
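
The ranking-and-retention step described in this abstract can be illustrated with a minimal sketch. It is not the authors' routing mechanism: the function name `prune_tokens`, the weighted-sum fusion, the `weights` tuple, and `keep_ratio` are all hypothetical, and the three per-token scores are assumed to be precomputed numbers.

```python
import math


def prune_tokens(visual, semantic, uncertainty, keep_ratio=0.5,
                 weights=(1.0, 1.0, 1.0)):
    """Return the indices of tokens to keep, in their original order.

    Assumption: each token already has three scalar scores (local visual
    context, text relevance, uncertainty). They are fused here with a plain
    weighted sum, which is an illustrative choice only.
    """
    if not (len(visual) == len(semantic) == len(uncertainty)):
        raise ValueError("all score lists must have the same length")
    w_v, w_s, w_u = weights
    scores = [w_v * v + w_s * s + w_u * u
              for v, s, u in zip(visual, semantic, uncertainty)]
    # Keep at least one token, and round the budget up.
    k = max(1, math.ceil(keep_ratio * len(scores)))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    # Restore positional order so downstream modules see a consistent layout.
    return sorted(ranked[:k])


kept = prune_tokens(
    visual=[0.1, 0.9, 0.2, 0.8],
    semantic=[0.0, 1.0, 0.1, 0.2],
    uncertainty=[0.0, 0.0, 0.9, 0.0],
    keep_ratio=0.5,
)
print(kept)
```

In this toy input the third token has weak visual and semantic scores but a high uncertainty score, so it survives pruning, which mirrors the abstract's point about preserving ambiguous regions.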

Understanding Chain-of-Thought in Large Language Models via Topological Data Analysis

Dec 22, 2025

Abstract:With the development of large language models (LLMs), particularly with the introduction of the long reasoning chain technique, the reasoning ability of LLMs in complex problem-solving has been significantly enhanced. While acknowledging the power of long reasoning chains, we cannot help but wonder: Why do different reasoning chains perform differently in reasoning? What components of the reasoning chains play a key role? Existing studies mainly focus on evaluating reasoning chains from a functional perspective, with little attention paid to their structural mechanisms. To address this gap, this work is the first to analyze and evaluate the quality of the reasoning chain from a structural perspective. We apply persistent homology from Topological Data Analysis (TDA) to map reasoning steps into semantic space, extract topological features, and analyze structural changes. These changes reveal semantic coherence and logical redundancy, and identify logical breaks and gaps. By calculating homology groups, we assess connectivity and redundancy at various scales, using barcode and persistence diagrams to quantify stability and consistency. Our results show that the topological structural complexity of reasoning chains correlates positively with accuracy. More complex chains identify correct answers sooner, while successful reasoning exhibits simpler topologies, reducing redundancy and cycles, enhancing efficiency and interpretability. This work provides a new perspective on reasoning chain quality assessment and offers guidance for future optimization.
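
As a small illustration of the barcode idea mentioned in this abstract, the sketch below computes the 0-dimensional persistence barcode (connected components) of a point cloud under a Vietoris-Rips filtration. It relies on the standard fact that the finite H0 death times equal the edge lengths of a minimum spanning tree. The points stand in for embedded reasoning steps, which is an assumption; the function name `h0_barcode` is hypothetical, and higher-dimensional features such as cycles are not covered.

```python
import math
from itertools import combinations


def h0_barcode(points):
    """Return H0 persistence bars (birth, death) for a point cloud.

    Every component is born at scale 0. Two components merge, and one bar
    dies, when the shortest edge joining them enters the filtration, so the
    finite deaths are found with Kruskal's algorithm and a union-find.
    """
    n = len(points)
    parent = list(range(n))

    def find(i):
        # Path halving keeps the union-find trees shallow.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(n), 2)
    )
    bars = []
    for length, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:
            parent[root_i] = root_j
            bars.append((0.0, length))
    if n:
        # One component never dies.
        bars.append((0.0, math.inf))
    return bars


# Hypothetical 2-D "embeddings": two nearby steps and one distant step.
bars = h0_barcode([(0.0, 0.0), (1.0, 0.0), (10.0, 0.0)])
print(bars)
```

A long finite bar, such as the one ending at 9.0 here, marks a point that stays isolated over a wide range of scales, which is the kind of signal one might read as a gap between reasoning steps.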

Every Step Evolves: Scaling Reinforcement Learning for Trillion-Scale Thinking Model

Oct 21, 2025

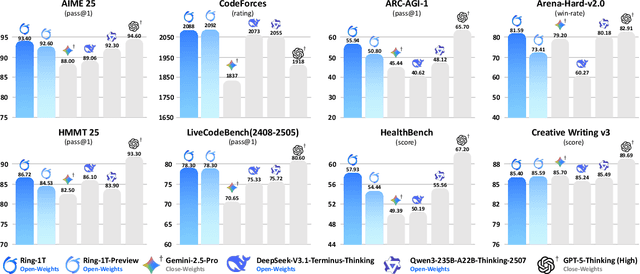

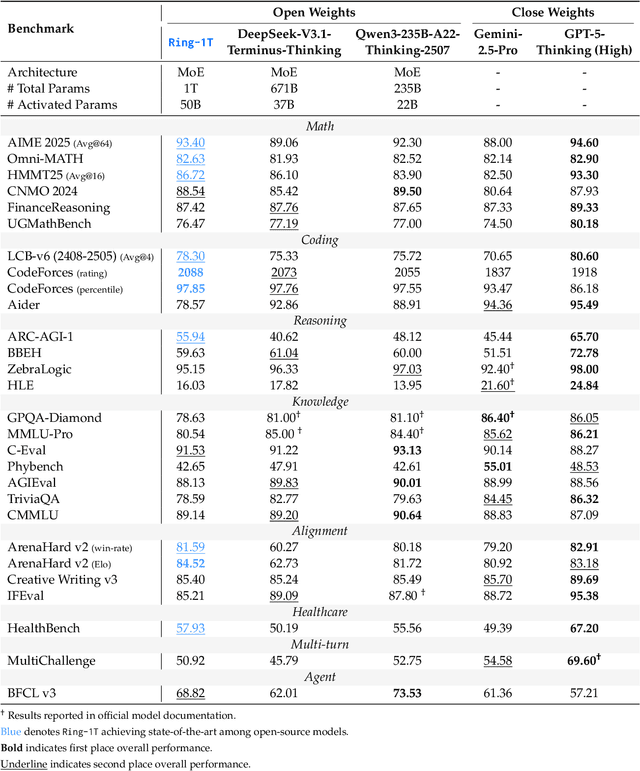

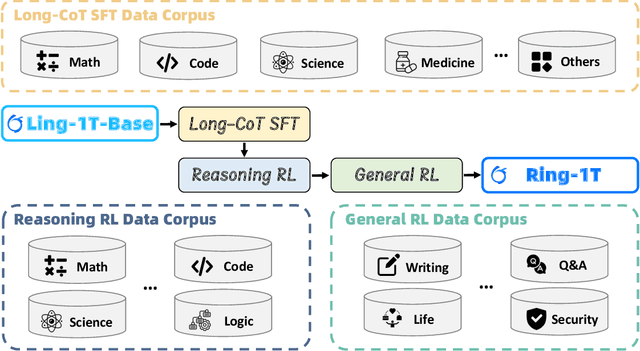

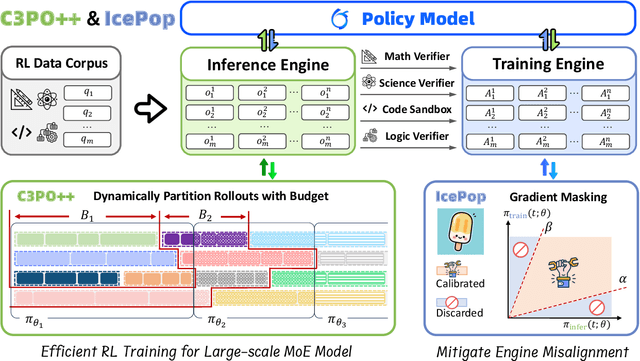

Abstract:We present Ring-1T, the first open-source, state-of-the-art thinking model with trillion-scale parameters. It features 1 trillion total parameters and activates approximately 50 billion per token. Training such models at a trillion-parameter scale introduces unprecedented challenges, including train-inference misalignment, inefficiencies in rollout processing, and bottlenecks in the RL system. To address these, we pioneer three interconnected innovations: (1) IcePop stabilizes RL training via token-level discrepancy masking and clipping, resolving instability from training-inference mismatches; (2) C3PO++ improves resource utilization for long rollouts under a token budget by dynamically partitioning them, thereby obtaining high time efficiency; and (3) ASystem, a high-performance RL framework designed to overcome the systemic bottlenecks that impede trillion-parameter model training. Ring-1T delivers breakthrough results across critical benchmarks: 93.4 on AIME-2025, 86.72 on HMMT-2025, 2088 on CodeForces, and 55.94 on ARC-AGI-v1. Notably, it attains a silver medal-level result on the IMO-2025, underscoring its exceptional reasoning capabilities. By releasing the complete 1T parameter MoE model, we provide the research community with direct access to cutting-edge reasoning capabilities. This contribution marks a significant milestone in democratizing large-scale reasoning intelligence and establishes a new baseline for open-source model performance.

Towards Generalist Intelligence in Dentistry: Vision Foundation Models for Oral and Maxillofacial Radiology

Oct 16, 2025

Abstract:Oral and maxillofacial radiology plays a vital role in dental healthcare, but radiographic image interpretation is limited by a shortage of trained professionals. While AI approaches have shown promise, existing dental AI systems are restricted by their single-modality focus, task-specific design, and reliance on costly labeled data, hindering their generalization across diverse clinical scenarios. To address these challenges, we introduce DentVFM, the first family of vision foundation models (VFMs) designed for dentistry. DentVFM generates task-agnostic visual representations for a wide range of dental applications and uses self-supervised learning on DentVista, a large curated dental imaging dataset with approximately 1.6 million multi-modal radiographic images from various medical centers. DentVFM includes 2D and 3D variants based on the Vision Transformer (ViT) architecture. To address gaps in dental intelligence assessment and benchmarks, we introduce DentBench, a comprehensive benchmark covering eight dental subspecialties, more diseases, imaging modalities, and a wide geographical distribution. DentVFM shows impressive generalist intelligence, demonstrating robust generalization to diverse dental tasks, such as disease diagnosis, treatment analysis, biomarker identification, and anatomical landmark detection and segmentation. Experimental results indicate DentVFM significantly outperforms supervised, self-supervised, and weakly supervised baselines, offering superior generalization, label efficiency, and scalability. Additionally, DentVFM enables cross-modality diagnostics, providing more reliable results than experienced dentists in situations where conventional imaging is unavailable. DentVFM sets a new paradigm for dental AI, offering a scalable, adaptable, and label-efficient model to improve intelligent dental healthcare and address critical gaps in global oral healthcare.

DualNILM: Energy Injection Identification Enabled Disaggregation with Deep Multi-Task Learning

Aug 20, 2025

Abstract:Non-Intrusive Load Monitoring (NILM) offers a cost-effective method to obtain fine-grained appliance-level energy consumption in smart homes and building applications. However, the increasing adoption of behind-the-meter energy sources, such as solar panels and battery storage, poses new challenges for conventional NILM methods that rely solely on at-the-meter data. The injected energy from the behind-the-meter sources can obscure the power signatures of individual appliances, leading to a significant decline in NILM performance. To address this challenge, we present DualNILM, a deep multi-task learning framework designed for the dual tasks of appliance state recognition and injected energy identification in NILM. By integrating sequence-to-point and sequence-to-sequence strategies within a Transformer-based architecture, DualNILM can effectively capture multi-scale temporal dependencies in the aggregate power consumption patterns, allowing for accurate appliance state recognition and energy injection identification. We validate DualNILM using both self-collected and synthesized open NILM datasets that include both appliance-level energy consumption and energy injection. Extensive experimental results demonstrate that DualNILM maintains excellent performance on the dual tasks in NILM, substantially outperforming conventional methods.

CRISP: Contrastive Residual Injection and Semantic Prompting for Continual Video Instance Segmentation

Aug 14, 2025

Abstract:Continual video instance segmentation demands both the plasticity to absorb new object categories and the stability to retain previously learned ones, all while preserving temporal consistency across frames. In this work, we introduce Contrastive Residual Injection and Semantic Prompting (CRISP), an early attempt tailored to address the instance-wise, category-wise, and task-wise confusion in continual video instance segmentation. For instance-wise learning, we model instance tracking and construct an instance correlation loss, which emphasizes the correlation with the prior query space while strengthening the specificity of the current task query. For category-wise learning, we build an adaptive residual semantic prompt (ARSP) learning framework, which constructs a learnable semantic residual prompt pool generated by category text and uses an adjustive query-prompt matching mechanism to build a mapping relationship between the query of the current task and the semantic residual prompt. Meanwhile, a semantic consistency loss based on contrastive learning is introduced to maintain semantic coherence between object queries and residual prompts during incremental training. For task-wise learning, to ensure the correlation at the inter-task level within the query space, we introduce a concise yet powerful initialization strategy for incremental prompts. Extensive experiments on YouTube-VIS-2019 and YouTube-VIS-2021 datasets demonstrate that CRISP significantly outperforms existing continual segmentation methods in the long-term continual video instance segmentation task, avoiding catastrophic forgetting and effectively improving segmentation and classification performance. The code is available at https://github.com/01upup10/CRISP.

Learnable Kernel Density Estimation for Graphs

May 27, 2025

Abstract:This work proposes LGKDE, a framework that learns kernel density estimation for graphs. The key challenge in graph density estimation lies in effectively capturing both structural patterns and semantic variations while maintaining theoretical guarantees. Combining graph kernels and kernel density estimation (KDE) is a standard approach to graph density estimation, but it performs unsatisfactorily because the kernel features are handcrafted and fixed. LGKDE leverages graph neural networks to represent each graph as a discrete distribution and utilizes maximum mean discrepancy to learn the graph metric for multi-scale KDE, where all parameters are learned by maximizing the density of graphs relative to the density of their well-designed perturbed counterparts. The perturbations are conducted on both node features and graph spectra, which helps better characterize the boundary of normal density regions. Theoretically, we establish consistency and convergence guarantees for LGKDE, including bounds on the mean integrated squared error, robustness, and complexity. We validate LGKDE by demonstrating its effectiveness in recovering the underlying density of synthetic graph distributions and applying it to graph anomaly detection across diverse benchmark datasets. Extensive empirical evaluation shows that LGKDE outperforms state-of-the-art baselines on most benchmark datasets.
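
To make the maximum mean discrepancy (MMD) ingredient concrete, here is a minimal sketch of the standard biased MMD² estimator with a Gaussian kernel between two sample sets. Treating each graph as a bag of node-embedding vectors is an assumption made for illustration; the function names, the fixed `bandwidth`, and the toy inputs are hypothetical, and nothing here is learned as it would be in LGKDE.

```python
import math


def gaussian_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two equal-length vectors."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2.0 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased estimate of MMD^2 between the sample sets xs and ys.

    MMD^2 = E[k(x, x')] + E[k(y, y')] - 2 E[k(x, y)], with each expectation
    replaced by an average over all pairs (self-pairs included).
    """
    def mean_kernel(a, b):
        total = sum(gaussian_kernel(p, q, bandwidth) for p in a for q in b)
        return total / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


# Hypothetical node embeddings for two small graphs.
graph_a = [(0.0, 0.0), (1.0, 0.0)]
graph_b = [(5.0, 5.0), (6.0, 5.0)]

same = mmd_squared(graph_a, graph_a)
different = mmd_squared(graph_a, graph_b)
print(same, different)
```

The discrepancy is zero when a set is compared with itself and grows as the two sets move apart, which is what lets it serve as a distance between graph representations inside a kernel density estimate.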
