Bin Jing

Anatomical Region-Guided Contrastive Decoding: A Plug-and-Play Strategy for Mitigating Hallucinations in Medical VLMs

Dec 19, 2025

Abstract: Medical Vision-Language Models (MedVLMs) show immense promise in clinical applicability. However, their reliability is hindered by hallucinations, where models often fail to derive answers from visual evidence, instead relying on learned textual priors. Existing mitigation strategies for MedVLMs have distinct limitations: training-based methods rely on costly expert annotations, limiting scalability, while training-free interventions like contrastive decoding, though data-efficient, apply a global, untargeted correction whose effects in complex real-world clinical settings can be unreliable. To address these challenges, we introduce Anatomical Region-Guided Contrastive Decoding (ARCD), a plug-and-play strategy that mitigates hallucinations by providing targeted, region-specific guidance. Our module leverages an anatomical mask to direct a three-tiered contrastive decoding process. By dynamically re-weighting at the token, attention, and logits levels, it verifiably steers the model's focus onto specified regions, reinforcing anatomical understanding and suppressing factually incorrect outputs. Extensive experiments across diverse datasets, including chest X-ray, CT, brain MRI, and ocular ultrasound, demonstrate our method's effectiveness in improving regional understanding, reducing hallucinations, and enhancing overall diagnostic accuracy.
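For readers unfamiliar with contrastive decoding, the logits-level step can be sketched as below. This is a generic formulation, not ARCD's exact method: the abstract does not give the formula, so the function name `contrastive_logits`, the weight `alpha`, and the idea of contrasting a region-guided pass against an unguided pass are all assumptions made for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def contrastive_logits(guided, unguided, alpha=1.0):
    """Generic contrastive decoding at the logits level (hypothetical helper).

    guided   -- next-token logits from a pass that sees the region guidance
    unguided -- next-token logits from a pass without that guidance
    alpha    -- assumed contrast strength; 0 returns the guided logits unchanged

    Tokens whose score depends on the guidance are boosted, while tokens that
    score highly in both passes (likely driven by textual priors) are damped.
    """
    if len(guided) != len(unguided):
        raise ValueError("logit vectors must have the same length")
    return [(1 + alpha) * g - alpha * u for g, u in zip(guided, unguided)]


# Toy vocabulary of three tokens.
guided = [2.0, 1.0, 0.5]
unguided = [2.0, 2.0, 0.5]
adjusted = contrastive_logits(guided, unguided, alpha=1.0)
probs = softmax(adjusted)
```

In this toy case the second token, which the unguided pass favours more than the guided pass, loses probability mass after the contrast.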

CheXPO-v2: Preference Optimization for Chest X-ray VLMs with Knowledge Graph Consistency

Dec 19, 2025

Abstract: Medical Vision-Language Models (VLMs) are prone to hallucinations, compromising clinical reliability. While reinforcement learning methods like Group Relative Policy Optimization (GRPO) offer a low-cost alignment solution, their reliance on sparse, outcome-based rewards inadvertently encourages models to "overthink" -- generating verbose, convoluted, and unverifiable Chain-of-Thought reasoning to justify answers. This focus on outcomes obscures factual errors and poses significant safety risks. To address this, we propose CheXPO-v2, a novel alignment framework that shifts from outcome to process supervision. Our core innovation is a Knowledge Graph Consistency Reward mechanism driven by Entity-Relation Matching. By explicitly parsing reasoning steps into structured "Disease, Relation, Anatomy" triplets, we provide fine-grained supervision that penalizes incoherent logic and hallucinations at the atomic level. Integrating this with a hard-example mining strategy, our approach significantly outperforms GRPO and state-of-the-art models on benchmarks like MIMIC-CXR-VQA. Crucially, CheXPO-v2 achieves new state-of-the-art accuracy using only 5k samples, demonstrating exceptional data efficiency while producing clinically sound and verifiable reasoning. The project source code is publicly available at: https://github.com/ecoxial2007/CheX-Phi4MM.
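The idea of rewarding reasoning steps that agree with a knowledge graph can be illustrated with a small sketch. It is not the CheXPO-v2 reward: the abstract does not define the scoring rule, so the function `consistency_reward`, the precision-style score, and the relation name `located_in` are hypothetical, and the triplets are assumed to have been extracted from the reasoning text already.

```python
from typing import Iterable, Tuple

# A (disease, relation, anatomy) triplet, following the abstract's description.
Triplet = Tuple[str, str, str]


def normalize(triplet: Triplet) -> Triplet:
    """Lower-case and trim each field so matching ignores surface formatting."""
    return tuple(part.strip().lower() for part in triplet)


def consistency_reward(predicted: Iterable[Triplet],
                       knowledge_graph: Iterable[Triplet]) -> float:
    """Fraction of predicted triplets found in the reference graph.

    Assumed scoring rule: 1.0 means every reasoning step matched the graph,
    0.0 means none did (or no triplets were produced at all).
    """
    reference = {normalize(t) for t in knowledge_graph}
    steps = [normalize(t) for t in predicted]
    if not steps:
        return 0.0
    matched = sum(1 for t in steps if t in reference)
    return matched / len(steps)


# Toy example: one supported step and one unsupported step.
kg = [("Pneumonia", "located_in", "Right lower lobe")]
steps = [
    ("pneumonia", "located_in", "right lower lobe"),
    ("effusion", "located_in", "left lung"),
]
reward = consistency_reward(steps, kg)
```

Because the score is computed per triplet, a single unsupported step lowers the reward even when the final answer is correct, which is the contrast with outcome-only rewards that the abstract draws.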

Every Step Evolves: Scaling Reinforcement Learning for Trillion-Scale Thinking Model

Oct 21, 2025

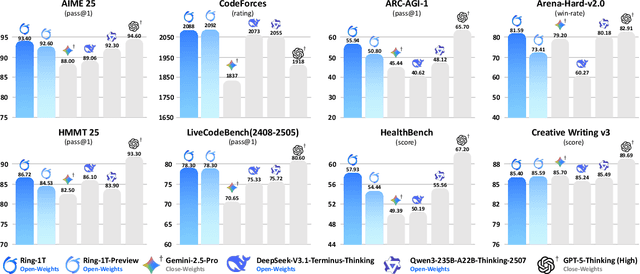

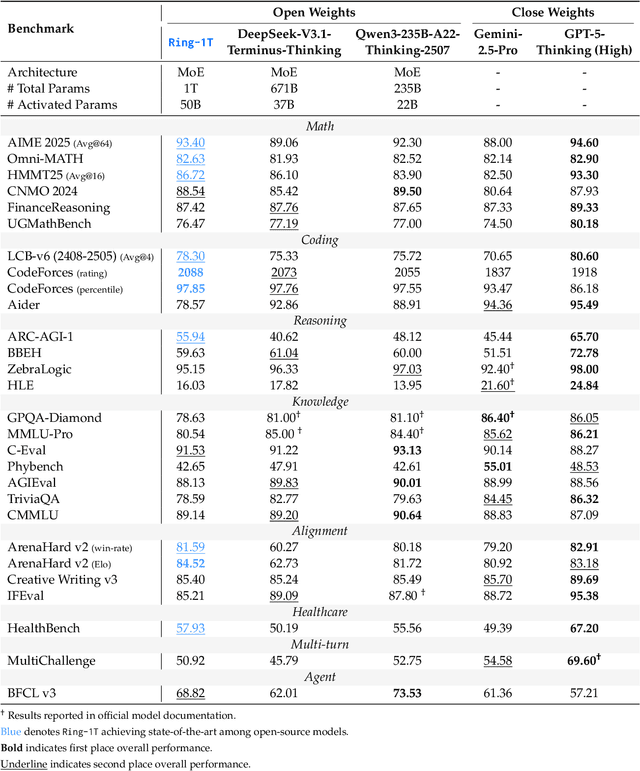

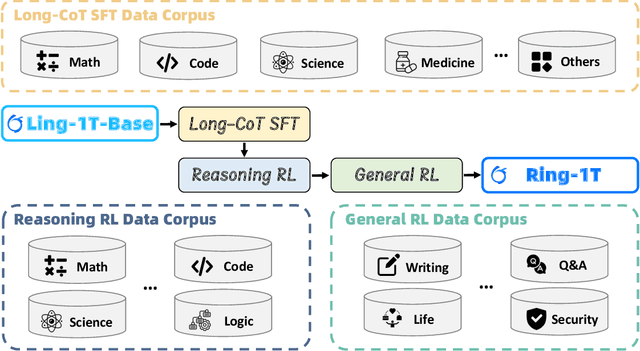

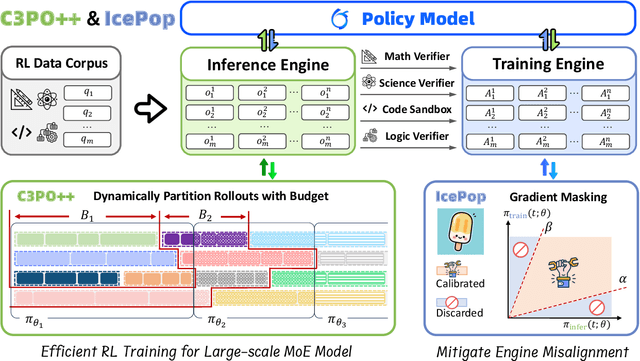

Abstract: We present Ring-1T, the first open-source, state-of-the-art thinking model at trillion-parameter scale. It features 1 trillion total parameters and activates approximately 50 billion per token. Training such models at a trillion-parameter scale introduces unprecedented challenges, including train-inference misalignment, inefficiencies in rollout processing, and bottlenecks in the RL system. To address these, we pioneer three interconnected innovations: (1) IcePop stabilizes RL training via token-level discrepancy masking and clipping, resolving instability from training-inference mismatches; (2) C3PO++ improves resource utilization for long rollouts under a token budget by dynamically partitioning them, thereby obtaining high time efficiency; and (3) ASystem, a high-performance RL framework designed to overcome the systemic bottlenecks that impede trillion-parameter model training. Ring-1T delivers breakthrough results across critical benchmarks: 93.4 on AIME-2025, 86.72 on HMMT-2025, 2088 on CodeForces, and 55.94 on ARC-AGI-v1. Notably, it attains a silver medal-level result on the IMO-2025, underscoring its exceptional reasoning capabilities. By releasing the complete 1T-parameter MoE model, we provide the research community with direct access to cutting-edge reasoning capabilities. This contribution marks a significant milestone in democratizing large-scale reasoning intelligence and establishes a new baseline for open-source model performance.
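Token-level discrepancy masking, as named in item (1), might look roughly like the sketch below. The abstract gives no formula, so this is an assumption-laden illustration: the probability-ratio test, the bounds `low` and `high`, and the helper names are hypothetical and not taken from the Ring-1T work.

```python
import math


def discrepancy_mask(train_logprobs, infer_logprobs, low=0.5, high=2.0):
    """Return a 0/1 mask over tokens (hypothetical helper).

    For each token, compare the probability assigned by the training engine
    with the one assigned by the inference engine that produced the rollout.
    Tokens whose ratio falls outside [low, high] are masked out, so they
    contribute nothing to the policy-gradient loss.
    """
    mask = []
    for lp_train, lp_infer in zip(train_logprobs, infer_logprobs):
        ratio = math.exp(lp_train - lp_infer)
        mask.append(1.0 if low <= ratio <= high else 0.0)
    return mask


def masked_mean(values, mask):
    """Average the per-token values over the tokens that survived the mask."""
    kept = sum(mask)
    if kept == 0:
        return 0.0
    return sum(v * m for v, m in zip(values, mask)) / kept


# Toy rollout of three tokens: the engines agree on the first token only.
train_lp = [-1.0, -0.1, -3.0]
infer_lp = [-1.0, -1.5, -1.0]
mask = discrepancy_mask(train_lp, infer_lp)
loss = masked_mean([2.0, 10.0, -4.0], mask)
```

Masking on both sides of the ratio drops tokens where the two engines disagree in either direction, which is one plausible way to keep mismatched tokens from destabilizing the update.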

GLISP: A Scalable GNN Learning System by Exploiting Inherent Structural Properties of Graphs

Jan 06, 2024

Abstract: As a powerful tool for modeling graph data, Graph Neural Networks (GNNs) have received increasing attention in both academia and industry. Nevertheless, it is notoriously difficult to deploy GNNs on industrial-scale graphs, due to their huge data size and complex topological structures. In this paper, we propose GLISP, a sampling-based GNN learning system for industrial-scale graphs. By exploiting the inherent structural properties of graphs, such as power-law distribution and data locality, GLISP addresses the scalability and performance issues that arise at different stages of the graph learning process. GLISP consists of three core components: graph partitioner, graph sampling service and graph inference engine. The graph partitioner adopts the proposed vertex-cut graph partitioning algorithm AdaDNE to produce balanced partitioning for power-law graphs, which is essential for sampling-based GNN systems. The graph sampling service employs a load-balancing design that allows the one-hop sampling requests of high-degree vertices to be handled by multiple servers. In conjunction with the memory-efficient data structure, the efficiency and scalability are effectively improved. The graph inference engine splits the $K$-layer GNN into $K$ slices and caches the vertex embeddings produced by each slice in the data-locality-aware hybrid caching system for reuse, thus completely eliminating redundant computation caused by data dependencies in the graph. Extensive experiments show that GLISP achieves up to $6.53\times$ and $70.77\times$ speedups over existing GNN systems for training and inference tasks, respectively, and can scale to graphs with over 10 billion vertices and 40 billion edges with limited resources.
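The slice-and-cache idea behind the inference engine can be sketched on a toy graph. This is a simplified illustration, not GLISP's engine: the mean aggregator, scalar features, in-memory dictionary cache, and the function name `layerwise_inference` are all assumptions chosen to keep the example small.

```python
def layerwise_inference(adjacency, features, num_layers):
    """Run a K-layer mean-aggregation GNN one slice (layer) at a time.

    adjacency  -- dict mapping each vertex to a list of neighbour vertices
    features   -- dict mapping each vertex to its scalar input feature
    num_layers -- K, the number of slices

    Each slice computes every vertex embedding exactly once and stores it in
    a cache. The next slice reads neighbours from that cache instead of
    recomputing their K-hop neighbourhoods, which is where redundant work
    would otherwise come from.
    """
    cache = dict(features)  # embeddings entering the first slice
    for _ in range(num_layers):
        next_cache = {}
        for vertex, neighbours in adjacency.items():
            values = [cache[vertex]] + [cache[u] for u in neighbours]
            next_cache[vertex] = sum(values) / len(values)
        cache = next_cache  # embeddings cached for the next slice
    return cache


# Toy path graph 0 - 1 - 2 with scalar features.
adjacency = {0: [1], 1: [0, 2], 2: [1]}
features = {0: 0.0, 1: 3.0, 2: 6.0}
embeddings = layerwise_inference(adjacency, features, num_layers=2)
```

With per-vertex sampling, a two-layer model would recompute vertex 1's first-layer embedding for each of its neighbours; the slice-by-slice loop computes it once and reuses it.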
