Wei-Shi Zheng

Learning to Imagine: Diversify Memory for Incremental Learning using Unlabeled Data

Apr 19, 2022

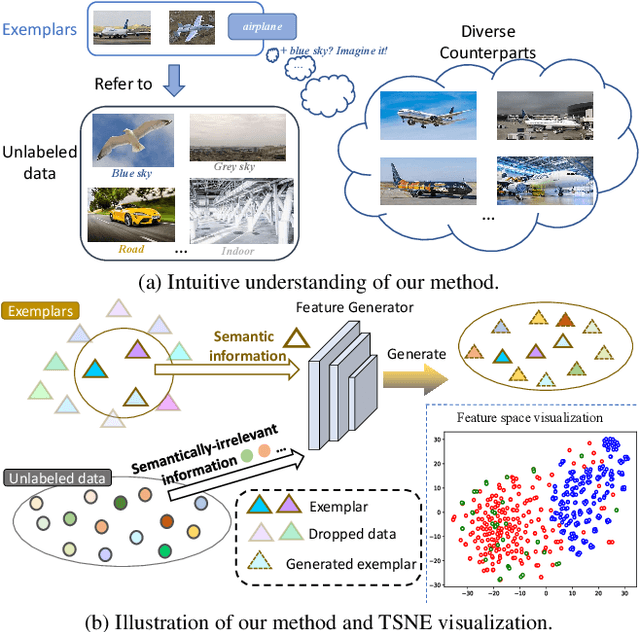

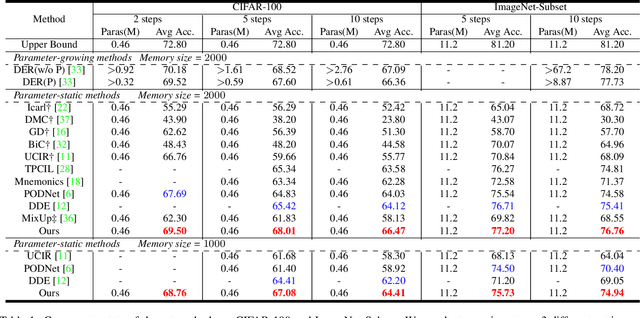

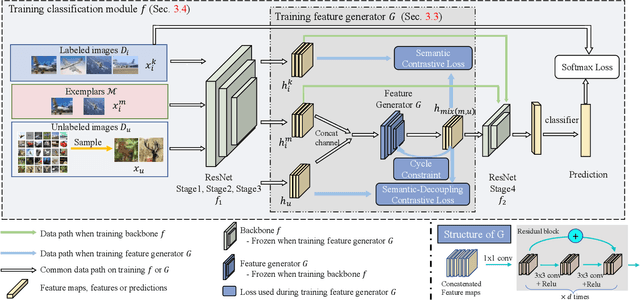

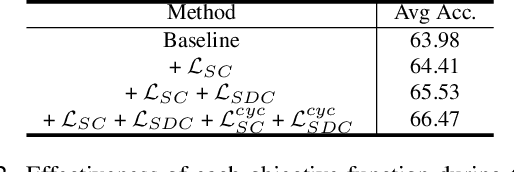

Abstract: Deep neural networks (DNNs) suffer from catastrophic forgetting when learning incrementally, which greatly limits their applications. Although maintaining a handful of samples (called "exemplars") of each task could alleviate forgetting to some extent, existing methods are still limited by the small number of exemplars, since these exemplars are too few to carry enough task-specific knowledge, and therefore the forgetting remains. To overcome this problem, we propose to "imagine" diverse counterparts of given exemplars by referring to the abundant semantic-irrelevant information from unlabeled data. Specifically, we develop a learnable feature generator to diversify exemplars by adaptively generating diverse counterparts of exemplars based on semantic information from exemplars and semantically irrelevant information from unlabeled data. We introduce semantic contrastive learning to enforce the generated samples to be semantically consistent with exemplars and perform semantic-decoupling contrastive learning to encourage diversity of generated samples. The diverse generated samples could effectively prevent the DNN from forgetting when learning new tasks. Our method does not bring any extra inference cost and outperforms state-of-the-art methods on two benchmarks, CIFAR-100 and ImageNet-Subset, by a clear margin.
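The abstract does not state the exact form of its contrastive objectives. As a rough illustration only, the sketch below uses a generic InfoNCE-style loss (a swapped-in, widely used formulation, not necessarily the paper's) to show how a generated feature can be pulled toward its exemplar and pushed away from other features. The function names, the temperature value, and the toy vectors are all assumptions, and the inputs are assumed to be non-zero vectors.

```python
import math


def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Generic InfoNCE-style loss (illustrative, not the paper's exact loss).

    The loss is small when the anchor is more similar (cosine) to the
    positive than to any of the negatives.
    """
    a = normalize(anchor)
    logits = [dot(a, normalize(positive)) / temperature]
    logits += [dot(a, normalize(n)) / temperature for n in negatives]
    # Numerically stable log-sum-exp over all candidates.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    # Negative log-probability of picking the positive.
    return log_sum - logits[0]


# Toy usage: a generated feature that stays close to its exemplar
# yields a much lower loss than one that drifts toward another class.
exemplar = [1.0, 0.0]
consistent = info_nce(exemplar, [0.9, 0.1], [[0.0, 1.0], [-1.0, 0.0]])
drifted = info_nce(exemplar, [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.0]])
print(consistent < drifted)
```

In this reading, the "positive" would be a generated counterpart of the exemplar and the "negatives" features from other classes; how the semantic-decoupling variant chooses its pairs is not specified in the abstract and is left out here.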

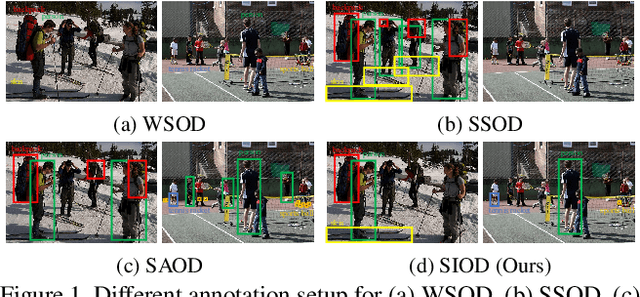

SIOD: Single Instance Annotated Per Category Per Image for Object Detection

Mar 30, 2022

Abstract: Object detection under imperfect data has received great attention recently. Weakly supervised object detection (WSOD) suffers from severe localization issues due to the lack of instance-level annotation, while semi-supervised object detection (SSOD) remains challenging due to the inter-image discrepancy between labeled and unlabeled data. In this study, we propose the Single Instance annotated Object Detection (SIOD), requiring only one instance annotation for each existing category in an image. By reducing the inter-task (WSOD) or inter-image (SSOD) discrepancies to an intra-image discrepancy, SIOD provides more reliable and richer prior knowledge for mining the remaining unlabeled instances and trades off annotation cost against performance. Under the SIOD setting, we propose a simple yet effective framework, termed Dual-Mining (DMiner), which consists of a Similarity-based Pseudo Label Generating module (SPLG) and a Pixel-level Group Contrastive Learning module (PGCL). SPLG first mines latent instances from the feature representation space to alleviate the missing-annotation problem. To avoid being misled by inaccurate pseudo labels, we propose PGCL to boost the tolerance to false pseudo labels. Extensive experiments on MS COCO verify the feasibility of the SIOD setting and the superiority of the proposed method, which obtains consistent and significant improvements compared to baseline methods and achieves comparable results with fully supervised object detection (FSOD) methods with only 40% of instances annotated.

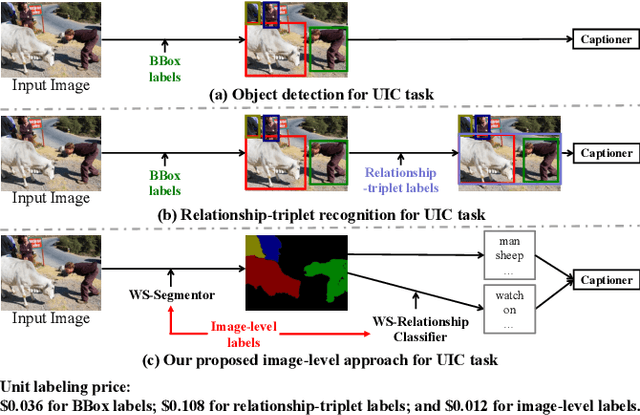

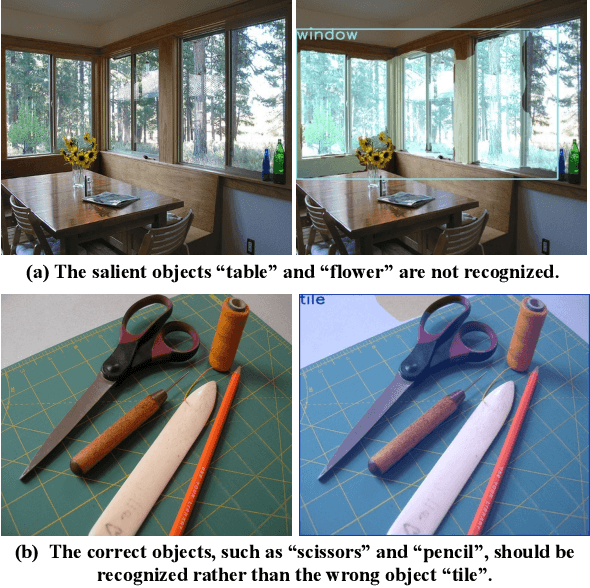

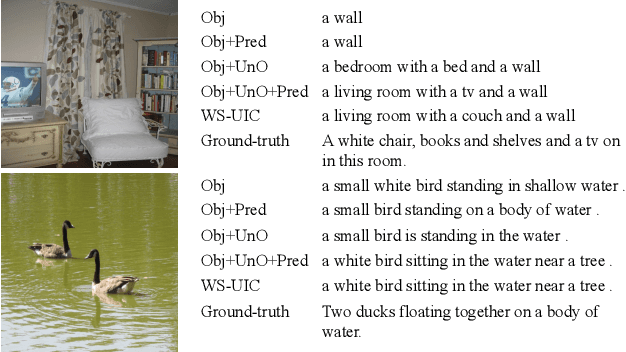

Unpaired Image Captioning by Image-level Weakly-Supervised Visual Concept Recognition

Mar 07, 2022

Abstract: The goal of unpaired image captioning (UIC) is to describe images without using image-caption pairs in the training phase. Although challenging, we expect the task can be accomplished by leveraging a training set of images aligned with visual concepts. Most existing studies use off-the-shelf algorithms to obtain the visual concepts because the Bounding Box (BBox) labels or relationship-triplet labels used for the training are expensive to acquire. In order to resolve the problem of expensive annotations, we propose a novel approach to achieve cost-effective UIC. Specifically, we adopt image-level labels for the optimization of the UIC model in a weakly-supervised manner. For each image, we assume that only the image-level labels are available without specific locations and numbers. The image-level labels are utilized to train a weakly-supervised object recognition model to extract object information (e.g., instance) in an image, and the extracted instances are adopted to infer the relationships among different objects based on an enhanced graph neural network (GNN). The proposed approach achieves comparable or even better performance compared with previous methods without the expensive cost of annotations. Furthermore, we design an unrecognized object (UnO) loss combined with a visual concept reward to improve the alignment of the inferred object and relationship information with the images. It can effectively alleviate the issue, encountered by existing UIC models, of generating sentences with nonexistent objects. To the best of our knowledge, this is the first attempt to solve the problem of Weakly-Supervised visual concept recognition for UIC (WS-UIC) based only on image-level labels. Extensive experiments have been carried out to demonstrate that the proposed WS-UIC model achieves inspiring results on the COCO dataset while significantly reducing the cost of labeling.

Letter-level Online Writer Identification

Dec 06, 2021

Abstract: Writer identification (writer-id), an important field in biometrics, aims to identify a writer by their handwriting. Identification in existing writer-id studies requires a complete document or text, limiting the scalability and flexibility of writer-id in realistic applications. To make the application of writer-id more practical (e.g., on mobile devices), we focus on a novel problem, letter-level online writer-id, which requires only a few trajectories of written letters as identification cues. Unlike text-/document-based writer-id, which has rich context for identification, there are far fewer clues to recognize an author from only a few single letters. A main challenge is that a person often writes a letter in different styles from time to time. We refer to this problem as the variance of online writing styles (Var-O-Styles). We address the Var-O-Styles in a capture-normalize-aggregate fashion: First, we extract different features of a letter trajectory by a carefully designed multi-branch encoder, in an attempt to capture different online writing styles. Then we convert all these style features to a reference style feature domain by a novel normalization layer. Finally, we aggregate the normalized features by a hierarchical attention pooling (HAP), which fuses all the input letters with multiple writing styles into a compact feature vector. In addition, we also contribute a large-scale LEtter-level online wRiter IDentification dataset (LERID) for evaluation. Extensive comparative experiments demonstrate the effectiveness of the proposed framework.
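The aggregation step can be pictured with a small sketch. The abstract does not give the internals of HAP, so the code below is only a generic two-level attention pooling under stated assumptions: each letter is represented by several style feature vectors, a hypothetical `style_query` scores styles within a letter, and a hypothetical `letter_query` scores the pooled letters. In a real model both queries would be learned; here they are plain lists.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(features, query):
    """Weighted average of feature vectors; weights come from dot-product scores."""
    scores = [sum(f_i * q_i for f_i, q_i in zip(f, query)) for f in features]
    weights = softmax(scores)
    dim = len(features[0])
    return [sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)]


def hierarchical_pool(letters, style_query, letter_query):
    """Two-level pooling (illustrative): styles within a letter, then letters."""
    per_letter = [attention_pool(styles, style_query) for styles in letters]
    return attention_pool(per_letter, letter_query)


# Toy usage: two letters, each with two 2-D style features.
letters = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 1.0], [1.0, 1.0]],
]
# All-zero queries give uniform attention, i.e. plain averaging at both levels.
writer_vector = hierarchical_pool(letters, [0.0, 0.0], [0.0, 0.0])
print(writer_vector)
```

Whatever the number of letters or styles, the output has the same dimension as a single feature vector, which is the sense in which the pooled result is "compact".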

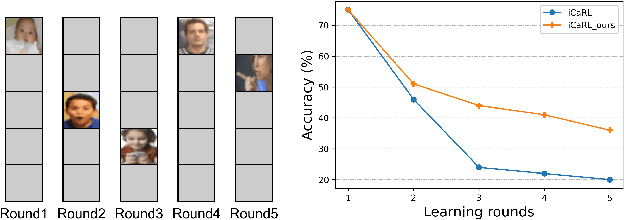

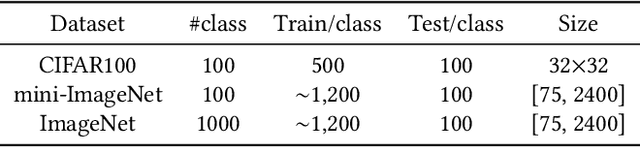

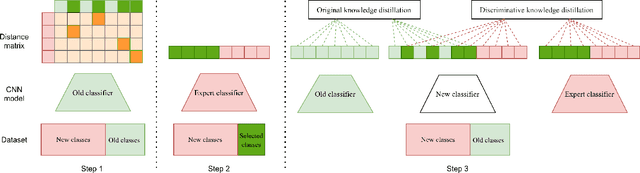

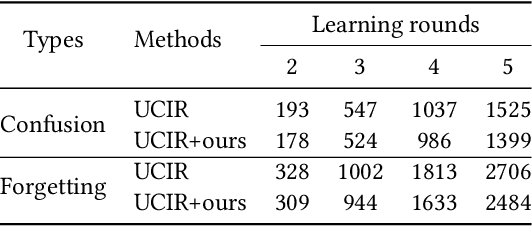

Discriminative Distillation to Reduce Class Confusion in Continual Learning

Aug 11, 2021

Abstract: Successful continual learning of new knowledge would enable intelligent systems to recognize more and more classes of objects. However, current intelligent systems often fail to correctly recognize previously learned classes of objects when updated to learn new classes. It is widely believed that such downgraded performance is solely due to the catastrophic forgetting of previously learned knowledge. In this study, we argue that the class confusion phenomenon may also play a role in downgrading the classification performance during continual learning, i.e., the high similarity between new classes and any previously learned classes would also cause the classifier to make mistakes in recognizing these old classes, even if the knowledge of these old classes is not forgotten. To alleviate the class confusion issue, we propose a discriminative distillation strategy to help the classifier learn the discriminative features between confusing classes well during continual learning. Experiments on multiple natural image classification tasks support that the proposed distillation strategy, when combined with existing methods, is effective in further improving continual learning.

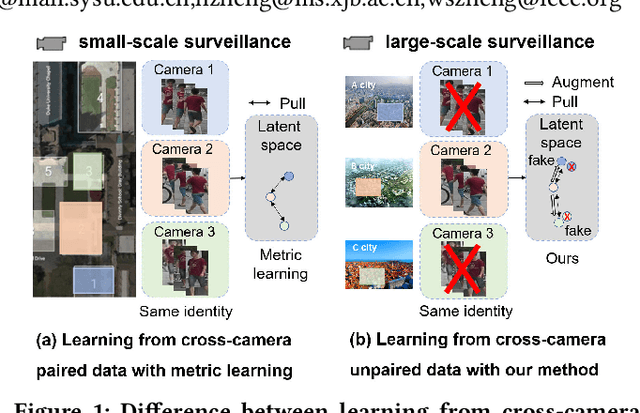

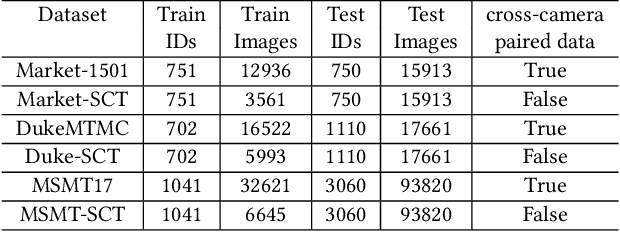

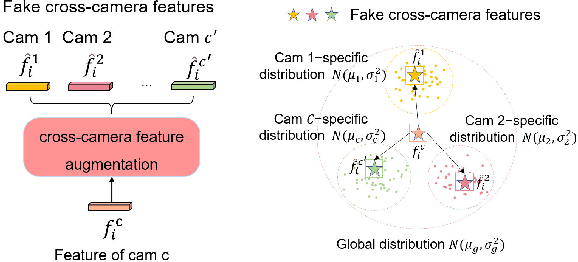

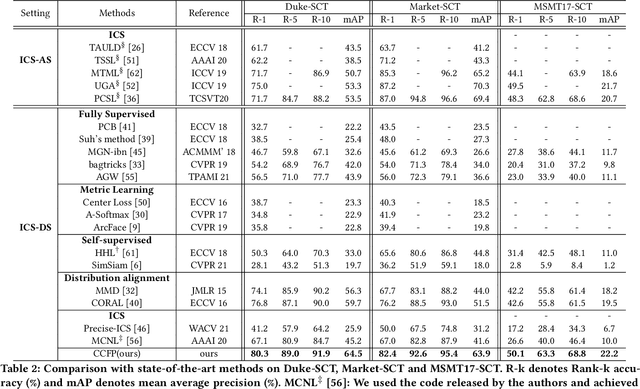

Cross-Camera Feature Prediction for Intra-Camera Supervised Person Re-identification across Distant Scenes

Jul 29, 2021

Abstract: Person re-identification (Re-ID) aims to match person images across non-overlapping camera views. The majority of Re-ID methods focus on small-scale surveillance systems in which each pedestrian is captured in different camera views of adjacent scenes. However, in large-scale surveillance systems that cover larger areas, it is required to track a pedestrian of interest across distant scenes (e.g., a criminal suspect escapes from one city to another). Since most pedestrians appear in limited local areas, it is difficult to collect training data with cross-camera pairs of the same person. In this work, we study intra-camera supervised person re-identification across distant scenes (ICS-DS Re-ID), which uses cross-camera unpaired data with intra-camera identity labels for training. It is challenging because cross-camera paired data plays a crucial role in learning camera-invariant features in most existing Re-ID methods. To learn camera-invariant representations from cross-camera unpaired training data, we propose a cross-camera feature prediction method to mine cross-camera self-supervision information from camera-specific feature distributions by transforming fake cross-camera positive feature pairs and minimizing the distances of the fake pairs. Furthermore, we automatically localize and extract local-level features by a transformer. Joint learning of global-level and local-level features forms a global-local cross-camera feature prediction scheme for mining fine-grained cross-camera self-supervision information. Finally, cross-camera self-supervision and intra-camera supervision are aggregated in a framework. The experiments are conducted in the ICS-DS setting on the Market-SCT, Duke-SCT and MSMT17-SCT datasets. The evaluation results demonstrate the superiority of our method, which gains significant improvements of 15.4 Rank-1 and 22.3 mAP on Market-SCT as compared to the second-best method.

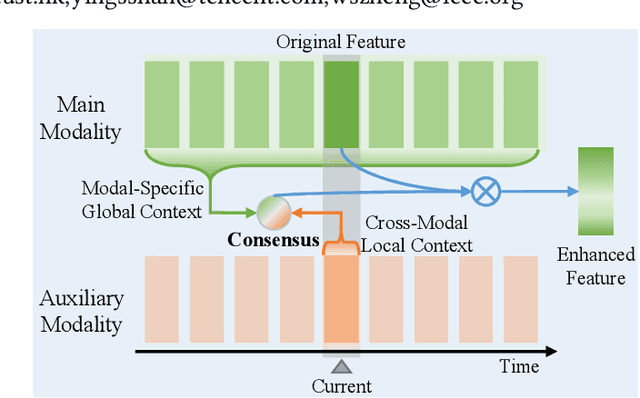

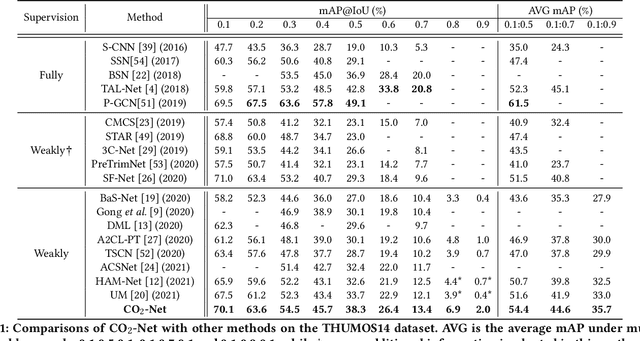

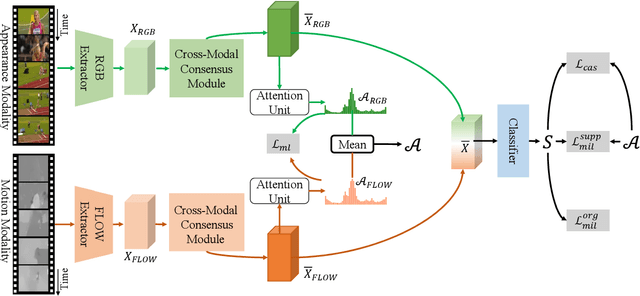

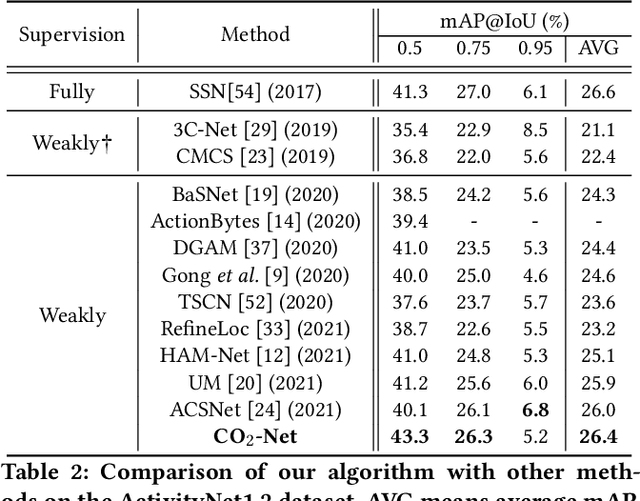

Cross-modal Consensus Network for Weakly Supervised Temporal Action Localization

Jul 27, 2021

Abstract: Weakly supervised temporal action localization (WS-TAL) is a challenging task that aims to localize action instances in the given video with video-level categorical supervision. Both appearance and motion features are used in previous works, but they are not utilized in a proper way: simple concatenation or score-level fusion is applied instead. In this work, we argue that the features extracted from the pretrained extractor, e.g., I3D, are not WS-TAL task-specific features, and thus feature re-calibration is needed to reduce the task-irrelevant information redundancy. Therefore, we propose a cross-modal consensus network (CO2-Net) to tackle this problem. In CO2-Net, we mainly introduce two identical proposed cross-modal consensus modules (CCM) that design a cross-modal attention mechanism to filter out the task-irrelevant information redundancy using the global information from the main modality and the cross-modal local information of the auxiliary modality. Moreover, we treat the attention weights derived from each CCM as the pseudo targets of the attention weights derived from another CCM to maintain the consistency between the predictions derived from two CCMs, forming a mutual learning manner. Finally, we conduct extensive experiments on two commonly used temporal action localization datasets, THUMOS14 and ActivityNet1.2, to verify our method and achieve state-of-the-art results. The experimental results show that our proposed cross-modal consensus module can produce more representative features for temporal action localization.
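The mutual learning idea, where each module's attention weights serve as pseudo targets for the other, can be sketched as a symmetric consistency loss in which the target side is treated as a constant. The abstract does not name the distance used, so the mean squared error below is an assumption, as are the function name and the toy values; gradients are written out by hand to make the "pseudo target" (no gradient through the target) explicit.

```python
def mutual_consistency(att_a, att_b):
    """Symmetric consistency loss between two attention sequences (illustrative).

    loss = mean((a - const(b))^2) + mean((b - const(a))^2)
    Each term only sends gradient to its first argument, because the other
    side is treated as a fixed pseudo target.
    """
    n = len(att_a)
    diffs = [a - b for a, b in zip(att_a, att_b)]
    # Both terms have the same value, so the total is twice the mean squared gap.
    loss = 2.0 * sum(d * d for d in diffs) / n
    # Gradient of the first term with respect to att_a (att_b held constant).
    grad_a = [2.0 * d / n for d in diffs]
    # Gradient of the second term with respect to att_b (att_a held constant).
    grad_b = [-2.0 * d / n for d in diffs]
    return loss, grad_a, grad_b


# Toy usage: per-snippet attention weights from the two modules.
loss, grad_a, grad_b = mutual_consistency([0.2, 0.8], [0.4, 0.6])
print(loss, grad_a, grad_b)
```

A gradient step on each side moves the two attention sequences toward each other, which is one simple way to read "maintaining consistency between the predictions derived from two CCMs".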

Discriminator-Free Generative Adversarial Attack

Jul 20, 2021

Abstract: Deep neural networks (DNNs) are vulnerable to adversarial examples (Figure 1), making DNN-based systems collapse when inconspicuous perturbations are added to the images. Most existing adversarial attack methods are gradient-based and suffer from latency inefficiency and a heavy load on GPU memory. Generative-based adversarial attacks can get rid of this limitation, and some related works propose approaches based on GANs. However, owing to the difficulty of getting GAN training to converge, the resulting adversarial examples have either poor attack ability or poor visual quality. In this work, we find that the discriminator may not be necessary for generative-based adversarial attacks, and propose the Symmetric Saliency-based Auto-Encoder (SSAE) to generate the perturbations, which is composed of the saliency map module and the angle-norm disentanglement of the features module. The advantage of our proposed method lies in that it does not depend on a discriminator and uses the generative saliency map to pay more attention to label-relevant regions. Extensive experiments across various tasks, datasets, and models demonstrate that the adversarial examples generated by SSAE not only make widely used models collapse but also achieve good visual quality. The code is available at https://github.com/BravoLu/SSAE.

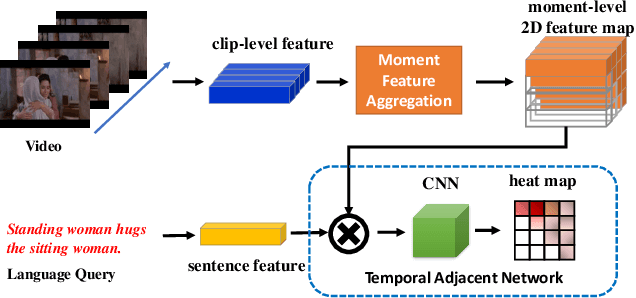

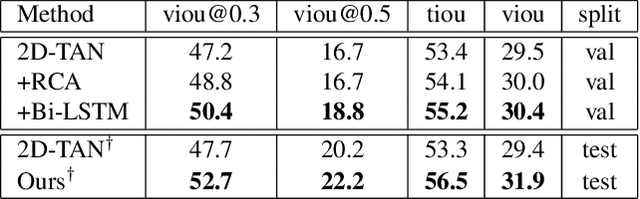

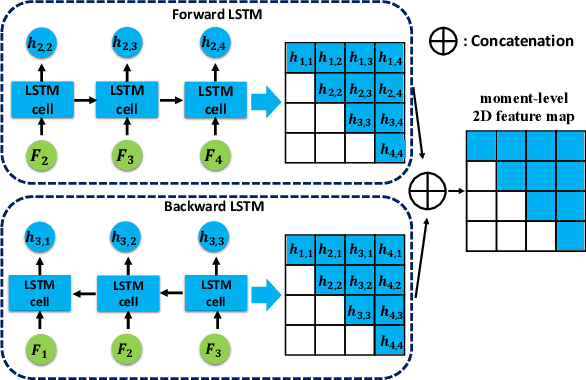

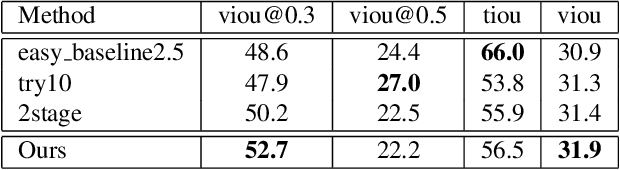

Augmented 2D-TAN: A Two-stage Approach for Human-centric Spatio-Temporal Video Grounding

Jun 20, 2021

Abstract: We propose an effective two-stage approach to tackle the language-based Human-centric Spatio-Temporal Video Grounding (HC-STVG) task. In the first stage, we propose an Augmented 2D Temporal Adjacent Network (Augmented 2D-TAN) to temporally ground the target moment corresponding to the given description. Primarily, we improve the original 2D-TAN in two aspects: First, a temporal context-aware Bi-LSTM Aggregation Module is developed to aggregate clip-level representations, replacing the original max-pooling. Second, we propose to employ a Random Concatenation Augmentation (RCA) mechanism during the training phase. In the second stage, we use a pretrained MDETR model to generate per-frame bounding boxes via language query, and design a set of hand-crafted rules to select the best-matching bounding box output by MDETR for each frame within the grounded moment.
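For context on what the Bi-LSTM module replaces, the following is a minimal sketch of the max-pooling aggregation the abstract attributes to the original 2D-TAN: candidate moments are indexed by (start clip, end clip), and each moment's feature is the element-wise maximum over the clips it spans. The function name, the grid layout, and the toy features are assumptions for illustration; the Bi-LSTM replacement itself is not sketched because the abstract gives no details.

```python
def moment_map(clip_feats):
    """Build a 2D map of candidate-moment features by max-pooling clips.

    grid[start][end] holds the element-wise max of clip features from
    `start` to `end` inclusive; cells with end < start stay None because
    they do not correspond to a valid moment.
    """
    n = len(clip_feats)
    grid = [[None] * n for _ in range(n)]
    for start in range(n):
        running = list(clip_feats[start])
        for end in range(start, n):
            # Extend the moment by one clip and update the running maximum.
            running = [max(r, c) for r, c in zip(running, clip_feats[end])]
            grid[start][end] = running
    return grid


# Toy usage: three clips with 2-D features.
grid = moment_map([[1.0, 0.0], [0.0, 2.0], [3.0, 1.0]])
print(grid[0][1])  # feature of the moment covering clips 0..1
```

Max-pooling discards the order of clips inside a moment, which is one plausible reason a temporal context-aware aggregator would be preferred.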

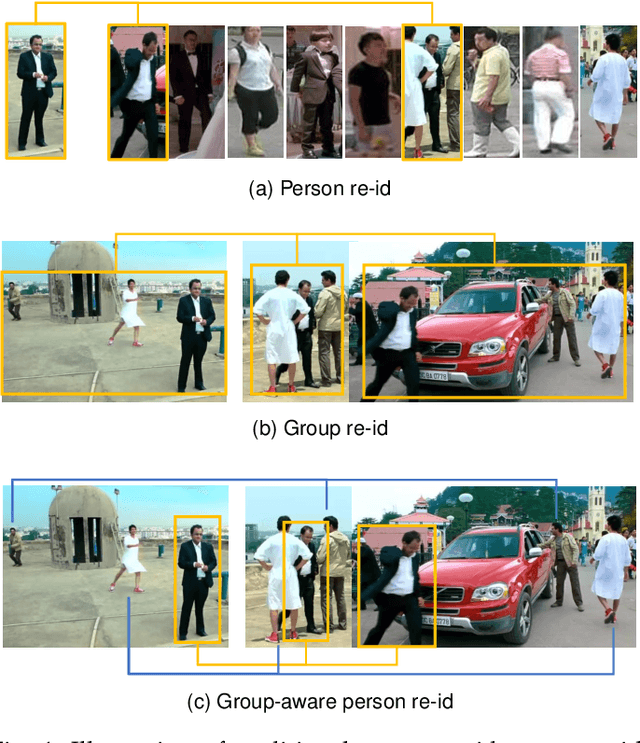

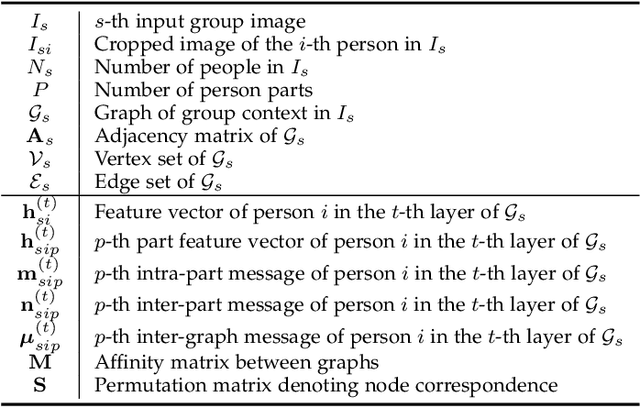

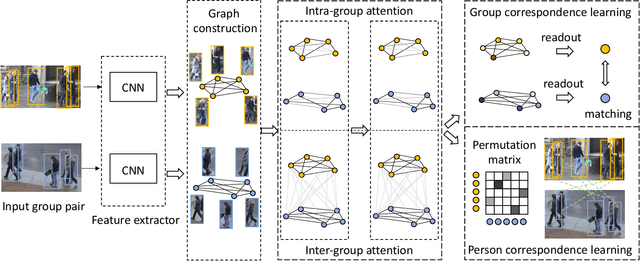

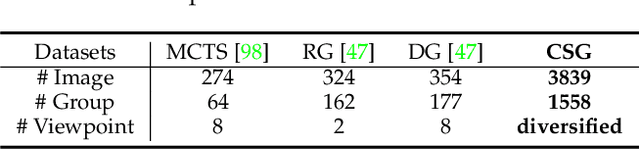

Learning Multi-Attention Context Graph for Group-Based Re-Identification

Apr 29, 2021

Abstract: Learning to re-identify or retrieve a group of people across non-overlapped camera systems has important applications in video surveillance. However, most existing methods focus on (single) person re-identification (re-id), ignoring the fact that people often walk in groups in real scenarios. In this work, we take a step further and consider employing context information for identifying groups of people, i.e., group re-id. We propose a novel unified framework based on graph neural networks to simultaneously address the group-based re-id tasks, i.e., group re-id and group-aware person re-id. Specifically, we construct a context graph with group members as its nodes to exploit dependencies among different people. A multi-level attention mechanism is developed to formulate both intra-group and inter-group context, with an additional self-attention module for robust graph-level representations by attentively aggregating node-level features. The proposed model can be directly generalized to tackle group-aware person re-id using node-level representations. Meanwhile, to facilitate the deployment of deep learning models on these tasks, we build a new group re-id dataset that contains more than 3.8K images with 1.5K annotated groups, an order of magnitude larger than existing group re-id datasets. Extensive experiments on the novel dataset as well as three existing datasets clearly demonstrate the effectiveness of the proposed framework for both group-based re-id tasks. The code is available at https://github.com/daodaofr/group_reid.
