Runze Zhang

Large Material Gaussian Model for Relightable 3D Generation

Sep 26, 2025

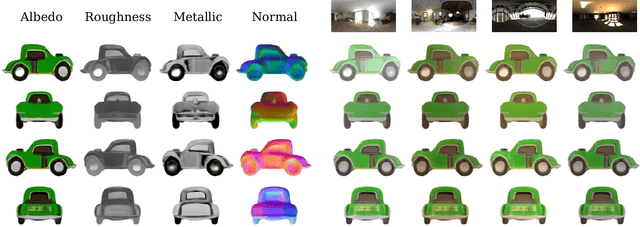

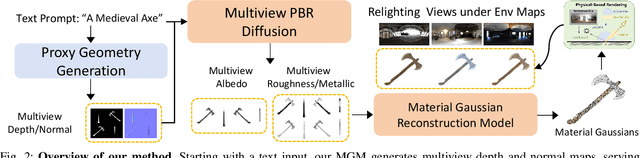

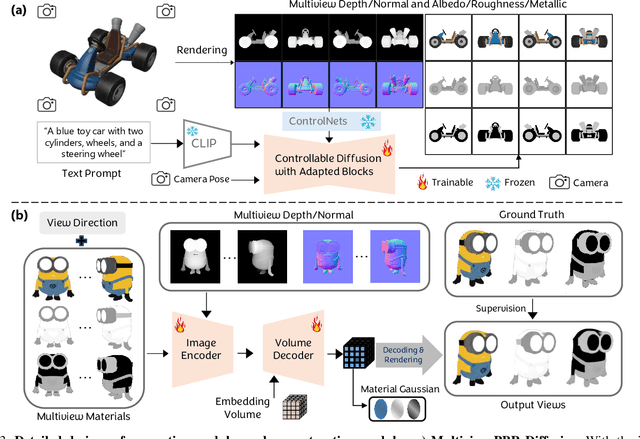

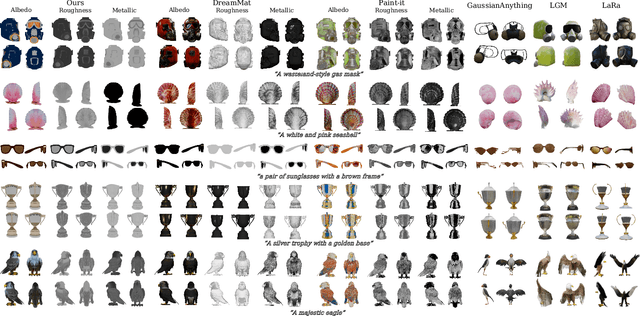

Abstract: The increasing demand for 3D assets across various industries necessitates efficient and automated methods for 3D content creation. Leveraging 3D Gaussian Splatting, recent large reconstruction models (LRMs) have demonstrated the ability to efficiently achieve high-quality 3D rendering by integrating multiview diffusion for generation and scalable transformers for reconstruction. However, existing models fail to produce the material properties of assets, which are crucial for realistic rendering in diverse lighting environments. In this paper, we introduce the Large Material Gaussian Model (MGM), a novel framework designed to generate high-quality 3D content with Physically Based Rendering (PBR) materials, i.e., albedo, roughness, and metallic properties, rather than merely producing RGB textures with uncontrolled light baking. Specifically, we first fine-tune a new multiview material diffusion model conditioned on input depth and normal maps. Utilizing the generated multiview PBR images, we explore a Gaussian material representation that not only aligns with 2D Gaussian Splatting but also models each channel of the PBR materials. The reconstructed point clouds can then be rendered to acquire PBR attributes, enabling dynamic relighting by applying various ambient light maps. Extensive experiments demonstrate that the materials produced by our method not only exhibit greater visual appeal compared to baseline methods but also enhance material modeling, thereby enabling practical downstream rendering applications.

UAV-Based Remote Sensing of Soil Moisture Across Diverse Land Covers: Validation and Bayesian Uncertainty Characterization

Jun 05, 2025

Abstract: High-resolution soil moisture (SM) observations are critical for agricultural monitoring, forestry management, and hazard prediction, yet current satellite passive microwave missions cannot directly provide retrievals at tens-of-meter spatial scales. Unmanned aerial vehicle (UAV) mounted microwave radiometry presents a promising alternative, but most evaluations to date have focused on agricultural settings, with limited exploration across other land covers and few efforts to quantify retrieval uncertainty. This study addresses both gaps by evaluating SM retrievals from a drone-based Portable L-band Radiometer (PoLRa) across shrubland, bare soil, and forest strips in Central Illinois, U.S., using a 10-day field campaign in 2024. Controlled UAV flights at altitudes of 10 m, 20 m, and 30 m were performed to generate brightness temperatures (TB) at spatial resolutions of 7 m, 14 m, and 21 m. SM retrievals were carried out using multiple tau-omega-based algorithms, including the single channel algorithm (SCA), dual channel algorithm (DCA), and multi-temporal dual channel algorithm (MTDCA). A Bayesian inference framework was then applied to provide probabilistic uncertainty characterization for both SM and vegetation optical depth (VOD). Results show that the gridded TB distributions consistently capture dry-wet gradients associated with vegetation density variations, and spatial correlations between polarized observations are largely maintained across scales. Validation against in situ measurements indicates that PoLRa derived SM retrievals from the SCAV and MTDCA algorithms achieve unbiased root-mean-square errors (ubRMSE) generally below 0.04 m³/m³ across different land covers. Bayesian posterior analyses confirm that reference SM values largely fall within the derived uncertainty intervals, with mean uncertainty ranges around 0.02 m³/m³ and 0.11 m³/m³ for SCA and DCA related retrievals.
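The ubRMSE figure quoted in this abstract is the root-mean-square error that remains after the mean bias between retrievals and reference values is removed. A minimal sketch of that standard definition follows; the function name `ubrmse` and the sample values are illustrative assumptions, not taken from the study.

```python
import math

def ubrmse(retrieved, reference):
    """Unbiased RMSE: RMSE of the differences after subtracting their mean (the bias)."""
    if len(retrieved) != len(reference) or not retrieved:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(retrieved)
    diffs = [r - t for r, t in zip(retrieved, reference)]
    bias = sum(diffs) / n  # mean difference between retrieval and reference
    return math.sqrt(sum((d - bias) ** 2 for d in diffs) / n)

# Hypothetical soil moisture values in m3/m3 (not data from the paper).
retrieved = [0.10, 0.20]
in_situ = [0.10, 0.30]
print(ubrmse(retrieved, in_situ))
```

A constant offset between the two series contributes nothing to ubRMSE, which is why the metric is commonly reported alongside the bias itself.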

DropletVideo: A Dataset and Approach to Explore Integral Spatio-Temporal Consistent Video Generation

Mar 08, 2025

Abstract: Spatio-temporal consistency is a critical research topic in video generation. A qualified generated video segment must ensure plot plausibility and coherence while maintaining visual consistency of objects and scenes across varying viewpoints. Prior research, especially in open-source projects, primarily focuses on either temporal or spatial consistency, or their basic combination, such as appending a description of a camera movement after a prompt without constraining the outcomes of this movement. However, camera movement may introduce new objects to the scene or eliminate existing ones, thereby overlaying and affecting the preceding narrative. Especially in videos with numerous camera movements, the interplay between multiple plots becomes increasingly complex. This paper introduces and examines integral spatio-temporal consistency, considering the synergy between plot progression and camera techniques, and the long-term impact of prior content on subsequent generation. Our research spans dataset construction through model development. First, we constructed the DropletVideo-10M dataset, which comprises 10 million videos featuring dynamic camera motion and object actions. Each video is annotated with a caption averaging 206 words, detailing various camera movements and plot developments. Following this, we developed and trained the DropletVideo model, which excels in preserving spatio-temporal coherence during video generation. The DropletVideo dataset and model are accessible at https://dropletx.github.io.

ArcPro: Architectural Programs for Structured 3D Abstraction of Sparse Points

Mar 05, 2025

Abstract: We introduce ArcPro, a novel learning framework built on architectural programs to recover structured 3D abstractions from highly sparse and low-quality point clouds. Specifically, we design a domain-specific language (DSL) to hierarchically represent building structures as a program, which can be efficiently converted into a mesh. We bridge feedforward and inverse procedural modeling by using a feedforward process for training data synthesis, allowing the network to make reverse predictions. We train an encoder-decoder on the points-program pairs to establish a mapping from unstructured point clouds to architectural programs, where a 3D convolutional encoder extracts point cloud features and a transformer decoder autoregressively predicts the programs in a tokenized form. Inference by our method is highly efficient and produces plausible and faithful 3D abstractions. Comprehensive experiments demonstrate that ArcPro outperforms both traditional architectural proxy reconstruction and learning-based abstraction methods. We further explore its potential to work with multi-view image and natural language inputs.

Sub-Meter Remote Sensing of Soil Moisture Using Portable L-band Microwave Radiometer

Sep 25, 2024

Abstract: Spaceborne microwave passive soil moisture products are known for their accuracy but are often limited by coarse spatial resolutions. This limits their ability to capture finer soil moisture gradients and hinders their applications. The Portable L band radiometer (PoLRa) offers soil moisture measurements from submeter to tens of meters depending on the altitude of measurement. Given that the assessments of soil moisture derived from this sensor are notably lacking, this study aims to evaluate the performance of submeter soil moisture retrieved from PoLRa mounted on poles at four different locations in central Illinois, USA. The evaluation focuses on the consistency of PoLRa measured brightness temperatures from different directions relative to the same area, and the accuracy of PoLRa derived soil moisture. As PoLRa shares many aspects of the L band radiometer onboard the NASA Soil Moisture Active Passive (SMAP) mission, two SMAP operational algorithms and the conventional dual channel algorithm were applied to calculate soil moisture from the measured brightness temperatures. The vertically polarized brightness temperatures from the PoLRa are typically more stable than their horizontally polarized counterparts. In each test period, the standard deviations of observed dual polarization brightness temperatures are generally less than 5 K. By comparing PoLRa based soil moisture retrievals against the moisture values obtained by handheld time domain reflectometry, the unbiased root mean square error and the Pearson correlation coefficient are mostly below 0.04 and above 0.75, confirming the high accuracy of PoLRa derived soil moisture retrievals and the feasibility of utilizing SMAP algorithms for PoLRa data. These findings highlight the significant potential of ground or drone based PoLRa measurements as a standalone reference for future spaceborne L band sensors.

DreamMat: High-quality PBR Material Generation with Geometry- and Light-aware Diffusion Models

May 27, 2024

Abstract: 2D diffusion model, which often contains unwanted baked-in shading effects and results in unrealistic rendering effects in the downstream applications. Generating Physically Based Rendering (PBR) materials instead of just RGB textures would be a promising solution. However, directly distilling the PBR material parameters from 2D diffusion models still suffers from incorrect material decomposition, such as baked-in shading effects in albedo. We introduce DreamMat, an innovative approach that resolves this problem and generates high-quality PBR materials from text descriptions. We find that the main reason for the incorrect material distillation is that large-scale 2D diffusion models are only trained to generate final shading colors, resulting in insufficient constraints on material decomposition during distillation. To tackle this problem, we first finetune a new light-aware 2D diffusion model to condition on a given lighting environment and generate the shading results under this specific lighting condition. Then, by applying the same environment lights in the material distillation, DreamMat can generate high-quality PBR materials that are not only consistent with the given geometry but also free from any baked-in shading effects in albedo. Extensive experiments demonstrate that the materials produced through our methods exhibit greater visual appeal to users and achieve significantly superior rendering quality compared to baseline methods, which are preferable for downstream tasks such as game and film production.

Image Content Generation with Causal Reasoning

Dec 12, 2023

Abstract: The emergence of ChatGPT has once again sparked research in generative artificial intelligence (GAI). While people have been amazed by the generated results, they have also noticed the reasoning potential reflected in the generated textual content. However, this current ability for causal reasoning is primarily limited to the domain of language generation, such as in models like GPT-3. In the visual modality, there is currently no equivalent research. Considering causal reasoning in visual content generation is significant because visual information contains infinite granularity. In particular, images can provide more intuitive and specific demonstrations for certain reasoning tasks, especially when compared to coarse-grained text. Hence, we propose a new image generation task called visual question answering with image (VQAI) and establish a dataset of the same name based on the classic *Tom and Jerry* animated series. Additionally, we develop a new paradigm for image generation to tackle the challenges of this task. Finally, we perform extensive experiments and analyses, including visualizations of the generated content and discussions on the potentials and limitations. The code and dataset are publicly available under the CC BY-NC-SA 4.0 license for academic and non-commercial use at https://github.com/IEIT-AGI/MIX-Shannon/blob/main/projects/VQAI/lgd_vqai.md.

LiDAL: Inter-frame Uncertainty Based Active Learning for 3D LiDAR Semantic Segmentation

Nov 11, 2022

Abstract: We propose LiDAL, a novel active learning method for 3D LiDAR semantic segmentation by exploiting inter-frame uncertainty among LiDAR frames. Our core idea is that a well-trained model should generate robust results irrespective of viewpoints for scene scanning and thus the inconsistencies in model predictions across frames provide a very reliable measure of uncertainty for active sample selection. To implement this uncertainty measure, we introduce new inter-frame divergence and entropy formulations, which serve as the metrics for active selection. Moreover, we demonstrate additional performance gains by predicting and incorporating pseudo-labels, which are also selected using the proposed inter-frame uncertainty measure. Experimental results validate the effectiveness of LiDAL: we achieve 95% of the performance of fully supervised learning with less than 5% of annotations on the SemanticKITTI and nuScenes datasets, outperforming state-of-the-art active learning methods. Code release: https://github.com/hzykent/LiDAL.
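The idea of scoring uncertainty from prediction inconsistency across frames can be illustrated with a generic sketch. The code below is not the LiDAL formulation from the paper: it assumes a simple stand-in in which the per-frame class probabilities for one point are averaged, the entropy of that mean is one score, and the mean KL divergence of each frame from the mean is the other. The function names and sample probabilities are hypothetical.

```python
import math

def entropy(p):
    """Shannon entropy (natural log) of a discrete distribution."""
    return -sum(x * math.log(x) for x in p if x > 0)

def inter_frame_scores(frame_probs):
    """Return (mean KL divergence from the mean prediction, entropy of the mean).

    frame_probs: list of class-probability lists, one per frame, for the same point.
    """
    n = len(frame_probs)
    k = len(frame_probs[0])
    mean = [sum(p[c] for p in frame_probs) / n for c in range(k)]
    divergence = sum(
        sum(p[c] * math.log(p[c] / mean[c]) for c in range(k) if p[c] > 0)
        for p in frame_probs
    ) / n
    return divergence, entropy(mean)

# Two frames that agree versus two frames that disagree about the same point.
consistent = inter_frame_scores([[0.9, 0.1], [0.9, 0.1]])
inconsistent = inter_frame_scores([[0.9, 0.1], [0.1, 0.9]])
print(consistent, inconsistent)
```

Under this stand-in, points whose predictions flip between frames receive higher scores on both measures and would be prioritized for annotation.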

SoccerNet 2022 Challenges Results

Oct 05, 2022

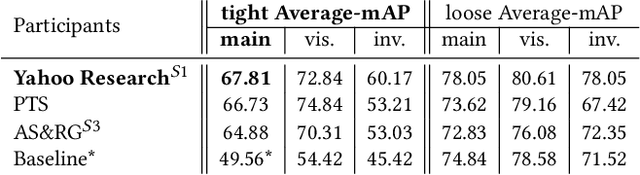

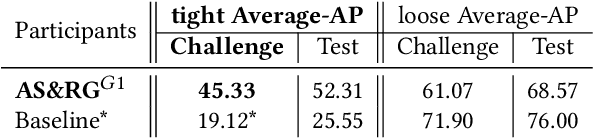

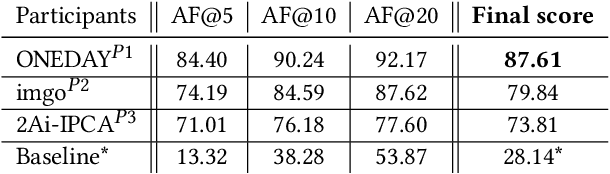

Abstract: The SoccerNet 2022 challenges were the second annual video understanding challenges organized by the SoccerNet team. In 2022, the challenges were composed of 6 vision-based tasks: (1) action spotting, focusing on retrieving action timestamps in long untrimmed videos, (2) replay grounding, focusing on retrieving the live moment of an action shown in a replay, (3) pitch localization, focusing on detecting line and goal part elements, (4) camera calibration, dedicated to retrieving the intrinsic and extrinsic camera parameters, (5) player re-identification, focusing on retrieving the same players across multiple views, and (6) multiple object tracking, focusing on tracking players and the ball through unedited video streams. Compared to last year's challenges, tasks (1-2) had their evaluation metrics redefined to consider tighter temporal accuracies, and tasks (3-6) were novel, including their underlying data and annotations. More information on the tasks, challenges and leaderboards is available at https://www.soccer-net.org. Baselines and development kits are available at https://github.com/SoccerNet.

Lung-Originated Tumor Segmentation from Computed Tomography Scan (LOTUS) Benchmark

Jan 03, 2022

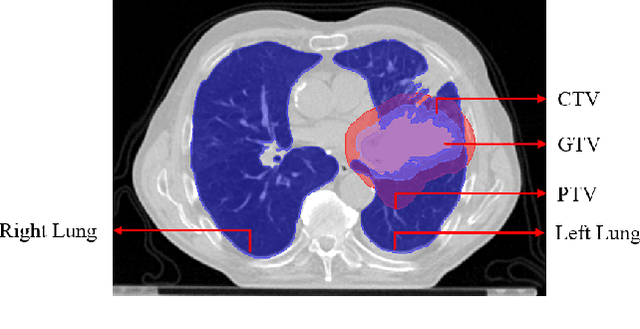

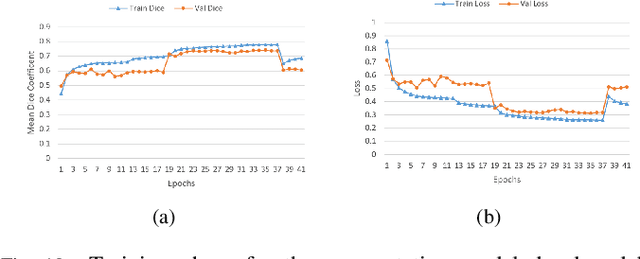

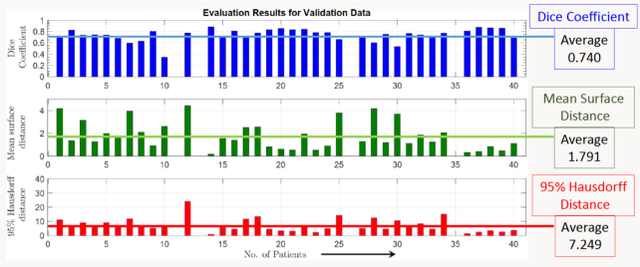

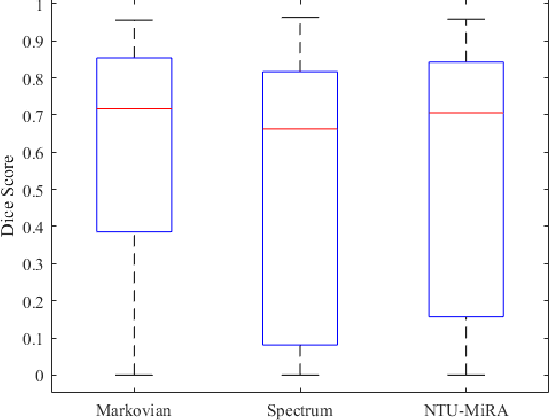

Abstract: Lung cancer is one of the deadliest cancers, and in part its effective diagnosis and treatment depend on the accurate delineation of the tumor. Human-centered segmentation, which is currently the most common approach, is subject to inter-observer variability, and is also time-consuming, considering the fact that only experts are capable of providing annotations. Automatic and semi-automatic tumor segmentation methods have recently shown promising results. However, as different researchers have validated their algorithms using various datasets and performance metrics, reliably evaluating these methods is still an open challenge. The goal of the Lung-Originated Tumor Segmentation from Computed Tomography Scan (LOTUS) Benchmark, created through the 2018 IEEE Video and Image Processing (VIP) Cup competition, is to provide a unique dataset and pre-defined metrics, so that different researchers can develop and evaluate their methods in a unified fashion. The 2018 VIP Cup started with a global engagement from 42 countries to access the competition data. At the registration stage, there were 129 members clustered into 28 teams from 10 countries, out of which 9 teams made it to the final stage and 6 teams successfully completed all the required tasks. In a nutshell, all the algorithms proposed during the competition are based on deep learning models combined with a false positive reduction technique. Methods developed by the three finalists show promising results in tumor segmentation; however, more effort should be put into reducing the false positive rate. This competition manuscript presents an overview of the VIP-Cup challenge, along with the proposed algorithms and results.
