Rengang Li

DropletVideo: A Dataset and Approach to Explore Integral Spatio-Temporal Consistent Video Generation

Mar 08, 2025

Abstract: Spatio-temporal consistency is a critical research topic in video generation. A qualified generated video segment must ensure plot plausibility and coherence while maintaining visual consistency of objects and scenes across varying viewpoints. Prior research, especially in open-source projects, primarily focuses on either temporal or spatial consistency, or their basic combination, such as appending a description of a camera movement after a prompt without constraining the outcomes of this movement. However, camera movement may introduce new objects to the scene or eliminate existing ones, thereby overlaying and affecting the preceding narrative. Especially in videos with numerous camera movements, the interplay between multiple plots becomes increasingly complex. This paper introduces and examines integral spatio-temporal consistency, considering the synergy between plot progression and camera techniques, and the long-term impact of prior content on subsequent generation. Our research encompasses dataset construction through to the development of the model. Initially, we constructed a DropletVideo-10M dataset, which comprises 10 million videos featuring dynamic camera motion and object actions. Each video is annotated with an average caption of 206 words, detailing various camera movements and plot developments. Following this, we developed and trained the DropletVideo model, which excels in preserving spatio-temporal coherence during video generation. The DropletVideo dataset and model are accessible at https://dropletx.github.io.

Image Content Generation with Causal Reasoning

Dec 12, 2023

Abstract: The emergence of ChatGPT has once again sparked research in generative artificial intelligence (GAI). While people have been amazed by the generated results, they have also noticed the reasoning potential reflected in the generated textual content. However, this current ability for causal reasoning is primarily limited to the domain of language generation, such as in models like GPT-3. In the visual modality, there is currently no equivalent research. Considering causal reasoning in visual content generation is significant because visual information contains infinite granularity. In particular, images can provide more intuitive and specific demonstrations for certain reasoning tasks, especially when compared to coarse-grained text. Hence, we propose a new image generation task called visual question answering with image (VQAI) and establish a dataset of the same name based on the classic Tom and Jerry animated series. Additionally, we develop a new paradigm for image generation to tackle the challenges of this task. Finally, we perform extensive experiments and analyses, including visualizations of the generated content and discussions on the potentials and limitations. The code and dataset are publicly available under the CC BY-NC-SA 4.0 license for academic and non-commercial usage at: https://github.com/IEIT-AGI/MIX-Shannon/blob/main/projects/VQAI/lgd_vqai.md.

Real-Aug: Realistic Scene Synthesis for LiDAR Augmentation in 3D Object Detection

May 22, 2023

Abstract: Data and models are undoubtedly the two supporting pillars of LiDAR object detection. However, data-centric works have fallen far behind the ever-growing list of fancy new models. In this work, we systematically study the synthesis-based LiDAR data augmentation approach (so-called GT-Aug), which offers maximum controllability over generated data samples. We pinpoint that the main shortcoming of existing works is the introduction of unrealistic LiDAR scan patterns during GT-Aug. In light of this finding, we propose Real-Aug, a synthesis-based augmentation method that prioritizes generating realistic LiDAR scans. Our method consists of a reality-conforming scene composition module, which handles the details of the composition, and a real-synthesis mixing up training strategy, which gradually adapts the data distribution from synthetic data to the real one. To verify the effectiveness of our method, we conduct extensive ablation studies and validate the proposed Real-Aug on a wide combination of detectors and datasets. We achieve a state-of-the-art 0.744 NDS and 0.702 mAP on the nuScenes test set. The code shall be released soon.

SGED: A Benchmark dataset for Performance Evaluation of Spiking Gesture Emotion Recognition

Apr 28, 2023

Abstract: In the field of affective computing, researchers have improved the performance of models and algorithms by exploiting the complementarity of multimodal information. However, as more and more modalities emerge, dataset development has been unable to keep up with the progress of existing modal sensing equipment. Collecting and studying multimodal data is complex and significant work. To address the partial lack of community data, we collected and labeled a new homogeneous multimodal gesture emotion recognition dataset based on an analysis of existing datasets. This dataset addresses the shortage of homogeneous multimodal data and provides a new research direction for emotion recognition. Moreover, we propose a pseudo dual-flow network based on this dataset and verify the dataset's application potential in the affective computing community. The experimental results demonstrate that it is feasible to use traditional visual information and spiking visual information based on homogeneous multimodal data for visual emotion recognition. The dataset is available at https://github.com/201528014227051/SGED

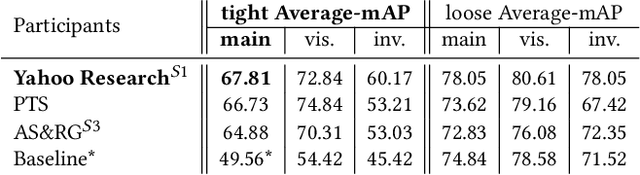

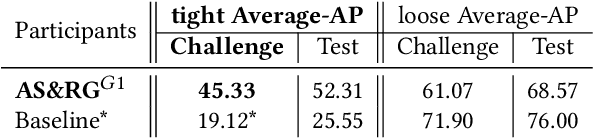

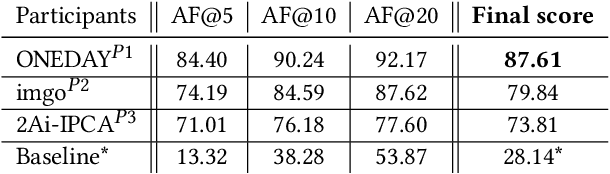

SoccerNet 2022 Challenges Results

Oct 05, 2022

Abstract: The SoccerNet 2022 challenges were the second annual video understanding challenges organized by the SoccerNet team. In 2022, the challenges were composed of 6 vision-based tasks: (1) action spotting, focusing on retrieving action timestamps in long untrimmed videos, (2) replay grounding, focusing on retrieving the live moment of an action shown in a replay, (3) pitch localization, focusing on detecting line and goal part elements, (4) camera calibration, dedicated to retrieving the intrinsic and extrinsic camera parameters, (5) player re-identification, focusing on retrieving the same players across multiple views, and (6) multiple object tracking, focusing on tracking players and the ball through unedited video streams. Compared to last year's challenges, tasks (1-2) had their evaluation metrics redefined to consider tighter temporal accuracies, and tasks (3-6) were novel, including their underlying data and annotations. More information on the tasks, challenges and leaderboards is available at https://www.soccer-net.org. Baselines and development kits are available at https://github.com/SoccerNet.
