Lei Li

Carnegie Mellon University

3D Textured Shape Recovery with Learned Geometric Priors

Sep 07, 2022

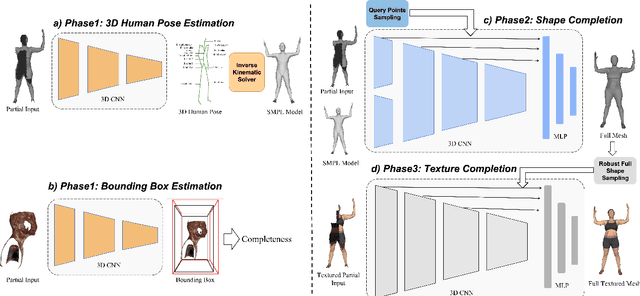

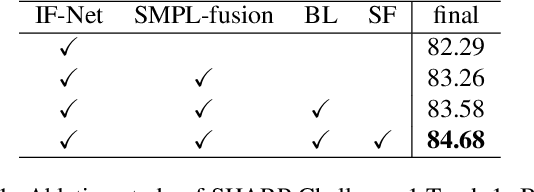

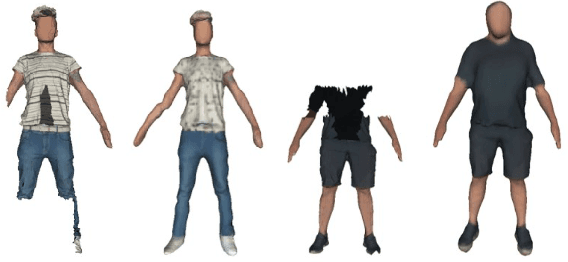

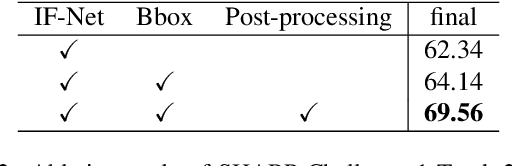

Abstract: 3D textured shape recovery from partial scans is crucial for many real-world applications. Existing approaches have demonstrated the efficacy of implicit function representation, but they suffer from partial inputs with severe occlusions and varying object types, which greatly hinders their application value in the real world. This technical report presents our approach to address these limitations by incorporating learned geometric priors. To this end, we generate a SMPL model from learned pose prediction and fuse it into the partial input to add prior knowledge of human bodies. We also propose a novel completeness-aware bounding box adaptation for handling different scales and degrees of partialness of partial scans.

Multi-Modality Cardiac Image Computing: A Survey

Aug 26, 2022

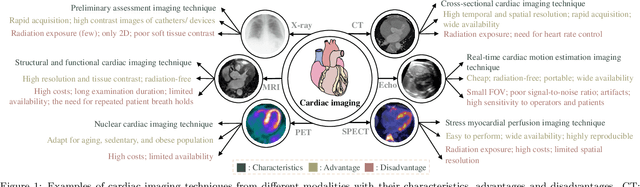

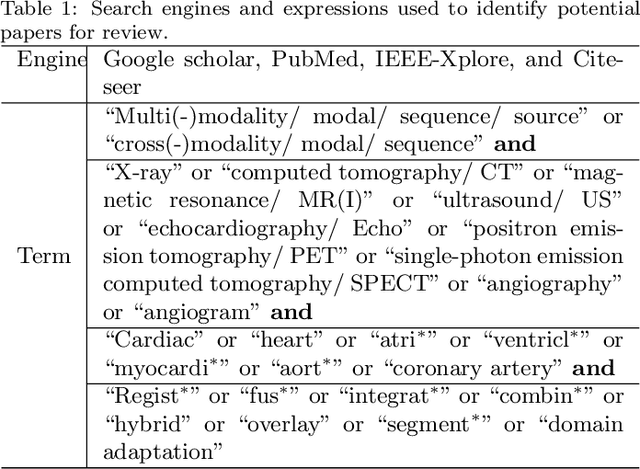

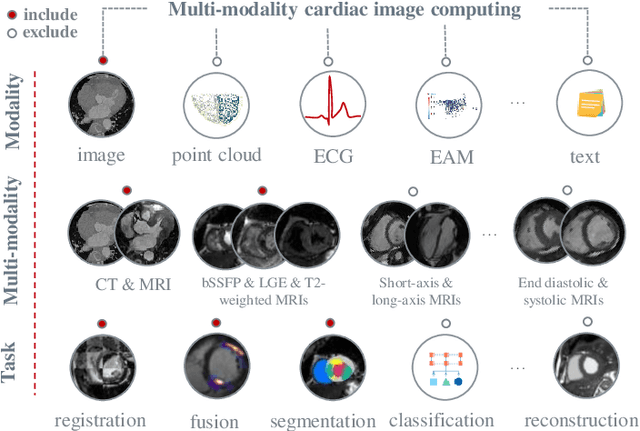

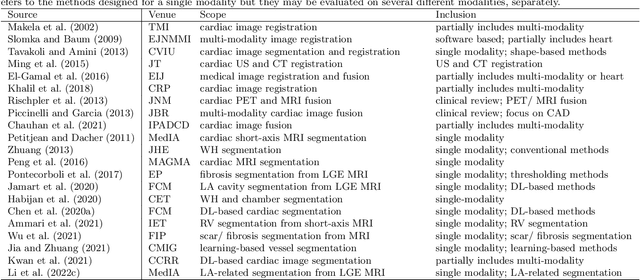

Abstract: Multi-modality cardiac imaging plays a key role in the management of patients with cardiovascular diseases. It allows a combination of complementary anatomical, morphological and functional information, increases diagnosis accuracy, and improves the efficacy of cardiovascular interventions and clinical outcomes. Fully-automated processing and quantitative analysis of multi-modality cardiac images could have a direct impact on clinical research and evidence-based patient management. However, these require overcoming significant challenges including inter-modality misalignment and finding optimal methods to integrate information from different modalities. This paper aims to provide a comprehensive review of multi-modality imaging in cardiology, the computing methods, the validation strategies, the related clinical workflows and future perspectives. For the computing methodologies, we focus on three tasks, i.e., registration, fusion and segmentation, which generally involve multi-modality imaging data, either combining information from different modalities or transferring information across modalities. The review highlights that multi-modality cardiac imaging data has the potential of wide applicability in the clinic, such as trans-aortic valve implantation guidance, myocardial viability assessment, and catheter ablation therapy and its patient selection. Nevertheless, many challenges remain unsolved, such as missing modality, combination of imaging and non-imaging data, and uniform analysis and representation of different modalities. There is also work to do in defining how the well-developed techniques fit in clinical workflows and how much additional and relevant information they introduce. These problems are likely to remain an active field of research.

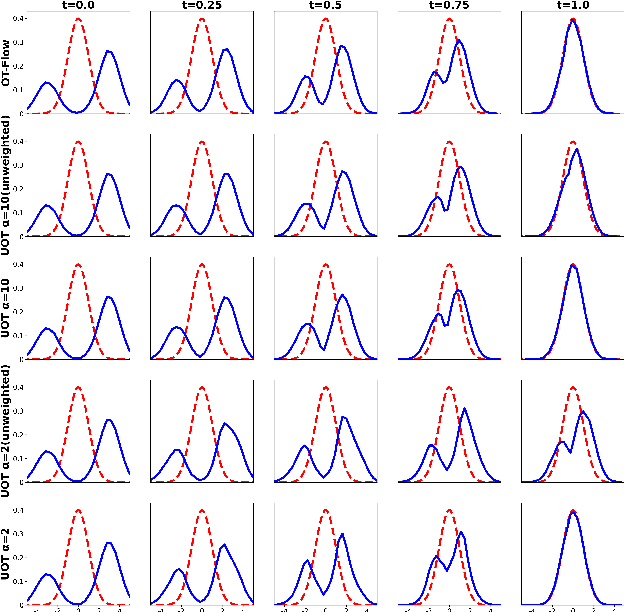

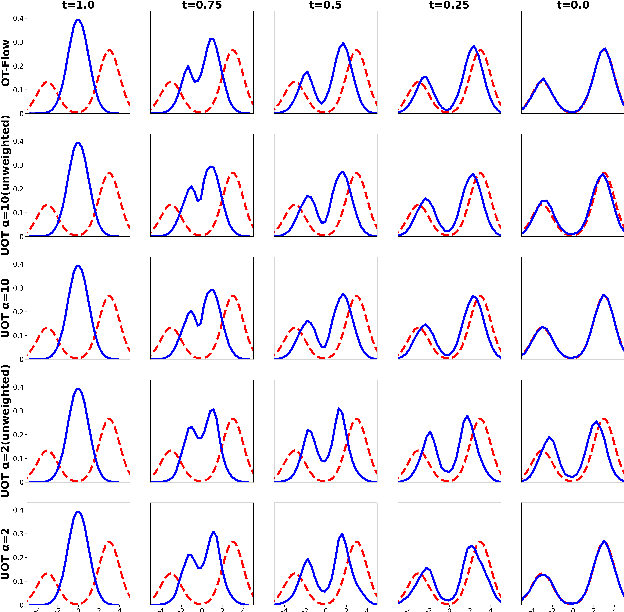

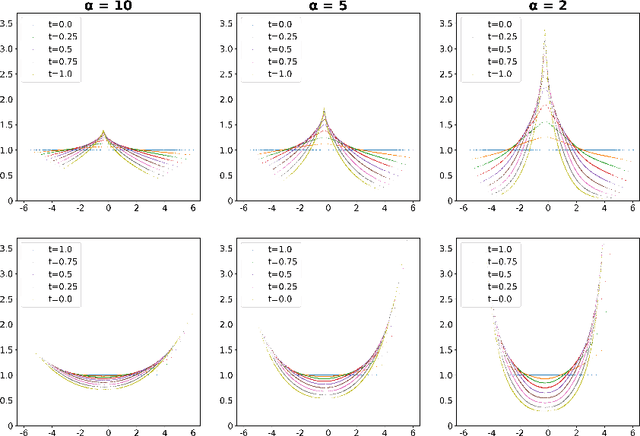

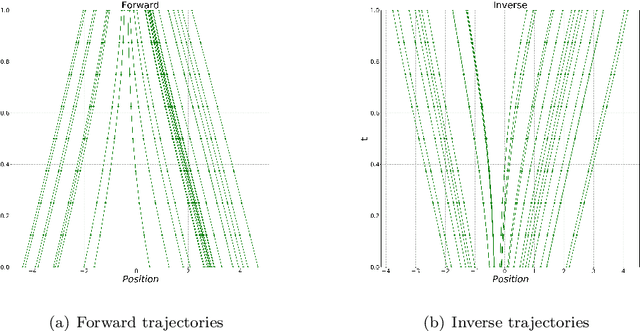

A deep learning framework for geodesics under spherical Wasserstein-Fisher-Rao metric and its application for weighted sample generation

Aug 25, 2022

Abstract: Wasserstein-Fisher-Rao (WFR) distance is a family of metrics to gauge the discrepancy of two Radon measures, which takes into account both transportation and weight change. Spherical WFR distance is a projected version of WFR distance for probability measures, so that the space of Radon measures equipped with WFR can be viewed as a metric cone over the space of probability measures with spherical WFR. Compared to the case for Wasserstein distance, the understanding of geodesics under the spherical WFR is less clear and still an ongoing research focus. In this paper, we develop a deep learning framework to compute the geodesics under the spherical WFR metric, and the learned geodesics can be adopted to generate weighted samples. Our approach is based on a Benamou-Brenier type dynamic formulation for spherical WFR. To overcome the difficulty in enforcing the boundary constraint brought by the weight change, a Kullback-Leibler (KL) divergence term based on the inverse map is introduced into the cost function. Moreover, a new regularization term using the particle velocity is introduced as a substitute for the Hamilton-Jacobi equation for the potential in the dynamic formulation. When used for sample generation, our framework can be beneficial for applications with given weighted samples, especially in Bayesian inference, compared to sample generation with previous flow models.

AIM 2022 Challenge on Super-Resolution of Compressed Image and Video: Dataset, Methods and Results

Aug 25, 2022

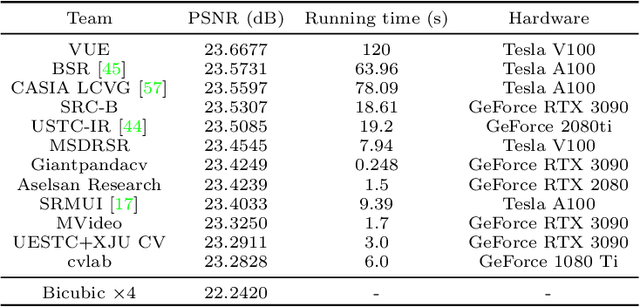

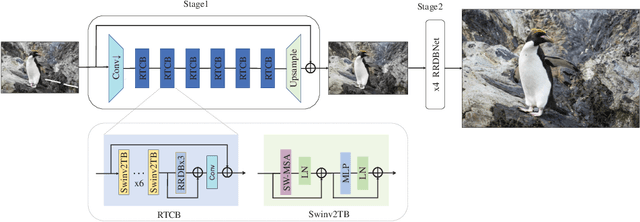

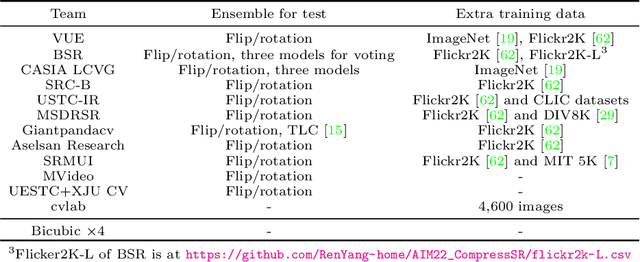

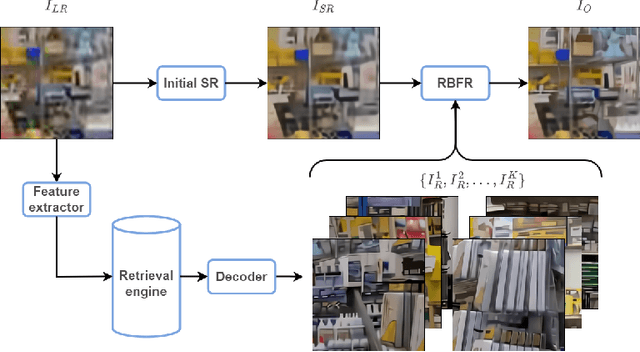

Abstract: This paper reviews the Challenge on Super-Resolution of Compressed Image and Video at AIM 2022. This challenge includes two tracks. Track 1 aims at the super-resolution of compressed images, and Track 2 targets the super-resolution of compressed video. In Track 1, we use the popular dataset DIV2K as the training, validation and test sets. In Track 2, we propose the LDV 3.0 dataset, which contains 365 videos, including the LDV 2.0 dataset (335 videos) and 30 additional videos. In this challenge, 12 teams and 2 teams submitted final results to Track 1 and Track 2, respectively. The proposed methods and solutions gauge the state-of-the-art of super-resolution on compressed image and video. The proposed LDV 3.0 dataset is available at https://github.com/RenYang-home/LDV_dataset. The homepage of this challenge is at https://github.com/RenYang-home/AIM22_CompressSR.

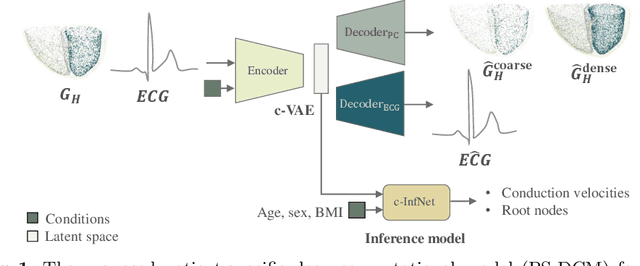

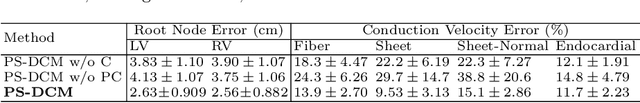

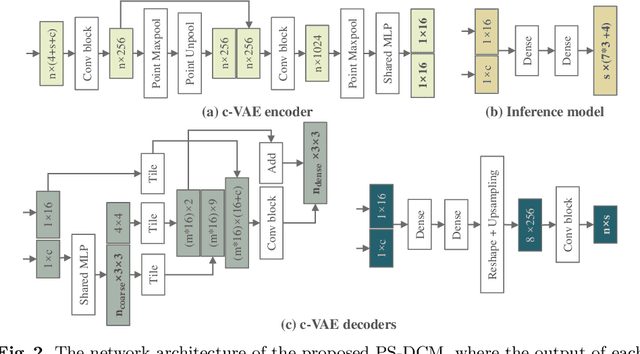

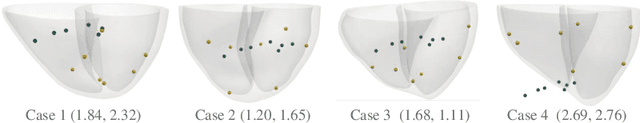

Deep Computational Model for the Inference of Ventricular Activation Properties

Aug 08, 2022

Abstract: Patient-specific cardiac computational models are essential for the efficient realization of precision medicine and in-silico clinical trials using digital twins. Cardiac digital twins can provide non-invasive characterizations of cardiac functions for individual patients, and therefore are promising for patient-specific diagnosis and therapy stratification. However, current workflows for both the anatomical and functional twinning phases, referring to the inference of model anatomy and parameters from clinical data, are not sufficiently efficient, robust, and accurate. In this work, we propose a deep learning based patient-specific computational model, which can fuse both anatomical and electrophysiological information for the inference of ventricular activation properties, i.e., conduction velocities and root nodes. The activation properties can provide a quantitative assessment of cardiac electrophysiological function for the guidance of interventional procedures. We employ the Eikonal model to generate simulated electrocardiograms (ECGs) with ground truth properties to train the inference model, where specific patient information has also been considered. For evaluation, we test the model on the simulated data and obtain generally promising results with fast computational time.

Distributional Correlation-Aware Knowledge Distillation for Stock Trading Volume Prediction

Aug 04, 2022

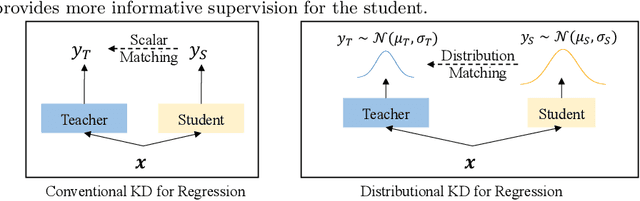

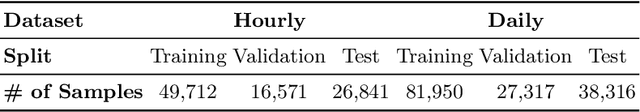

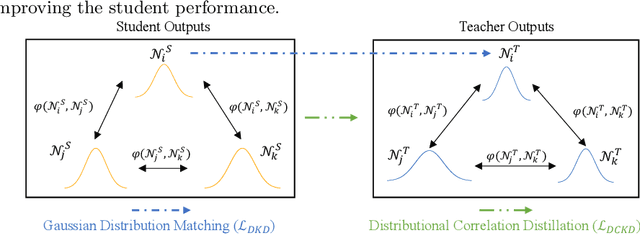

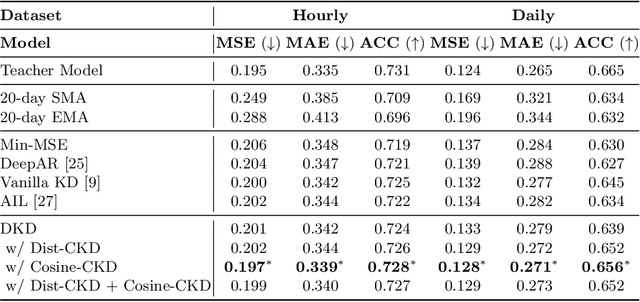

Abstract: Traditional knowledge distillation in classification problems transfers the knowledge via class correlations in the soft label produced by teacher models, which are not available in regression problems like stock trading volume prediction. To remedy this, we present a novel distillation framework for training a light-weight student model to perform trading volume prediction given historical transaction data. Specifically, we turn the regression model into a probabilistic forecasting model, by training models to predict a Gaussian distribution to which the trading volume belongs. The student model can thus learn from the teacher at a more informative distributional level, by matching its predicted distributions to those of the teacher. Two correlational distillation objectives are further introduced to encourage the student to produce consistent pair-wise relationships with the teacher model. We evaluate the framework on a real-world stock volume dataset with two different time window settings. Experiments demonstrate that our framework is superior to strong baseline models, compressing the model size by $5\times$ while maintaining $99.6\%$ prediction accuracy. The extensive analysis further reveals that our framework is more effective than vanilla distillation methods under low-resource scenarios.
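The distribution-level matching described in this abstract can be illustrated with a minimal sketch. The abstract does not say which divergence is used, so the KL divergence between two univariate Gaussians is an assumption here, as are the function names, the weighting parameter `alpha`, and the way the two terms are combined; the correlational objectives are not covered.

```python
import math


def gaussian_nll(y, mu, sigma):
    """Negative log-likelihood of the observation y under N(mu, sigma^2)."""
    return 0.5 * math.log(2.0 * math.pi * sigma ** 2) + (y - mu) ** 2 / (2.0 * sigma ** 2)


def gaussian_kl(mu_t, sigma_t, mu_s, sigma_s):
    """Closed-form KL( N(mu_t, sigma_t^2) || N(mu_s, sigma_s^2) )."""
    return (
        math.log(sigma_s / sigma_t)
        + (sigma_t ** 2 + (mu_t - mu_s) ** 2) / (2.0 * sigma_s ** 2)
        - 0.5
    )


def student_loss(y, teacher, student, alpha=0.5):
    """Hypothetical combined objective for one sample.

    teacher and student are (mu, sigma) pairs. alpha is an assumed weight
    between fitting the observed volume and matching the teacher.
    """
    mu_t, sigma_t = teacher
    mu_s, sigma_s = student
    fit_term = gaussian_nll(y, mu_s, sigma_s)
    match_term = gaussian_kl(mu_t, sigma_t, mu_s, sigma_s)
    return (1.0 - alpha) * fit_term + alpha * match_term
```

Because the teacher supplies both a mean and a spread, the matching term penalizes a student that is right on average but over- or under-confident, which a point-estimate regression target cannot express.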

A sharp uniform-in-time error estimate for Stochastic Gradient Langevin Dynamics

Jul 19, 2022

Abstract: We establish a sharp uniform-in-time error estimate for the Stochastic Gradient Langevin Dynamics (SGLD), which is a popular sampling algorithm. Under mild assumptions, we obtain a uniform-in-time $O(\eta^2)$ bound for the KL-divergence between the SGLD iteration and the Langevin diffusion, where $\eta$ is the step size (or learning rate). Our analysis is also valid for varying step sizes. Based on this, we are able to obtain an $O(\eta)$ bound for the distance between the SGLD iteration and the invariant distribution of the Langevin diffusion, in terms of Wasserstein or total variation distances.
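For readers unfamiliar with the algorithm being analyzed, the following is a minimal sketch of an SGLD iteration on a toy problem. The quadratic potential, the data, the function names and all parameter values are assumptions chosen for illustration and do not come from the paper. With $U(x) = \frac{1}{N}\sum_i \frac{1}{2}(x - a_i)^2$, the Langevin diffusion $dX = -\nabla U(X)\,dt + \sqrt{2}\,dW$ has invariant distribution $N(\bar a, 1)$, so the samples should have mean close to $\bar a$ and variance close to 1, up to a step-size-dependent bias.

```python
import math
import random


def sgld_chain(data, step_size, n_steps, batch_size, rng, x0=0.0):
    """Run one SGLD chain for U(x) = (1/N) * sum_i 0.5 * (x - data[i])**2."""
    x = x0
    noise_scale = math.sqrt(2.0 * step_size)
    for _ in range(n_steps):
        # Minibatch drawn uniformly with replacement from the data.
        batch = [rng.choice(data) for _ in range(batch_size)]
        # Unbiased estimate of grad U(x) = x - mean(data).
        grad_estimate = x - sum(batch) / batch_size
        # SGLD update: gradient step plus injected Gaussian noise.
        x = x - step_size * grad_estimate + noise_scale * rng.gauss(0.0, 1.0)
    return x


def sample_sgld(data, n_chains=400, step_size=0.02, n_steps=500, batch_size=4, seed=0):
    """Return the final state of n_chains independent SGLD chains."""
    rng = random.Random(seed)
    return [
        sgld_chain(data, step_size, n_steps, batch_size, rng)
        for _ in range(n_chains)
    ]
```

Calling `sample_sgld([1.0, 2.0, 3.0, 4.0])` gives samples whose empirical mean and variance can be compared with 2.5 and 1; shrinking `step_size` reduces the discretization bias at the cost of more steps.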

On uniform-in-time diffusion approximation for stochastic gradient descent

Jul 11, 2022

Abstract: The diffusion approximation of stochastic gradient descent (SGD) in current literature is only valid on a finite time interval. In this paper, we establish the uniform-in-time diffusion approximation of SGD, by only assuming that the expected loss is strongly convex and some other mild conditions, without assuming the convexity of each random loss function. The main technique is to establish the exponential decay rates of the derivatives of the solution to the backward Kolmogorov equation. The uniform-in-time approximation allows us to study asymptotic behaviors of SGD via the continuous stochastic differential equation (SDE) even when the random objective function $f(\cdot;\xi)$ is not strongly convex.
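For orientation, the two objects being compared can be written out. This is a sketch in the form commonly used in the diffusion-approximation literature and not necessarily the paper's exact notation; the symbols $\eta$, $F$, $\Sigma$, $X_t$ and $W_t$ are assumptions introduced here.

$$
x_{k+1} = x_k - \eta\,\nabla f(x_k;\xi_k), \qquad F(x) = \mathbb{E}_{\xi}\, f(x;\xi),
$$

$$
dX_t = -\nabla F(X_t)\,dt + \sqrt{\eta}\,\Sigma(X_t)^{1/2}\,dW_t, \qquad \Sigma(x) = \mathrm{Cov}_{\xi}\big(\nabla f(x;\xi)\big).
$$

Here $X_{k\eta}$ plays the role of the continuous-time counterpart of $x_k$. A finite-time result controls the gap between the two only for $k\eta \le T$ with a constant that may grow with $T$, whereas a uniform-in-time result gives a bound that holds for all $k$.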

On the Impact of Noises in Crowd-Sourced Data for Speech Translation

Jul 01, 2022

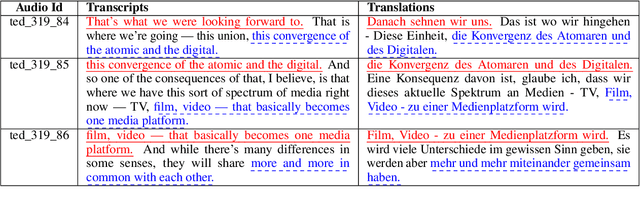

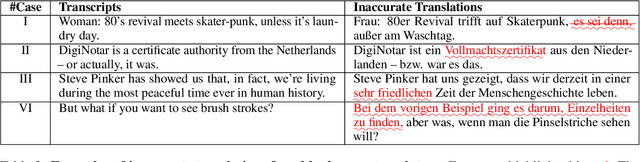

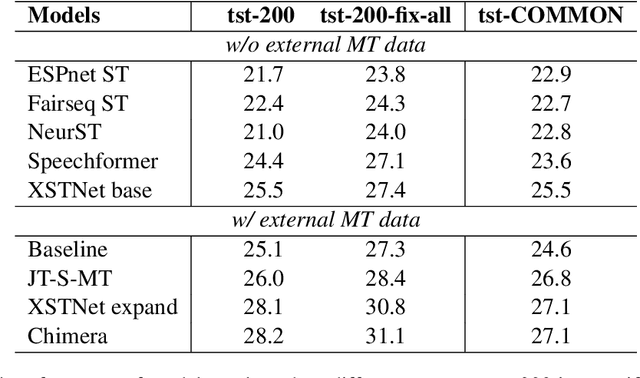

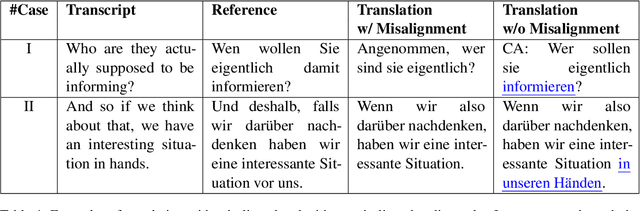

Abstract: Training speech translation (ST) models requires large and high-quality datasets. MuST-C is one of the most widely used ST benchmark datasets. It contains around 400 hours of speech-transcript-translation data for each of the eight translation directions. This dataset passes several quality-control filters during creation. However, we find that MuST-C still suffers from three major quality issues: audio-text misalignment, inaccurate translation, and unnecessary speaker names. What are the impacts of these data quality issues on model development and evaluation? In this paper, we propose an automatic method to fix or filter the above quality issues, using English-German (En-De) translation as an example. Our experiments show that ST models perform better on clean test sets, and the rank of proposed models remains consistent across different test sets. Besides, simply removing misaligned data points from the training set does not lead to a better ST model.

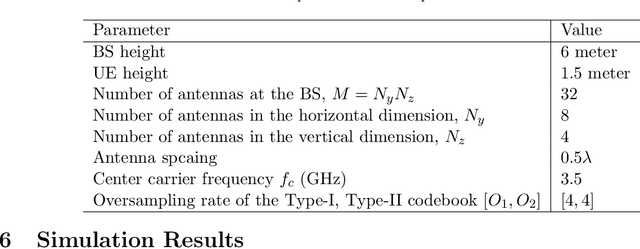

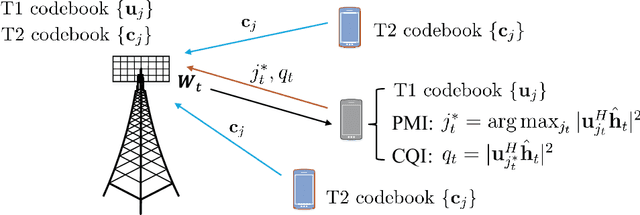

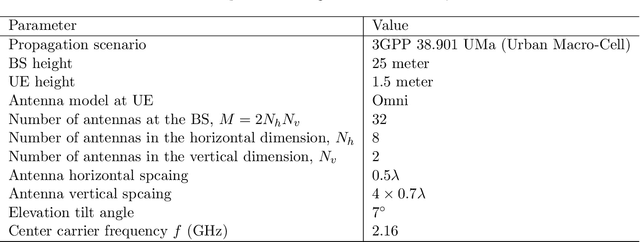

CSI Sensing from Heterogeneous User Feedbacks: A Constrained Phase Retrieval Approach

Jun 28, 2022

Abstract: This paper investigates the downlink channel state information (CSI) sensing in 5G heterogeneous networks composed of user equipments (UEs) with different feedback capabilities. We aim to enhance the CSI accuracy of UEs that can afford only the low-resolution Type-I codebook. While existing works have demonstrated that the task can be accomplished by solving a phase retrieval (PR) formulation based on the feedback of precoding matrix indicator (PMI) and channel quality indicator (CQI), they need many feedback rounds. In this paper, we propose a novel CSI sensing scheme that can significantly reduce the feedback overhead. Our scheme involves a novel parameter dimension reduction design that exploits the spatial consistency of wireless channels among nearby UEs, and a constrained PR (CPR) formulation that characterizes the feasible region of CSI by the PMI information. To address the computational challenge due to the non-convexity and the large number of constraints of CPR, we develop a two-stage algorithm that first identifies and removes inactive constraints and then applies a fast first-order algorithm. The study is further extended to multi-carrier systems. Extensive tests over DeepMIMO and QuaDriGa datasets show that our designs greatly outperform existing methods and achieve the high-resolution Type-II codebook performance with a few rounds of feedback.
