Junwei Pan

From Feature Interaction to Feature Generation: A Generative Paradigm of CTR Prediction Models

Dec 16, 2025

Abstract:Click-Through Rate (CTR) prediction, a core task in recommendation systems, aims to estimate the probability of users clicking on items. Existing models predominantly follow a discriminative paradigm, which relies heavily on explicit interactions between raw ID embeddings. However, this paradigm inherently renders them susceptible to two critical issues: embedding dimensional collapse and information redundancy, stemming from the over-reliance on feature interactions over raw ID embeddings. To address these limitations, we propose a novel Supervised Feature Generation (SFG) framework, shifting the paradigm from discriminative "feature interaction" to generative "feature generation". Specifically, SFG comprises two key components: an Encoder that constructs hidden embeddings for each feature, and a Decoder tasked with regenerating the feature embeddings of all features from these hidden representations. Unlike existing generative approaches that adopt self-supervised losses, we introduce a supervised loss to utilize the supervised signal, i.e., click or not, in the CTR prediction task. This framework exhibits strong generalizability: it can be seamlessly integrated with most existing CTR models, reformulating them under the generative paradigm. Extensive experiments demonstrate that SFG consistently mitigates embedding collapse and reduces information redundancy, while yielding substantial performance gains across various datasets and base models. The code is available at https://github.com/USTC-StarTeam/GE4Rec.

Large Foundation Model for Ads Recommendation

Aug 20, 2025

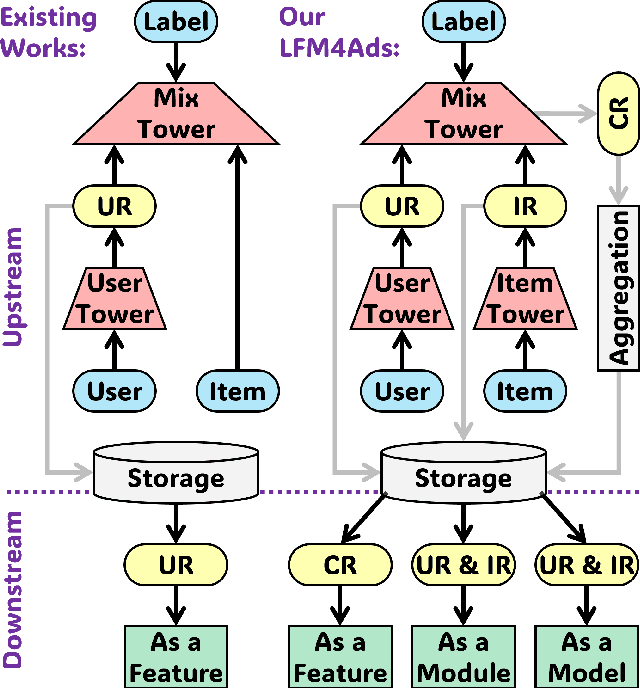

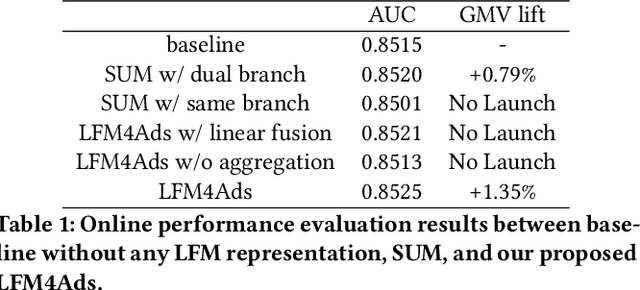

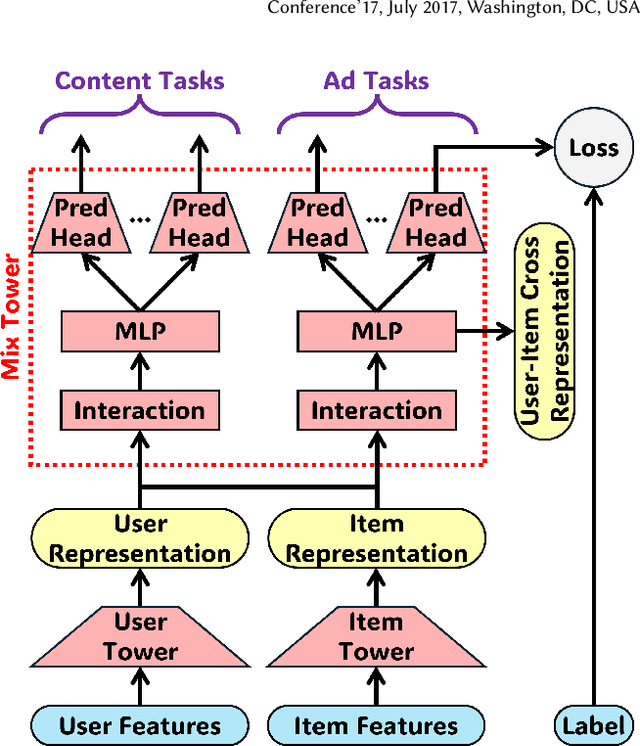

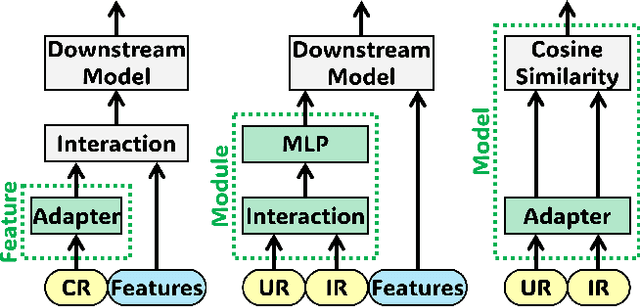

Abstract:Online advertising relies on accurate recommendation models, with recent advances using pre-trained large-scale foundation models (LFMs) to capture users' general interests across multiple scenarios and tasks. However, existing methods have critical limitations: they extract and transfer only user representations (URs), ignoring valuable item representations (IRs) and user-item cross representations (CRs); and they simply use a UR as a feature in downstream applications, which fails to bridge upstream-downstream gaps and overlooks more transfer granularities. In this paper, we propose LFM4Ads, an All-Representation Multi-Granularity transfer framework for ads recommendation. It first comprehensively transfers URs, IRs, and CRs, i.e., all available representations in the pre-trained foundation model. To effectively utilize the CRs, it identifies the optimal extraction layer and aggregates them into transferable coarse-grained forms. Furthermore, we enhance the transferability via multi-granularity mechanisms: non-linear adapters for feature-level transfer, an Isomorphic Interaction Module for module-level transfer, and Standalone Retrieval for model-level transfer. LFM4Ads has been successfully deployed in Tencent's industrial-scale advertising platform, processing tens of billions of daily samples while maintaining terabyte-scale model parameters with billions of sparse embedding keys across approximately two thousand features. Since its production deployment in Q4 2024, LFM4Ads has achieved 10+ successful production launches across various advertising scenarios, including primary ones like Weixin Moments and Channels. These launches achieve an overall GMV lift of 2.45% across the entire platform, translating to estimated annual revenue increases in the hundreds of millions of dollars.

Enhancing CTR Prediction with De-correlated Expert Networks

May 23, 2025

Abstract:Modeling feature interactions is essential for accurate click-through rate (CTR) prediction in advertising systems. Recent studies have adopted the Mixture-of-Experts (MoE) approach to improve performance by ensembling multiple feature interaction experts. These studies employ various strategies, such as learning independent embedding tables for each expert or utilizing heterogeneous expert architectures, to differentiate the experts, which we refer to as expert de-correlation. However, it remains unclear whether these strategies effectively achieve de-correlated experts. To address this, we propose a De-Correlated MoE (D-MoE) framework, which introduces a Cross-Expert De-Correlation loss to minimize expert correlations. Additionally, we propose a novel metric, termed Cross-Expert Correlation, to quantitatively evaluate the expert de-correlation degree. Based on this metric, we identify a key finding for MoE framework design: different de-correlation strategies are mutually compatible, and progressively employing them leads to reduced correlation and enhanced performance. Extensive experiments have been conducted to validate the effectiveness of D-MoE and the de-correlation principle. Moreover, online A/B testing on Tencent's advertising platforms demonstrates that D-MoE achieves a significant 1.19% Gross Merchandise Volume (GMV) lift compared to the Multi-Embedding MoE baseline.

Pre-train, Align, and Disentangle: Empowering Sequential Recommendation with Large Language Models

Dec 05, 2024

Abstract:Sequential recommendation (SR) aims to model the sequential dependencies in users' historical interactions to better capture their evolving interests. However, existing SR approaches primarily rely on collaborative data, which leads to limitations such as the cold-start problem and sub-optimal performance. Meanwhile, despite the success of large language models (LLMs), their application in industrial recommender systems is hindered by high inference latency, inability to capture all distribution statistics, and catastrophic forgetting. To this end, we propose a novel Pre-train, Align, and Disentangle (PAD) paradigm to empower recommendation models with LLMs. Specifically, we first pre-train both the SR and LLM models to get collaborative and textual embeddings. Next, a characteristic recommendation-anchored alignment loss is proposed using multi-kernel maximum mean discrepancy with Gaussian kernels. Finally, a triple-experts architecture, consisting of aligned and modality-specific experts with disentangled embeddings, is fine-tuned in a frequency-aware manner. Experiments conducted on three public datasets demonstrate the effectiveness of PAD, showing significant improvements and compatibility with various SR backbone models, especially on cold items. The implementation code and datasets will be publicly available.
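
The alignment step in the abstract above builds on multi-kernel maximum mean discrepancy (MK-MMD) with Gaussian kernels. As a rough illustration only, the sketch below computes the generic biased estimate of squared MK-MMD between two sets of embedding vectors. It is not the paper's recommendation-anchored loss: the function names, the equal kernel weights, and the bandwidth values are all assumptions made for this example.

```python
import math

def gaussian_kernel(x, y, bandwidth):
    """Gaussian (RBF) kernel between two vectors given as lists of floats."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))

def multi_kernel(x, y, bandwidths):
    """Equal-weight average of Gaussian kernels (weights are an assumption)."""
    return sum(gaussian_kernel(x, y, bw) for bw in bandwidths) / len(bandwidths)

def mk_mmd2(xs, ys, bandwidths=(0.5, 1.0, 2.0)):
    """Biased estimate of squared MK-MMD between sample sets xs and ys."""
    def mean_kernel(a, b):
        total = sum(multi_kernel(u, v, bandwidths) for u in a for v in b)
        return total / (len(a) * len(b))
    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)

# Toy stand-ins for collaborative and textual embeddings (hypothetical values).
collab = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.1]]
textual = [[1.0, 1.1], [0.9, 1.2], [1.1, 1.0]]

gap_before = mk_mmd2(collab, textual)  # distributions far apart
gap_same = mk_mmd2(collab, collab)     # identical samples
```

In this toy setup `gap_same` is zero up to floating-point error while `gap_before` is strictly positive, which is the property an alignment loss of this kind relies on: minimizing the statistic pulls the two embedding distributions together.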

Towards Unifying Feature Interaction Models for Click-Through Rate Prediction

Nov 19, 2024

Abstract:Modeling feature interactions plays a crucial role in accurately predicting click-through rates (CTR) in advertising systems. To capture the intricate patterns of interaction, many existing models employ matrix-factorization techniques to represent features as lower-dimensional embedding vectors, enabling the modeling of interactions as products between these embeddings. In this paper, we propose a general framework called IPA to systematically unify these models. Our framework comprises three key components: the Interaction Function, which facilitates feature interaction; the Layer Pooling, which constructs higher-level interaction layers; and the Layer Aggregator, which combines the outputs of all layers to serve as input for the subsequent classifier. We demonstrate that most existing models can be categorized within our framework by making specific choices for these three components. Through extensive experiments and a dimensional collapse analysis, we evaluate the performance of these choices. Furthermore, by leveraging the most powerful components within our framework, we introduce a novel model that achieves competitive results compared to state-of-the-art CTR models. PFL achieves a significant GMV lift in online A/B tests on Tencent's advertising platform and has been deployed as the production model in several primary scenarios.

Towards Scalable Semantic Representation for Recommendation

Oct 12, 2024

Abstract:With recent advances in large language models (LLMs), a growing body of research has developed Semantic IDs based on LLMs to enhance the performance of recommendation systems. However, the dimension of these embeddings needs to match that of the ID embedding in recommendation, which is usually much smaller than the original length. Such dimension compression results in inevitable losses in discriminability and dimension robustness of the LLM embeddings, which motivates us to scale up the semantic representation. In this paper, we propose Mixture-of-Codes, which first constructs multiple independent codebooks for LLM representation in the indexing stage, and then utilizes the Semantic Representation along with a fusion module for the downstream recommendation stage. Extensive analysis and experiments demonstrate that our method achieves superior scalability in discriminability and dimension robustness, leading to the best scale-up performance in recommendations.

Long-Sequence Recommendation Models Need Decoupled Embeddings

Oct 03, 2024

Abstract:Lifelong user behavior sequences, comprising up to tens of thousands of historical behaviors, are crucial for capturing user interests and predicting user responses in modern recommendation systems. A two-stage paradigm is typically adopted to handle these long sequences: a few relevant behaviors are first searched from the original long sequences via an attention mechanism in the first stage and then aggregated with the target item to construct a discriminative representation for prediction in the second stage. In this work, we identify and characterize, for the first time, a neglected deficiency in existing long-sequence recommendation models: a single set of embeddings struggles with learning both attention and representation, leading to interference between these two processes. Initial attempts to address this issue using linear projections -- a technique borrowed from language processing -- proved ineffective, shedding light on the unique challenges of recommendation models. To overcome this, we propose the Decoupled Attention and Representation Embeddings (DARE) model, where two distinct embedding tables are initialized and learned separately to fully decouple attention and representation. Extensive experiments and analysis demonstrate that DARE provides more accurate search of correlated behaviors and outperforms baselines with AUC gains of up to 0.9% on public datasets and notable online system improvements. Furthermore, decoupling embedding spaces allows us to reduce the attention embedding dimension and accelerate the search procedure by 50% without significant performance impact, enabling more efficient, high-performance online serving.
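
The decoupling idea in the abstract above can be pictured as a forward pass that reads from two separate embedding tables: one used only to score and search behaviors, the other used only to build the aggregated representation. The sketch below is a minimal illustration under stated assumptions, not the DARE implementation: the dot-product scoring, softmax weighting, table sizes, random initialization, and all function names are hypothetical, and no training is shown.

```python
import math
import random

def make_table(num_items, dim, seed):
    """Randomly initialised embedding table (hypothetical initialisation)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(num_items)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def decoupled_user_vector(history, target, attn_table, repr_table, top_k):
    """Two-stage forward pass that uses separate tables for the two roles."""
    # Stage 1 (search): relevance scores come from the attention table only.
    scores = [dot(attn_table[item], attn_table[target]) for item in history]
    order = sorted(range(len(history)), key=lambda j: scores[j], reverse=True)
    top = order[:top_k]
    # Stage 2 (aggregate): weights from attention scores, values from the
    # representation table, so the two roles never share parameters.
    weights = softmax([scores[j] for j in top])
    dim = len(repr_table[0])
    return [
        sum(w * repr_table[history[j]][d] for w, j in zip(weights, top))
        for d in range(dim)
    ]

NUM_ITEMS = 50
attn_table = make_table(NUM_ITEMS, dim=4, seed=0)   # small attention dimension
repr_table = make_table(NUM_ITEMS, dim=16, seed=1)  # larger representation dimension

history = [3, 17, 8, 42, 8, 25, 11]
user_vec = decoupled_user_vector(history, target=8, attn_table=attn_table,
                                 repr_table=repr_table, top_k=3)
```

Because the search stage only touches `attn_table`, its dimension can be chosen independently of `repr_table`, which is the property the abstract points to when it mentions reducing the attention embedding dimension to speed up search.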

Disentangled Representation with Cross Experts Covariance Loss for Multi-Domain Recommendation

May 21, 2024

Abstract:Multi-domain learning (MDL) has emerged as a prominent research area aimed at enhancing the quality of personalized services. The key challenge in MDL lies in striking a balance between learning commonalities across domains while preserving the distinct characteristics of each domain. However, this gives rise to a challenging dilemma. On one hand, a model needs to leverage domain-specific modules, such as experts or embeddings, to preserve the uniqueness of each domain. On the other hand, due to the long-tailed distributions observed in real-world domains, some tail domains may lack sufficient samples to fully learn their corresponding modules. Unfortunately, existing approaches have not adequately addressed this dilemma. To address this issue, we propose a novel model called Crocodile, which stands for Cross-experts Covariance Loss for Disentangled Learning. Crocodile adopts a multi-embedding paradigm to facilitate model learning and employs a Covariance Loss on these embeddings to disentangle them. This disentanglement enables the model to capture diverse user interests across domains effectively. Additionally, we introduce a novel gating mechanism to further enhance the capabilities of Crocodile. Through empirical analysis, we demonstrate that our proposed method successfully resolves these two challenges and outperforms all state-of-the-art methods on publicly available datasets. We firmly believe that the analytical perspectives and design concept of disentanglement presented in our work can pave the way for future research in the field of MDL.

Deep Pattern Network for Click-Through Rate Prediction

Apr 17, 2024

Abstract:Click-through rate (CTR) prediction tasks play a pivotal role in real-world applications, particularly in recommendation systems and online advertising. A significant research branch in this domain focuses on user behavior modeling. Current research predominantly centers on modeling co-occurrence relationships between the target item and items previously interacted with by users in their historical data. However, this focus neglects the intricate modeling of user behavior patterns. In reality, the abundance of user interaction records encompasses diverse behavior patterns, indicative of a spectrum of habitual paradigms. These patterns harbor substantial potential to significantly enhance CTR prediction performance. To harness the informational potential within user behavior patterns, we extend Target Attention (TA) to Target Pattern Attention (TPA) to model pattern-level dependencies. Furthermore, three critical challenges demand attention: the inclusion of unrelated items within behavior patterns, data sparsity in behavior patterns, and computational complexity arising from numerous patterns. To address these challenges, we introduce the Deep Pattern Network (DPN), designed to comprehensively leverage information from user behavior patterns. DPN efficiently retrieves target-related user behavior patterns using a target-aware attention mechanism. Additionally, it contributes to refining user behavior patterns through a pre-training paradigm based on self-supervised learning while promoting dependency learning within sparse patterns. Our comprehensive experiments, conducted across three public datasets, substantiate the superior performance and broad compatibility of DPN.

Understanding the Ranking Loss for Recommendation with Sparse User Feedback

Mar 21, 2024

Abstract:Click-through rate (CTR) prediction holds significant importance in the realm of online advertising. While many existing approaches treat it as a binary classification problem and utilize binary cross entropy (BCE) as the optimization objective, recent advancements have indicated that combining BCE loss with ranking loss yields substantial performance improvements. However, the full efficacy of this combination loss remains incompletely understood. In this paper, we uncover a new challenge associated with BCE loss in scenarios with sparse positive feedback, such as CTR prediction: the gradient vanishing for negative samples. Subsequently, we introduce a novel perspective on the effectiveness of ranking loss in CTR prediction, highlighting its ability to generate larger gradients on negative samples, thereby mitigating their optimization issues and resulting in improved classification ability. Our perspective is supported by extensive theoretical analysis and empirical evaluation conducted on publicly available datasets. Furthermore, we successfully deployed the ranking loss in Tencent's online advertising system, achieving notable lifts of 0.70% and 1.26% in Gross Merchandise Value (GMV) for two main scenarios. The code for our approach is openly accessible at the following GitHub repository: https://github.com/SkylerLinn/Understanding-the-Ranking-Loss.
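
The gradient argument in the abstract above can be checked with a small calculation. For a sample with logit z and label y, the BCE gradient with respect to z is sigmoid(z) - y, so a negative sample whose predicted CTR is already low receives a gradient close to zero. The sketch below compares that with the gradient a negative sample receives from a pairwise logistic ranking loss, -log(sigmoid(z_pos - z_neg)). The pairwise logistic form and the example logits are assumptions chosen for illustration; the abstract does not specify which ranking loss the paper uses.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce_grad_wrt_logit(z, y):
    """d/dz of -[y*log(sigmoid(z)) + (1-y)*log(1-sigmoid(z))] = sigmoid(z) - y."""
    return sigmoid(z) - y

def pairwise_rank_grad_wrt_neg_logit(z_pos, z_neg):
    """d/dz_neg of -log(sigmoid(z_pos - z_neg)) = sigmoid(z_neg - z_pos)."""
    return sigmoid(z_neg - z_pos)

# Sparse-feedback regime: both logits are strongly negative (hypothetical values).
z_pos, z_neg = -4.0, -5.0

bce_grad_on_negative = bce_grad_wrt_logit(z_neg, y=0)
rank_grad_on_negative = pairwise_rank_grad_wrt_neg_logit(z_pos, z_neg)
```

With these logits the BCE gradient on the negative sample is sigmoid(-5), roughly 0.0067, while the pairwise term contributes sigmoid(-1), roughly 0.27. The ranking gradient depends only on the gap between the two logits, not on how small the predicted probabilities are, which is why it stays large where the BCE gradient shrinks.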
