Juan Zhao

China Mobile Research Institute, Beijing, China

Unified Enhancement of the Generalization and Robustness of Language Models via Bi-Stage Optimization

Mar 19, 2025

Abstract:Neural network language models (LMs) are confronted with significant challenges in generalization and robustness. Currently, many studies focus on improving either generalization or robustness in isolation, without methods addressing both aspects simultaneously, which presents a significant challenge in developing LMs that are both robust and generalized. In this paper, we propose a bi-stage optimization framework to uniformly enhance both the generalization and robustness of LMs, termed UEGR. Specifically, during the forward propagation stage, we enrich the output probability distributions of adversarial samples by adaptive dropout to generate diverse sub-models, and incorporate JS divergence and adversarial losses of these output distributions to reinforce output stability. During the backward propagation stage, we compute parameter saliency scores and selectively update only the most critical parameters to minimize unnecessary deviations and consolidate the model's resilience. Theoretical analysis shows that our framework includes gradient regularization to limit the model's sensitivity to input perturbations and selective parameter updates to flatten the loss landscape, thus improving both generalization and robustness. The experimental results show that our method significantly improves the generalization and robustness of LMs compared to other existing methods across 13 publicly available language datasets, achieving state-of-the-art (SOTA) performance.
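
As a rough illustration of two ingredients named in the abstract above, the sketch below computes the Jensen-Shannon (JS) divergence between two output distributions and applies a gradient step only to the most salient parameters. The abstract does not define the saliency score or the selection rule, so the score `|w * g|`, the `keep_ratio` parameter, and all function names are assumptions made for illustration, not the UEGR implementation.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions given as equal-length lists."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence: symmetric and bounded by log(2)."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def selective_update(params, grads, lr, keep_ratio):
    """Apply an SGD step only to the top `keep_ratio` fraction of parameters.

    Saliency is taken to be |w * g| here; this is a hypothetical choice,
    since the abstract does not say how saliency is scored.
    """
    saliency = [abs(w * g) for w, g in zip(params, grads)]
    k = max(1, int(len(params) * keep_ratio))
    ranked = sorted(range(len(params)), key=lambda i: saliency[i], reverse=True)
    selected = set(ranked[:k])
    return [
        w - lr * g if i in selected else w
        for i, (w, g) in enumerate(zip(params, grads))
    ]


if __name__ == "__main__":
    # Two hypothetical output distributions from different dropout sub-models.
    print(js_divergence([0.7, 0.2, 0.1], [0.6, 0.3, 0.1]))
    print(selective_update([1.0, 2.0, 3.0, 4.0], [1.0, 0.1, 0.1, 1.0], 0.5, 0.5))
```

A JS term of this kind penalizes disagreement between sub-model outputs, while the masked update leaves low-saliency parameters unchanged.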

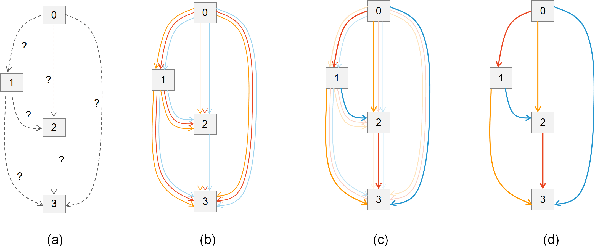

Deciphering interventional dynamical causality from non-intervention systems

Jun 29, 2024

Abstract:Detecting and quantifying causality is a focal topic in the fields of science, engineering, and interdisciplinary studies. However, causal studies on non-intervention systems attract much attention but remain extremely challenging. To address this challenge, we propose a framework named Interventional Dynamical Causality (IntDC) for such non-intervention systems, along with its computational criterion, Interventional Embedding Entropy (IEE), to quantify causality. The IEE criterion theoretically and numerically enables the deciphering of IntDC solely from observational (non-interventional) time-series data, without requiring any knowledge of dynamical models or real interventions in the considered system. Demonstrations of performance showed the accuracy and robustness of IEE on benchmark simulated systems as well as real-world systems, including the neural connectomes of C. elegans, COVID-19 transmission networks in Japan, and regulatory networks surrounding key circadian genes.

Methodology and Real-World Applications of Dynamic Uncertain Causality Graph for Clinical Diagnosis with Explainability and Invariance

Jun 09, 2024

Abstract:AI-aided clinical diagnosis is desired in medical care. Existing deep learning models lack explainability and mainly focus on image analysis. The recently developed Dynamic Uncertain Causality Graph (DUCG) approach is causality-driven, explainable, and invariant across different application scenarios, without problems of data collection, labeling, fitting, privacy, bias, generalization, high cost and high energy consumption. Through close collaboration between clinical experts and DUCG technicians, 46 DUCG models covering 54 chief complaints were constructed. Over 1,000 diseases can be diagnosed without triage. Before being applied in the real world, the 46 DUCG models were retrospectively verified by third-party hospitals. The verified diagnostic precisions were no less than 95%, with the diagnostic precision for every disease, including uncommon ones, no less than 80%. After verification, the 46 DUCG models were applied in the real world in China. Over one million real diagnosis cases have been performed, with only 17 incorrect diagnoses identified. Due to DUCG's transparency, the mistakes causing the incorrect diagnoses were found and corrected. The diagnostic abilities of the clinicians who applied DUCG frequently were improved significantly. Following the introduction to the earlier presented DUCG methodology, the recommendation algorithm for potential medical checks is presented and the key idea of DUCG is extracted.

TruthSR: Trustworthy Sequential Recommender Systems via User-generated Multimodal Content

Apr 26, 2024

Abstract:Sequential recommender systems explore users' preferences and behavioral patterns from their historically generated data. Recently, researchers have aimed to improve sequential recommendation by utilizing massive user-generated multi-modal content, such as reviews and images. This content often contains inevitable noise. Some studies attempt to reduce noise interference by suppressing cross-modal inconsistent information. However, they could potentially constrain the capturing of personalized user preferences. In addition, it is almost impossible to entirely eliminate noise in diverse user-generated multi-modal content. To solve these problems, we propose a trustworthy sequential recommendation method via noisy user-generated multi-modal content. Specifically, we explicitly capture the consistency and complementarity of user-generated multi-modal content to mitigate noise interference. We also model the user's multi-modal sequential preferences. In addition, we design a trustworthy decision mechanism that integrates subjective user perspective and objective item perspective to dynamically evaluate the uncertainty of prediction results. Experimental evaluation on four widely-used datasets demonstrates the superior performance of our model compared to state-of-the-art methods. The code is released at https://github.com/FairyMeng/TrustSR.

Partition-based Nonrigid Registration for 3D Face Model

Jan 05, 2024

Abstract:This paper presents a partition-based surface registration method for the 3D morphable model (3DMM). Building a 3DMM often requires warping a handcrafted template model onto different captured models. The proposed method first uses landmarks to partition the template model, then scales each part, and finally smooths the boundaries. This method is especially effective when the disparity between the template model and the target model is large. Experimental results show that the method performs better than the traditional warping method and is robust to local minima.

A Food Package Recognition and Sorting System Based on Structured Light and Deep Learning

Sep 07, 2023

Abstract:The vision algorithm-based robotic arm grasping system is one of the robotic arm systems that can be applied to a wide range of scenarios. It uses algorithms to automatically identify the location of the target and guide the robotic arm to grasp it, which makes it more flexible than the teachable robotic arm grasping system. However, for some food packages, transparent packaging or reflective materials make recognition difficult for vision algorithms, and traditional vision algorithms cannot achieve high accuracy for these packages. In addition, in the process of robotic arm grasping, the positioning on the z-axis height still requires manual setting of parameters, which may cause errors. To address these two problems, we designed a sorting system for food packaging using deep learning algorithms and structured light 3D reconstruction technology. The system uses a pre-trained Mask R-CNN model to recognize the class of the object in the image and obtain its 2D coordinates, then uses a structured light 3D reconstruction technique to calculate its 3D coordinates, and finally, after coordinate system conversion, guides the robotic arm for grasping. Testing shows that the method can fully automate the recognition and grasping of different kinds of food packages with high accuracy. This method can help food manufacturers reduce production costs and improve production efficiency.
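
The pipeline in the abstract above (2D detection, 3D coordinates, coordinate system conversion) can be sketched roughly as follows. This is only an illustration under stated assumptions: it assumes the structured-light stage yields a depth value for the detected pixel and that a pinhole camera model with known intrinsics applies. The function names, the intrinsics `fx`, `fy`, `cx`, `cy`, and the camera-to-robot transform (`rotation`, `translation`) are hypothetical and are not taken from the paper.

```python
def pixel_to_camera(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with known depth into the camera frame.

    Assumes an ideal pinhole model without lens distortion.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def camera_to_robot(point, rotation, translation):
    """Apply a rigid transform p_robot = R @ p_cam + t.

    `rotation` is a 3x3 matrix given as a list of rows; `translation` is a
    3-tuple. Both would come from a hand-eye calibration step in practice.
    """
    return tuple(
        sum(r * c for r, c in zip(row, point)) + t
        for row, t in zip(rotation, translation)
    )


if __name__ == "__main__":
    # Hypothetical values: detection centre at the principal point, 1 m away.
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    p_cam = pixel_to_camera(320, 240, 1.0, 500.0, 500.0, 320.0, 240.0)
    print(camera_to_robot(p_cam, identity, (0.1, 0.2, 0.3)))
```

Because the z coordinate comes from the measured depth, the grasp height follows from the transform rather than from a manually set parameter.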

Adaptive Hybrid Spatial-Temporal Graph Neural Network for Cellular Traffic Prediction

Feb 28, 2023

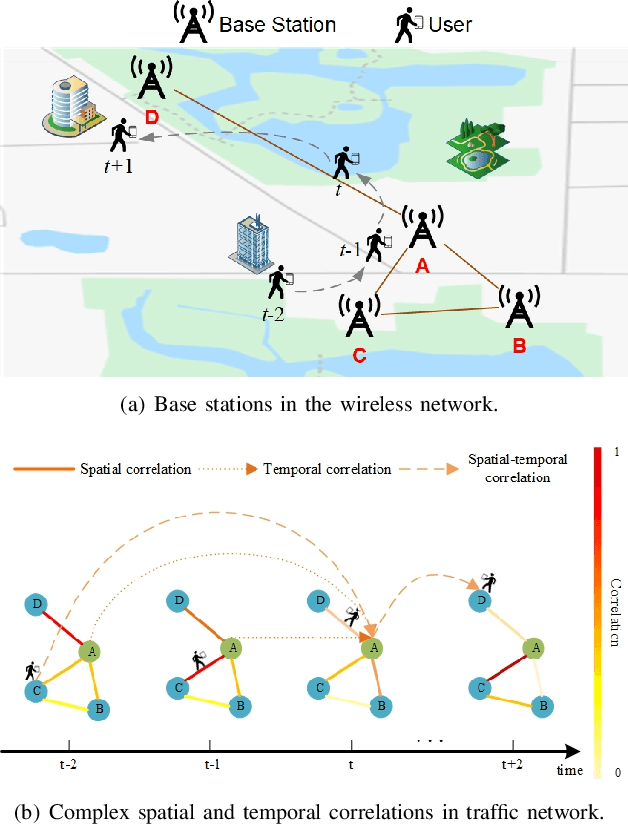

Abstract:Cellular traffic prediction is an indispensable part of intelligent telecommunication networks. Nevertheless, due to frequent user mobility and complex network scheduling mechanisms, cellular traffic often exhibits complicated spatial-temporal patterns, making prediction incredibly challenging. Although recent advanced algorithms such as graph-based prediction approaches have been proposed, they frequently model spatial dependencies based on static or dynamic graphs and neglect the coexisting multiple spatial correlations induced by traffic generation. Meanwhile, some works do not consider the diverse cellular traffic patterns, resulting in suboptimal prediction results. In this paper, we propose a novel deep learning network architecture, Adaptive Hybrid Spatial-Temporal Graph Neural Network (AHSTGNN), to tackle the cellular traffic prediction problem. First, we apply adaptive hybrid graph learning to learn the compound spatial correlations among cell towers. Second, we implement a Temporal Convolution Module with multi-periodic temporal data input to capture the nonlinear temporal dependencies. In addition, we introduce an extra Spatial-Temporal Adaptive Module to conquer the heterogeneity lying in cell towers. Our experiments on two real-world cellular traffic datasets show AHSTGNN outperforms the state-of-the-art by a significant margin, illustrating the superior scalability of our method for spatial-temporal cellular traffic prediction.

Natural language processing to identify lupus nephritis phenotype in electronic health records

Dec 20, 2021

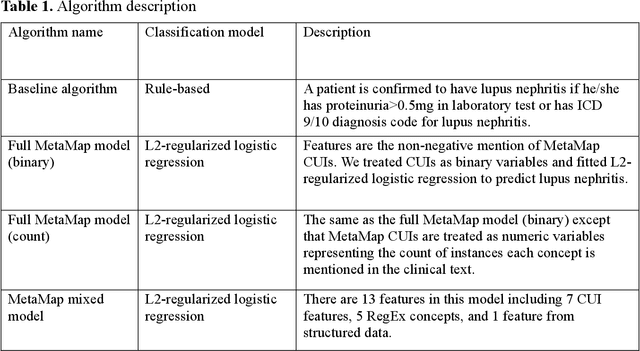

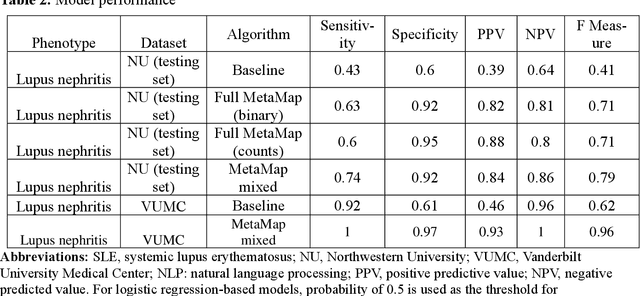

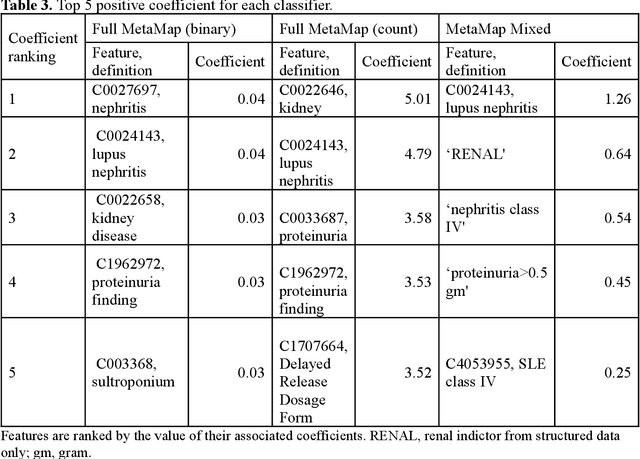

Abstract:Systemic lupus erythematosus (SLE) is a rare autoimmune disorder characterized by an unpredictable course of flares and remission with diverse manifestations. Lupus nephritis, one of the major disease manifestations of SLE for organ damage and mortality, is a key component of lupus classification criteria. Accurately identifying lupus nephritis in electronic health records (EHRs) would therefore benefit large cohort observational studies and clinical trials where characterization of the patient population is critical for recruitment, study design, and analysis. Lupus nephritis can be recognized through procedure codes and structured data, such as laboratory tests. However, other critical information documenting lupus nephritis, such as histologic reports from kidney biopsies and prior medical history narratives, requires sophisticated text processing to mine information from pathology reports and clinical notes. In this study, we developed algorithms to identify lupus nephritis with and without natural language processing (NLP) using EHR data. We developed four algorithms: a rule-based algorithm using only structured data (baseline algorithm) and three algorithms using different NLP models. The three NLP models are based on regularized logistic regression and use different sets of features including positive mention of concept unique identifiers (CUIs), number of appearances of CUIs, and a mixture of three components, respectively. The baseline algorithm and the best-performing NLP algorithm were externally validated on a dataset from Vanderbilt University Medical Center (VUMC). Our best-performing NLP model, incorporating features from structured data, regular expression concepts, and mapped CUIs, improved F measure in both the NMEDW (0.41 vs 0.79) and VUMC (0.62 vs 0.96) datasets compared to the baseline lupus nephritis algorithm.
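
For readers unfamiliar with the metric reported above, the following minimal sketch shows how an F measure is computed from confusion counts. The abstract does not state which variant was used, so the balanced case (`beta=1.0`) is an assumption, and the counts in the usage example are made up for illustration; they are not the study's data.

```python
def f_measure(tp, fp, fn, beta=1.0):
    """F-beta score from true positives, false positives, false negatives.

    With beta=1.0 this is the harmonic mean of precision and recall.
    Returns 0.0 when precision and recall are both zero or undefined.
    """
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


if __name__ == "__main__":
    # Hypothetical counts: 8 correctly flagged patients, 2 false alarms, 2 missed.
    print(f_measure(tp=8, fp=2, fn=2))
```

Because the score combines precision and recall, an algorithm that flags very few patients or misses many true cases is penalized either way.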

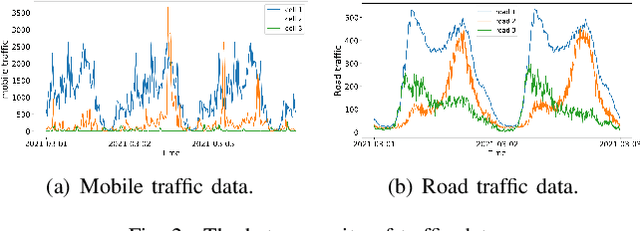

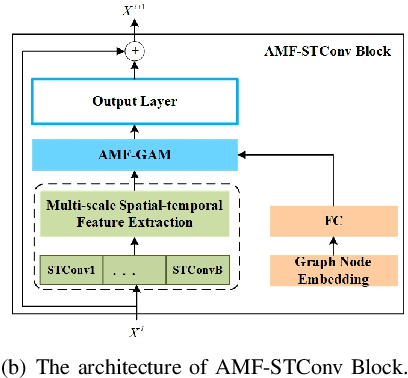

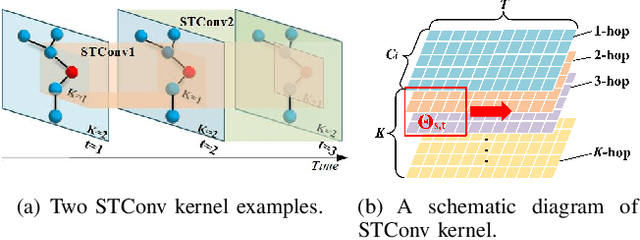

Adaptive Multi-receptive Field Spatial-Temporal Graph Convolutional Network for Traffic Forecasting

Nov 01, 2021

Abstract:Mobile network traffic forecasting is one of the key functions in daily network operation. A commercial mobile network is large, heterogeneous, complex and dynamic. These intrinsic features make mobile network traffic forecasting far from being solved even with recent advanced algorithms such as graph convolutional network-based prediction approaches and various attention mechanisms, which have been proved successful in vehicle traffic forecasting. In this paper, we cast the problem as a spatial-temporal sequence prediction task. We propose a novel deep learning network architecture, Adaptive Multi-receptive Field Spatial-Temporal Graph Convolutional Networks (AMF-STGCN), to model the traffic dynamics of mobile base stations. AMF-STGCN extends GCN by (1) jointly modeling the complex spatial-temporal dependencies in mobile networks, (2) applying attention mechanisms to capture various Receptive Fields of heterogeneous base stations, and (3) introducing an extra decoder based on a fully connected deep network to conquer the error propagation challenge with multi-step forecasting. Experiments on four real-world datasets from two different domains consistently show AMF-STGCN outperforms the state-of-the-art methods.

Heart-Darts: Classification of Heartbeats Using Differentiable Architecture Search

May 03, 2021

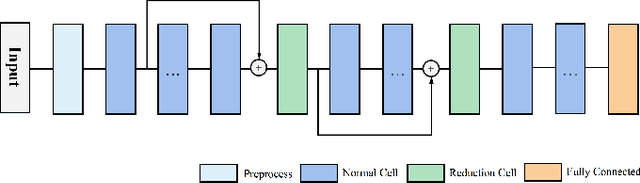

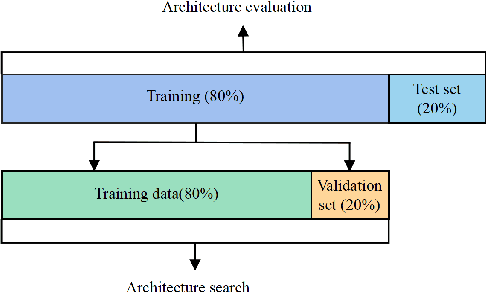

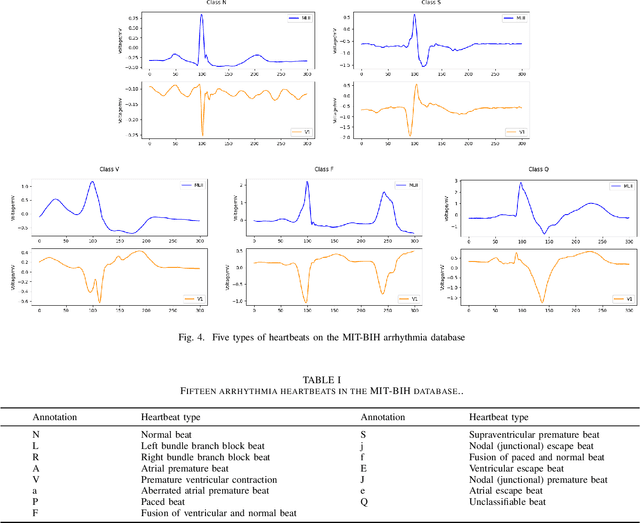

Abstract:Arrhythmia is a cardiovascular disease that manifests as irregular heartbeats. In arrhythmia detection, the electrocardiogram (ECG) signal is an important diagnostic tool. However, manually evaluating ECG signals is a complicated and time-consuming task. With the application of convolutional neural networks (CNNs), the evaluation process has been accelerated and the performance has improved. It is noteworthy that the performance of CNNs heavily depends on their architecture design, which is a complex process grounded in expert experience and trial-and-error. In this paper, we propose a novel approach, Heart-Darts, to efficiently classify the ECG signals by automatically designing the CNN model with the differentiable architecture search (i.e., Darts, a cell-based neural architecture search method). Specifically, we initially search a cell architecture by Darts and then customize a novel CNN model for ECG classification based on the obtained cells. To investigate the efficiency of the proposed method, we evaluate the constructed model on the MIT-BIH arrhythmia database. Additionally, the extensibility of the proposed CNN model is validated on two other new databases. Extensive experimental results demonstrate that the proposed method outperforms several state-of-the-art CNN models in ECG classification in terms of both performance and generalization capability.
