Ang Li

Bridging BCI and Communications: A MIMO Framework for EEG-to-ECoG Wireless Channel Modeling

May 16, 2025

Abstract:As a method to connect the human brain and external devices, brain-computer interfaces (BCIs) are receiving extensive research attention. Recently, the integration of communication theory with BCI has emerged as a popular trend, offering potential to enhance system performance and shape next-generation communications. A key challenge in this field is modeling the brain wireless communication channel between intracranial electrocorticography (ECoG) emitting neurons and extracranial electroencephalography (EEG) receiving electrodes. However, the complex physiology of the brain challenges the application of traditional channel modeling methods, leaving relevant research in its infancy. To address this gap, we propose a frequency-division multiple-input multiple-output (MIMO) estimation framework leveraging simultaneous macaque EEG and ECoG recordings, while employing neurophysiology-informed regularization to suppress noise interference. This approach reveals profound similarities between neural signal propagation and multi-antenna communication systems. Experimental results show improved estimation accuracy over conventional methods while highlighting a trade-off between frequency resolution and temporal stability determined by signal duration. This work establishes a conceptual bridge between neural interfacing and communication theory, accelerating synergistic developments in both fields.
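To make the idea of regularized MIMO channel estimation concrete, here is a minimal sketch for a single frequency band. It assumes a linear model Y ≈ H X and uses plain ridge (Tikhonov) regularization as a stand-in; the abstract does not specify the neurophysiology-informed regularizer, so the function name `estimate_channel`, the parameter `lam`, and the synthetic data below are all illustrative assumptions, not the authors' method.

```python
import numpy as np

def estimate_channel(X, Y, lam=1e-3):
    """Ridge estimate of H in Y ~= H X for one frequency band.

    X: (n_tx, n_samples) source-side signals (assumed: ECoG channels)
    Y: (n_rx, n_samples) receiver-side signals (assumed: EEG channels)
    lam: ridge strength (a generic stand-in for the paper's regularizer)
    """
    n_tx = X.shape[0]
    gram = X @ X.conj().T + lam * np.eye(n_tx)   # X X^H + lam I (Hermitian)
    cross = Y @ X.conj().T                       # Y X^H
    # H = cross @ inv(gram); solve the transposed system instead of inverting.
    return np.linalg.solve(gram.conj().T, cross.conj().T).conj().T

# Synthetic check with a hypothetical 4-input, 3-output channel.
rng = np.random.default_rng(0)
H_true = rng.standard_normal((3, 4))
X = rng.standard_normal((4, 500))
Y = H_true @ X + 0.01 * rng.standard_normal((3, 500))
H_est = estimate_channel(X, Y, lam=1e-3)
```

In a frequency-division setting, one would presumably band-pass the recordings and call such an estimator once per band; shorter signal segments give fewer samples per estimate, which is one way to picture the resolution-versus-stability trade-off the abstract mentions.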

Dynamic Domain Information Modulation Algorithm for Multi-domain Sentiment Analysis

May 10, 2025

Abstract:Multi-domain sentiment classification aims to mitigate the poor performance of models caused by the scarcity of labeled data in a single domain by utilizing labeled data from various domains. A series of models that jointly train domain classifiers and sentiment classifiers have demonstrated their advantages, because domain classification helps generate necessary information for sentiment classification. Intuitively, the importance of the sentiment classification task is the same in all domains for multi-domain sentiment classification, but the domain classification tasks differ because the impact of domain information on sentiment classification varies across domains; this can be controlled through adjustable weights or hyperparameters. However, as the number of domains increases, existing hyperparameter optimization algorithms may face the following challenges: (1) tremendous demand for computing resources, (2) convergence problems, and (3) high algorithm complexity. To efficiently generate the domain information required for sentiment classification in each domain, we propose a dynamic information modulation algorithm. Specifically, the model training process is divided into two stages. In the first stage, a shared hyperparameter, which controls the proportion of domain classification tasks across all domains, is determined. In the second stage, we introduce a novel domain-aware modulation algorithm to adjust the domain information contained in the input text, which is then calculated based on a gradient-based and loss-based method. In summary, experimental results on a public sentiment analysis dataset containing 16 domains prove the superiority of the proposed method.

SymRTLO: Enhancing RTL Code Optimization with LLMs and Neuron-Inspired Symbolic Reasoning

Apr 14, 2025

Abstract:Optimizing Register Transfer Level (RTL) code is crucial for improving the power, performance, and area (PPA) of digital circuits in the early stages of synthesis. Manual rewriting, guided by synthesis feedback, can yield high-quality results but is time-consuming and error-prone. Most existing compiler-based approaches have difficulty handling complex design constraints. Large Language Model (LLM)-based methods have emerged as a promising alternative to address these challenges. However, LLM-based approaches often face difficulties in ensuring alignment between the generated code and the provided prompts. This paper presents SymRTLO, a novel neuron-symbolic RTL optimization framework that seamlessly integrates LLM-based code rewriting with symbolic reasoning techniques. Our method incorporates a retrieval-augmented generation (RAG) system of optimization rules and Abstract Syntax Tree (AST)-based templates, enabling LLM-based rewriting that maintains syntactic correctness while minimizing undesired circuit behaviors. A symbolic module is proposed for analyzing and optimizing finite state machine (FSM) logic, allowing fine-grained state merging and partial specification handling beyond the scope of pattern-based compilers. Furthermore, a fast verification pipeline, combining formal equivalence checks with test-driven validation, reduces the complexity of verification. Experiments on the RTL-Rewriter benchmark with Synopsys Design Compiler and Yosys show that SymRTLO improves power, performance, and area by up to 43.9%, 62.5%, and 51.1%, respectively, compared to the state-of-the-art methods.

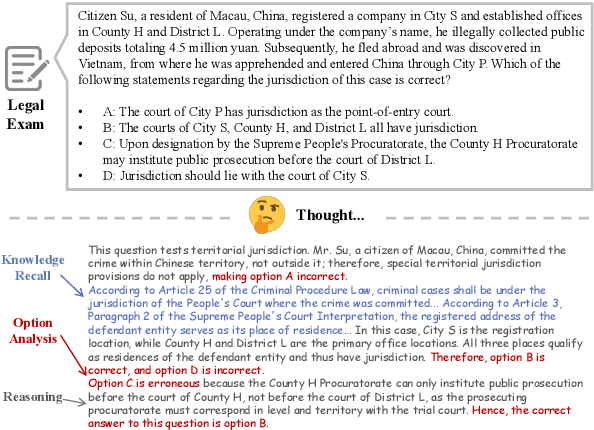

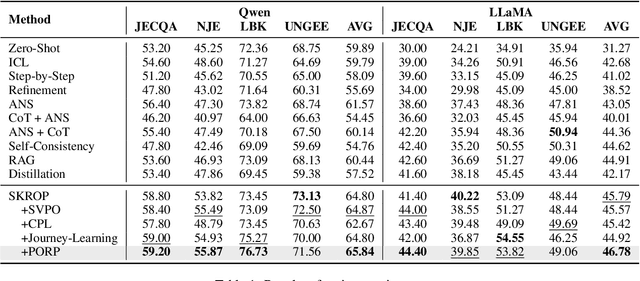

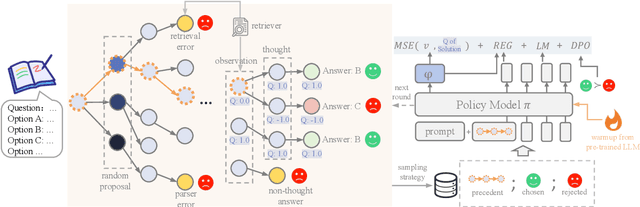

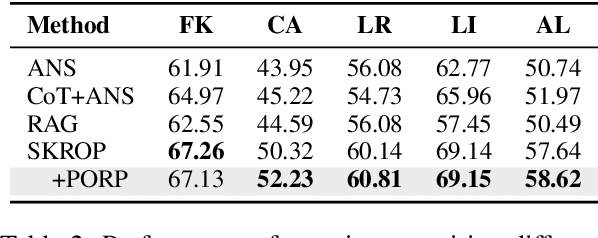

Towards Stepwise Domain Knowledge-Driven Reasoning Optimization and Reflection Improvement

Apr 12, 2025

Abstract:Recently, stepwise supervision on Chain of Thoughts (CoTs), with the help of Monte Carlo Tree Search (MCTS), has improved performance on logical reasoning tasks such as coding and math. However, its contribution to tasks requiring domain-specific expertise and knowledge remains unexplored. Motivated by this gap, we identify several potential challenges of vanilla MCTS within this context, and propose the framework of Stepwise Domain Knowledge-Driven Reasoning Optimization, employing the MCTS algorithm to develop step-level supervision for problems that require essential comprehension, reasoning, and specialized knowledge. We also introduce Preference Optimization towards Reflection Paths, which iteratively learns self-reflection on the reasoning thoughts from better perspectives. We have conducted extensive experiments to evaluate the advantages of these methodologies. Empirical results demonstrate their effectiveness on various legal-domain problems. We also report a diverse set of valuable findings, hoping to encourage research on domain-specific LLMs and MCTS.

ThermoStereoRT: Thermal Stereo Matching in Real Time via Knowledge Distillation and Attention-based Refinement

Apr 10, 2025

Abstract:We introduce ThermoStereoRT, a real-time thermal stereo matching method designed for all-weather conditions that recovers disparity from two rectified thermal stereo images, envisioning applications such as night-time drone surveillance or under-bed cleaning robots. Leveraging a lightweight yet powerful backbone, ThermoStereoRT constructs a 3D cost volume from thermal images and employs multi-scale attention mechanisms to produce an initial disparity map. To refine this map, we design a novel channel and spatial attention module. Addressing the challenge of sparse ground truth data in thermal imagery, we utilize knowledge distillation to boost performance without increasing computational demands. Comprehensive evaluations on multiple datasets demonstrate that ThermoStereoRT delivers both real-time capacity and robust accuracy, making it a promising solution for real-world deployment in various challenging environments. Our code will be released on https://github.com/SJTU-ViSYS-team/ThermoStereoRT

* 7 pages, 6 figures, 4 tables. Accepted to IEEE ICRA 2025. This is the preprint version

Role and Use of Race in AI/ML Models Related to Health

Apr 01, 2025

Abstract:The role and use of race within health-related artificial intelligence and machine learning (AI/ML) models has sparked increasing attention and controversy. Despite the complexity and breadth of related issues, a robust and holistic framework to guide stakeholders in their examination and resolution remains lacking. This perspective provides a broad-based, systematic, and cross-cutting landscape analysis of race-related challenges, structured around the AI/ML lifecycle and framed through "points to consider" to support inquiry and decision-making.

Agent S2: A Compositional Generalist-Specialist Framework for Computer Use Agents

Apr 01, 2025

Abstract:Computer use agents automate digital tasks by directly interacting with graphical user interfaces (GUIs) on computers and mobile devices, offering significant potential to enhance human productivity by completing an open-ended space of user queries. However, current agents face significant challenges: imprecise grounding of GUI elements, difficulties with long-horizon task planning, and performance bottlenecks from relying on single generalist models for diverse cognitive tasks. To this end, we introduce Agent S2, a novel compositional framework that delegates cognitive responsibilities across various generalist and specialist models. We propose a novel Mixture-of-Grounding technique to achieve precise GUI localization and introduce Proactive Hierarchical Planning, dynamically refining action plans at multiple temporal scales in response to evolving observations. Evaluations demonstrate that Agent S2 establishes new state-of-the-art (SOTA) performance on three prominent computer use benchmarks. Specifically, Agent S2 achieves 18.9% and 32.7% relative improvements over leading baseline agents such as Claude Computer Use and UI-TARS on the OSWorld 15-step and 50-step evaluation. Moreover, Agent S2 generalizes effectively to other operating systems and applications, surpassing previous best methods by a relative 52.8% on WindowsAgentArena and a relative 16.52% on AndroidWorld. Code available at https://github.com/simular-ai/Agent-S.

Prada: Black-Box LLM Adaptation with Private Data on Resource-Constrained Devices

Mar 19, 2025

Abstract:In recent years, Large Language Models (LLMs) have demonstrated remarkable abilities in various natural language processing tasks. However, adapting these models to specialized domains using private datasets stored on resource-constrained edge devices, such as smartphones and personal computers, remains challenging due to significant privacy concerns and limited computational resources. Existing model adaptation methods either compromise data privacy by requiring data transmission or jeopardize model privacy by exposing proprietary LLM parameters. To address these challenges, we propose Prada, a novel privacy-preserving and efficient black-box LLM adaptation system using private on-device datasets. Prada employs a lightweight proxy model fine-tuned with Low-Rank Adaptation (LoRA) locally on user devices. During inference, Prada leverages the logits offset, i.e., the difference in outputs between the base and adapted proxy models, to iteratively refine outputs from a remote black-box LLM. This offset-based adaptation approach preserves both data privacy and model privacy, as there is no need to share sensitive data or proprietary model parameters. Furthermore, we incorporate speculative decoding to speed up the inference process of Prada, making the system practically deployable on bandwidth-constrained edge devices. Extensive experiments on various downstream tasks demonstrate that Prada achieves performance comparable to centralized fine-tuning methods while significantly reducing computational overhead by up to 60% and communication costs by up to 80%.
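The logits-offset idea described in this abstract can be sketched for a single decoding step as follows. This is a rough illustration under stated assumptions, not Prada's implementation: it assumes the remote model returns per-token logits over the same vocabulary as the proxy models, and the function `apply_logits_offset`, the `scale` parameter, and the toy three-token vocabulary are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def apply_logits_offset(remote_logits, base_proxy_logits,
                        adapted_proxy_logits, scale=1.0):
    """Shift remote logits by (adapted proxy - base proxy) for one token step.

    The offset captures what local fine-tuning changed in the proxy model;
    adding it to the remote logits transfers that change without sharing
    private data or the remote model's parameters.
    """
    offset = [a - b for a, b in zip(adapted_proxy_logits, base_proxy_logits)]
    return [r + scale * o for r, o in zip(remote_logits, offset)]

# Toy example over a 3-token vocabulary (all values are made up).
remote = [2.0, 1.0, 0.0]     # remote black-box LLM prefers token 0
base = [1.0, 1.0, 1.0]       # base proxy model is indifferent
adapted = [1.0, 3.0, 1.0]    # locally adapted proxy prefers token 1
adjusted = apply_logits_offset(remote, base, adapted)  # [2.0, 3.0, 0.0]
probs = softmax(adjusted)
```

In this toy case the offset moves the most likely token from index 0 to index 1, which is the intended effect of the local adaptation. Only logits cross the device boundary in this sketch.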

LLM Generated Persona is a Promise with a Catch

Mar 18, 2025

Abstract:The use of large language models (LLMs) to simulate human behavior has gained significant attention, particularly through personas that approximate individual characteristics. Persona-based simulations hold promise for transforming disciplines that rely on population-level feedback, including social science, economic analysis, marketing research, and business operations. Traditional methods to collect realistic persona data face significant challenges. They are prohibitively expensive and logistically challenging due to privacy constraints, and often fail to capture multi-dimensional attributes, particularly subjective qualities. Consequently, synthetic persona generation with LLMs offers a scalable, cost-effective alternative. However, current approaches rely on ad hoc and heuristic generation techniques that do not guarantee methodological rigor or simulation precision, resulting in systematic biases in downstream tasks. Through extensive large-scale experiments including presidential election forecasts and general opinion surveys of the U.S. population, we reveal that these biases can lead to significant deviations from real-world outcomes. Our findings underscore the need to develop a rigorous science of persona generation and outline the methodological innovations, organizational and institutional support, and empirical foundations required to enhance the reliability and scalability of LLM-driven persona simulations. To support further research and development in this area, we have open-sourced approximately one million generated personas, available for public access and analysis at https://huggingface.co/datasets/Tianyi-Lab/Personas.

Robust Full-Space Physical Layer Security for STAR-RIS-Aided Wireless Networks: Eavesdropper with Uncertain Location and Channel

Mar 15, 2025

Abstract:A robust full-space physical layer security (PLS) transmission scheme is proposed in this paper considering the full-space wiretapping challenge of wireless networks supported by simultaneous transmitting and reflecting reconfigurable intelligent surface (STAR-RIS). Different from the existing schemes, the proposed PLS scheme takes account of the uncertainty in the eavesdropper's position within the 360$^\circ$ service area offered by the STAR-RIS. Specifically, the large system analytical method is utilized to derive the asymptotic expression of the average security rate achieved by the security user, considering that the base station (BS) only has the statistical information of the eavesdropper's channel state information (CSI) and the uncertainty of its location. To evaluate the effectiveness of the proposed PLS scheme, we first formulate an optimization problem aimed at maximizing the weighted sum rate of the security user and the public user. This optimization is conducted under the power allocation constraint and some practical limitations for STAR-RIS implementation, through jointly designing the active and passive beamforming variables. A novel iterative algorithm based on the minimum mean-square error (MMSE) and cross-entropy optimization (CEO) methods is proposed to effectively address the established non-convex optimization problem with discrete variables. Simulation results indicate that the proposed robust PLS scheme can effectively mitigate the information leakage across the entire coverage area of the STAR-RIS-assisted system, leading to superior performance gain when compared to benchmark schemes, including the traditional RIS-aided scheme.
