Compilable Neural Code Generation with Compiler Feedback

Mar 10, 2022Xin Wang, Yasheng Wang, Yao Wan, Fei Mi, Yitong Li, Pingyi Zhou, Jin Liu, Hao Wu, Xin Jiang, Qun Liu

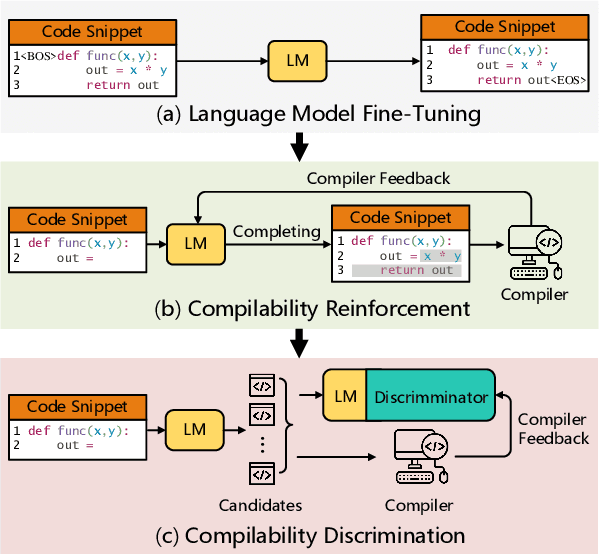

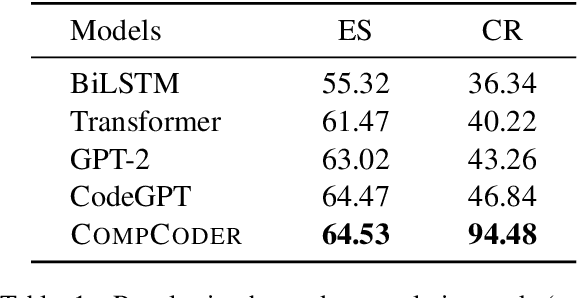

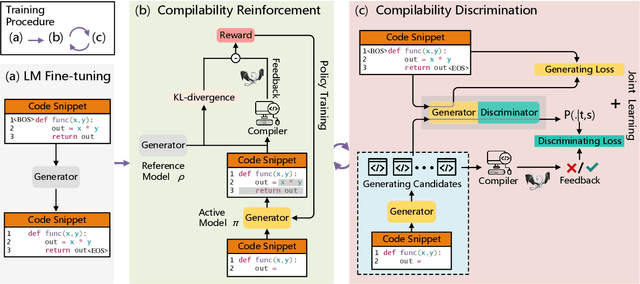

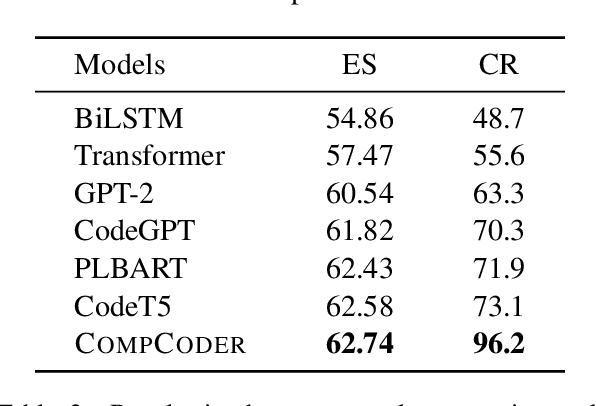

Automatically generating compilable programs with (or without) natural language descriptions has always been a touchstone problem for computational linguistics and automated software engineering. Existing deep-learning approaches model code generation as text generation, either constrained by grammar structures in decoder, or driven by pre-trained language models on large-scale code corpus (e.g., CodeGPT, PLBART, and CodeT5). However, few of them account for compilability of the generated programs. To improve compilability of the generated programs, this paper proposes COMPCODER, a three-stage pipeline utilizing compiler feedback for compilable code generation, including language model fine-tuning, compilability reinforcement, and compilability discrimination. Comprehensive experiments on two code generation tasks demonstrate the effectiveness of our proposed approach, improving the success rate of compilation from 44.18 to 89.18 in code completion on average and from 70.3 to 96.2 in text-to-code generation, respectively, when comparing with the state-of-the-art CodeGPT.

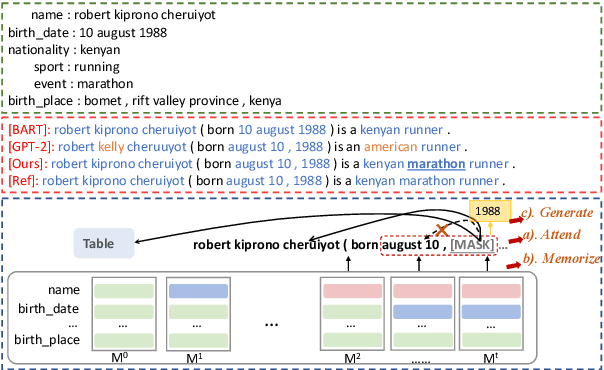

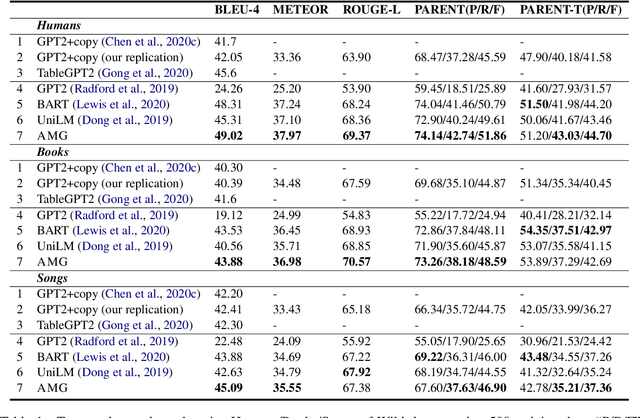

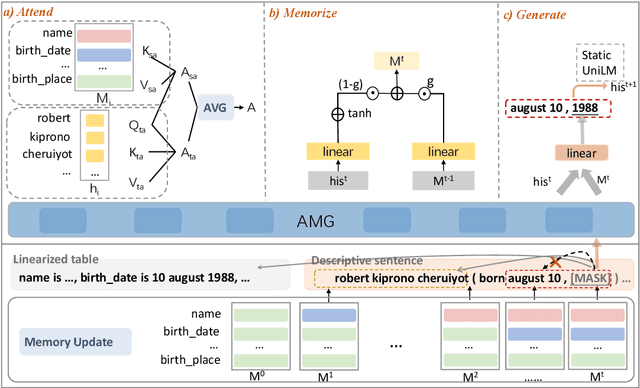

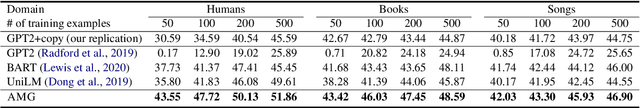

Attend, Memorize and Generate: Towards Faithful Table-to-Text Generation in Few Shots

Mar 01, 2022Wenting Zhao, Ye Liu, Yao Wan, Philip S. Yu

Few-shot table-to-text generation is a task of composing fluent and faithful sentences to convey table content using limited data. Despite many efforts having been made towards generating impressive fluent sentences by fine-tuning powerful pre-trained language models, the faithfulness of generated content still needs to be improved. To this end, this paper proposes a novel approach Attend, Memorize and Generate (called AMG), inspired by the text generation process of humans. In particular, AMG (1) attends over the multi-granularity of context using a novel strategy based on table slot level and traditional token-by-token level attention to exploit both the table structure and natural linguistic information; (2) dynamically memorizes the table slot allocation states; and (3) generates faithful sentences according to both the context and memory allocation states. Comprehensive experiments with human evaluation on three domains (i.e., humans, songs, and books) of the Wiki dataset show that our model can generate higher qualified texts when compared with several state-of-the-art baselines, in both fluency and faithfulness.

What Do They Capture? -- A Structural Analysis of Pre-Trained Language Models for Source Code

Feb 14, 2022Yao Wan, Wei Zhao, Hongyu Zhang, Yulei Sui, Guandong Xu, Hai Jin

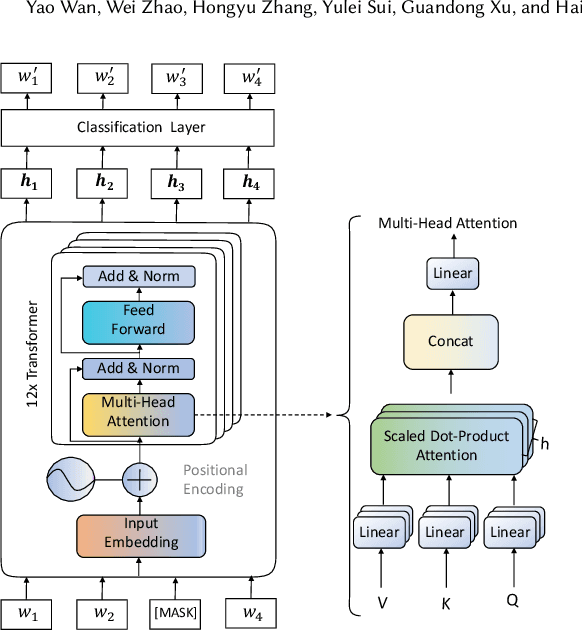

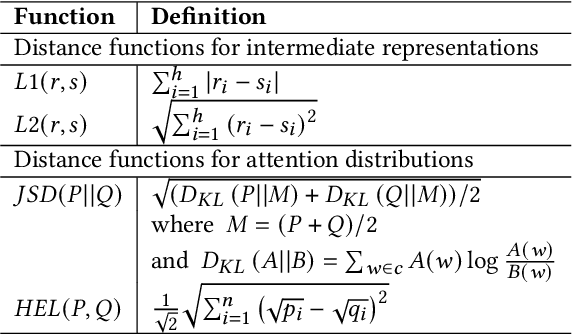

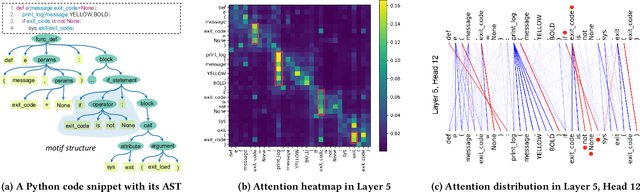

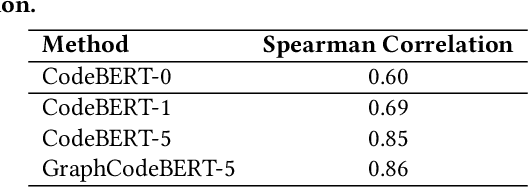

Recently, many pre-trained language models for source code have been proposed to model the context of code and serve as a basis for downstream code intelligence tasks such as code completion, code search, and code summarization. These models leverage masked pre-training and Transformer and have achieved promising results. However, currently there is still little progress regarding interpretability of existing pre-trained code models. It is not clear why these models work and what feature correlations they can capture. In this paper, we conduct a thorough structural analysis aiming to provide an interpretation of pre-trained language models for source code (e.g., CodeBERT, and GraphCodeBERT) from three distinctive perspectives: (1) attention analysis, (2) probing on the word embedding, and (3) syntax tree induction. Through comprehensive analysis, this paper reveals several insightful findings that may inspire future studies: (1) Attention aligns strongly with the syntax structure of code. (2) Pre-training language models of code can preserve the syntax structure of code in the intermediate representations of each Transformer layer. (3) The pre-trained models of code have the ability of inducing syntax trees of code. Theses findings suggest that it may be helpful to incorporate the syntax structure of code into the process of pre-training for better code representations.

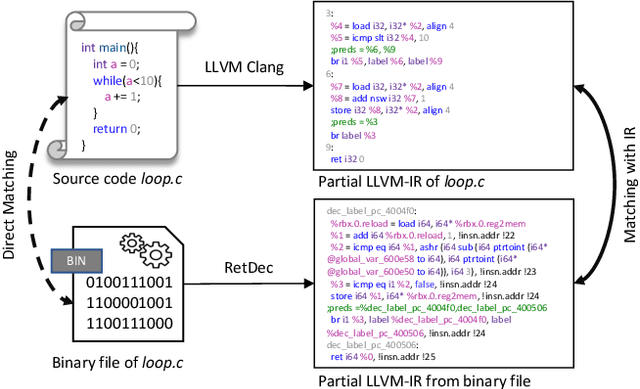

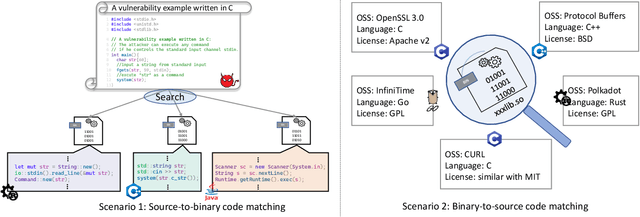

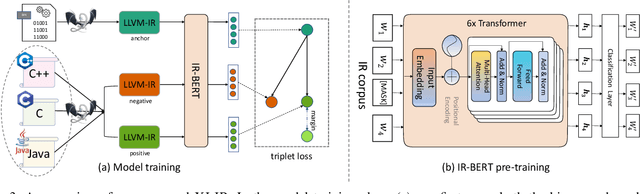

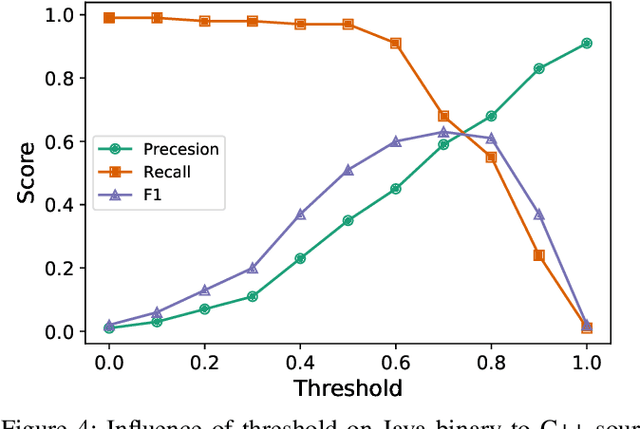

Cross-Language Binary-Source Code Matching with Intermediate Representations

Jan 19, 2022Yi Gui, Yao Wan, Hongyu Zhang, Huifang Huang, Yulei Sui, Guandong Xu, Zhiyuan Shao, Hai Jin

Binary-source code matching plays an important role in many security and software engineering related tasks such as malware detection, reverse engineering and vulnerability assessment. Currently, several approaches have been proposed for binary-source code matching by jointly learning the embeddings of binary code and source code in a common vector space. Despite much effort, existing approaches target on matching the binary code and source code written in a single programming language. However, in practice, software applications are often written in different programming languages to cater for different requirements and computing platforms. Matching binary and source code across programming languages introduces additional challenges when maintaining multi-language and multi-platform applications. To this end, this paper formulates the problem of cross-language binary-source code matching, and develops a new dataset for this new problem. We present a novel approach XLIR, which is a Transformer-based neural network by learning the intermediate representations for both binary and source code. To validate the effectiveness of XLIR, comprehensive experiments are conducted on two tasks of cross-language binary-source code matching, and cross-language source-source code matching, on top of our curated dataset. Experimental results and analysis show that our proposed XLIR with intermediate representations significantly outperforms other state-of-the-art models in both of the two tasks.

DANets: Deep Abstract Networks for Tabular Data Classification and Regression

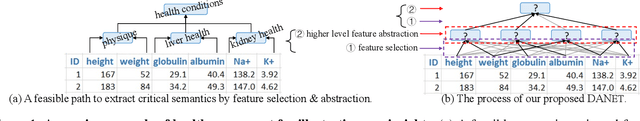

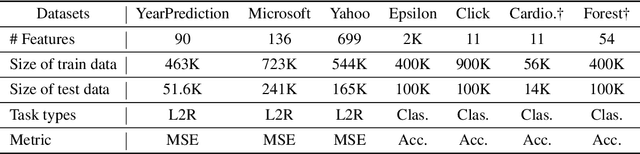

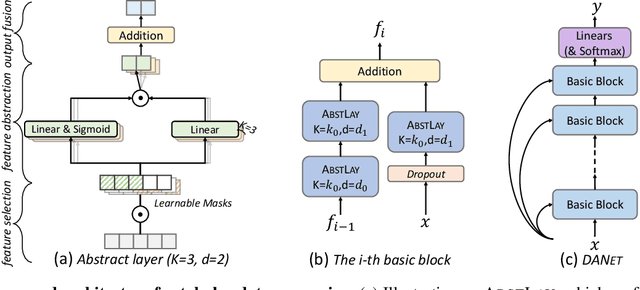

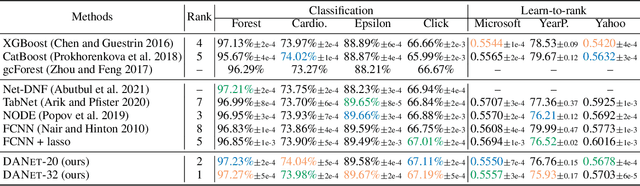

Dec 24, 2021Jintai Chen, Kuanlun Liao, Yao Wan, Danny Z. Chen, Jian Wu

Tabular data are ubiquitous in real world applications. Although many commonly-used neural components (e.g., convolution) and extensible neural networks (e.g., ResNet) have been developed by the machine learning community, few of them were effective for tabular data and few designs were adequately tailored for tabular data structures. In this paper, we propose a novel and flexible neural component for tabular data, called Abstract Layer (AbstLay), which learns to explicitly group correlative input features and generate higher-level features for semantics abstraction. Also, we design a structure re-parameterization method to compress AbstLay, thus reducing the computational complexity by a clear margin in the reference phase. A special basic block is built using AbstLays, and we construct a family of Deep Abstract Networks (DANets) for tabular data classification and regression by stacking such blocks. In DANets, a special shortcut path is introduced to fetch information from raw tabular features, assisting feature interactions across different levels. Comprehensive experiments on seven real-world tabular datasets show that our AbstLay and DANets are effective for tabular data classification and regression, and the computational complexity is superior to competitive methods. Besides, we evaluate the performance gains of DANet as it goes deep, verifying the extendibility of our method. Our code is available at https://github.com/WhatAShot/DANet.

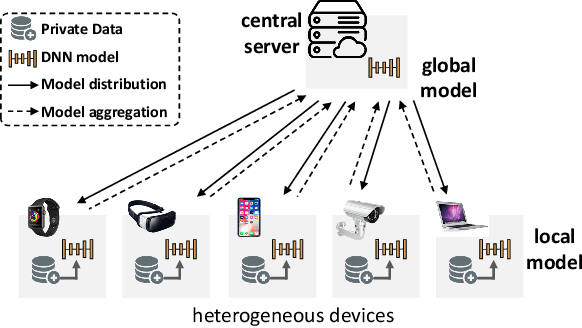

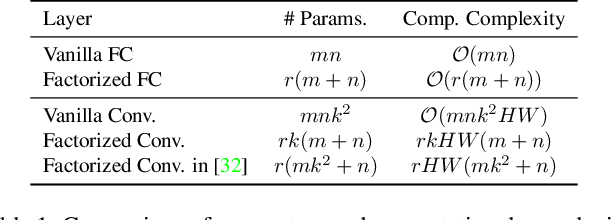

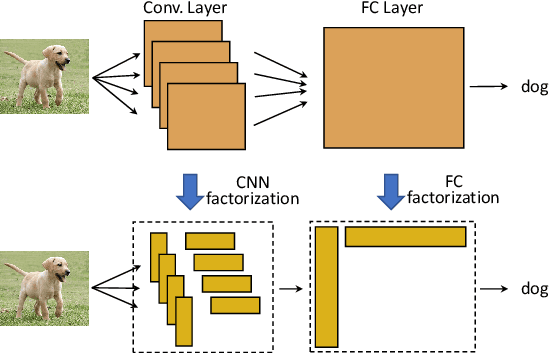

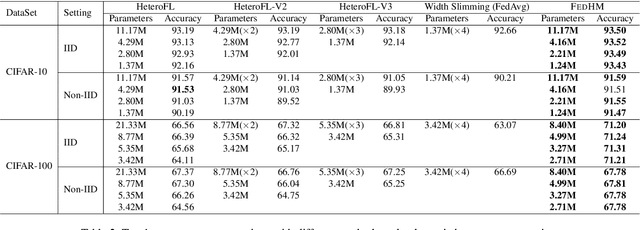

FedHM: Efficient Federated Learning for Heterogeneous Models via Low-rank Factorization

Nov 29, 2021Dezhong Yao, Wanning Pan, Yao Wan, Hai Jin, Lichao Sun

The underlying assumption of recent federated learning (FL) paradigms is that local models usually share the same network architecture as the global model, which becomes impractical for mobile and IoT devices with different setups of hardware and infrastructure. A scalable federated learning framework should address heterogeneous clients equipped with different computation and communication capabilities. To this end, this paper proposes FedHM, a novel federated model compression framework that distributes the heterogeneous low-rank models to clients and then aggregates them into a global full-rank model. Our solution enables the training of heterogeneous local models with varying computational complexities and aggregates a single global model. Furthermore, FedHM not only reduces the computational complexity of the device, but also reduces the communication cost by using low-rank models. Extensive experimental results demonstrate that our proposed \system outperforms the current pruning-based FL approaches in terms of test Top-1 accuracy (4.6% accuracy gain on average), with smaller model size (1.5x smaller on average) under various heterogeneous FL settings.

HETFORMER: Heterogeneous Transformer with Sparse Attention for Long-Text Extractive Summarization

Oct 19, 2021Ye Liu, Jian-Guo Zhang, Yao Wan, Congying Xia, Lifang He, Philip S. Yu

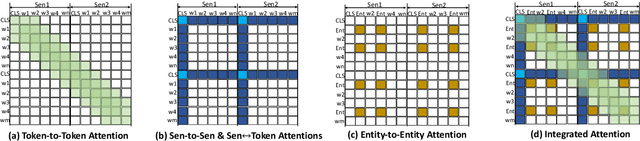

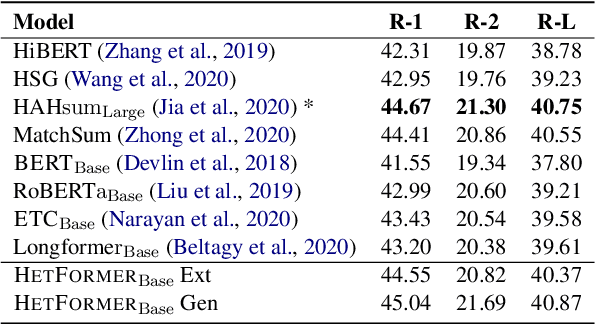

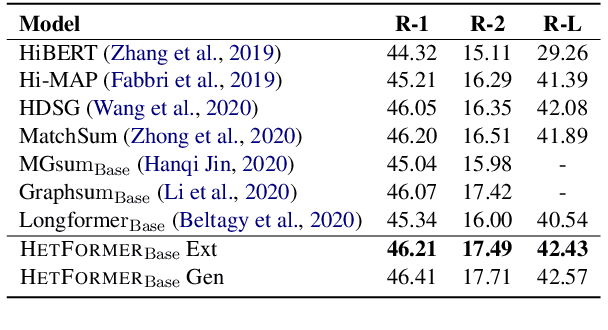

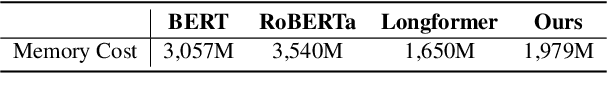

To capture the semantic graph structure from raw text, most existing summarization approaches are built on GNNs with a pre-trained model. However, these methods suffer from cumbersome procedures and inefficient computations for long-text documents. To mitigate these issues, this paper proposes HETFORMER, a Transformer-based pre-trained model with multi-granularity sparse attentions for long-text extractive summarization. Specifically, we model different types of semantic nodes in raw text as a potential heterogeneous graph and directly learn heterogeneous relationships (edges) among nodes by Transformer. Extensive experiments on both single- and multi-document summarization tasks show that HETFORMER achieves state-of-the-art performance in Rouge F1 while using less memory and fewer parameters.

SynCoBERT: Syntax-Guided Multi-Modal Contrastive Pre-Training for Code Representation

Sep 09, 2021Xin Wang, Yasheng Wang, Fei Mi, Pingyi Zhou, Yao Wan, Xiao Liu, Li Li, Hao Wu, Jin Liu, Xin Jiang

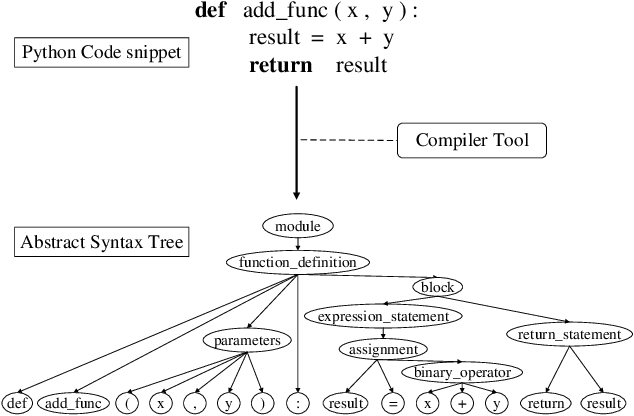

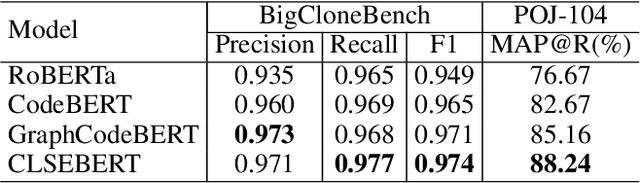

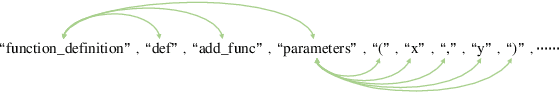

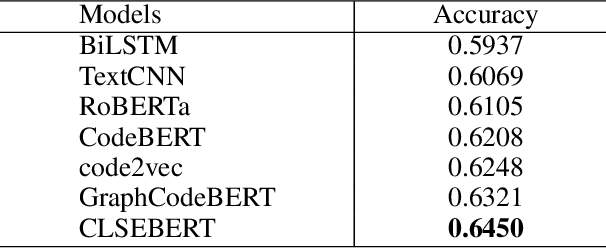

Code representation learning, which aims to encode the semantics of source code into distributed vectors, plays an important role in recent deep-learning-based models for code intelligence. Recently, many pre-trained language models for source code (e.g., CuBERT and CodeBERT) have been proposed to model the context of code and serve as a basis for downstream code intelligence tasks such as code search, code clone detection, and program translation. Current approaches typically consider the source code as a plain sequence of tokens, or inject the structure information (e.g., AST and data-flow) into the sequential model pre-training. To further explore the properties of programming languages, this paper proposes SynCoBERT, a syntax-guided multi-modal contrastive pre-training approach for better code representations. Specially, we design two novel pre-training objectives originating from the symbolic and syntactic properties of source code, i.e., Identifier Prediction (IP) and AST Edge Prediction (TEP), which are designed to predict identifiers, and edges between two nodes of AST, respectively. Meanwhile, to exploit the complementary information in semantically equivalent modalities (i.e., code, comment, AST) of the code, we propose a multi-modal contrastive learning strategy to maximize the mutual information among different modalities. Extensive experiments on four downstream tasks related to code intelligence show that SynCoBERT advances the state-of-the-art with the same pre-training corpus and model size.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge