Xuequan Lu

SimAda: A Simple Unified Framework for Adapting Segment Anything Model in Underperformed Scenes

Jan 31, 2024

Abstract: The Segment Anything Model (SAM) has demonstrated excellent generalization capabilities in common vision scenarios, yet it lacks an understanding of specialized data. Although numerous works have focused on optimizing SAM for downstream tasks, these task-specific approaches usually limit generalizability to other downstream tasks. In this paper, we investigate the impact of general vision modules on finetuning SAM and enable them to generalize across all downstream tasks. We propose a simple unified framework called SimAda for adapting SAM in underperformed scenes. Specifically, our framework abstracts the general modules of different methods into basic design elements, and we design four variants based on a shared theoretical framework. SimAda is simple yet effective: it removes all dataset-specific designs and focuses solely on general optimization, so it can be applied to all SAM-based and even Transformer-based models. We conduct extensive experiments on nine datasets of six downstream tasks. The results demonstrate that SimAda significantly improves the performance of SAM on multiple downstream tasks and achieves state-of-the-art performance on most of them, without requiring task-specific designs. Code is available at: https://github.com/zongzi13545329/SimAda

BA-SAM: Scalable Bias-Mode Attention Mask for Segment Anything Model

Jan 08, 2024

Abstract: In this paper, we address the challenge of image resolution variation for the Segment Anything Model (SAM). SAM, known for its zero-shot generalizability, exhibits performance degradation when faced with datasets of varying image sizes. Previous approaches tend to resize the image to a fixed size or adopt structure modifications, hindering the preservation of SAM's rich prior knowledge. Besides, such task-specific tuning necessitates a complete retraining of the model, which is costly and unacceptable for deployment in downstream tasks. We reformulate this issue as a length extrapolation problem, where the token sequence length varies while the patch size stays consistent for images of different sizes. To this end, we propose Scalable Bias-Mode Attention Mask (BA-SAM) to enhance SAM's adaptability to varying image resolutions while eliminating the need for structure modifications. Firstly, we introduce a new scaling factor to ensure consistent magnitude in the attention layer's dot product values when the token sequence length changes. Secondly, we present a bias-mode attention mask that allows each token to prioritize neighboring information, mitigating the impact of untrained distant information. Our BA-SAM demonstrates efficacy in two scenarios: zero-shot and fine-tuning. Extensive evaluation on diverse datasets, including DIS5K, DUTS, ISIC, COD10K, and COCO, reveals its ability to significantly mitigate performance degradation in the zero-shot setting and achieve state-of-the-art performance with minimal fine-tuning. Furthermore, we propose a generalized model and benchmark, showcasing BA-SAM's generalizability across all four datasets simultaneously.

DHGCN: Dynamic Hop Graph Convolution Network for Self-supervised Point Cloud Learning

Jan 05, 2024

Abstract: Recent works attempt to extend Graph Convolution Networks (GCNs) to point clouds for classification and segmentation tasks. These works tend to sample and group points to create smaller point sets locally and mainly focus on extracting local features through GCNs, while ignoring the relationship between point sets. In this paper, we propose the Dynamic Hop Graph Convolution Network (DHGCN) for explicitly learning the contextual relationships between the voxelized point parts, which are treated as graph nodes. Motivated by the intuition that the contextual information between point parts lies in the pairwise adjacent relationship, which can be depicted by the hop distance of the graph quantitatively, we devise a novel self-supervised part-level hop distance reconstruction task and design a novel loss function accordingly to facilitate training. In addition, we propose the Hop Graph Attention (HGA), which takes the learned hop distance as input for producing attention weights to allow edge features to contribute distinctively in aggregation. Eventually, the proposed DHGCN is a plug-and-play module that is compatible with point-based backbone networks. Comprehensive experiments on different backbones and tasks demonstrate that our self-supervised method achieves state-of-the-art performance. Our source code is available at: https://github.com/Jinec98/DHGCN.
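
The hop distance mentioned in the DHGCN abstract is the number of edges on the shortest path between two graph nodes. The following is a minimal sketch of how such distances can be computed with breadth-first search. It is not taken from the DHGCN code; the function name `hop_distances` and the five-part adjacency list are hypothetical and serve only as illustration.

```python
from collections import deque


def hop_distances(adjacency, source):
    """Return the hop distance from `source` to every reachable node (BFS)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency[node]:
            if neighbour not in dist:  # first visit gives the shortest hop count
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist


# Hypothetical graph of 5 point parts: 0-1-2-3 form a chain, part 4 touches part 1.
parts = {0: [1], 1: [0, 2, 4], 2: [1, 3], 3: [2], 4: [1]}
print(hop_distances(parts, 0))
```

In this toy graph, parts that are spatially adjacent get a hop distance of 1, and the distance grows with each intermediate part that separates two nodes.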

Generalized Category Discovery in Semantic Segmentation

Nov 20, 2023

Abstract: This paper explores a novel setting called Generalized Category Discovery in Semantic Segmentation (GCDSS), which aims to segment unlabeled images given prior knowledge from a labeled set of base classes. The unlabeled images contain pixels of the base classes or novel classes. In contrast to Novel Category Discovery in Semantic Segmentation (NCDSS), GCDSS does not assume that each unlabeled image contains at least one novel class. Besides, we broaden the segmentation scope beyond foreground objects to include the entire image. Existing NCDSS methods rely on the aforementioned priors, making them hard to apply in real-world situations. We propose a straightforward yet effective framework that reinterprets the GCDSS challenge as a task of mask classification. Additionally, we construct a baseline method and introduce the Neighborhood Relations-Guided Mask Clustering Algorithm (NeRG-MaskCA) for mask categorization to address the fragmentation in semantic representation. A benchmark dataset, Cityscapes-GCD, derived from the Cityscapes dataset, is established to evaluate the GCDSS framework. Our method demonstrates the feasibility of the GCDSS problem and the potential for discovering and segmenting novel object classes in unlabeled images. We employ the pseudo-labels generated by our approach as ground truth to supervise the training of other models, thereby giving them the ability to segment novel classes. It paves the way for further research in generalized category discovery, broadening the horizons of semantic segmentation and its applications. For details, please visit https://github.com/JethroPeng/GCDSS

Weighted Point Cloud Normal Estimation

May 06, 2023

Abstract: Existing normal estimation methods for point clouds are often less robust to severe noise and complex geometric structures. Also, they usually ignore the contributions of different neighbouring points during normal estimation, which leads to less accurate results. In this paper, we introduce a weighted normal estimation method for 3D point cloud data. We innovate in two key points: 1) we develop a novel weighted normal regression technique that predicts point-wise weights from local point patches and uses them for robust, feature-preserving normal regression; 2) we propose to conduct contrastive learning between point patches and the corresponding ground-truth normals of the patches' central points as a pre-training process to facilitate normal regression. Comprehensive experiments demonstrate that our method can robustly handle noisy and complex point clouds, achieving state-of-the-art performance on both synthetic and real-world datasets.
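
To make the idea of weighting neighbouring points concrete, here is a minimal sketch of a weighted plane fit, assuming NumPy is available. It is not the paper's network: in the paper the point-wise weights are predicted from the local patch, whereas here the weights are supplied by hand, and the function name `weighted_normal` and the toy patch are hypothetical. The normal is taken as the eigenvector of the weighted covariance matrix with the smallest eigenvalue.

```python
import numpy as np


def weighted_normal(points, weights):
    """Estimate a patch normal from a weighted covariance (weighted PCA plane fit)."""
    points = np.asarray(points, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                                 # normalise weights to sum to 1
    centroid = (w[:, None] * points).sum(axis=0)    # weighted centroid
    centered = points - centroid
    cov = (w[:, None] * centered).T @ centered      # 3x3 weighted covariance
    eigvals, eigvecs = np.linalg.eigh(cov)          # eigenvalues in ascending order
    return eigvecs[:, 0]                            # direction of least variance


# Hypothetical patch: four points on the plane z = 0 plus one noisy outlier.
patch = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0), (0.5, 0.5, 5.0)]
normal = weighted_normal(patch, weights=[1, 1, 1, 1, 0])  # outlier weighted out
print(normal)
```

With the outlier given zero weight, the estimated normal aligns with the z-axis (up to sign); with uniform weights the outlier would dominate the fit, which is the kind of failure that per-point weighting is meant to avoid.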

Don't worry about mistakes! Glass Segmentation Network via Mistake Correction

Apr 21, 2023

Abstract: Recall one time when we were in an unfamiliar mall. We might mistakenly think that there exists or does not exist a piece of glass in front of us. Such mistakes will remind us to walk more safely and freely at the same or a similar place next time. To absorb the human mistake correction wisdom, we propose a novel glass segmentation network to detect transparent glass, dubbed GlassSegNet. Motivated by this human behavior, GlassSegNet utilizes two key stages: the identification stage (IS) and the correction stage (CS). The IS is designed to simulate the detection procedure of human recognition for identifying transparent glass by global context and edge information. The CS then progressively refines the coarse prediction by correcting mistake regions based on gained experience. Extensive experiments show clear improvements of our GlassSegNet over thirty-four state-of-the-art methods on three benchmark datasets.

Instance-Aware Domain Generalization for Face Anti-Spoofing

Apr 12, 2023

Abstract: Face anti-spoofing (FAS) based on domain generalization (DG) has been recently studied to improve the generalization on unseen scenarios. Previous methods typically rely on domain labels to align the distribution of each domain for learning domain-invariant representations. However, artificial domain labels are coarse-grained and subjective, which cannot reflect real domain distributions accurately. Besides, such domain-aware methods focus on domain-level alignment, which is not fine-grained enough to ensure that learned representations are insensitive to domain styles. To address these issues, we propose a novel perspective for DG FAS that aligns features on the instance level without the need for domain labels. Specifically, the Instance-Aware Domain Generalization framework is proposed to learn the generalizable feature by weakening the features' sensitivity to instance-specific styles. Concretely, we propose Asymmetric Instance Adaptive Whitening to adaptively eliminate the style-sensitive feature correlation, boosting the generalization. Moreover, Dynamic Kernel Generator and Categorical Style Assembly are proposed to first extract the instance-specific features and then generate the style-diversified features with large style shifts, respectively, further facilitating the learning of style-insensitive features. Extensive experiments and analysis demonstrate the superiority of our method over state-of-the-art competitors. Code will be publicly available at https://github.com/qianyuzqy/IADG.

IterativePFN: True Iterative Point Cloud Filtering

Apr 04, 2023

Abstract: The quality of point clouds is often limited by noise introduced during their capture process. Consequently, a fundamental 3D vision task is the removal of noise, known as point cloud filtering or denoising. State-of-the-art learning-based methods focus on training neural networks to infer filtered displacements and directly shift noisy points onto the underlying clean surfaces. In high noise conditions, they iterate the filtering process. However, this iterative filtering is only done at test time and is less effective at ensuring points converge quickly onto the clean surfaces. We propose IterativePFN (iterative point cloud filtering network), which consists of multiple IterationModules that model the true iterative filtering process internally, within a single network. We train our IterativePFN network using a novel loss function that utilizes an adaptive ground truth target at each iteration to capture the relationship between intermediate filtering results during training. This ensures that the filtered results converge faster to the clean surfaces. Our method obtains better performance than state-of-the-art methods. The source code can be found at: https://github.com/ddsediri/IterativePFN.

Boosting Semi-Supervised Learning by Exploiting All Unlabeled Data

Mar 20, 2023

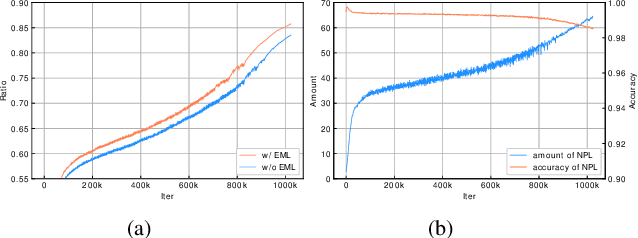

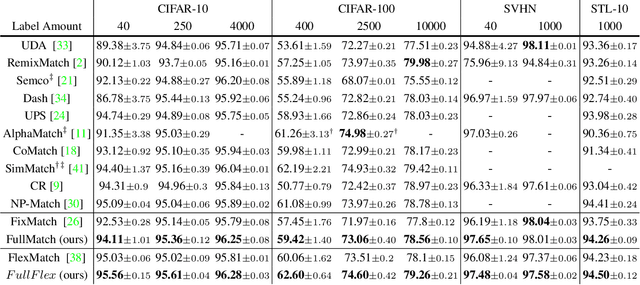

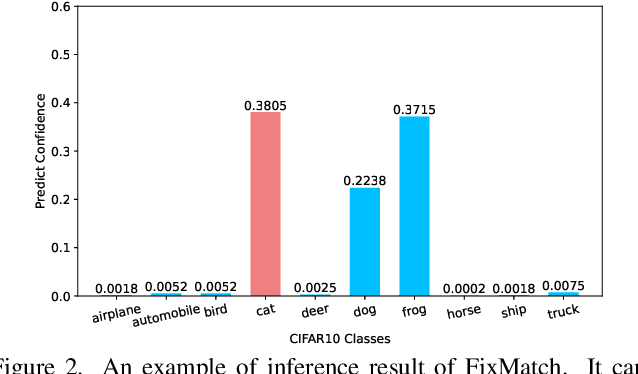

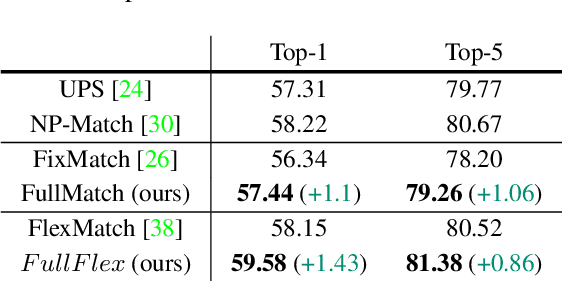

Abstract: Semi-supervised learning (SSL) has attracted enormous attention due to its vast potential for mitigating the dependence on large labeled datasets. The latest methods (e.g., FixMatch) use a combination of consistency regularization and pseudo-labeling to achieve remarkable successes. However, these methods all waste complicated examples, since all pseudo-labels have to be selected by a high threshold to filter out noisy ones. Hence, the examples with ambiguous predictions do not contribute to the training phase. To better leverage all unlabeled examples, we propose two novel techniques: Entropy Meaning Loss (EML) and Adaptive Negative Learning (ANL). EML incorporates the prediction distribution of non-target classes into the optimization objective to avoid competition with the target class, thus generating more high-confidence predictions for selecting pseudo-labels. ANL introduces an additional negative pseudo-label for all unlabeled data to leverage low-confidence examples. It adaptively allocates this label by dynamically evaluating the top-k performance of the model. EML and ANL do not introduce any additional parameters or hyperparameters. We integrate these techniques with FixMatch, and develop a simple yet powerful framework called FullMatch. Extensive experiments on several common SSL benchmarks (CIFAR-10/100, SVHN, STL-10 and ImageNet) demonstrate that FullMatch exceeds FixMatch by a large margin. Integrated with FlexMatch (an advanced FixMatch-based framework), we achieve state-of-the-art performance. Source code is at https://github.com/megvii-research/FullMatch.
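
The negative pseudo-label idea can be illustrated with a short sketch. This is an assumption-laden toy, not the FullMatch implementation: the abstract says k is chosen adaptively from the model's top-k performance, while here k is simply passed in, and the helper names `negative_labels` and `negative_learning_loss` are hypothetical. Classes ranked outside the top-k predictions are treated as "not this class" labels, and the loss pushes their predicted probabilities towards zero.

```python
import math


def negative_labels(probs, k):
    """Return the class indices ranked outside the top-k predictions."""
    ranked = sorted(range(len(probs)), key=lambda c: probs[c], reverse=True)
    return sorted(ranked[k:])


def negative_learning_loss(probs, negatives):
    """Penalise probability mass assigned to classes labelled as negatives."""
    return -sum(math.log(1.0 - probs[c]) for c in negatives)


# Hypothetical low-confidence prediction over 4 classes (no class passes a 0.95 threshold).
probs = [0.7, 0.2, 0.05, 0.05]
negatives = negative_labels(probs, k=2)   # assumes the model is reliable at top-2
loss = negative_learning_loss(probs, negatives)
print(negatives, loss)
```

Even though this example would be discarded by a high-confidence pseudo-label threshold, it still yields a training signal through the classes it is unlikely to belong to.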

Skeleton-based Action Recognition through Contrasting Two-Stream Spatial-Temporal Networks

Jan 27, 2023

Abstract: To pursue accurate skeleton-based action recognition, most prior methods combine Graph Convolution Networks (GCNs) with attention-based methods in a serial way. However, they regard the human skeleton as a complete graph, resulting in fewer variations between different actions (e.g., the connection between the elbow and head in the action "clapping hands"). To address this, we propose a novel Contrastive GCN-Transformer Network (ConGT) which fuses the spatial and temporal modules in a parallel way. The ConGT involves two parallel streams: the Spatial-Temporal Graph Convolution stream (STG) and the Spatial-Temporal Transformer stream (STT). The STG is designed to obtain action representations that maintain the natural topology structure of the human skeleton. The STT is devised to acquire action representations containing the global relationships among joints. Since the action representations produced by these two streams have different characteristics, and each stream knows little about the other, we introduce the contrastive learning paradigm to guide their output representations of the same sample to be as close as possible in a self-supervised manner. Through contrastive learning, the streams can learn from each other and enrich the action features by maximizing the mutual information between the two types of action representations. To further improve action recognition accuracy, we introduce the Cyclical Focal Loss (CFL), which focuses on confident training samples in early training epochs, with an increasing focus on hard samples during the middle epochs. We conduct experiments on three benchmark datasets, which demonstrate that our model achieves state-of-the-art performance in action recognition.
