Xiaoyang Li

AGE-Net: Spectral–Spatial Fusion and Anatomical Graph Reasoning with Evidential Ordinal Regression for Knee Osteoarthritis Grading

Jan 24, 2026

Abstract:Automated Kellgren–Lawrence (KL) grading from knee radiographs is challenging due to subtle structural changes, long-range anatomical dependencies, and ambiguity near grade boundaries. We propose AGE-Net, a ConvNeXt-based framework that integrates Spectral–Spatial Fusion (SSF), Anatomical Graph Reasoning (AGR), and Differential Refinement (DFR). To capture predictive uncertainty and preserve label ordinality, AGE-Net employs a Normal-Inverse-Gamma (NIG) evidential regression head and a pairwise ordinal ranking constraint. On a knee KL dataset, AGE-Net achieves a quadratic weighted kappa (QWK) of 0.9017 ± 0.0045 and a mean squared error (MSE) of 0.2349 ± 0.0028 over three random seeds, outperforming strong CNN baselines and showing consistent gains in ablation studies. We further outline evaluations of uncertainty quality, robustness, and explainability, with additional experimental figures to be included in the full manuscript.
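The quadratic weighted kappa reported above is a standard agreement metric for ordinal labels. The following is a minimal sketch of how it can be computed, assuming integer KL grades 0 to 4 and assuming that continuous regression outputs are rounded and clipped to the nearest grade first; the helper names `to_grade` and `quadratic_weighted_kappa` are illustrative and not taken from the paper.

```python
import math


def to_grade(value, num_classes=5):
    """Round a continuous prediction to the nearest grade and clip to the valid range.

    Assumption: the rounding rule (round half up) is illustrative only.
    """
    grade = int(math.floor(value + 0.5))
    return min(max(grade, 0), num_classes - 1)


def quadratic_weighted_kappa(y_true, y_pred, num_classes=5):
    """Cohen's kappa with quadratic weights for integer labels in [0, num_classes)."""
    n = len(y_true)
    # Observed confusion matrix: rows are true grades, columns are predicted grades.
    observed = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1
    # Marginal histograms of true and predicted grades.
    hist_true = [sum(row) for row in observed]
    hist_pred = [sum(observed[i][j] for i in range(num_classes))
                 for j in range(num_classes)]
    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(num_classes):
        for j in range(num_classes):
            # Quadratic penalty grows with the squared distance between grades.
            weight = (i - j) ** 2 / (num_classes - 1) ** 2
            expected = hist_true[i] * hist_pred[j] / n
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected
    # Undefined (division by zero) if all labels and predictions share one grade.
    return 1.0 - weighted_observed / weighted_expected


if __name__ == "__main__":
    truth = [0, 1, 2, 3, 4, 2, 3]
    raw_predictions = [0.2, 1.4, 2.6, 2.9, 3.7, 1.8, 3.1]
    grades = [to_grade(x) for x in raw_predictions]
    print(quadratic_weighted_kappa(truth, grades))
```

Because the weight grows with the squared grade distance, confusing grade 0 with grade 4 is penalized far more heavily than confusing adjacent grades, which is why the metric suits ordinal tasks such as KL grading.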

ClinNet: Evidential Ordinal Regression with Bilateral Asymmetry and Prototype Memory for Knee Osteoarthritis Grading

Jan 24, 2026

Abstract:Knee osteoarthritis (KOA) grading based on radiographic images is a critical yet challenging task due to subtle inter-grade differences, annotation uncertainty, and the inherently ordinal nature of disease progression. Conventional deep learning approaches typically formulate this problem as deterministic multi-class classification, ignoring both the continuous progression of degeneration and the uncertainty in expert annotations. In this work, we propose ClinNet, a novel trustworthy framework that addresses KOA grading as an evidential ordinal regression problem. The proposed method integrates three key components: (1) a Bilateral Asymmetry Encoder (BAE) that explicitly models medial-lateral structural discrepancies; (2) a Diagnostic Memory Bank that maintains class-wise prototypes to stabilize feature representations; and (3) an Evidential Ordinal Head based on the Normal-Inverse-Gamma (NIG) distribution to jointly estimate continuous KL grades and epistemic uncertainty. Extensive experiments demonstrate that ClinNet achieves a Quadratic Weighted Kappa of 0.892 and Accuracy of 0.768, statistically outperforming state-of-the-art baselines (p < 0.001). Crucially, we demonstrate that the model's uncertainty estimates successfully flag out-of-distribution samples and potential misdiagnoses, paving the way for safe clinical deployment.
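To illustrate how a NIG head can yield both a continuous grade and uncertainty estimates, the sketch below uses the standard deep evidential regression formulation, in which the head outputs four parameters (gamma, nu, alpha, beta). This is an assumption about the general technique, not a description of ClinNet's actual head; the function names and the softplus parameter constraints are illustrative.

```python
import math


def softplus(x):
    """Numerically stable softplus, used to keep parameters positive."""
    return math.log1p(math.exp(-abs(x))) + max(x, 0.0)


def nig_from_raw(raw_gamma, raw_nu, raw_alpha, raw_beta):
    """Map four unconstrained network outputs to valid NIG parameters.

    Assumption: softplus constraints with alpha > 1, a common but not unique choice.
    """
    gamma = raw_gamma                 # predicted mean (continuous KL grade)
    nu = softplus(raw_nu)             # nu > 0
    alpha = softplus(raw_alpha) + 1.0  # alpha > 1 so the variances below are finite
    beta = softplus(raw_beta)         # beta > 0
    return gamma, nu, alpha, beta


def nig_summary(gamma, nu, alpha, beta):
    """Return (prediction, aleatoric variance, epistemic variance)."""
    prediction = gamma                         # E[mu]
    aleatoric = beta / (alpha - 1.0)           # E[sigma^2]
    epistemic = beta / (nu * (alpha - 1.0))    # Var[mu]
    return prediction, aleatoric, epistemic


if __name__ == "__main__":
    params = nig_from_raw(2.3, 0.5, 1.0, -0.2)
    print(nig_summary(*params))
```

A small `nu` means little evidence supports the predicted mean, so the epistemic term grows; thresholding that term is one plausible way to flag samples for manual review.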

Codebook Design for Limited Feedback in Near-Field XL-MIMO Systems

Jan 15, 2026

Abstract:In this paper, we study efficient codebook design for limited feedback in extremely large-scale multiple-input multiple-output (XL-MIMO) frequency division duplexing (FDD) systems. Existing codebook designs for XL-MIMO, such as the polar-domain codebook, do not adequately account for the distribution of user locations in practice and therefore incur excessive feedback overhead. To address this issue, we propose a novel and efficient feedback codebook tailored to the user distribution. We first consider a typical scenario where users are uniformly distributed within a specific polar region, based on which a sum-rate maximization problem is formulated to jointly optimize the angle-range samples and the bit allocation between angle and range feedback. This problem is challenging to solve due to the lack of a closed-form expression for the received power in terms of the angle and range samples. By leveraging a Voronoi partitioning approach, we show that uniform angle sampling is optimal for received power maximization. For the more challenging range sampling design, we obtain a tight lower bound on the received power and show that geometric sampling, where the ratio between adjacent samples is constant, maximizes the lower bound and thus serves as a high-quality suboptimal solution. We then extend the proposed framework to accommodate more general non-uniform user distributions via an alternating sampling method. Furthermore, theoretical analysis reveals that as the array size increases, the optimal allocation of feedback bits increasingly favors range samples at the expense of angle samples. Finally, numerical results validate the superior rate performance and robustness of the proposed codebook design under various system setups, achieving significant gains over benchmark schemes, including the widely used polar-domain codebook.
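The two sampling rules named in the abstract can be sketched as follows. This is an illustration under stated assumptions rather than the paper's construction: the region bounds, the placement of angle samples at cell midpoints, the inclusion of both range endpoints, and the function names are all hypothetical.

```python
def uniform_angle_samples(angle_min, angle_max, num_samples):
    """Equally spaced angle samples, placed at the midpoints of equal-width cells."""
    step = (angle_max - angle_min) / num_samples
    return [angle_min + (k + 0.5) * step for k in range(num_samples)]


def geometric_range_samples(range_min, range_max, num_samples):
    """Range samples whose adjacent ratio is constant (geometric sampling)."""
    if num_samples == 1:
        # Single sample: use the geometric mean of the two bounds.
        return [(range_min * range_max) ** 0.5]
    ratio = (range_max / range_min) ** (1.0 / (num_samples - 1))
    return [range_min * ratio ** k for k in range(num_samples)]


def build_codebook(angle_bits, range_bits, angle_bounds, range_bounds):
    """Pair every angle sample with every range sample.

    The codebook has 2**(angle_bits + range_bits) entries, so the split of the
    feedback bits controls the resolution in each dimension.
    """
    angles = uniform_angle_samples(*angle_bounds, 2 ** angle_bits)
    ranges = geometric_range_samples(*range_bounds, 2 ** range_bits)
    return [(a, r) for a in angles for r in ranges]


if __name__ == "__main__":
    # Hypothetical region: spatial angle in [-1, 1], range in [5 m, 80 m].
    codebook = build_codebook(3, 2, (-1.0, 1.0), (5.0, 80.0))
    print(len(codebook))
    print(geometric_range_samples(5.0, 80.0, 4))
```

Shifting one bit from `angle_bits` to `range_bits` keeps the feedback budget fixed while doubling the range resolution, which is the trade-off the abstract says tilts toward range as the array grows.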

Let It Flow: Agentic Crafting on Rock and Roll, Building the ROME Model within an Open Agentic Learning Ecosystem

Dec 31, 2025

Abstract:Agentic crafting requires LLMs to operate in real-world environments over multiple turns by taking actions, observing outcomes, and iteratively refining artifacts. Despite its importance, the open-source community lacks a principled, end-to-end ecosystem to streamline agent development. We introduce the Agentic Learning Ecosystem (ALE), a foundational infrastructure that optimizes the production pipeline for agent LLMs. ALE consists of three components: ROLL, a post-training framework for weight optimization; ROCK, a sandbox environment manager for trajectory generation; and iFlow CLI, an agent framework for efficient context engineering. We release ROME (ROME is Obviously an Agentic Model), an open-source agent grounded by ALE and trained on over one million trajectories. Our approach includes data composition protocols for synthesizing complex behaviors and a novel policy optimization algorithm, Interaction-based Policy Alignment (IPA), which assigns credit over semantic interaction chunks rather than individual tokens to improve long-horizon training stability. Empirically, we evaluate ROME within a structured setting and introduce Terminal Bench Pro, a benchmark with improved scale and contamination control. ROME demonstrates strong performance across benchmarks like SWE-bench Verified and Terminal Bench, proving the effectiveness of the ALE infrastructure.

Seedance 1.5 pro: A Native Audio-Visual Joint Generation Foundation Model

Dec 23, 2025

Abstract:Recent strides in video generation have paved the way for unified audio-visual generation. In this work, we present Seedance 1.5 pro, a foundational model engineered specifically for native, joint audio-video generation. Leveraging a dual-branch Diffusion Transformer architecture, the model integrates a cross-modal joint module with a specialized multi-stage data pipeline, achieving exceptional audio-visual synchronization and superior generation quality. To ensure practical utility, we implement meticulous post-training optimizations, including Supervised Fine-Tuning (SFT) on high-quality datasets and Reinforcement Learning from Human Feedback (RLHF) with multi-dimensional reward models. Furthermore, we introduce an acceleration framework that boosts inference speed by over 10X. Seedance 1.5 pro distinguishes itself through precise multilingual and dialect lip-syncing, dynamic cinematic camera control, and enhanced narrative coherence, positioning it as a robust engine for professional-grade content creation. Seedance 1.5 pro is now accessible on Volcano Engine at https://console.volcengine.com/ark/region:ark+cn-beijing/experience/vision?type=GenVideo.

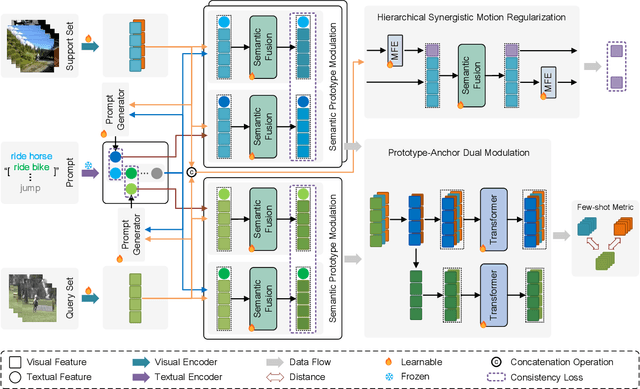

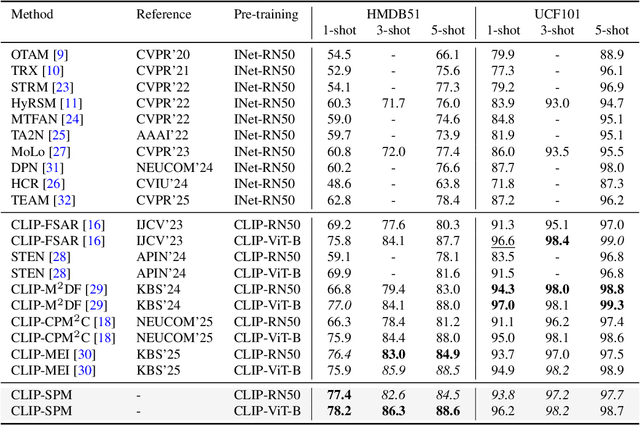

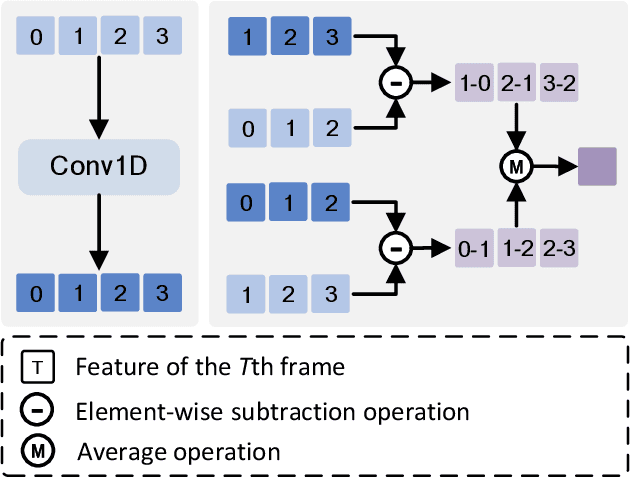

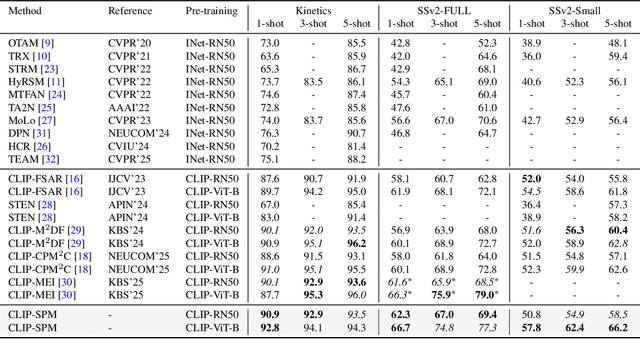

Distinguishing Visually Similar Actions: Prompt-Guided Semantic Prototype Modulation for Few-Shot Action Recognition

Dec 22, 2025

Abstract:Few-shot action recognition aims to enable models to quickly learn new action categories from limited labeled samples, addressing the challenge of data scarcity in real-world applications. Current research primarily addresses three core challenges: (1) temporal modeling, where models are prone to interference from irrelevant static background information and struggle to capture the essence of dynamic action features; (2) visual similarity, where categories with subtle visual differences are difficult to distinguish; and (3) the modality gap between visual-textual support prototypes and visual-only queries, which complicates alignment within a shared embedding space. To address these challenges, this paper proposes a CLIP-SPM framework, which includes three components: (1) the Hierarchical Synergistic Motion Refinement (HSMR) module, which aligns deep and shallow motion features to improve temporal modeling by reducing static background interference; (2) the Semantic Prototype Modulation (SPM) strategy, which generates query-relevant text prompts to bridge the modality gap and integrates them with visual features, enhancing the discriminability between similar actions; and (3) the Prototype-Anchor Dual Modulation (PADM) method, which refines support prototypes and aligns query features with a global semantic anchor, improving consistency across support and query samples. Comprehensive experiments across standard benchmarks, including Kinetics, SSv2-Full, SSv2-Small, UCF101, and HMDB51, demonstrate that our CLIP-SPM achieves competitive performance under 1-shot, 3-shot, and 5-shot settings. Extensive ablation studies and visual analyses further validate the effectiveness of each component and its contributions to addressing the core challenges. The source code and models are publicly available at GitHub.

Context-Aware Network Based on Multi-scale Spatio-temporal Attention for Action Recognition in Videos

Dec 21, 2025

Abstract:Action recognition is a critical task in video understanding, requiring the comprehensive capture of spatio-temporal cues across various scales. However, existing methods often overlook the multi-granularity nature of actions. To address this limitation, we introduce the Context-Aware Network (CAN). CAN consists of two core modules: the Multi-scale Temporal Cue Module (MTCM) and the Group Spatial Cue Module (GSCM). MTCM effectively extracts temporal cues at multiple scales, capturing both fast-changing motion details and overall action flow. GSCM, on the other hand, extracts spatial cues at different scales by grouping feature maps and applying specialized extraction methods to each group. Experiments conducted on five benchmark datasets (Something-Something V1 and V2, Diving48, Kinetics-400, and UCF101) demonstrate the effectiveness of CAN. Our approach achieves competitive performance, outperforming most mainstream methods, with accuracies of 50.4% on Something-Something V1, 63.9% on Something-Something V2, 88.4% on Diving48, 74.9% on Kinetics-400, and 86.9% on UCF101. These results highlight the importance of capturing multi-scale spatio-temporal cues for robust action recognition.

Weak-to-Strong Generalization Enables Fully Automated De Novo Training of Multi-head Mask-RCNN Model for Segmenting Densely Overlapping Cell Nuclei in Multiplex Whole-slice Brain Images

Dec 12, 2025

Abstract:We present a weak-to-strong generalization methodology for fully automated training of a multi-head extension of the Mask-RCNN method with efficient channel attention for reliable segmentation of overlapping cell nuclei in multiplex cyclic immunofluorescent (IF) whole-slide images (WSI), and present evidence for pseudo-label correction and coverage expansion, the key phenomena underlying weak-to-strong generalization. This method can learn to segment de novo a new class of images from a new instrument and/or a new imaging protocol without the need for human annotations. We also present metrics for automated self-diagnosis of segmentation quality in production environments, where human visual proofreading of massive WSIs is unaffordable. Our method was benchmarked against five current widely used methods and showed a significant improvement. The code, sample WSIs, and high-resolution segmentation results are provided in open form for community adoption and adaptation.

Reinforcement Learning Optimization for Large-Scale Learning: An Efficient and User-Friendly Scaling Library

Jun 06, 2025

Abstract:We introduce ROLL, an efficient, scalable, and user-friendly library designed for Reinforcement Learning Optimization for Large-scale Learning. ROLL caters to three primary user groups: tech pioneers aiming for cost-effective, fault-tolerant large-scale training, developers requiring flexible control over training workflows, and researchers seeking agile experimentation. ROLL is built upon several key modules to serve these user groups effectively. First, a single-controller architecture combined with an abstraction of the parallel worker simplifies the development of the training pipeline. Second, the parallel strategy and data transfer modules enable efficient and scalable training. Third, the rollout scheduler offers fine-grained management of each sample's lifecycle during the rollout stage. Fourth, the environment worker and reward worker support rapid and flexible experimentation with agentic RL algorithms and reward designs. Finally, AutoDeviceMapping allows users to assign resources to different models flexibly across various stages.

Towards Intelligent Edge Sensing for ISCC Network: Joint Multi-Tier DNN Partitioning and Beamforming Design

Apr 30, 2025

Abstract:The combination of Integrated Sensing and Communication (ISAC) and Mobile Edge Computing (MEC) enables devices to simultaneously sense the environment and offload data to the base stations (BS) for intelligent processing, thereby reducing local computational burdens. However, transmitting raw sensing data from ISAC devices to the BS often incurs substantial fronthaul overhead and latency. This paper investigates a three-tier collaborative inference framework enabled by Integrated Sensing, Communication, and Computing (ISCC), where cloud servers, MEC servers, and ISAC devices cooperatively execute different segments of a pre-trained deep neural network (DNN) for intelligent sensing. By offloading intermediate DNN features, the proposed framework can significantly reduce fronthaul transmission load. Furthermore, multiple-input multiple-output (MIMO) technology is employed to enhance both sensing quality and offloading efficiency. To minimize the overall sensing task inference latency across all ISAC devices, we jointly optimize the DNN partitioning strategy, ISAC beamforming, and computational resource allocation at the MEC servers and devices, subject to sensing beampattern constraints. We also propose an efficient two-layer optimization algorithm. In the inner layer, we derive closed-form solutions for computational resource allocation using the Karush-Kuhn-Tucker conditions. Moreover, we design the ISAC beamforming vectors via an iterative method based on the majorization-minimization and weighted minimum mean square error techniques. In the outer layer, we develop a cross-entropy based probabilistic learning algorithm to determine an optimal DNN partitioning strategy. Simulation results demonstrate that the proposed framework substantially outperforms existing two-tier schemes in inference latency.
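The outer-layer idea of a cross-entropy based probabilistic search over discrete partitioning choices can be sketched generically as follows. Everything specific here is an assumption for illustration: one categorical partition index per device, a toy cost standing in for inference latency, and the hyperparameters and function names. In the paper's setting, the cost of a candidate would come from solving the inner-layer resource allocation and beamforming problems.

```python
import random


def cross_entropy_search(cost, num_vars, num_choices, iters=30, samples=200,
                         elite_frac=0.2, smooth=0.7, seed=0):
    """Minimize cost(x) over vectors x with x[v] in range(num_choices)."""
    rng = random.Random(seed)
    # Start from a uniform categorical distribution for every variable.
    probs = [[1.0 / num_choices] * num_choices for _ in range(num_vars)]
    best, best_cost = None, float("inf")
    n_elite = max(1, int(samples * elite_frac))
    for _ in range(iters):
        # Sample candidate partitioning vectors from the current distribution.
        population = [
            [rng.choices(range(num_choices), weights=p)[0] for p in probs]
            for _ in range(samples)
        ]
        population.sort(key=cost)
        elite = population[:n_elite]
        elite_cost = cost(elite[0])
        if elite_cost < best_cost:
            best, best_cost = list(elite[0]), elite_cost
        # Move each distribution toward the empirical frequencies of the elite set.
        for v in range(num_vars):
            for c in range(num_choices):
                freq = sum(1 for x in elite if x[v] == c) / n_elite
                probs[v][c] = smooth * freq + (1.0 - smooth) * probs[v][c]
    return best, best_cost


if __name__ == "__main__":
    # Toy stand-in for latency: distance from a hypothetical best cut per device.
    target = [2, 0, 3]

    def toy_latency(x):
        return sum(abs(a - b) for a, b in zip(x, target))

    print(cross_entropy_search(toy_latency, num_vars=3, num_choices=4))
```

The smoothing factor keeps every choice at a nonzero probability, which guards against the distribution collapsing onto a poor partition early in the search.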
