RobustMQ: Benchmarking Robustness of Quantized Models

Aug 04, 2023 · Yisong Xiao, Aishan Liu, Tianyuan Zhang, Haotong Qin, Jinyang Guo, Xianglong Liu

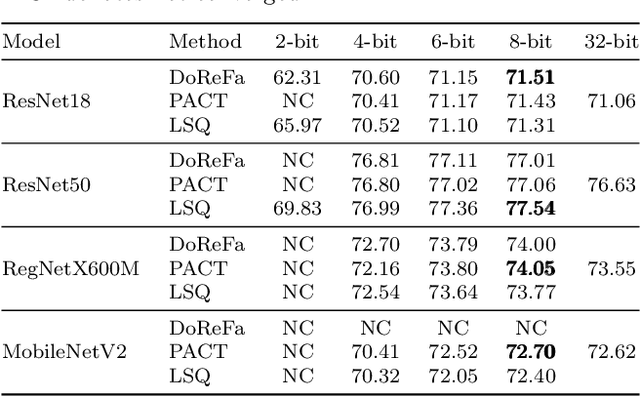

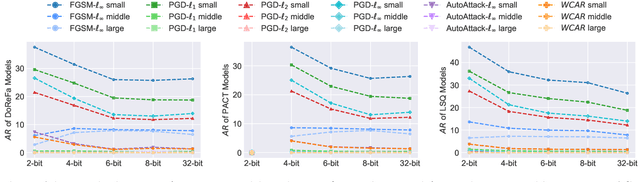

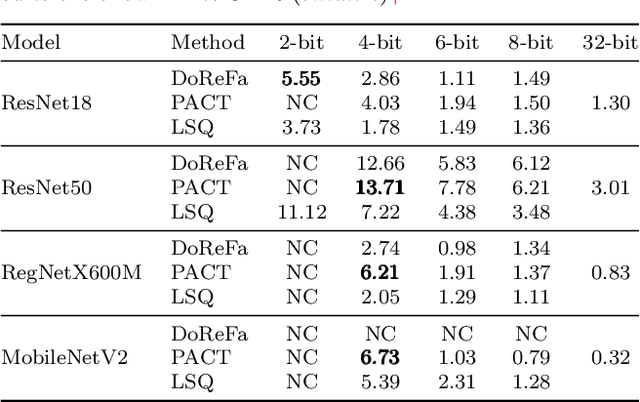

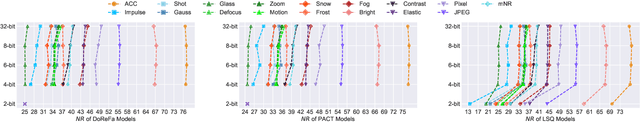

Quantization has emerged as an essential technique for deploying deep neural networks (DNNs) on devices with limited resources. However, quantized models exhibit vulnerabilities when exposed to various noises in real-world applications. Despite the importance of evaluating the impact of quantization on robustness, existing research on this topic is limited and often disregards established principles of robustness evaluation, resulting in incomplete and inconclusive findings. To address this gap, we thoroughly evaluated the robustness of quantized models against various noises (adversarial attacks, natural corruptions, and systematic noises) on ImageNet. The comprehensive evaluation results empirically provide valuable insights into the robustness of quantized models in various scenarios, for example: (1) quantized models exhibit higher adversarial robustness than their floating-point counterparts, but are more vulnerable to natural corruptions and systematic noises; (2) in general, increasing the quantization bit-width results in a decrease in adversarial robustness, an increase in natural robustness, and an increase in systematic robustness; (3) among corruption methods, *impulse noise* and *glass blur* are the most harmful to quantized models, while *brightness* has the least impact; (4) among systematic noises, *nearest neighbor interpolation* has the highest impact, while bilinear interpolation, cubic interpolation, and area interpolation are the three least harmful. Our research contributes to advancing the robust quantization of models and their deployment in real-world scenarios.
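To make concrete what "quantization bit-width" controls, here is a minimal sketch of a generic min-max uniform quantizer. It is an illustration only: the abstract does not say which quantization methods the benchmark uses, and the names `fake_quantize` and `mean_abs_error` are hypothetical. The sketch shows rounding error shrinking as the bit-width grows; it does not measure robustness.

```python
import random


def fake_quantize(values, bits):
    """Quantize to 2**bits uniform levels over [min, max], then dequantize.

    Assumption: a plain min-max affine quantizer, chosen for illustration.
    """
    lo, hi = min(values), max(values)
    levels = 2 ** bits - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    return [round((v - lo) / scale) * scale + lo for v in values]


def mean_abs_error(a, b):
    """Average absolute difference between two equal-length sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


random.seed(0)
# Synthetic stand-in for a weight tensor (not real model weights).
weights = [random.gauss(0.0, 1.0) for _ in range(1000)]

# Rounding error of the quantizer at several bit-widths.
errors = {
    bits: mean_abs_error(weights, fake_quantize(weights, bits))
    for bits in (2, 4, 8)
}

for bits, err in errors.items():
    print(f"{bits}-bit mean absolute error: {err:.5f}")
```

Lower bit-widths use a coarser grid, so each value moves further from its floating-point original.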

Isolation and Induction: Training Robust Deep Neural Networks against Model Stealing Attacks

Aug 03, 2023 · Jun Guo, Aishan Liu, Xingyu Zheng, Siyuan Liang, Yisong Xiao, Yichao Wu, Xianglong Liu

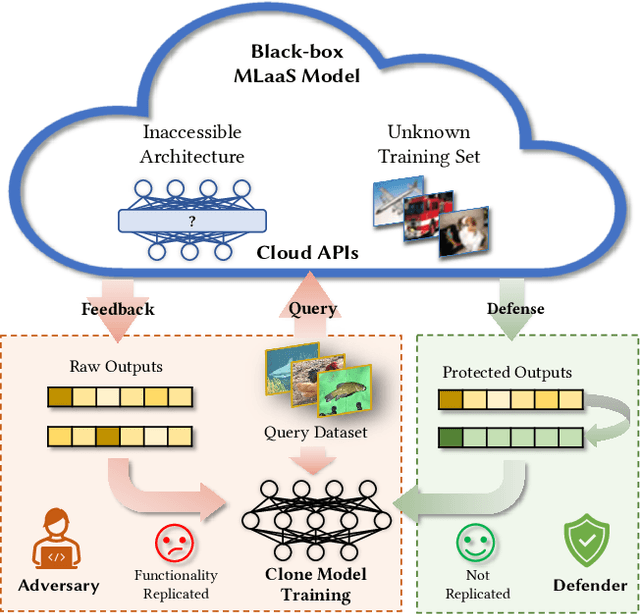

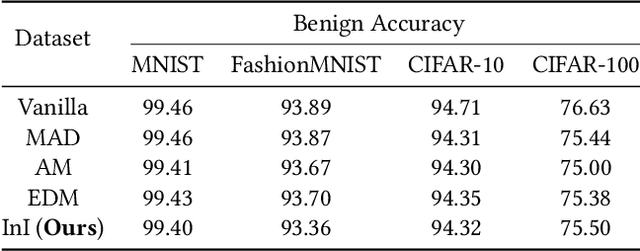

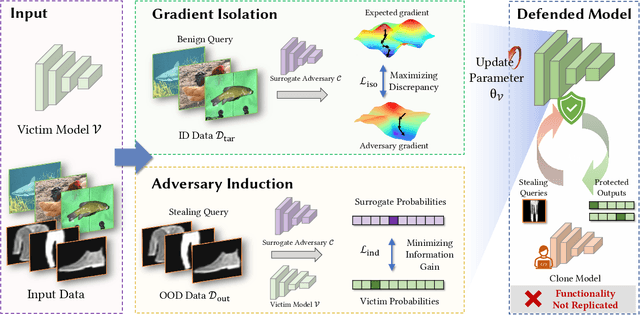

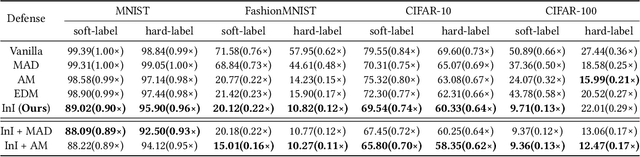

Despite the broad application of Machine Learning models as a Service (MLaaS), they are vulnerable to model stealing attacks. These attacks can replicate the model functionality by using the black-box query process without any prior knowledge of the target victim model. Existing stealing defenses add deceptive perturbations to the victim's posterior probabilities to mislead the attackers. However, these defenses suffer from high inference computational overhead and unfavorable trade-offs between benign accuracy and stealing robustness, which challenges the feasibility of deployed models in practice. To address these problems, this paper proposes Isolation and Induction (InI), a novel and effective training framework for model stealing defenses. Instead of deploying auxiliary defense modules that introduce redundant inference time, InI directly trains a defensive model by isolating the adversary's training gradient from the expected gradient, which can effectively reduce the inference computational cost. In contrast to adding perturbations over model predictions that harm the benign accuracy, we train models to produce uninformative outputs against stealing queries, which can induce the adversary to extract little useful knowledge from victim models with minimal impact on the benign performance. Extensive experiments on several visual classification datasets (e.g., MNIST and CIFAR10) demonstrate the superior robustness (up to 48% reduction in stealing accuracy) and speed (up to 25.4x faster) of our InI over other state-of-the-art methods. Our code can be found at https://github.com/DIG-Beihang/InI-Model-Stealing-Defense.

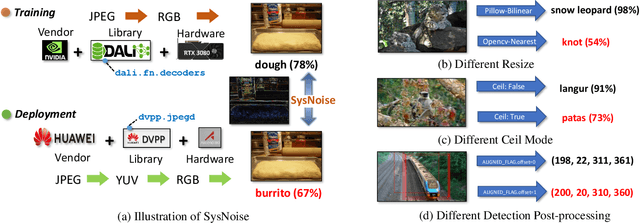

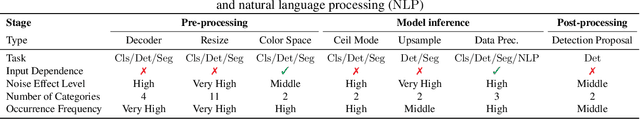

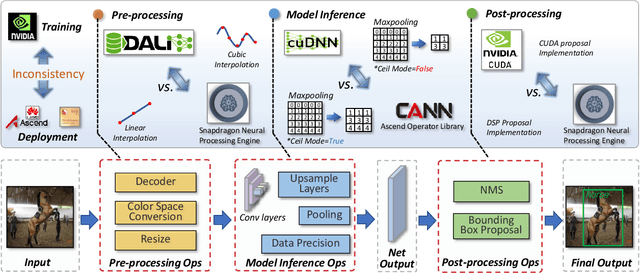

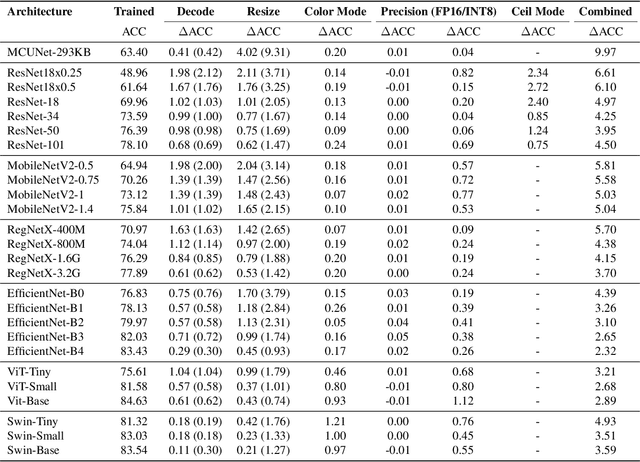

SysNoise: Exploring and Benchmarking Training-Deployment System Inconsistency

Jul 01, 2023 · Yan Wang, Yuhang Li, Ruihao Gong, Aishan Liu, Yanfei Wang, Jian Hu, Yongqiang Yao, Yunchen Zhang, Tianzi Xiao, Fengwei Yu, Xianglong Liu

Extensive studies have shown that deep learning models are vulnerable to adversarial and natural noises, yet little is known about model robustness to noises caused by different system implementations. In this paper, we introduce, for the first time, SysNoise, a frequently occurring but often overlooked noise in the deep learning training-deployment cycle. In particular, SysNoise happens when the source training system switches to a disparate target system in deployment, where various tiny system mismatches add up to a non-negligible difference. We first identify and classify SysNoise into three categories based on the inference stage; we then build a holistic benchmark to quantitatively measure the impact of SysNoise on 20+ models, covering image classification, object detection, instance segmentation, and natural language processing tasks. Our extensive experiments revealed that SysNoise could affect model robustness across different tasks, and that common mitigations like data augmentation and adversarial training show limited effects on it. Together, our findings open a new research topic, and we hope this work will raise research attention to deep learning deployment systems accounting for model performance. We have open-sourced the benchmark and framework at https://modeltc.github.io/systemnoise_web.

* Proceedings of Machine Learning and Systems. 2023 Mar 18

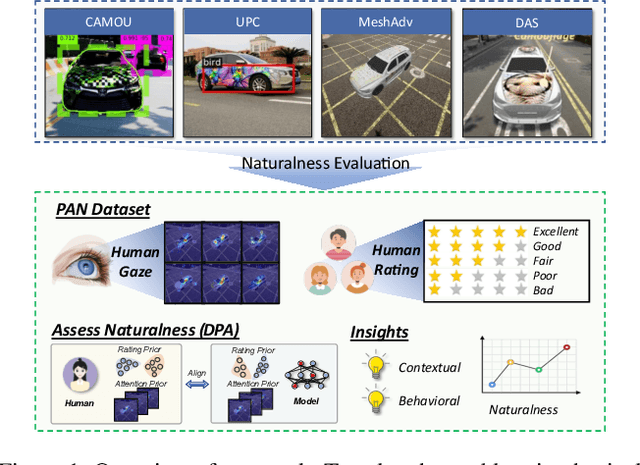

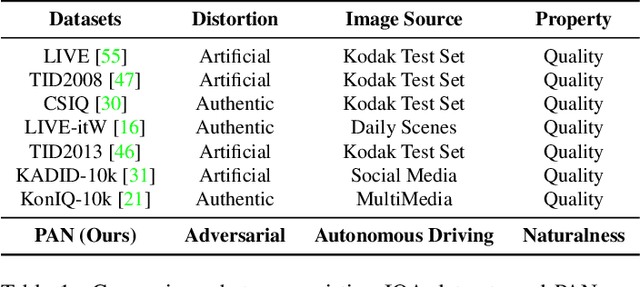

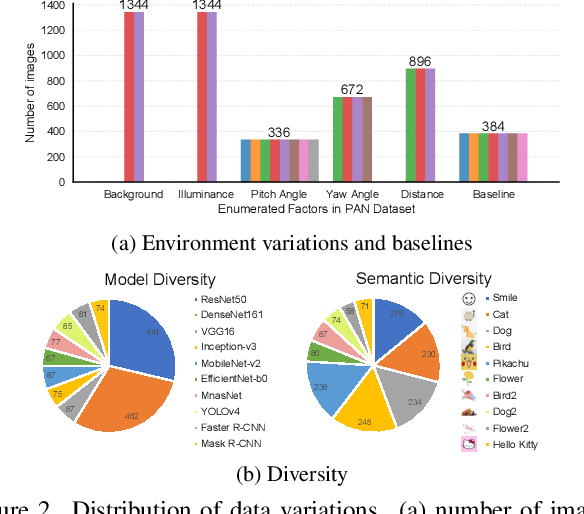

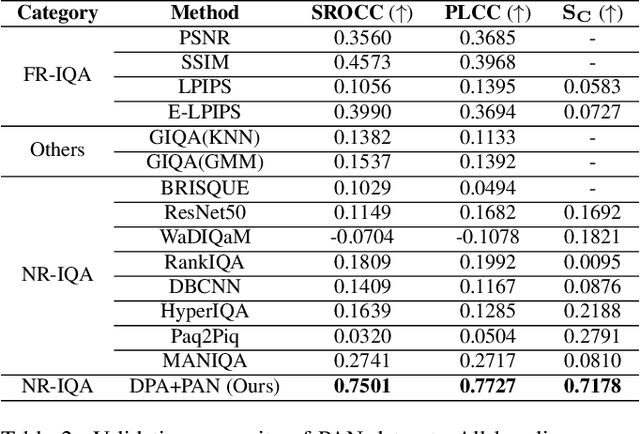

Towards Benchmarking and Assessing Visual Naturalness of Physical World Adversarial Attacks

May 22, 2023 · Simin Li, Shuing Zhang, Gujun Chen, Dong Wang, Pu Feng, Jiakai Wang, Aishan Liu, Xin Yi, Xianglong Liu

Physical world adversarial attack is a highly practical and threatening attack, which fools real world deep learning systems by generating conspicuous and maliciously crafted real world artifacts. In physical world attacks, evaluating naturalness is highly emphasized since humans can easily detect and remove unnatural attacks. However, current studies evaluate naturalness in a case-by-case fashion, which suffers from errors, bias, and inconsistencies. In this paper, we take the first step to benchmark and assess the visual naturalness of physical world attacks, taking the autonomous driving scenario as a first attempt. First, to benchmark attack naturalness, we contribute the first Physical Attack Naturalness (PAN) dataset with human rating and gaze. PAN verifies several insights for the first time: naturalness is (disparately) affected by contextual features (i.e., environmental and semantic variations) and correlates with behavioral features (i.e., gaze signal). Second, to automatically assess attack naturalness in a way that aligns with human ratings, we further introduce the Dual Prior Alignment (DPA) network, which aims to embed human knowledge into the model reasoning process. Specifically, DPA imitates human reasoning in naturalness assessment by rating prior alignment and mimics human gaze behavior by attentive prior alignment. We hope our work fosters research to improve and automatically assess the naturalness of physical world attacks. Our code and dataset can be found at https://github.com/zhangsn-19/PAN.

Latent Imitator: Generating Natural Individual Discriminatory Instances for Black-Box Fairness Testing

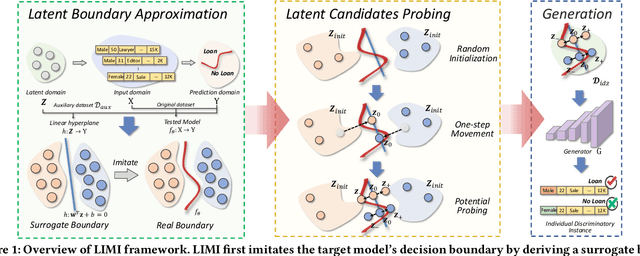

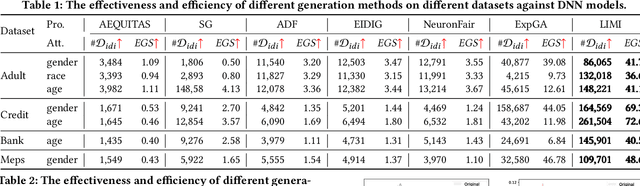

May 19, 2023 · Yisong Xiao, Aishan Liu, Tianlin Li, Xianglong Liu

Machine learning (ML) systems have achieved remarkable performance across a wide range of applications. However, they frequently exhibit unfair behaviors in sensitive application domains, raising severe fairness concerns. To evaluate and test fairness, engineers often generate individual discriminatory instances to expose unfair behaviors before model deployment. However, existing baselines ignore the naturalness of generation and produce instances that deviate from the real data distribution, which may fail to reveal the actual model fairness since these unnatural discriminatory instances are unlikely to appear in practice. To address the problem, this paper proposes a framework named Latent Imitator (LIMI) to generate more natural individual discriminatory instances with the help of a generative adversarial network (GAN), where we imitate the decision boundary of the target model in the semantic latent space of the GAN and further sample latent instances on it. Specifically, we first derive a surrogate linear boundary to coarsely approximate the decision boundary of the target model, which reflects the nature of the original data distribution. Subsequently, to obtain more natural instances, we move random latent vectors to the surrogate boundary with a one-step movement, and further conduct vector calculation to probe two potential discriminatory candidates that may lie closer to the real decision boundary. Extensive experiments on various datasets demonstrate that our LIMI outperforms other baselines by a large margin in effectiveness ($\times$9.42 instances), efficiency ($\times$8.71 speeds), and naturalness (+19.65%) on average. In addition, we empirically demonstrate that retraining on test samples generated by our approach can lead to improvements in both individual fairness (45.67% on $IF_r$ and 32.81% on $IF_o$) and group fairness (9.86% on $SPD$ and 28.38% on $AOD$).
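The geometric idea of moving a latent vector onto a linear surrogate boundary in one step, then probing two nearby candidates, can be sketched as follows. This is a hedged illustration, not the authors' implementation: the hyperplane `w·z + b = 0`, the probe distance `lam`, and the choice to probe along the boundary normal are assumptions, and the function names are hypothetical.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def project_to_boundary(z, w, b):
    """Move z onto the hyperplane w.z + b = 0 in a single step."""
    step = (dot(w, z) + b) / dot(w, w)
    return [zi - step * wi for zi, wi in zip(z, w)]


def probe_candidates(z, w, b, lam):
    """Return the projected point plus two probes, lam away on each side.

    Assumption: probes are taken along the unit normal of the surrogate
    boundary; the abstract only mentions "vector calculation".
    """
    p = project_to_boundary(z, w, b)
    norm = math.sqrt(dot(w, w))
    unit = [wi / norm for wi in w]
    plus = [pi + lam * ui for pi, ui in zip(p, unit)]
    minus = [pi - lam * ui for pi, ui in zip(p, unit)]
    return p, plus, minus


# Toy 2-D latent space with a hand-picked surrogate boundary.
w, b = [3.0, 4.0], -5.0
z = [2.0, 3.0]
p, plus, minus = probe_candidates(z, w, b, lam=0.5)

print("on boundary:", p, "score:", dot(w, p) + b)
print("probe +:", plus, "score:", dot(w, plus) + b)
print("probe -:", minus, "score:", dot(w, minus) + b)
```

In a full pipeline, each candidate would be decoded by the GAN generator and checked against the target model; that step is omitted here.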

Outlier Suppression+: Accurate quantization of large language models by equivalent and optimal shifting and scaling

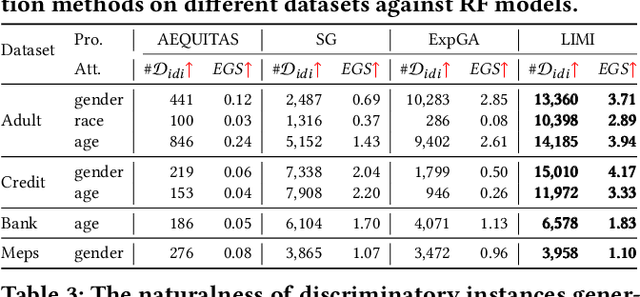

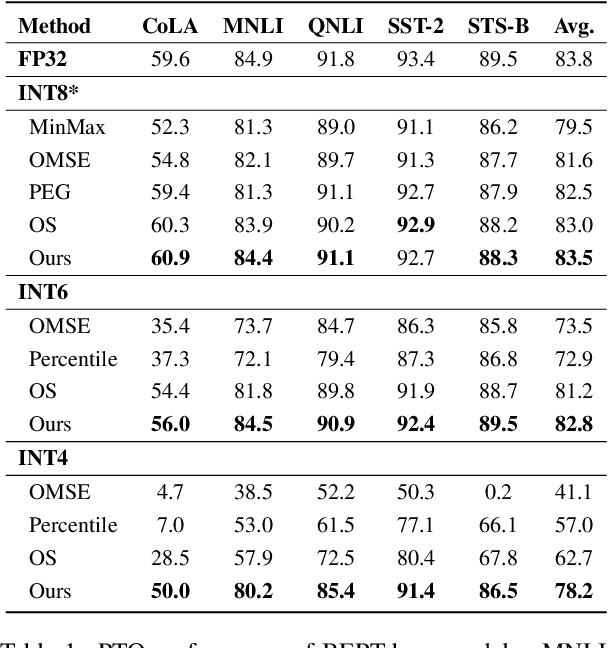

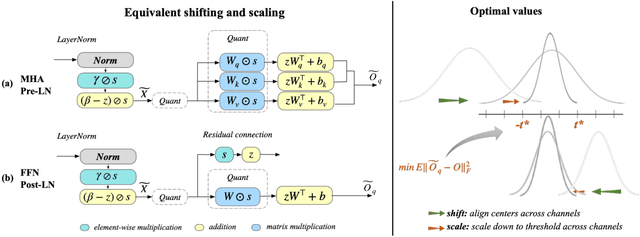

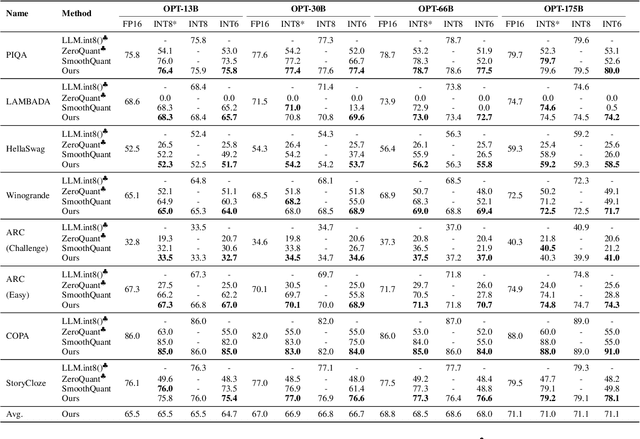

Apr 18, 2023 · Xiuying Wei, Yunchen Zhang, Yuhang Li, Xiangguo Zhang, Ruihao Gong, Jinyang Guo, Xianglong Liu

Quantization of transformer language models faces significant challenges due to the existence of detrimental outliers in activations. We observe that these outliers are asymmetric and concentrated in specific channels. To address this issue, we propose the Outlier Suppression+ framework. First, we introduce channel-wise shifting and scaling operations to eliminate asymmetric presentation and scale down problematic channels. We demonstrate that these operations can be seamlessly migrated into subsequent modules while maintaining equivalence. Second, we quantitatively analyze the optimal values for shifting and scaling, taking into account both the asymmetric property and quantization errors of weights in the next layer. Our lightweight framework can incur minimal performance degradation under static and standard post-training quantization settings. Comprehensive results across various tasks and models reveal that our approach achieves near-floating-point performance on both small models, such as BERT, and large language models (LLMs) including OPTs, BLOOM, and BLOOMZ at 8-bit and 6-bit settings. Furthermore, we establish a new state of the art for 4-bit BERT.
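The claim that channel-wise shifting and scaling can be migrated into the next module while maintaining equivalence follows from simple algebra, sketched below for a single linear layer. Assumptions are labeled: the activation is transformed as `(x - shift) / scale`, the shift and scale values are hand-picked for illustration (not the optimal values the paper derives), and `linear` and `migrate` are hypothetical helper names.

```python
def linear(x, W, b):
    """Compute y_j = sum_i x_i * W[i][j] + b_j for one token vector x."""
    return [
        sum(xi * W[i][j] for i, xi in enumerate(x)) + b[j]
        for j in range(len(b))
    ]


def migrate(W, b, shift, scale):
    """Fold the shift and scale into the next layer's weight and bias.

    Since x = x_tilde * scale + shift, we have
    x @ W + b = x_tilde @ (scale[:, None] * W) + (shift @ W + b).
    """
    W_new = [[scale[i] * W[i][j] for j in range(len(b))] for i in range(len(W))]
    b_new = linear(shift, W, b)
    return W_new, b_new


# Channel 1 carries a large, asymmetric outlier (illustrative values).
x = [0.5, -40.0, 1.0]
shift = [0.0, -38.0, 0.0]
scale = [1.0, 4.0, 1.0]

W = [[0.2, -0.1], [0.05, 0.3], [-0.4, 0.1]]
b = [0.1, -0.2]

# Shifted and scaled activation: the outlier channel is now small.
x_tilde = [(xi - zi) / si for xi, zi, si in zip(x, shift, scale)]
W_new, b_new = migrate(W, b, shift, scale)

y_ref = linear(x, W, b)
y_new = linear(x_tilde, W_new, b_new)
print("transformed activation:", x_tilde)
print("original output:", y_ref)
print("migrated output:", y_new)
```

The transformed activation has a much narrower range, which is easier to quantize, while the layer output is unchanged up to floating-point rounding.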

Towards Accurate Post-Training Quantization for Vision Transformer

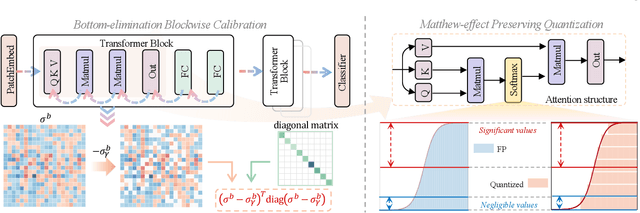

Mar 25, 2023 · Yifu Ding, Haotong Qin, Qinghua Yan, Zhenhua Chai, Junjie Liu, Xiaolin Wei, Xianglong Liu

Vision transformer emerges as a potential architecture for vision tasks. However, the intense computation and non-negligible delay hinder its application in the real world. Although post-training quantization is a widespread model compression technique, existing methods still cause severe performance drops. We find the main reasons are that (1) the existing calibration metric is inaccurate in measuring the quantization influence for extremely low-bit representation, and (2) the existing quantization paradigm is unfriendly to the power-law distribution of Softmax. Based on these observations, we propose a novel Accurate Post-training Quantization framework for Vision Transformer, namely APQ-ViT. We first present a unified Bottom-elimination Blockwise Calibration scheme to optimize the calibration metric to perceive the overall quantization disturbance in a blockwise manner and prioritize the crucial quantization errors that have more influence on the final output. Then, we design a Matthew-effect Preserving Quantization for Softmax to maintain the power-law character and keep the function of the attention mechanism. Comprehensive experiments on large-scale classification and detection datasets demonstrate that our APQ-ViT surpasses the existing post-training quantization methods by convincing margins, especially in lower bit-width settings (e.g., up to 5.17% improvement on average for classification and 24.43% for detection on W4A4). We also highlight that APQ-ViT enjoys versatility and works well on diverse transformer variants.

X-Adv: Physical Adversarial Object Attacks against X-ray Prohibited Item Detection

Feb 19, 2023 · Aishan Liu, Jun Guo, Jiakai Wang, Siyuan Liang, Renshuai Tao, Wenbo Zhou, Cong Liu, Xianglong Liu, Dacheng Tao

Adversarial attacks are valuable for evaluating the robustness of deep learning models. Existing attacks are primarily conducted on the visible light spectrum (e.g., pixel-wise texture perturbation). However, attacks targeting texture-free X-ray images remain underexplored, despite the widespread application of X-ray imaging in safety-critical scenarios such as the X-ray detection of prohibited items. In this paper, we take the first step toward the study of adversarial attacks targeted at X-ray prohibited item detection, and reveal the serious threats posed by such attacks in this safety-critical scenario. Specifically, we posit that successful physical adversarial attacks in this scenario should be specially designed to circumvent the challenges posed by color/texture fading and complex overlapping. To this end, we propose X-Adv to generate physically printable metals that act as an adversarial agent capable of deceiving X-ray detectors when placed in luggage. To resolve the issues associated with color/texture fading, we develop a differentiable converter that facilitates the generation of 3D-printable objects with adversarial shapes, using the gradients of a surrogate model rather than directly generating adversarial textures. To place the printed 3D adversarial objects in luggage with complex overlapped instances, we design a policy-based reinforcement learning strategy to find locations eliciting strong attack performance in worst-case scenarios whereby the prohibited items are heavily occluded by other items. To verify the effectiveness of the proposed X-Adv, we conduct extensive experiments in both the digital and the physical world (employing a commercial X-ray security inspection system for the latter case). Furthermore, we present the physical-world X-ray adversarial attack dataset XAD.

Attacking Cooperative Multi-Agent Reinforcement Learning by Adversarial Minority Influence

Feb 07, 2023 · Simin Li, Jun Guo, Jingqiao Xiu, Pu Feng, Xin Yu, Jiakai Wang, Aishan Liu, Wenjun Wu, Xianglong Liu

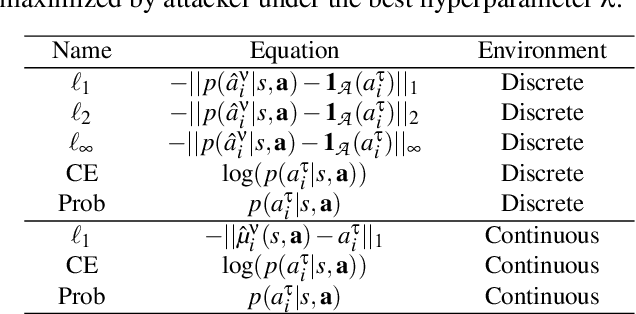

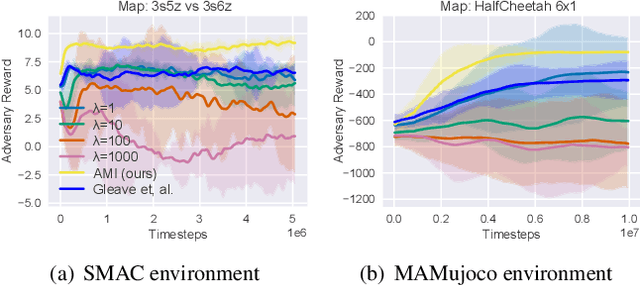

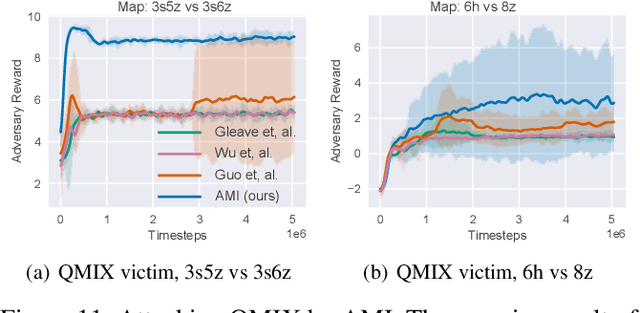

Cooperative multi-agent reinforcement learning (c-MARL) offers a general paradigm for a group of agents to achieve a shared goal by taking individual decisions, yet is found to be vulnerable to adversarial attacks. Though harmful, adversarial attacks also play a critical role in evaluating the robustness and finding blind spots of c-MARL algorithms. However, existing attacks are not sufficiently strong and practical, mainly because they ignore the complex influence between agents and the cooperative nature of victims in c-MARL. In this paper, we propose adversarial minority influence (AMI), the first practical attack against c-MARL by introducing an adversarial agent. AMI addresses the aforementioned problems by unilaterally influencing other cooperative victims toward a targeted worst-case cooperation. Technically, to maximally deviate victim policy under complex agent-wise influence, our unilateral attack characterizes and maximizes the influence from adversary to victims. This is done by adapting a unilateral agent-wise relation metric derived from mutual information, which filters out the detrimental influence from victims to adversary. To fool victims into a jointly worst-case failure, our targeted attack influences victims toward a long-term, cooperatively worst case by distracting each victim to a specific target. This target is learned by a reinforcement learning agent in a trial-and-error process. Extensive experiments in simulation environments, including discrete control (SMAC), continuous control (MAMujoco), and real-world robot swarm control, demonstrate the superiority of our AMI approach. Our code is available at https://anonymous.4open.science/r/AMI.
