ScaLA: Accelerating Adaptation of Pre-Trained Transformer-Based Language Models via Efficient Large-Batch Adversarial Noise

Jan 29, 2022 Minjia Zhang, Niranjan Uma Naresh, Yuxiong He

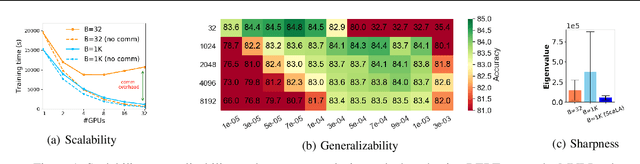

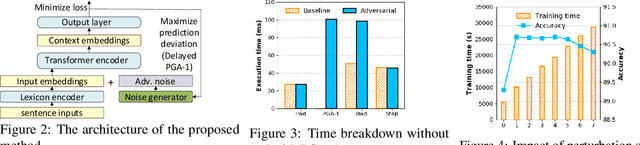

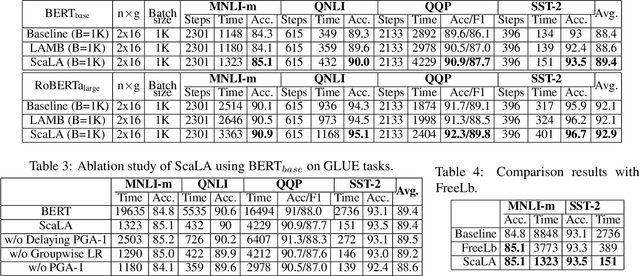

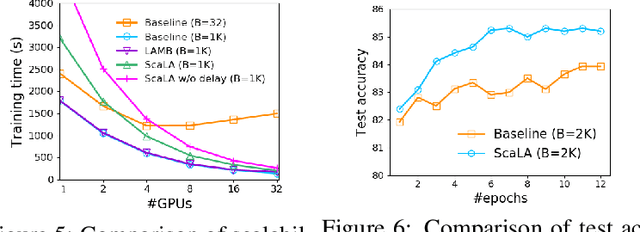

In recent years, large pre-trained Transformer-based language models have led to dramatic improvements in many natural language understanding tasks. To train these models with increasing sizes, many neural network practitioners attempt to increase the batch sizes in order to leverage multiple GPUs to improve training speed. However, increasing the batch size often makes the optimization more difficult, leading to slow convergence or poor generalization that can require orders of magnitude more training time to achieve the same model quality. In this paper, we explore the steepness of the loss landscape of large-batch optimization for adapting pre-trained Transformer-based language models to domain-specific tasks and find that it tends to be highly complex and irregular, posing challenges to generalization on downstream tasks. To tackle this challenge, we propose ScaLA, a novel and efficient method to accelerate the adaptation speed of pre-trained Transformer networks. Unlike prior methods, we take a sequential game-theoretic approach by adding lightweight adversarial noise into large-batch optimization, which significantly improves adaptation speed while preserving model generalization. Experimental results show that ScaLA attains 2.7x to 9.8x adaptation speedups over the baseline for GLUE on BERT-base and RoBERTa-large, while achieving comparable and sometimes higher accuracy than the state-of-the-art large-batch optimization methods. Finally, we also address the theoretical aspect of large-batch optimization with adversarial noise and provide a theoretical convergence rate analysis for ScaLA using techniques for analyzing non-convex saddle-point problems.

DeepSpeed-MoE: Advancing Mixture-of-Experts Inference and Training to Power Next-Generation AI Scale

Jan 14, 2022 Samyam Rajbhandari, Conglong Li, Zhewei Yao, Minjia Zhang, Reza Yazdani Aminabadi, Ammar Ahmad Awan, Jeff Rasley, Yuxiong He

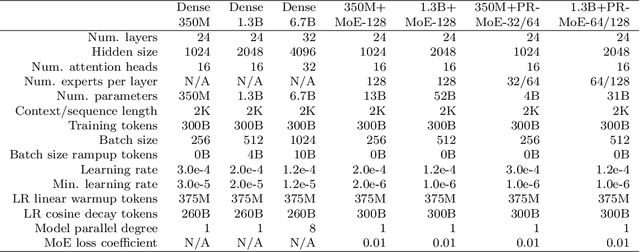

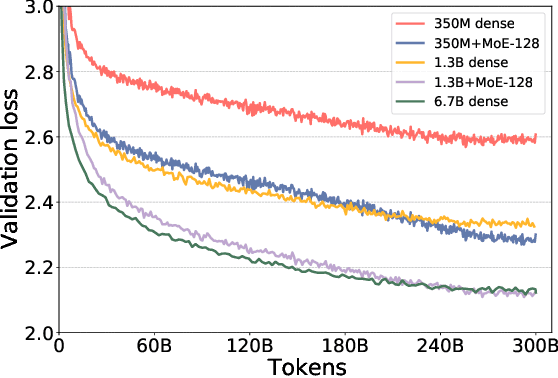

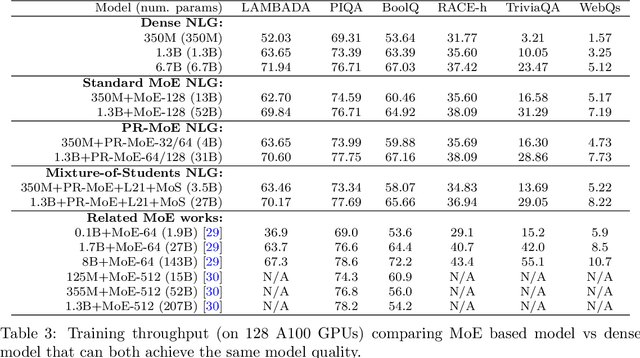

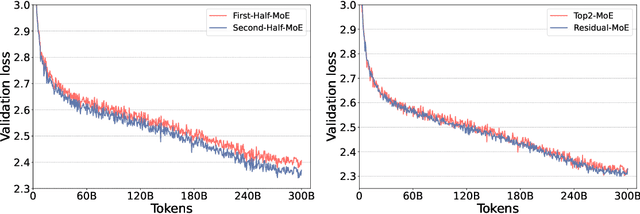

As the training of giant dense models hits the boundary on the availability and capability of the hardware resources today, Mixture-of-Experts (MoE) models have become one of the most promising model architectures due to their significant training cost reduction compared to a quality-equivalent dense model. Their training cost savings have been demonstrated from encoder-decoder models (prior works) to a 5x saving for autoregressive language models (this work along with parallel explorations). However, due to the much larger model size and unique architecture, how to provide fast MoE model inference remains challenging and unsolved, limiting its practical usage. To tackle this, we present DeepSpeed-MoE, an end-to-end MoE training and inference solution as part of the DeepSpeed library, including novel MoE architecture designs and model compression techniques that reduce MoE model size by up to 3.7x, and a highly optimized inference system that provides 7.3x better latency and cost compared to existing MoE inference solutions. DeepSpeed-MoE offers an unprecedented scale and efficiency to serve massive MoE models with up to 4.5x faster and 9x cheaper inference compared to quality-equivalent dense models. We hope our innovations and systems help open a promising path to new directions in the large model landscape, a shift from dense to sparse MoE models, where training and deploying higher-quality models with fewer resources becomes more widely possible.

A Survey of Large-Scale Deep Learning Serving System Optimization: Challenges and Opportunities

Nov 28, 2021 Fuxun Yu, Di Wang, Longfei Shangguan, Minjia Zhang, Xulong Tang, Chenchen Liu, Xiang Chen

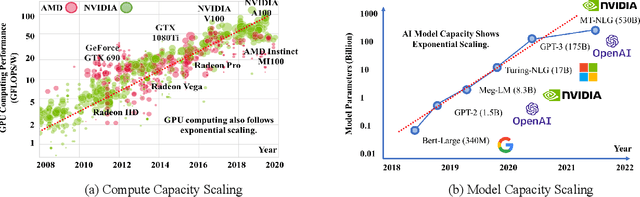

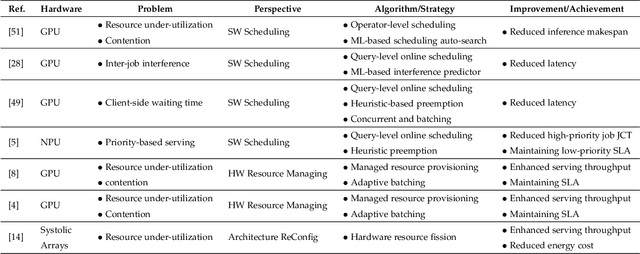

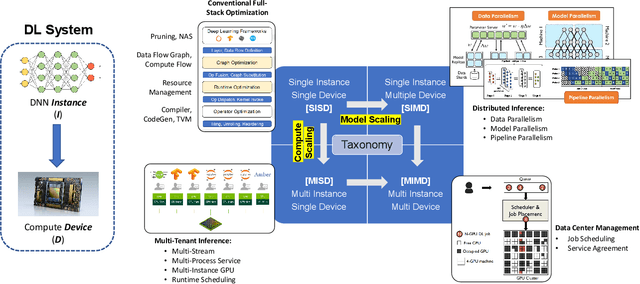

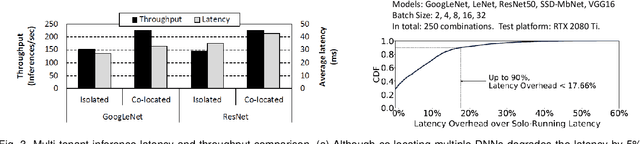

Deep Learning (DL) models have achieved superior performance in many application domains, including vision, language, medical, commercial ads, entertainment, etc. With this fast development, both DL applications and the underlying serving hardware have demonstrated strong scaling trends, i.e., Model Scaling and Compute Scaling. Examples include recent pre-trained models with hundreds of billions of parameters and ~TB-level memory consumption, as well as the newest GPU accelerators providing hundreds of TFLOPS. With both scaling trends, new problems and challenges emerge in DL inference serving systems, which are gradually trending toward Large-scale Deep learning Serving systems (LDS). This survey aims to summarize and categorize the emerging challenges and optimization opportunities for large-scale deep learning serving systems. By providing a novel taxonomy, summarizing the computing paradigms, and elaborating on recent technical advances, we hope that this survey can shed light on new optimization perspectives and motivate novel works in large-scale deep learning system optimization.

NxMTransformer: Semi-Structured Sparsification for Natural Language Understanding via ADMM

Oct 28, 2021 Connor Holmes, Minjia Zhang, Yuxiong He, Bo Wu

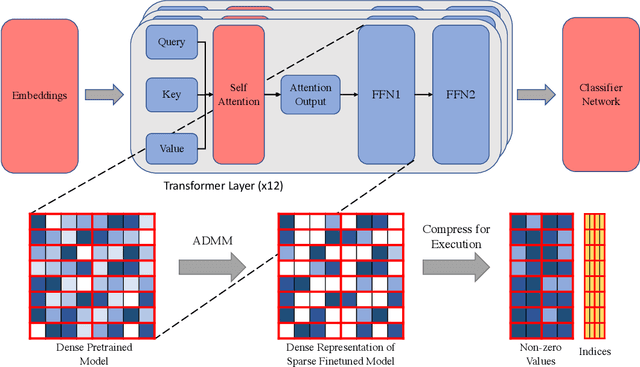

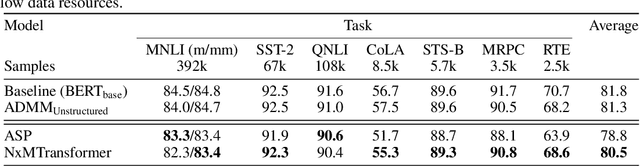

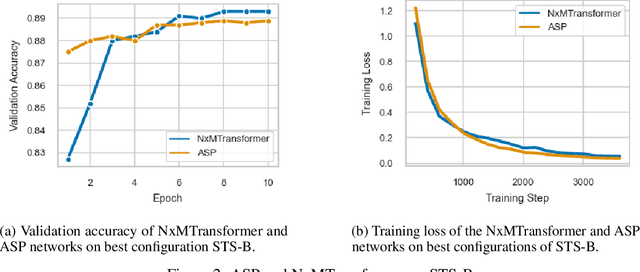

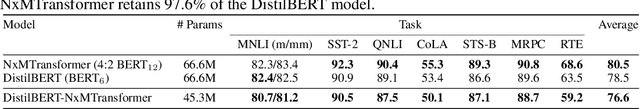

Natural Language Processing (NLP) has recently achieved success by using huge pre-trained Transformer networks. However, these models often contain hundreds of millions or even billions of parameters, bringing challenges to online deployment due to latency constraints. Recently, hardware manufacturers have introduced dedicated hardware for NxM sparsity to provide the flexibility of unstructured pruning with the runtime efficiency of structured approaches. NxM sparsity permits arbitrarily selecting M parameters to retain from a contiguous group of N in the dense representation. However, due to the extremely high complexity of pre-trained models, the standard sparse fine-tuning techniques often fail to generalize well on downstream tasks, which have limited data resources. To address such an issue in a principled manner, we introduce a new learning framework, called NxMTransformer, to induce NxM semi-structured sparsity on pretrained language models for natural language understanding to obtain better performance. In particular, we propose to formulate the NxM sparsity as a constrained optimization problem and use Alternating Direction Method of Multipliers (ADMM) to optimize the downstream tasks while taking the underlying hardware constraints into consideration. ADMM decomposes the NxM sparsification problem into two sub-problems that can be solved sequentially, generating sparsified Transformer networks that achieve high accuracy while being able to effectively execute on newly released hardware. We apply our approach to a wide range of NLP tasks, and our proposed method is able to achieve 1.7 points higher accuracy in GLUE score than current practices. Moreover, we perform detailed analysis on our approach and shed light on how ADMM affects fine-tuning accuracy for downstream tasks. Finally, we illustrate how NxMTransformer achieves performance improvement with knowledge distillation.
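The NxM constraint described in this abstract (retain M parameters out of every contiguous group of N) can be illustrated with a minimal sketch. The function names `nxm_mask` and `apply_mask` are hypothetical, and the selection rule used here is plain magnitude-based pruning, a simple stand-in chosen for illustration; it is not the ADMM-based procedure the paper proposes.

```python
from typing import List


def nxm_mask(weights: List[float], group_size: int, keep: int) -> List[int]:
    """Build a 0/1 mask that keeps `keep` entries per contiguous group.

    Assumption for illustration: the kept entries are the ones with the
    largest absolute value (magnitude pruning), not an ADMM solution.
    """
    if group_size <= 0 or len(weights) % group_size != 0:
        raise ValueError("weight count must be a positive multiple of group_size")
    if not 0 <= keep <= group_size:
        raise ValueError("keep must be between 0 and group_size")

    mask = [0] * len(weights)
    for start in range(0, len(weights), group_size):
        group = range(start, start + group_size)
        # Rank the indices of this group by magnitude, largest first.
        kept = sorted(group, key=lambda i: abs(weights[i]), reverse=True)[:keep]
        for i in kept:
            mask[i] = 1
    return mask


def apply_mask(weights: List[float], mask: List[int]) -> List[float]:
    """Zero out every weight whose mask entry is 0."""
    return [w * m for w, m in zip(weights, mask)]


# Two groups of four weights, keeping two per group.
weights = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
mask = nxm_mask(weights, group_size=4, keep=2)
sparse_weights = apply_mask(weights, mask)
```

Every group of four ends up with exactly two nonzero entries, which is the regular pattern that dedicated sparse hardware relies on, while the choice of which two entries survive remains free within each group.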

Carousel Memory: Rethinking the Design of Episodic Memory for Continual Learning

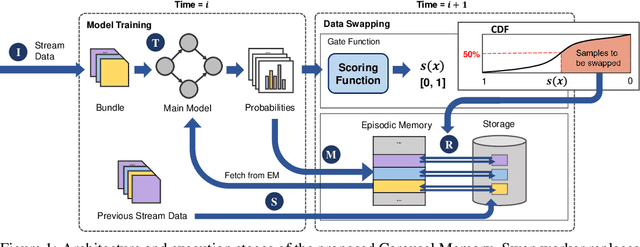

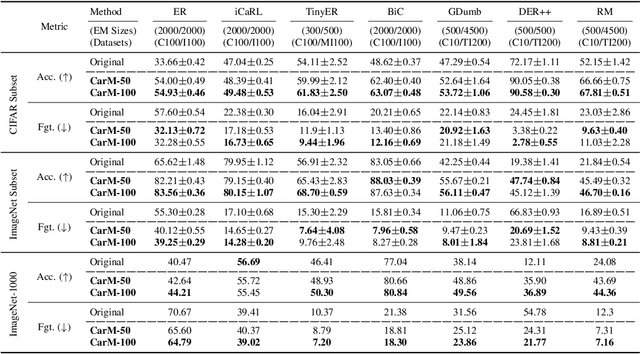

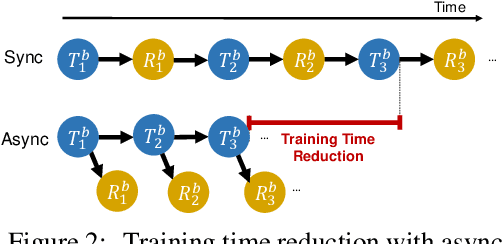

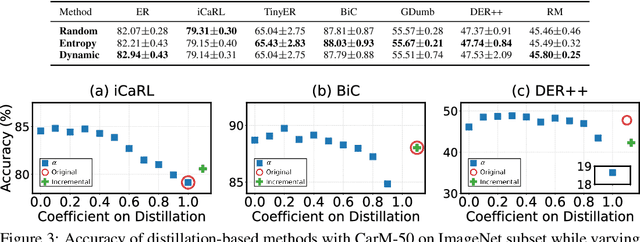

Oct 15, 2021 Soobee Lee, Minindu Weerakoon, Jonghyun Choi, Minjia Zhang, Di Wang, Myeongjae Jeon

Continual Learning (CL) is an emerging machine learning paradigm that aims to learn from a continuous stream of tasks without forgetting knowledge learned from the previous tasks. To avoid performance decrease caused by forgetting, prior studies exploit episodic memory (EM), which stores a subset of the past observed samples while learning from new non-i.i.d. data. Despite the promising results, since CL is often assumed to execute on mobile or IoT devices, the EM size is bounded by the small hardware memory capacity, which makes it infeasible to meet the accuracy requirements for real-world applications. Specifically, all prior CL methods discard samples that overflow from the EM and can never retrieve them for subsequent training steps, incurring loss of information that would exacerbate catastrophic forgetting. We explore a novel hierarchical EM management strategy to address the forgetting issue. In particular, in mobile and IoT devices, real-time data can be stored not just in high-speed RAM but in internal storage devices as well, which offer significantly larger capacity than RAM. Based on this insight, we propose to exploit the abundant storage to preserve past experiences and alleviate forgetting by allowing CL to efficiently migrate samples between memory and storage without interference from the slow access speed of the storage. We call it Carousel Memory (CarM). As CarM is complementary to existing CL methods, we conduct extensive evaluations of our method with seven popular CL methods and show that CarM significantly improves the accuracy of the methods across different settings by large margins in final average accuracy (up to 28.4%) while retaining the same training efficiency.
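The hierarchical idea in this abstract, keeping overflowed samples in a larger slow tier instead of discarding them, can be sketched roughly as follows. The class `TwoTierMemory`, its methods, and the random eviction and swap policies are all hypothetical choices made for illustration; the slow tier is an in-memory list standing in for on-device storage, and none of this is taken from the CarM implementation.

```python
import random
from typing import Any, List


class TwoTierMemory:
    """Hypothetical episodic memory with a small fast tier and a large slow tier."""

    def __init__(self, ram_capacity: int, seed: int = 0) -> None:
        self.ram_capacity = ram_capacity
        self.ram: List[Any] = []      # fast tier, sampled during training
        self.storage: List[Any] = []  # stand-in for on-device storage
        self.rng = random.Random(seed)

    def add(self, sample: Any) -> None:
        """Insert a sample; on overflow, move a victim to storage, never drop it."""
        if len(self.ram) >= self.ram_capacity:
            # Assumed policy: evict a uniformly random sample from the fast tier.
            victim = self.ram.pop(self.rng.randrange(len(self.ram)))
            self.storage.append(victim)
        self.ram.append(sample)

    def swap_in(self, count: int) -> int:
        """Exchange up to `count` fast-tier samples with stored ones."""
        count = min(count, len(self.ram), len(self.storage))
        for _ in range(count):
            i = self.rng.randrange(len(self.ram))
            j = self.rng.randrange(len(self.storage))
            self.ram[i], self.storage[j] = self.storage[j], self.ram[i]
        return count


memory = TwoTierMemory(ram_capacity=3)
for sample in range(10):
    memory.add(sample)
swapped = memory.swap_in(2)
```

The fast tier never exceeds its capacity, yet all ten samples remain reachable, which is the property that distinguishes this layout from an episodic memory that drops overflowed samples. A real system would also need asynchronous file I/O to hide storage latency, which this sketch does not attempt.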

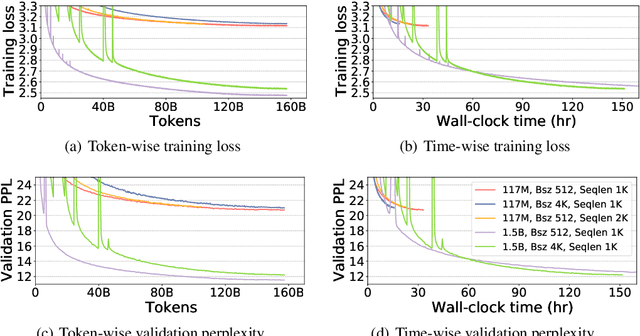

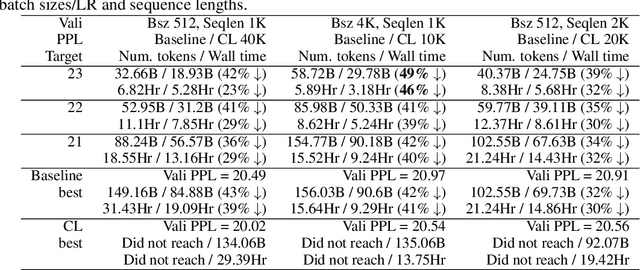

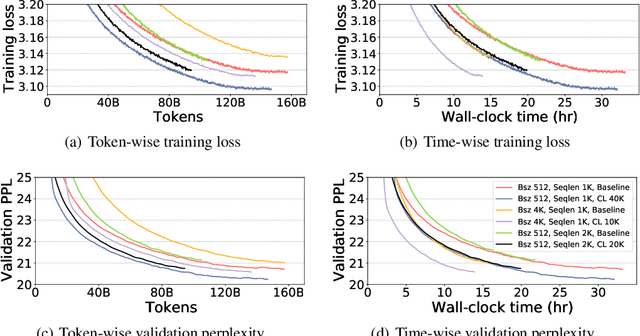

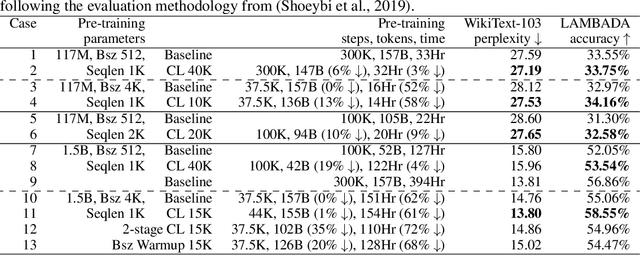

Curriculum Learning: A Regularization Method for Efficient and Stable Billion-Scale GPT Model Pre-Training

Aug 13, 2021 Conglong Li, Minjia Zhang, Yuxiong He

Recent works have demonstrated great success in training high-capacity autoregressive language models (GPT, GPT-2, GPT-3) on huge unlabeled text corpora for text generation. Despite showing great results, this raises two training efficiency challenges. First, training on large corpora can be extremely time-consuming, and how to present training samples to the model to improve the token-wise convergence speed remains a challenging and open question. Second, many of these large models have to be trained with hundreds or even thousands of processors using data parallelism with a very large batch size. Despite its better compute efficiency, it has been observed that large-batch training often runs into training instability issues or converges to solutions with bad generalization performance. To overcome these two challenges, we present a study of a curriculum learning based approach, which helps improve the pre-training convergence speed of autoregressive models. More importantly, we find that curriculum learning, as a regularization method, exerts a gradient variance reduction effect and enables training autoregressive models with much larger batch sizes and learning rates without training instability, further improving the training speed. Our evaluations demonstrate that curriculum learning enables training GPT-2 models (with up to 1.5B parameters) with 8x larger batch size and 4x larger learning rate, whereas the baseline approach struggles with training divergence. To achieve the same validation perplexity targets during pre-training, curriculum learning reduces the required number of tokens and wall clock time by up to 59% and 54%, respectively. To achieve the same or better zero-shot WikiText-103/LAMBADA evaluation results at the end of pre-training, curriculum learning reduces the required number of tokens and wall clock time by up to 13% and 61%, respectively.

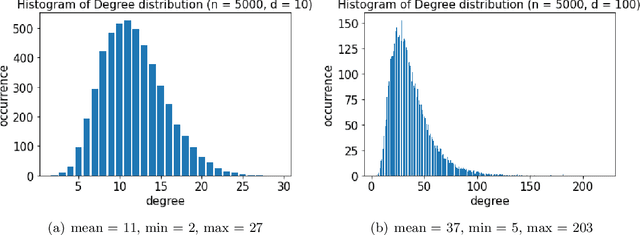

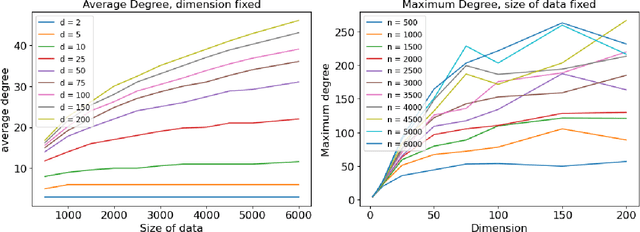

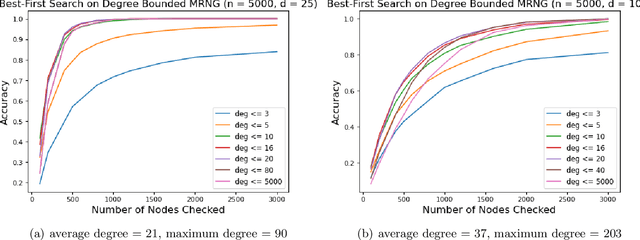

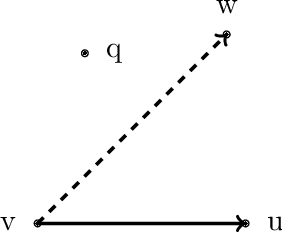

Understanding and Generalizing Monotonic Proximity Graphs for Approximate Nearest Neighbor Search

Jul 27, 2021 Dantong Zhu, Minjia Zhang

Graph-based algorithms have shown great empirical potential for the approximate nearest neighbor (ANN) search problem. Currently, graph-based ANN search algorithms are designed mainly using heuristics, whereas theoretical analysis of such algorithms is quite lacking. In this paper, we study a fundamental model of proximity graphs used in graph-based ANN search, called Monotonic Relative Neighborhood Graph (MRNG), from a theoretical perspective. We use mathematical proofs to explain why proximity graphs that are built based on MRNG tend to have good searching performance. We also run experiments on MRNG and graphs generalizing MRNG to obtain a deeper understanding of the model. Our experiments give guidance on how to approximate and generalize MRNG to build proximity graphs on a large scale. In addition, we discover and study a hidden structure of MRNG called conflicting nodes, and we give theoretical evidence of how conflicting nodes could be used to improve ANN search methods that are based on MRNG.
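For readers unfamiliar with graph-based ANN search, the routine that such proximity graphs are typically built to support is a greedy walk toward the query. The sketch below shows that generic walk on a tiny hand-made graph. The function `greedy_search`, the toy points, and the adjacency lists are hypothetical, and the graph is a simple chain rather than an MRNG, so this illustrates only the search step and not the graph construction studied in the paper.

```python
import math
from typing import Dict, List, Sequence

Point = Sequence[float]


def greedy_search(
    graph: Dict[int, List[int]],
    points: Dict[int, Point],
    query: Point,
    start: int,
) -> int:
    """Walk the graph, always moving to the neighbor closest to the query.

    Stops at the first node that has no neighbor strictly closer to the
    query than itself, and returns that node's id.
    """
    current = start
    current_dist = math.dist(points[current], query)
    while True:
        best, best_dist = current, current_dist
        for neighbor in graph[current]:
            d = math.dist(points[neighbor], query)
            if d < best_dist:
                best, best_dist = neighbor, d
        if best == current:
            return current  # local minimum: no neighbor improves the distance
        current, current_dist = best, best_dist


# Toy data: four points on a line, connected as a chain (not an MRNG).
points = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (2.0, 0.0), 3: (3.0, 0.0)}
graph = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}

nearest_a = greedy_search(graph, points, query=(2.9, 0.1), start=0)
nearest_b = greedy_search(graph, points, query=(1.1, 0.0), start=3)
```

On a general graph this walk can stop at a local minimum that is not the true nearest neighbor, which is why the structure of the graph matters so much for search quality.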

ZeRO-Offload: Democratizing Billion-Scale Model Training

Jan 18, 2021 Jie Ren, Samyam Rajbhandari, Reza Yazdani Aminabadi, Olatunji Ruwase, Shuangyan Yang, Minjia Zhang, Dong Li, Yuxiong He

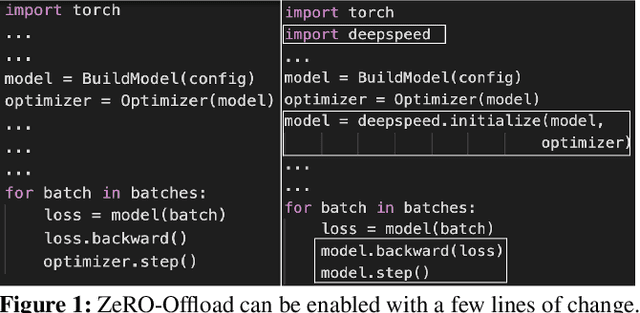

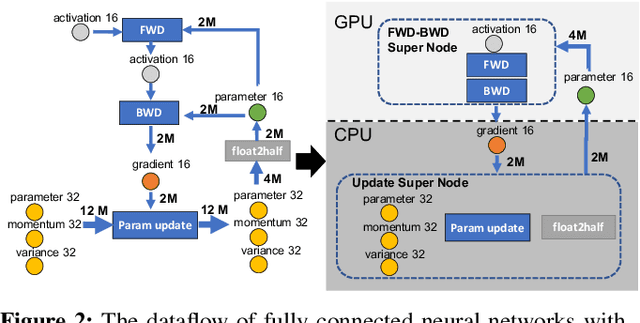

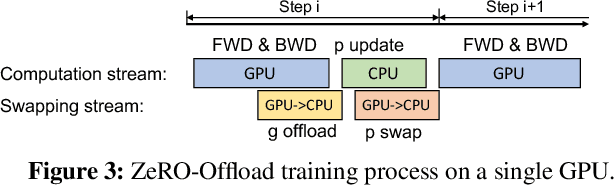

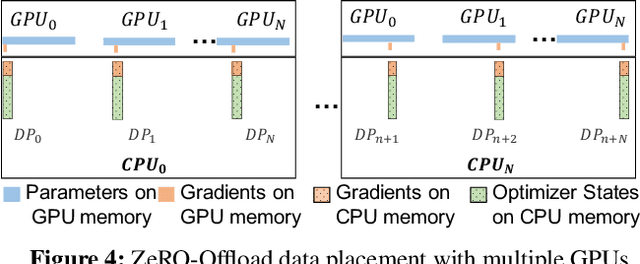

Large-scale model training has been a playground for a limited few, requiring complex model refactoring and access to prohibitively expensive GPU clusters. ZeRO-Offload changes the large model training landscape by making large model training accessible to nearly everyone. It can train models with over 13 billion parameters on a single GPU, a 10x increase in size compared to popular frameworks such as PyTorch, and it does so without requiring any model change from the data scientists or sacrificing computational efficiency. ZeRO-Offload enables large model training by offloading data and compute to CPU. To preserve compute efficiency, it is designed to minimize the data movement to/from GPU, and reduce CPU compute time while maximizing memory savings on GPU. As a result, ZeRO-Offload can achieve 40 TFlops/GPU on a single NVIDIA V100 GPU for a 10B-parameter model, compared to 30 TFlops using PyTorch alone for a 1.4B-parameter model, the largest that can be trained without running out of memory. ZeRO-Offload is also designed to scale on multiple GPUs when available, offering near-linear speedup on up to 128 GPUs. Additionally, it can work together with model parallelism to train models with over 70 billion parameters on a single DGX-2 box, a 4.5x increase in model size compared to using model parallelism alone. By combining compute and memory efficiency with ease of use, ZeRO-Offload democratizes large-scale model training, making it accessible even to data scientists with access to just a single GPU.

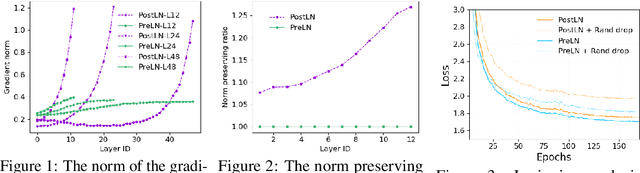

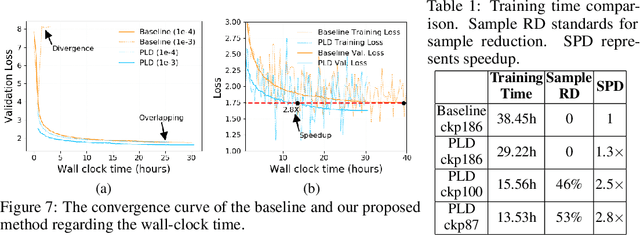

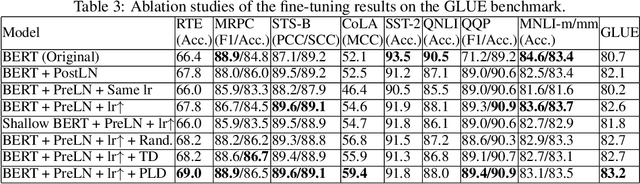

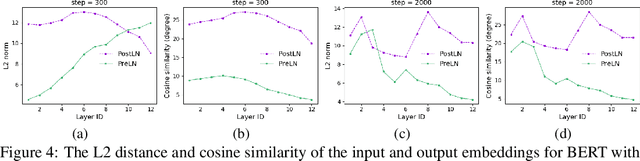

Accelerating Training of Transformer-Based Language Models with Progressive Layer Dropping

Oct 26, 2020 Minjia Zhang, Yuxiong He

Recently, Transformer-based language models have demonstrated remarkable performance across many NLP domains. However, the unsupervised pre-training step of these models suffers from unbearable overall computational expenses. Current methods for accelerating the pre-training either rely on massive parallelism with advanced hardware or are not applicable to language modeling. In this work, we propose a method based on progressive layer dropping that speeds up the training of Transformer-based language models, not at the cost of excessive hardware resources but through efficiency gains from model architecture changes and training techniques. Extensive experiments on BERT show that the proposed method achieves a 24% time reduction on average per sample and allows the pre-training to be 2.5 times faster than the baseline to get a similar accuracy on downstream tasks. While being faster, our pre-trained models are equipped with strong knowledge transferability, achieving comparable and sometimes higher GLUE scores than the baseline when pre-trained with the same number of samples.

LSTM-Sharp: An Adaptable, Energy-Efficient Hardware Accelerator for Long Short-Term Memory

Nov 04, 2019 Reza Yazdani, Olatunji Ruwase, Minjia Zhang, Yuxiong He, Jose-Maria Arnau, Antonio Gonzalez

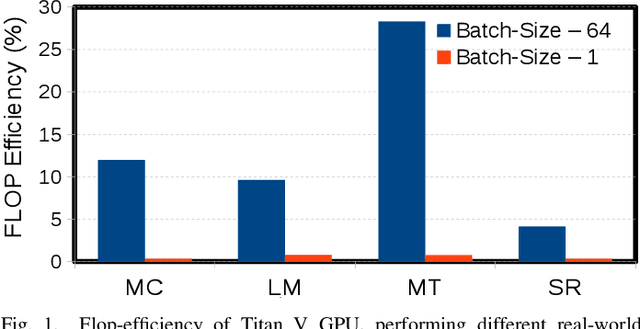

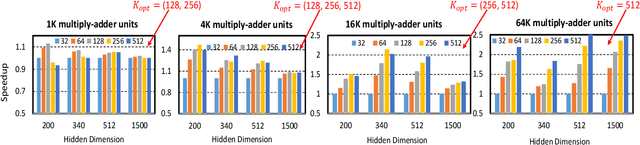

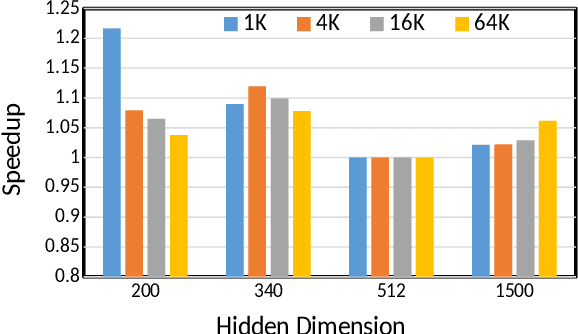

The effectiveness of LSTM neural networks for popular tasks such as Automatic Speech Recognition has fostered an increasing interest in LSTM inference acceleration. Due to the recurrent nature and data dependencies of LSTM computations, designing a customized architecture specifically tailored to its computation pattern is crucial for efficiency. Since LSTMs are used for a variety of tasks, generalizing this efficiency to diverse configurations, i.e., adaptiveness, is another key feature of these accelerators. In this work, we first show the problem of low resource utilization and adaptiveness for the state-of-the-art LSTM implementations on GPU, FPGA and ASIC architectures. To solve these issues, we propose an intelligent tile-based dispatching mechanism that efficiently handles the data dependencies and increases the adaptiveness of LSTM computation. To do so, we propose LSTM-Sharp as a hardware accelerator, which pipelines LSTM computation using an effective scheduling scheme to hide most of the dependent serialization. Furthermore, LSTM-Sharp employs a dynamically reconfigurable architecture to adapt to the model's characteristics. LSTM-Sharp achieves 1.5x, 2.86x, and 82x speedups on average over the state-of-the-art ASIC, FPGA, and GPU implementations respectively, for different LSTM models and resource budgets. Furthermore, we provide significant energy reduction with respect to the previous solutions, due to the low power dissipation of LSTM-Sharp (383 GFLOPs/Watt).
